From Manual to AI-Driven: Tmall’s Full-Process Automation Transformation Practices

AI-Powered Transformation in Tmall’s Testing Workflow

Article No. 118 (2025)

Estimated read time: 15 minutes

---

Tmall’s quality assurance engineers are using AI to transform the five core stages of testing:

> Requirement Analysis → Test Case Generation → Data Construction → Execution & Verification → Comparison & Validation

The goal is a testing workflow that is automated end to end, traceable, and manageable, driven by AI and natural-language instructions, so that efficiency rises and manual workload falls. Several months of practice have produced clear gains in test case generation, test data construction, and the execution of transaction-flow data.

This article shares the practical methods and results of integrating AI into the testing process.

---

01 — Revolution in Testing Systems

1.1 Challenges in Manual Testing

Traditional workflows rely on manual effort, leading to:

- Scattered processes

- Low efficiency and incomplete data coverage

- Poor reusability

- Subjective biases in test case design

- Time-consuming data construction prone to omissions

- Error-prone verification and slow reporting cycles

---

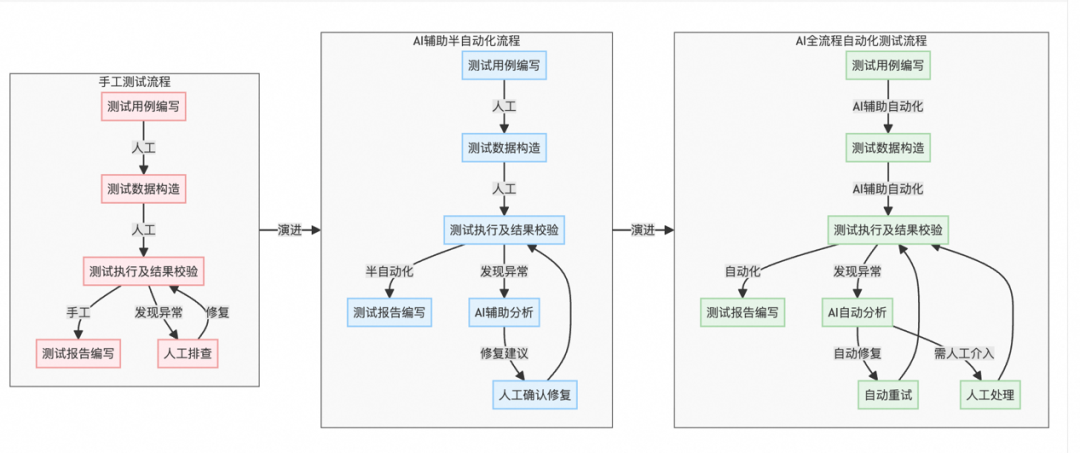

1.2 Manual → AI-Assisted → Full AI Automation

Phase 1 — AI-Assisted Semi-Automation

This phase focuses on data construction and verification:

- Data Construction: After humans define the core rules, AI-assisted generation tools quickly build and compare datasets.

- Verification: Automated scripts configured with preset account information run bulk comparisons.

- Reporting: Test reports are generated automatically, while test case design remains manual.

> This marks the transition from fully manual to human + AI collaboration.
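To make the "humans define core rules, tools build and compare datasets" step concrete, here is a minimal Python sketch. It assumes the rules can be written as a mapping from each field to the values that must be covered; the field names, values, and helper functions are hypothetical and not Tmall's actual tooling. Comparing two generated datasets exposes combinations that one of them omits.

```python
from itertools import product
from typing import Any

# Hypothetical core rules defined by a tester: each field lists the values
# the dataset must cover. Field names and values are illustrative only.
CORE_RULES: dict[str, list[Any]] = {
    "payment_method": ["alipay", "credit_card"],
    "coupon": [None, "FULL_100_MINUS_10"],
    "quantity": [1, 3],
}


def build_dataset(rules: dict[str, list[Any]]) -> list[dict[str, Any]]:
    """Expand the rules into one row per combination of field values."""
    fields = list(rules)
    return [dict(zip(fields, combo)) for combo in product(*(rules[f] for f in fields))]


def _row_key(row: dict[str, Any]) -> tuple:
    # Sort by field name so rows with the same content compare equal.
    return tuple(sorted(row.items()))


def diff_datasets(old: list[dict[str, Any]], new: list[dict[str, Any]]):
    """Return (rows only in old, rows only in new) to spot omissions."""
    old_keys = {_row_key(r) for r in old}
    new_keys = {_row_key(r) for r in new}
    only_old = [r for r in old if _row_key(r) not in new_keys]
    only_new = [r for r in new if _row_key(r) not in old_keys]
    return only_old, only_new


if __name__ == "__main__":
    full = build_dataset(CORE_RULES)  # 2 x 2 x 2 combinations
    partial = build_dataset({**CORE_RULES, "quantity": [1]})
    missing, extra = diff_datasets(full, partial)
    print(len(full), len(missing), len(extra))  # 8 4 0
```

A full Cartesian product grows quickly as fields are added; in practice a pairwise or risk-weighted selection would likely replace it, but the comparison step stays the same.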

---

Phase 2 — Full AI-Driven Testing

AI takes the lead in all stages:

- Test Case Design: AI analyzes requirement documents to produce coverage-oriented test cases. Humans fine-tune edge cases interactively.

- Data Construction: Large models generate matching test data using AI-created templates.

- Simple Requirement Automation: For simple requirements, natural-language tuning and model training enable end-to-end automation, with humans supervising only to review anomalies.
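The "AI-created templates" idea can be sketched as follows, assuming the model returns a JSON data template whose string values contain `${placeholder}` slots. The template content, placeholder names, and helper functions are hypothetical; only `json` and `string.Template` from the standard library are real APIs here.

```python
import json
from string import Template

# Assumption: a model has produced this JSON data template. The fields and
# placeholders are made-up examples, not a real Tmall schema.
MODEL_TEMPLATE_JSON = """
{
  "case_id": "TC-${case_no}",
  "item_id": "${item_id}",
  "buyer": "${buyer_account}",
  "quantity": 2
}
"""


def fill_template(template_json: str, values: dict[str, str]) -> dict:
    """Parse a JSON template and substitute placeholders in string fields.

    Template.substitute raises KeyError when a placeholder has no value,
    so incomplete data is caught before it reaches test execution.
    """
    template = json.loads(template_json)
    return {
        key: Template(val).substitute(values) if isinstance(val, str) else val
        for key, val in template.items()
    }


def build_records(template_json: str, rows: list[dict[str, str]]) -> list[dict]:
    """Generate one test-data record per row of placeholder values."""
    return [fill_template(template_json, row) for row in rows]
```

Failing loudly on a missing placeholder is a deliberate choice: a half-filled record would otherwise surface later as a confusing execution failure.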

---


1.4 Intelligent Integration & Continuous Optimization

Phase 3 builds on full AI-driven testing by integrating multiple engineering systems:

- AI-generated cases are synced to the test case management platform for collaboration and history tracking.

- Data factories, combined with AI orchestration, detect changes and adjust the testing scope accordingly.

- Knowledge assets grow continuously, so they can be reused and refined over time.
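A minimal sketch of change-driven scoping and case syncing, assuming each case records which modules it exercises. The `CaseRecord` type, the module names, and the in-memory dictionary standing in for the case management platform are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class CaseRecord:
    case_id: str
    modules: set[str]  # modules/APIs the case exercises (hypothetical labels)
    history: list[str] = field(default_factory=list)  # simple audit trail


def select_scope(cases: list[CaseRecord], changed_modules: set[str]) -> list[str]:
    """Return IDs of cases that touch at least one changed module."""
    return sorted(c.case_id for c in cases if c.modules & changed_modules)


def sync_case(repo: dict[str, CaseRecord], case: CaseRecord, note: str) -> None:
    """Upsert a case into an in-memory stand-in for the case management
    platform and append a history entry describing the change."""
    stored = repo.setdefault(case.case_id, case)
    stored.modules = case.modules
    stored.history.append(note)
```

Real change detection would probably derive `changed_modules` from code diffs or interface metadata; the set intersection above only shows how a detected change narrows the set of cases to rerun.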

---

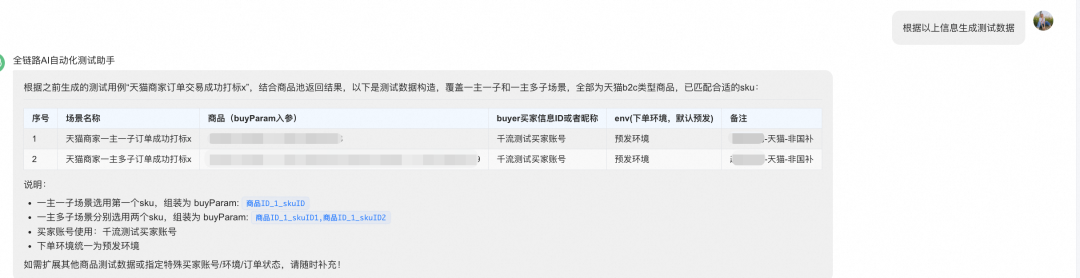

Practical Results in AI-Assisted Testing

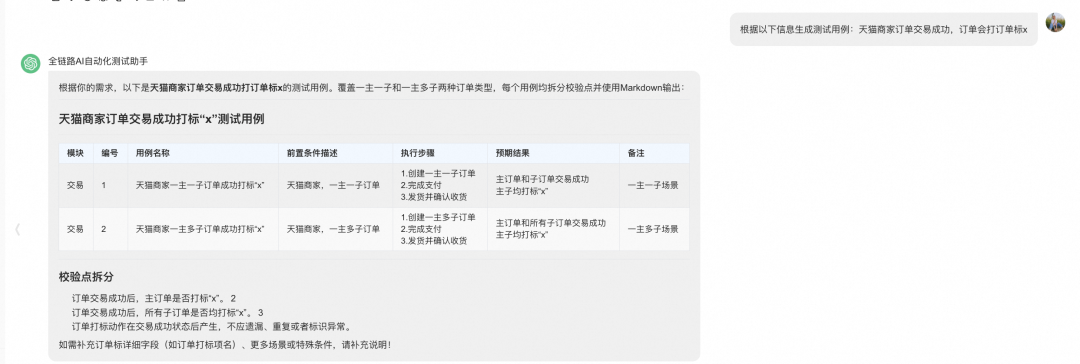

- Test Case Generation & Editing: AI assembles case sets and supports human–machine co-editing.

- Test Data Construction: AI looks up keywords on the data platform and runs batch data construction.

- Test Data Execution: AI orchestrates prompt-driven batch execution tasks.
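The keyword lookup and batch execution described above can be sketched like this. The tool catalog is a hypothetical in-memory stand-in for the data platform, and the batch runner uses the standard `concurrent.futures.ThreadPoolExecutor`; nothing here reflects Tmall's real platform API.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

# Hypothetical stand-in for the data platform: tool name -> description.
DATA_TOOLS = {
    "create_buyer": "create a buyer account with address",
    "create_item": "create a product listing in a store",
    "create_coupon": "issue a store coupon to a buyer",
}


def find_tools(keyword: str) -> list[str]:
    """Return tools whose name or description contains the keyword."""
    kw = keyword.lower()
    return sorted(
        name for name, desc in DATA_TOOLS.items() if kw in name or kw in desc.lower()
    )


def run_batch(
    task: Callable[[dict], dict], params: list[dict], workers: int = 4
) -> tuple[list[dict], list[tuple[dict, str]]]:
    """Run one task over many parameter sets concurrently.

    Results are collected in submission order. A failure keeps the input and
    the error message so it can be reviewed or retried without aborting the
    rest of the batch.
    """
    successes: list[dict] = []
    failures: list[tuple[dict, str]] = []
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [(p, pool.submit(task, p)) for p in params]
        for p, fut in futures:
            try:
                successes.append(fut.result())
            except Exception as exc:  # collect rather than stop the batch
                failures.append((p, str(exc)))
    return successes, failures
```

In a prompt-driven setup, a model would choose the tool (for example via `find_tools`) and produce the parameter list; the runner itself stays deterministic.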

---

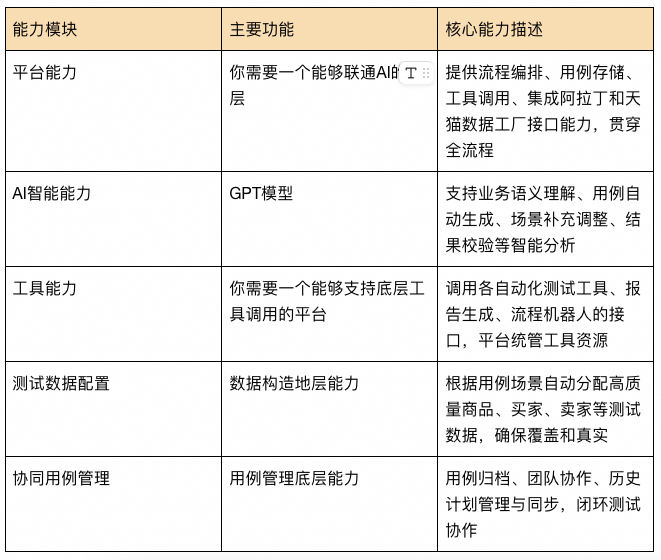

02 — Key Capabilities for AI End-to-End Testing

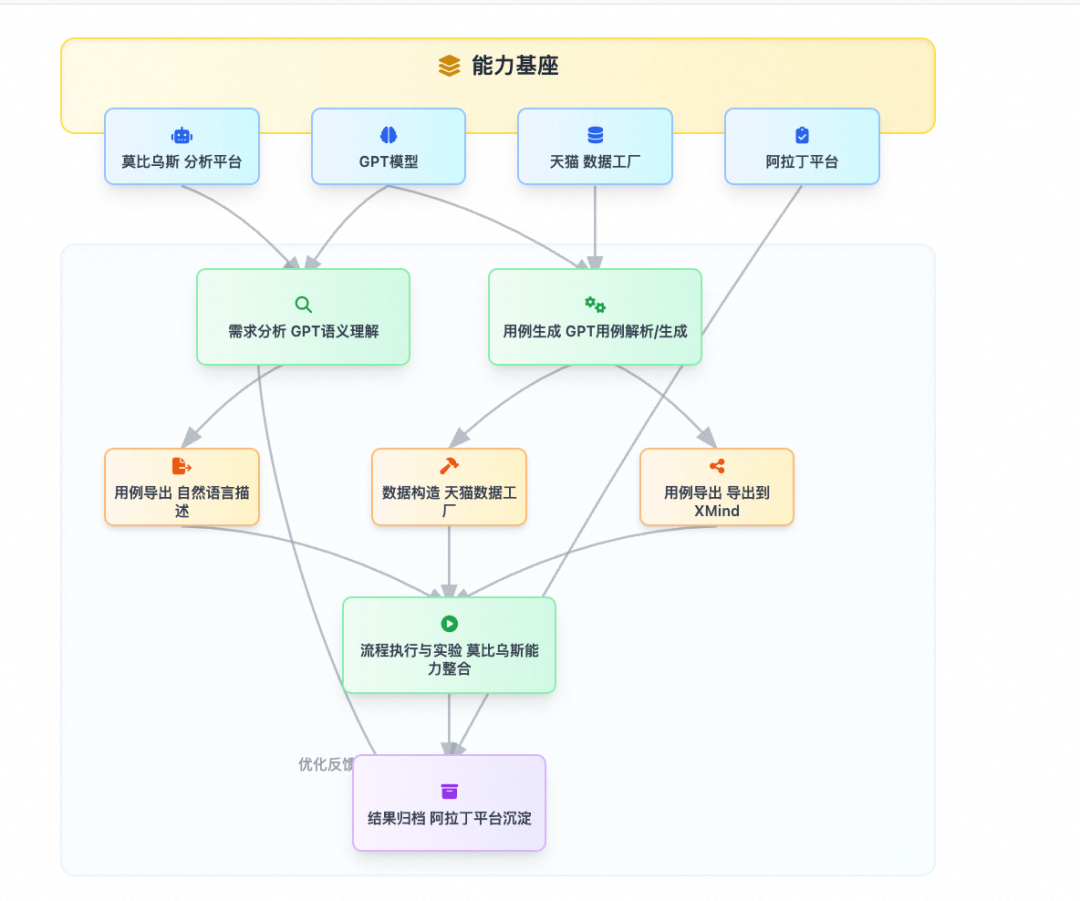

2.1 Process Orchestration & Unified Entry

- One-stop automation covering case design → data generation → execution → validation → report archiving

- Branching & exception handling in the workflow engine for complex scenarios

- Real-time integration with case management & external data sources
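To illustrate branching and exception handling in an orchestration engine, here is a deliberately tiny Python sketch. It is not Tmall's workflow engine; the `Step` type, the shared context dictionary, and the trace format are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Optional

Context = dict


@dataclass
class Step:
    name: str
    # Receives the shared context and returns new fields to merge into it.
    run: Callable[[Context], dict]
    # Optional predicate for branching: the step is skipped when it is False.
    when: Optional[Callable[[Context], bool]] = None


def run_pipeline(steps: list[Step], ctx: Context) -> Context:
    """Run steps in order, skipping those whose condition is false.

    On an exception the pipeline stops, records the failing step and the
    error message in the context, and returns it for human review.
    """
    ctx.setdefault("trace", [])
    for step in steps:
        if step.when is not None and not step.when(ctx):
            ctx["trace"].append(f"{step.name}: skipped")
            continue
        try:
            ctx.update(step.run(ctx))
            ctx["trace"].append(f"{step.name}: ok")
        except Exception as exc:
            ctx["trace"].append(f"{step.name}: failed")
            ctx["error"] = {"step": step.name, "message": str(exc)}
            break
    return ctx
```

Stopping at the first failure and keeping the trace matches the idea of human supervision for anomalies: the reviewer sees exactly which stage failed and what the pipeline had produced up to that point.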

2.2 AI Understanding & Scenario Modeling

- Natural language requirement input → structured test cases

- Large-model training to improve semantic understanding and scenario coverage

- Reasoning about exception cases to avoid missed risks
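Turning natural-language requirements into structured cases usually means validating whatever the model returns. The sketch below assumes the model was prompted to answer with a JSON list of case objects; the `StructuredCase` fields and the `kind` labels are hypothetical. The final check is a simple guard in the spirit of exception-case reasoning: it flags a case set that contains no exception path at all.

```python
import json
from dataclasses import dataclass


@dataclass
class StructuredCase:
    title: str
    steps: list[str]
    expected: str
    kind: str = "normal"  # "normal" or "exception" (hypothetical labels)


REQUIRED = ("title", "steps", "expected")


def parse_cases(model_output: str) -> list[StructuredCase]:
    """Turn a model's JSON reply into validated StructuredCase objects.

    Entries missing any required field (or with an empty one) are rejected
    with ValueError instead of being silently accepted.
    """
    cases = []
    for i, raw in enumerate(json.loads(model_output)):
        missing = [k for k in REQUIRED if not raw.get(k)]
        if missing:
            raise ValueError(f"case {i} missing {missing}")
        cases.append(
            StructuredCase(raw["title"], list(raw["steps"]), raw["expected"], raw.get("kind", "normal"))
        )
    return cases


def has_exception_coverage(cases: list[StructuredCase]) -> bool:
    """Require at least one exception-path case in the set."""
    return any(c.kind == "exception" for c in cases)
```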

2.3 Toolset & API Integration

- Case management, data construction, and validation platforms linked via APIs
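One way to picture linking the three platforms through APIs is to code against small interfaces, so each platform can be swapped or faked in tests. This sketch uses `typing.Protocol`; the interface names, method signatures, and in-memory fakes are hypothetical and do not describe the real platform APIs.

```python
from typing import Protocol


class CaseStore(Protocol):
    def save(self, case_id: str, body: dict) -> None: ...


class DataBuilder(Protocol):
    def build(self, case_id: str) -> dict: ...


class Validator(Protocol):
    def check(self, case_id: str, data: dict) -> bool: ...


def run_case(case_id: str, body: dict, store: CaseStore,
             builder: DataBuilder, validator: Validator) -> bool:
    """Chain case management, data construction, and validation for one case."""
    store.save(case_id, body)
    data = builder.build(case_id)
    return validator.check(case_id, data)


# In-memory fakes standing in for the real platforms.
class MemoryStore:
    def __init__(self) -> None:
        self.cases: dict[str, dict] = {}

    def save(self, case_id: str, body: dict) -> None:
        self.cases[case_id] = body


class StubBuilder:
    def build(self, case_id: str) -> dict:
        return {"case_id": case_id, "order_total": 100}


class TotalValidator:
    def check(self, case_id: str, data: dict) -> bool:
        return data.get("order_total", 0) > 0
```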

2.4 Test Data Factory & Intelligent Allocation

- AI matches product/store data to cases

- Validates data quality and coverage

- Maintains a live product pool for historical traceability
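Matching product or store data to cases can be sketched as attribute matching against a pool. The case schema (required attribute values), the product records, and the greedy strategy are assumptions for illustration; a greedy pass is simple but can leave a case unmatched when a smarter assignment would have covered it.

```python
def allocate(cases: dict[str, dict], pool: list[dict]) -> tuple[dict[str, str], list[str]]:
    """Greedily assign each case one unused product whose attributes match.

    cases: case ID -> required attribute values (hypothetical schema).
    pool:  product records, each with an "item_id" plus attributes.
    Returns (case ID -> item_id, list of unmatched case IDs).
    """
    used: set[str] = set()
    assigned: dict[str, str] = {}
    unmatched: list[str] = []
    for case_id, required in cases.items():
        for item in pool:
            if item["item_id"] in used:
                continue
            if all(item.get(k) == v for k, v in required.items()):
                assigned[case_id] = item["item_id"]
                used.add(item["item_id"])
                break
        else:  # no product in the pool satisfied this case
            unmatched.append(case_id)
    return assigned, unmatched


def coverage(assigned: dict[str, str], cases: dict[str, dict]) -> float:
    """Fraction of cases that received matching data."""
    return len(assigned) / len(cases) if cases else 1.0
```

The unmatched list is the useful output for data quality checks: it tells the data factory which kinds of products it still needs to create.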

2.5 Intelligent Validation, Reporting & Archiving

- Analyzes feedback from the transaction chain

- Supports multi-dimensional comparisons

- Outputs results in Markdown or XMind format and syncs them to the relevant platforms
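A minimal sketch of multi-dimensional comparison with a Markdown report, assuming expected and actual results are keyed by case ID and then by dimension (for example price or order status). The dimension names are hypothetical, and XMind output is not covered here.

```python
def compare(expected: dict[str, dict], actual: dict[str, dict]) -> list[tuple]:
    """Return (case, dimension, expected, actual) for every mismatch.

    A case or dimension missing from `actual` shows up with actual=None.
    """
    rows = []
    for case_id, dims in expected.items():
        got = actual.get(case_id, {})
        for dim, exp_val in dims.items():
            act_val = got.get(dim)
            if act_val != exp_val:
                rows.append((case_id, dim, exp_val, act_val))
    return rows


def to_markdown(rows: list[tuple]) -> str:
    """Render mismatches as a Markdown table for the test report."""
    lines = ["| Case | Dimension | Expected | Actual |", "| --- | --- | --- | --- |"]
    lines += [f"| {c} | {d} | {e} | {a} |" for c, d, e, a in rows]
    return "\n".join(lines)
```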

2.6 Collaborative Case Management

- Tracks requirement changes & supports multi-team reviews

- Turns accumulated test knowledge into reusable assets

- Enables AI-driven reuse for industry-level solutions
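Tracking requirement changes can be as simple as fingerprinting each requirement version and flagging linked cases for review when the text changes. The `Requirement` type and its fields are hypothetical; `hashlib.sha256` is the standard library function.

```python
import hashlib
from dataclasses import dataclass, field


def fingerprint(text: str) -> str:
    """Short content hash used to detect changed requirement text."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()[:12]


@dataclass
class Requirement:
    req_id: str
    text: str
    case_ids: list[str]  # cases linked to this requirement
    versions: list[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Record the fingerprint of the initial text as version 1.
        self.versions.append(fingerprint(self.text))

    def update(self, new_text: str) -> list[str]:
        """Record a new version and return linked cases that need review.

        Returns an empty list when the text is unchanged.
        """
        fp = fingerprint(new_text)
        if fp == self.versions[-1]:
            return []
        self.text = new_text
        self.versions.append(fp)
        return list(self.case_ids)
```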

---

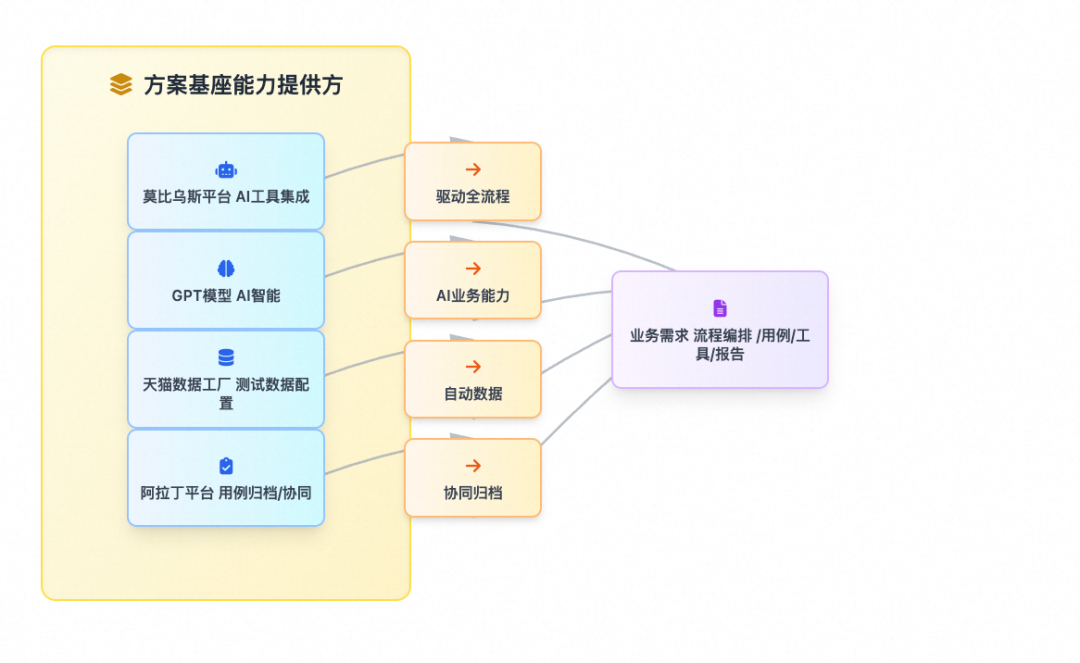

03 — Technical Architecture & Data Flow

Platform Capabilities:

---

04 — Outcomes & Future Trends

Current Results

- Automated testing now runs across business lines, making the cycle from requirements to validation 40% faster

- AI-generated cases cover 70% or more of scenarios

Innovation Outlook

- Industry-level testing knowledge base

- R&D–Testing integration for smoother collaboration
