Gemini 3 Is Finally Here: What Is Its Real Ace?

Gemini 3 Pro Preview — A Deep Dive

When the Gemini 3 Pro preview went live, many reacted with a simple “Finally, it’s here.”

After weeks of teasers (leaks, hints, and promises of more parameters, smarter reasoning, and more advanced image generation), users were eager to try it. Competitive moves from OpenAI and xAI's Grok reinforced the sense that Gemini 3 was a major rollout for Google.

---

Headline Features

Google’s main selling points for Gemini 3 feel familiar, but more refined:

- Powerful reasoning

- Natural conversation

- Native multimodal understanding

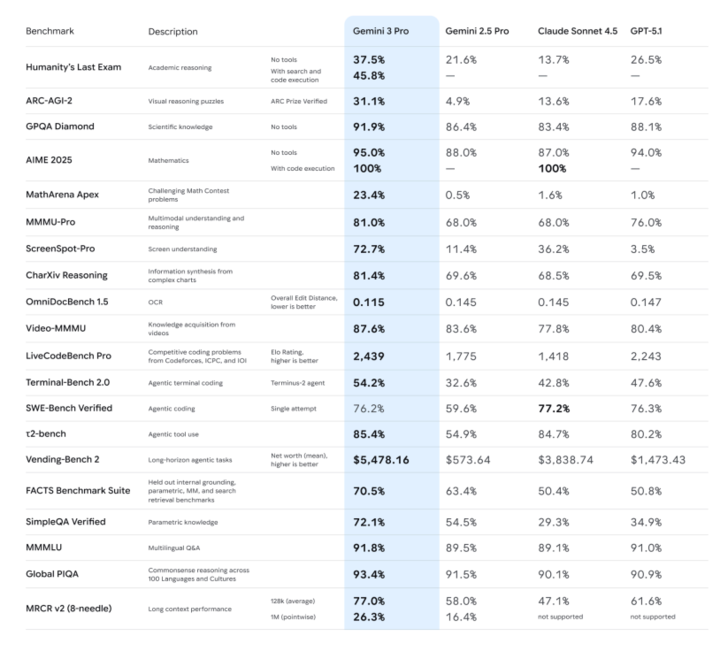

Google states that Gemini 3 surpasses Gemini 2.5 across a wide range of academic benchmarks.

> Key takeaway: Gemini 3 is more than just a model refresh — it’s a strategic “system update” for Google’s ecosystem.

---

Model Upgrades — The Hard Numbers

Reasoning ability:

- Set new highs on Humanity’s Last Exam, GPQA Diamond, MathArena, and other advanced reasoning and mathematics benchmarks.

- Google positions this as PhD-level reasoning.

Multimodal understanding:

- Processes images, PDFs, and long video content.

- Achieves top scores on MMMU-Pro and Video-MMMU.

- Stronger image discussion and video summarization capabilities.

Deep Think mode:

- Improves performance on novel problems such as ARC-AGI.

> Metrics give context — but the story is where Google deploys this model and what it connects together.

---

Native Multimodality — A True Milestone

Google introduced native multimodality back in 2023 with Gemini 1:

- Text, code, images, audio, and video are ingested directly as pretraining data.

- This avoids the pipeline approach of adding speech or image modules after training a text-only model.

Pipeline issues:

- Speech: transcribed by an ASR system, then passed to the LLM as text.

- Images: encoded separately, then integrated later.

- Result: information is lost at each handoff.

Native multimodality advantages:

- Unified Transformer handles all modalities from the start.

- Less loss of tone, detail, and temporal sequence.

- Enables fluid, multi-signal interaction — more than Q&A.
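
The contrast between the two approaches can be illustrated with a toy sketch. Everything in it is hypothetical: `AudioFrame`, `pipeline_input`, and `native_input` are illustrative names, and the per-frame tuple is a stand-in, not how Gemini actually represents audio. It only shows that a transcript-first pipeline discards pitch and timing before the language model sees anything, while a joint representation can keep them.

```python
from dataclasses import dataclass


@dataclass
class AudioFrame:
    """Hypothetical unit of speech: a word plus prosody and timing."""
    word: str
    pitch_hz: float
    start_s: float


def pipeline_input(frames):
    # Pipeline approach: an ASR step reduces speech to plain text first,
    # so pitch and timing never reach the language model.
    return {"text": " ".join(f.word for f in frames)}


def native_input(frames):
    # Native approach (toy stand-in): every frame keeps its prosody and
    # timestamp, so the model could in principle attend to them.
    return {"frames": [(f.word, f.pitch_hz, f.start_s) for f in frames]}


speech = [
    AudioFrame("really", pitch_hz=310.0, start_s=0.0),  # high-pitched onset
    AudioFrame("fine", pitch_hz=120.0, start_s=0.9),    # flat, after a pause
]

print(pipeline_input(speech))  # only the words survive
print(native_input(speech))    # words plus pitch and timing
```

In the pipeline output, a sarcastic “really fine” and a sincere one are indistinguishable; in the second representation the difference is at least still present in the input.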

---

Ecosystem Integration — Search to Antigravity

AI Mode in Search

Gemini 3 powers dynamic content blocks — summaries, structured cards, timelines — directly within Search.

- Activation is trigger-based rather than always on.

- It was released in Search simultaneously with the model launch, which is rare.

Connected Products

Google calls Gemini 3 a “thinking partner”:

- Offers direct answers and opinions, and can act independently.

- Can watch video, coach movements, listen to lectures, and generate flash cards.

- Combines notes, PDFs, and web pages into concise multimodal summaries.

API Capabilities

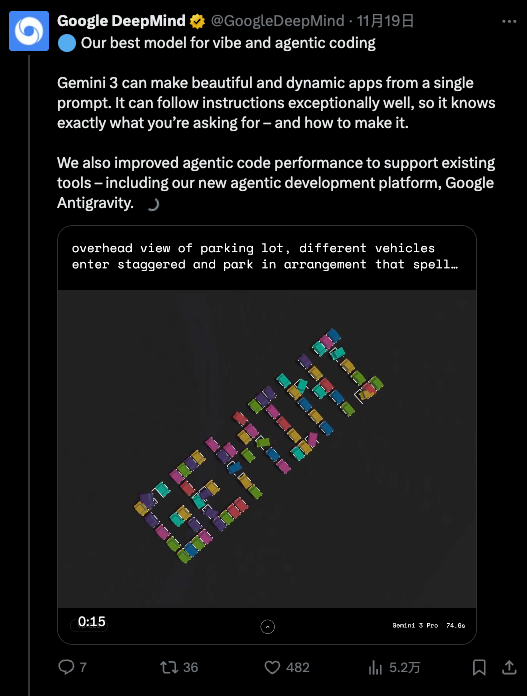

- Ideal for agentic coding and vibe coding (frontend + interactive design).

- Handles tool orchestration and multi-step development tasks.
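
Tool orchestration of this kind usually comes down to a loop: the model picks a tool, the host runs it, and the result is fed back until the model produces a final answer. The following is a minimal sketch of that loop and does not use the Gemini API. `scripted_model` is a hypothetical stand-in for a real model, and `TOOLS`, `run_agent`, and the message format are assumptions made for illustration.

```python
def add(a, b):
    return a + b


def word_count(text):
    return len(text.split())


# Hypothetical tool registry: name -> plain Python callable.
TOOLS = {"add": add, "word_count": word_count}


def scripted_model(history):
    """Stand-in for a model: chooses the next step from prior tool results."""
    results = [m["content"] for m in history if m["role"] == "tool"]
    if len(results) == 0:
        return {"tool": "word_count", "args": {"text": "native multimodal models"}}
    if len(results) == 1:
        return {"tool": "add", "args": {"a": results[0], "b": 10}}
    return {"final": f"Result: {results[-1]}"}


def run_agent(model, tools, prompt, max_steps=5):
    history = [{"role": "user", "content": prompt}]
    for _ in range(max_steps):
        decision = model(history)
        if "final" in decision:
            return decision["final"]
        # Run the requested tool and feed its result back to the model.
        output = tools[decision["tool"]](**decision["args"])
        history.append({"role": "tool", "name": decision["tool"], "content": output})
    raise RuntimeError("step budget exhausted without a final answer")


print(run_agent(scripted_model, TOOLS, "Count the words, then add 10."))
```

The `max_steps` cap is a design choice worth keeping in any real version: it bounds a multi-step task that never converges.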

---

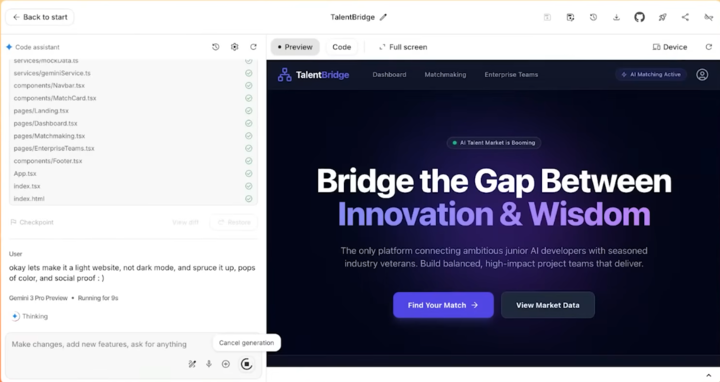

Antigravity — AI-Led Development Environment

Core features:

- Multiple AI agents access editor, terminal, and browser.

- Agents divide the work: code writing, research, and testing.

- Artifacts log all operations (task lists, screenshots, videos).
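
The idea of dividing tasks among agents while logging every operation as a reviewable artifact can be sketched as a small data model. This is not Antigravity's actual interface: `Artifact`, `ArtifactLog`, the agent names, and the `ASSIGNMENTS` list are all hypothetical, and the agents here do no real work.

```python
from dataclasses import dataclass, field


@dataclass
class Artifact:
    agent: str    # which agent produced it
    surface: str  # "editor", "terminal", or "browser"
    kind: str     # e.g. "task_list", "screenshot", "video"
    note: str


@dataclass
class ArtifactLog:
    entries: list = field(default_factory=list)

    def record(self, agent, surface, kind, note):
        self.entries.append(Artifact(agent, surface, kind, note))

    def by_agent(self, agent):
        return [a for a in self.entries if a.agent == agent]


# Hypothetical task division: each step names an agent and the surface it uses.
ASSIGNMENTS = [
    ("coder", "editor", "task_list", "write the page layout"),
    ("researcher", "browser", "screenshot", "capture the reference site"),
    ("tester", "terminal", "task_list", "run the test suite"),
    ("tester", "browser", "video", "record a click-through of the result"),
]

log = ArtifactLog()
for agent, surface, kind, note in ASSIGNMENTS:
    # A real agent would do the work here; the sketch only logs it.
    log.record(agent, surface, kind, note)

for artifact in log.by_agent("tester"):
    print(artifact.surface, artifact.kind, artifact.note)
```

Keeping the log separate from the agents means a human can review what happened without replaying the session.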

Demo highlight:

Given only “copy everything” instructions, Gemini built an entire website — asset configuration and deployment included.

> Gemini 3 is now a strategic backbone across Search, Apps, Workspace, and developer tools — focused on collaboration as much as intelligence.

---

Industry Impact

Raising the Bar

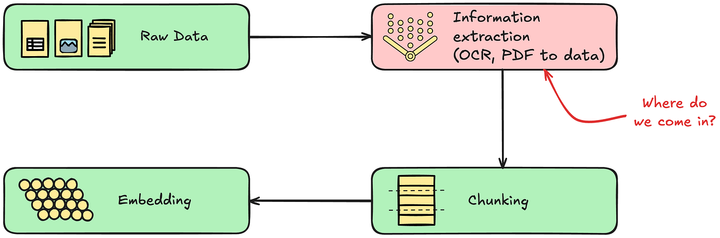

Gemini 3 pushes the LLM field toward native multimodality as a baseline:

- Vision and audio are now core capabilities, not bonuses.

- End-to-end understanding will push out “OCR + screenshot” pipelines.

Competitive Pressures

- Agentic-workflow startups face direct competition from the base model.

- On-device mobile AI points to a shift from a cloud arms race to an integration war across phones, glasses, and cars.

---

Paradigm Shift — From Strength to Presence

Early LLM battles asked: “Whose model is stronger?”

Now: “Whose capabilities are embedded seamlessly into daily life?”

For creators and developers:

Platforms like AiToEarn (official site) provide AI-powered, cross-platform publishing and monetization, connecting tools like Gemini 3 to workflows spanning Douyin, Kwai, Bilibili, Instagram, LinkedIn, YouTube, Pinterest, and X (Twitter).

---

Google’s Roadmap

Gemini 3 is structured as a stack:

- Foundational model (native multimodality)

- Tool invocation + agentic architecture

- Integration with Search, Gemini App, Workspace, Antigravity

In effect, it is a unified intelligence bus across Google’s ecosystem.

Impact will be measured in adoption, not launch hype.

---

> Bottom line: Gemini 3 isn't just a "smarter model" — it's Google's embedded modular intelligence strategy. If creators and professionals use platforms that unify generation, publishing, and monetization, the shift from model scores to real-world presence will accelerate.