Gen Z Team Builds 3D Foundation Model, Secures Major Game Partnership, Redefining 3D Generation Rules

Yingmou Tech and the Rise of AI-Driven 3D Generation

---

From Lab to Global Stage

A year and a half ago, the young founding team of Yingmou Tech took their unreleased 3D generative model, Rodin, to the Game Developers Conference (GDC) in San Francisco and demoed it live for some of the world’s top game developers.

That live demo captured the attention of multiple game studios. Eventually, Rodin-powered Hyper3D.AI brought large-scale, real-time 3D generation into practical mobile game development.

Their research paper — "CLAY: Controllable Large-scale Generative Model for Creating High-quality 3D Assets" — and another paper from the same team were both nominated for Best Paper at SIGGRAPH, the world’s leading computer graphics conference.

CTO Zhang Qixuan reflected:

> "Getting one Best Paper nomination could be luck. Getting two at once… I'm not sure whether that’s good luck or bad luck."

---

01 — What Happens When a 3D Model Explodes?

CLAY is trained entirely with native 3D data, overcoming limitations in dataset size and model parameters that typically hamper 3D work. This breakthrough yielded emergent behavior: the ability to generate brand-new objects never seen during training, shifting 3D generation from experimental to production-viable.

From their early light-field capture experiments at ShanghaiTech University, this team has consistently been at the forefront of native 3D R&D.

---

Major Players Are Entering 3D AI

- Roblox — open-sourced CUBE 3D and launched Mesh Generator API

- ByteDance — released Seed3D 1.0, built on a DiT (Diffusion Transformer) architecture

- Tencent Hunyuan — scaled 3D model parameters from 1B to 10B

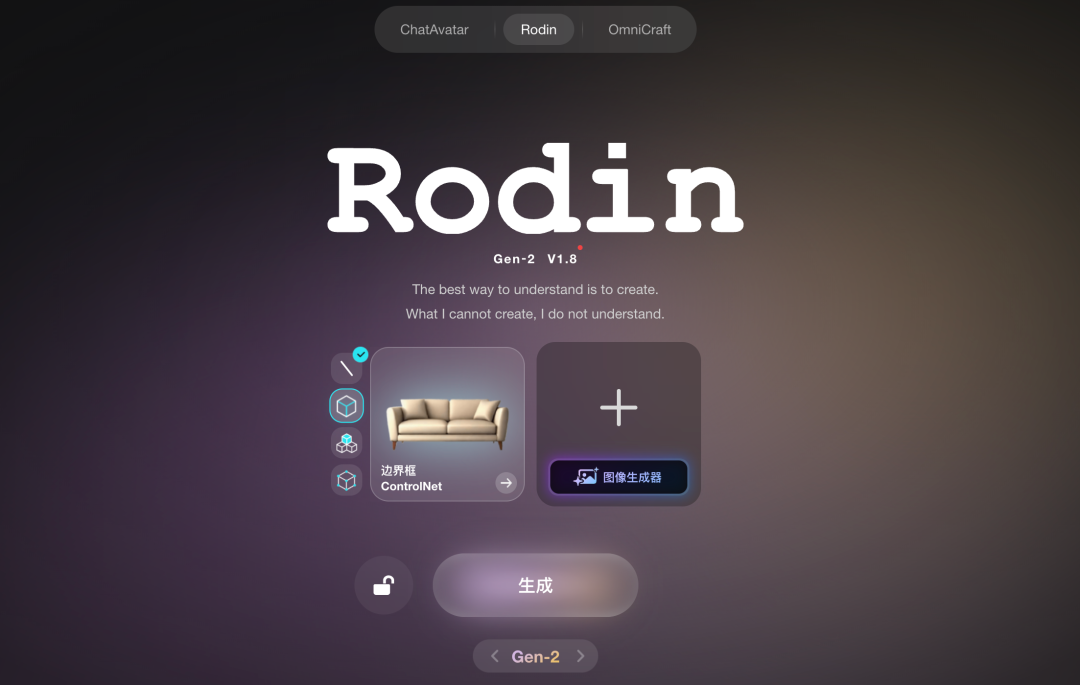

Yingmou Tech’s response: Rodin Gen-2

- Dataset scale: millions of samples

- Model size: 10 billion parameters

- Quality leap: cleaner geometric surfaces, less post-processing

- Supports million-face meshes, HD textures on low-poly models, high-res material outputs

---

Production-Ready Meshes

The mesh defines a 3D model’s structure, smoothness, and deformability. Cleaner meshes mean less cleanup in tools like Blender or Unity — shortening the gap to production readiness.

---

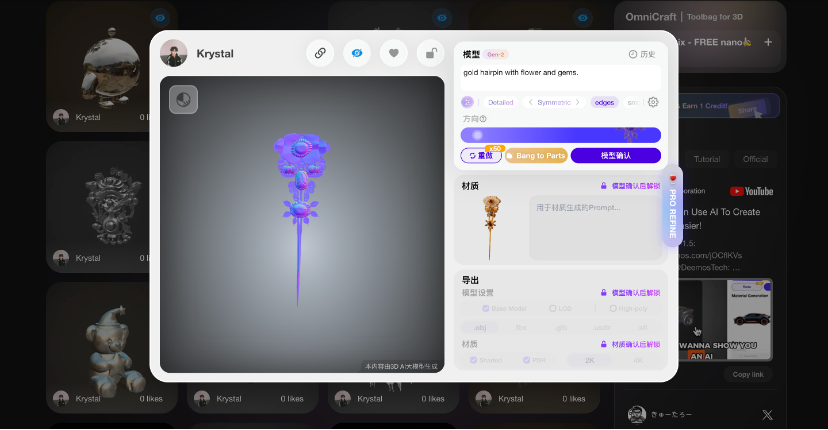

Breakthrough: Bang to Parts

Rodin Gen-2 introduces Bang to Parts: select any generated model and explode it into components along its original structure.

Why it matters:

- Gaming: modular equipment swapping

- Industrial design: module-level detail optimization

- 3D printing: large object splitting for production

Old way: generate parts individually, manually adjust relationships

New way: generate whole → split intelligently → edit components
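
The geometric idea behind an exploded view can be sketched in a few lines. The Python below is only an illustration and assumes the parts are already known: it pushes each part away from the model's overall centroid. It is not BANG's generative method, which has to infer the parts in the first place, and the names `explode_parts` and `sword` are hypothetical.

```python
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


def centroid(points: List[Vec3]) -> Vec3:
    """Average position of a list of 3D points."""
    n = len(points)
    return (
        sum(p[0] for p in points) / n,
        sum(p[1] for p in points) / n,
        sum(p[2] for p in points) / n,
    )


def explode_parts(parts: Dict[str, List[Vec3]], factor: float = 1.0) -> Dict[str, List[Vec3]]:
    """Translate each part away from the centroid of the whole model.

    `parts` maps a part name to its vertices. Each part keeps its shape
    and is moved as a rigid unit by `factor` times the offset between its
    own centroid and the model centroid.
    """
    all_points = [p for verts in parts.values() for p in verts]
    cx, cy, cz = centroid(all_points)

    exploded: Dict[str, List[Vec3]] = {}
    for name, verts in parts.items():
        px, py, pz = centroid(verts)
        dx, dy, dz = (px - cx) * factor, (py - cy) * factor, (pz - cz) * factor
        exploded[name] = [(x + dx, y + dy, z + dz) for x, y, z in verts]
    return exploded


# Hypothetical two-part model: a blade sitting on top of a hilt.
sword = {
    "blade": [(0.0, 1.0, 0.0), (0.0, 3.0, 0.0)],
    "hilt": [(0.0, -1.0, 0.0), (0.0, 1.0, 0.0)],
}
exploded = explode_parts(sword, factor=1.0)
print(exploded["blade"])  # blade shifted up the y axis
print(exploded["hilt"])   # hilt shifted down the y axis
```

With `factor=0` the model stays assembled; larger values spread the parts further apart, which is what makes each component easy to select and edit on its own.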

Bang to Parts: instantly reveal component structure

---

02 — 3D Scaling & Post-Training

Bang to Parts parallels post-training in AI: refining a foundational 3D model to understand object-part relationships.

The same pattern has played out in text, image, and video models:

- Generate → Understand

- Understand → Generate

- Understanding by Generation

The paper "BANG: Dividing 3D Assets via Generative Exploded Dynamics" was selected for the Top 10 Technical Papers Fast Forward at SIGGRAPH 2025.

---

Best Paper Win

Although the team won a SIGGRAPH 2025 Best Paper award for CAST, which generates a scene from a single image, Zhang Qixuan was most excited about the recognition BANG received for its impact on workflows.

---

Breaking Industry Consensus

When others followed 2D-to-3D pipelines, Yingmou trained native 3D models from scratch — delaying product launch by 6 months but achieving true production fidelity.

---

Quality & Controllability as Core Threads

- Quality: Gen-1 achieved native 3D fidelity; Gen-2 pushed precision with more parameters.

- Controllability: From 3D ControlNet (bounding box, voxel, point cloud) to BANG’s part-level editing.

Rodin’s exclusive 3D ControlNet
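
To make the three control signals concrete, the sketch below derives a bounding box and a coarse voxel set from a small point cloud, using only the Python standard library. It shows how the signals relate to one another and assumes nothing about how Rodin's 3D ControlNet actually consumes them; the function names are hypothetical.

```python
import math
from typing import List, Set, Tuple

Vec3 = Tuple[float, float, float]
Voxel = Tuple[int, int, int]


def bounding_box(points: List[Vec3]) -> Tuple[Vec3, Vec3]:
    """Axis-aligned bounding box as (min corner, max corner)."""
    xs, ys, zs = zip(*points)
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))


def voxelize(points: List[Vec3], voxel_size: float) -> Set[Voxel]:
    """Occupied voxel indices, measured from the bounding box's min corner."""
    lo, _ = bounding_box(points)
    return {
        (
            math.floor((x - lo[0]) / voxel_size),
            math.floor((y - lo[1]) / voxel_size),
            math.floor((z - lo[2]) / voxel_size),
        )
        for x, y, z in points
    }


# A tiny illustrative point cloud: the most detailed of the three signals.
cloud = [(0.0, 0.0, 0.0), (0.4, 0.1, 0.0), (1.2, 0.0, 0.0), (1.9, 1.9, 1.9)]

print(bounding_box(cloud))   # coarsest signal: overall extent only
print(voxelize(cloud, 1.0))  # middle ground: which cells are occupied
```

The three inputs trade precision for effort: a bounding box fixes only overall proportions, voxels rough out the silhouette, and a point cloud pins down the surface itself.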

Hyper3D.AI delivered a new feature every 9 days over 16 months — including Partial Redo for local model edits.

---

03 — Hidden 3D Powering Visible Applications

Artwork by T-BOY using Hyper3D.AI Rodin

---

Generation Modes

To meet varied 3D demands:

- Zero: low-poly optimization for <10s generation times

- Focal: high detail

- Speedy: fast previews

- Default: balance of smoothness and detail

Artwork by Dzysmile using Hyper3D.AI Rodin

---

Beyond Gaming

Partnerships with consumer-grade 3D printer makers enable physical prints of Rodin models.

But 3D will remain “hidden infrastructure” in many applications — quietly enabling spatial consistency, fidelity, and integration.

---

Market-Driven Expansion

The team aims for horizontal growth:

- Gaming → Film modeling → Industrial use cases

- Goal: turn algorithms into SaaS

- Principle: “Market demand comes first.”

---

Why 3D Is Fundamental

An explicit 3D representation removes the spatial ambiguity inherent in 2D images, so an object's shape stays consistent from every viewpoint.

Example: when new views of an object are generated from a single image, working from a 3D model keeps perspective and occlusion correct.

Long-term vision: As AI evolves toward real-world spatial reasoning (AR/VR, industrial design, robotics), 3D will be a cornerstone technology.

---

In summary: Yingmou Tech’s journey blends deep technical R&D, bold strategic decisions, and ecosystem connections — redefining quality, controllability, and workflow integration for next-generation 3D content creation.