Goodbye GUI! CAS Team Launches “LLM-Friendly” Computer Interface

Large Model Agents Automating PC Operations: Dream vs. Reality

The Current Pain Points of LLM-Based Agents

Most LLM-based agents today face two major challenges:

- Low success rate – Even moderately complex tasks often leave the agent stuck or failing midway.

- Poor efficiency – Even simple tasks may require dozens of slow, back-and-forth interactions, testing user patience.

Is this simply because large models aren’t yet “smart enough”?

Surprisingly, research from the Institute of Software, Chinese Academy of Sciences suggests otherwise:

> The real bottleneck is the GUI (Graphical User Interface) — a design paradigm unchanged for over 40 years.

The GUI was built for human users, not AI agents, and its design philosophy clashes with LLM capabilities.

---

Why GUI Is a Mismatch for LLMs

GUI-based applications expose their functionality through navigation and interaction rather than direct commands.

Examples:

- Hidden controls – Nested in menus, tabs, or dialogs; reaching them takes repeated navigation steps.

- Interactive elements – Scroll bars and selection tools demand an “observe–act” loop: act, check, repeat.
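To make the cost of the observe–act loop concrete, here is a minimal sketch with a simulated scroll bar. `SimulatedScrollbar` and `scroll_with_observe_act` are hypothetical names invented for this illustration, and the fixed 10% step is an assumption; in a real agent each loop iteration would also cost one LLM call.

```python
from dataclasses import dataclass


@dataclass
class SimulatedScrollbar:
    """Toy stand-in for a GUI scroll bar; position is a percentage (0-100)."""
    position: int = 0

    def scroll_down(self, step: int = 10) -> None:
        # One low-level GUI action: nudge the scroll bar by `step` percent.
        self.position = min(100, self.position + step)


def scroll_with_observe_act(bar: SimulatedScrollbar, target: int) -> int:
    """Scroll until `target` percent is reached; return the number of round trips."""
    round_trips = 0
    while bar.position < target:   # observe: check the current position
        bar.scroll_down()          # act: perform one small scroll
        round_trips += 1           # repeat: every iteration is one round trip
    return round_trips


bar = SimulatedScrollbar()
steps = scroll_with_observe_act(bar, target=80)
print(f"Reached {bar.position}% after {steps} observe-act round trips")
```

Under these assumptions, reaching 80% takes eight round trips, where a direct "set position" command would take one.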

Four Assumptions GUI Designers Make About Humans

- Good eyesight – Quick visual scanning of buttons and menus.

- Fast actions – Near-instant reaction time for repeated tasks.

- Limited memory – Interfaces show few options to avoid overwhelming humans.

- Avoid deep thinking – Humans prefer multiple-choice selection to recalling exact rules.

Where LLMs Differ

- Poor eyesight – Limited ability to parse visual layouts accurately.

- Slow response cycles – Each reasoning step can take seconds to minutes.

- Vast memory – Can take in large amounts of information at once, so options need not be hidden.

- Prefer structured rules – Suited for generating formal, precise instructions.

Outcome:

LLMs end up doing both high-level planning and low-level execution — the GUI forces them into tedious, error-prone mechanical work.

---

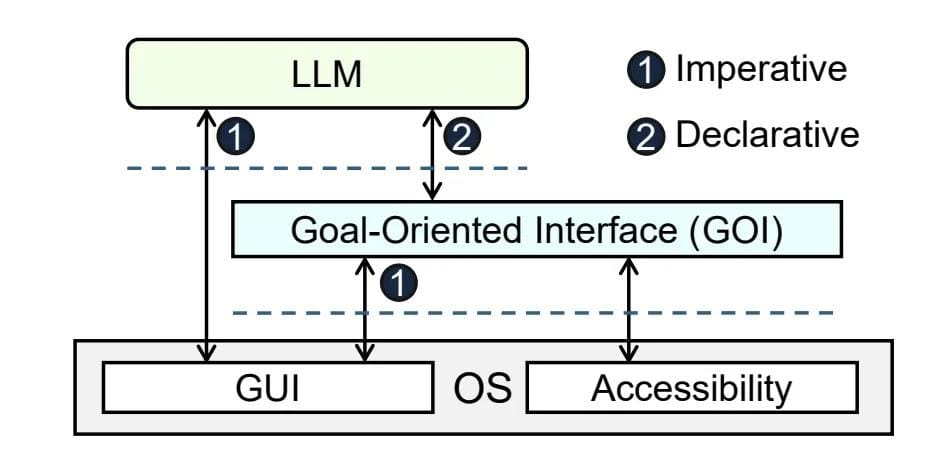

A New Paradigm: Declarative vs. Imperative Interfaces

The Core Question

Instead of telling the AI how to click every button, can we let it specify the goal, while a fast module handles the UI navigation?

Proposed Solution:

> GOI – Goal-Oriented Interface

A new abstraction layer based on OS/application accessibility mechanisms.

---

Policy–Mechanism Separation

- Policy – What to do: High-level planning like “Set all presentation backgrounds to blue.”

- Mechanism – How to do it: Actual navigation (“Click `Design` → `Format Background` → `Solid Fill`...”), handled by automation.

Separating these layers removes the mechanical burden from the LLM.
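The split can be sketched in a few lines. This is an illustrative toy, not the paper's implementation: `CLICK_PATHS` and `mechanism` are hypothetical names, and the lookup table simply hard-codes the menu path from the example above.

```python
from typing import Dict, List

# Hypothetical lookup table for illustration: target control -> click path.
# The labels follow the article's PowerPoint example.
CLICK_PATHS: Dict[str, List[str]] = {
    "Solid Fill": ["Design", "Format Background", "Solid Fill"],
}


def mechanism(target: str) -> List[str]:
    """Mechanism layer: expand a target control into concrete click actions."""
    return [f"click({label!r})" for label in CLICK_PATHS[target]]


# Policy layer: the planner (an LLM in the article) names only the target.
policy_decision = "Solid Fill"
actions = mechanism(policy_decision)
print(actions)
```

The planner emits one decision, and the deterministic layer produces the three clicks without further model calls.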

---

GOI in Action

GOI replaces repetitive UI instructions with three declarative primitives:

- Access – Directly visit a target control by ID (`visit`).

- State – Set control states directly, for example:

  - `set_scrollbar_pos(80%)`

  - `select_lines()`

- Observation – Retrieve structured info (`get_texts()`), no image parsing required.
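As a rough sketch of how the three primitives might look to a caller, the toy class below keeps controls in an in-memory dictionary. Only the names `visit`, `set_scrollbar_pos`, and `get_texts` come from the article; `ToyGOI`, `Control`, the control IDs, and the exact signatures (for example, passing a control ID to `set_scrollbar_pos`) are assumptions made for this example. `select_lines()` is omitted for brevity.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Control:
    """Toy accessibility node; the fields are assumptions for this sketch."""
    control_id: str
    text: str = ""
    scroll_percent: int = 0


@dataclass
class ToyGOI:
    controls: Dict[str, Control] = field(default_factory=dict)
    visited: List[str] = field(default_factory=list)

    def visit(self, control_id: str) -> Control:
        # Access: jump straight to a control by ID, with no menu navigation.
        control = self.controls[control_id]
        self.visited.append(control_id)
        return control

    def set_scrollbar_pos(self, control_id: str, percent: int) -> None:
        # State: declare the desired end state instead of scrolling step by step.
        if not 0 <= percent <= 100:
            raise ValueError("percent must be between 0 and 100")
        self.controls[control_id].scroll_percent = percent

    def get_texts(self) -> Dict[str, str]:
        # Observation: return structured text, so no screenshot parsing is needed.
        return {cid: c.text for cid, c in self.controls.items() if c.text}


goi = ToyGOI({
    "doc.scrollbar": Control("doc.scrollbar"),
    "doc.title": Control("doc.title", text="Quarterly Report"),
})
goi.visit("doc.scrollbar")
goi.set_scrollbar_pos("doc.scrollbar", 80)
texts = goi.get_texts()
print(texts)
```

Each call states a desired result; how the underlying UI gets there is left to the layer beneath.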

Implementation Stages

1. Offline Modeling

GOI explores accessible controls and builds a UI Navigation Graph.

- Removes loops and merges paths into a Forest structure — every function has a single, unambiguous path.
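One simple way to get a single path per control is a breadth-first traversal that keeps only the first edge found into each node. The sketch below uses that approach as a stand-in; the paper's actual algorithm may differ, and `build_forest`, `path_to`, and the toy graph (including the "Variants" route) are hypothetical.

```python
from collections import deque
from typing import Dict, List, Optional


def build_forest(graph: Dict[str, List[str]], roots: List[str]) -> Dict[str, Optional[str]]:
    """Return a parent map in which every reachable control has exactly one parent."""
    parent: Dict[str, Optional[str]] = {root: None for root in roots}
    queue = deque(roots)
    while queue:
        node = queue.popleft()
        for child in graph.get(node, []):
            if child not in parent:   # skip back edges and duplicate routes
                parent[child] = node
                queue.append(child)
    return parent


def path_to(parent: Dict[str, Optional[str]], target: str) -> List[str]:
    """Follow parent pointers from the target up to its root."""
    path: List[str] = []
    node: Optional[str] = target
    while node is not None:
        path.append(node)
        node = parent[node]
    return path[::-1]


# Toy navigation graph with a loop and a second route to the same control.
graph = {
    "Design": ["Format Background", "Variants"],
    "Format Background": ["Solid Fill", "Design"],  # edge back to Design forms a loop
    "Variants": ["Format Background"],              # alternative route (hypothetical)
}
forest = build_forest(graph, roots=["Design"])
print(path_to(forest, "Solid Fill"))
```

Because each node keeps one parent, the path to any control is unique, which is what lets the online stage resolve a target without asking the LLM to choose a route.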

2. Online Execution

LLMs issue declarative commands; GOI handles UI mechanics.

No need for app-specific APIs — works via standard Accessibility features.

---

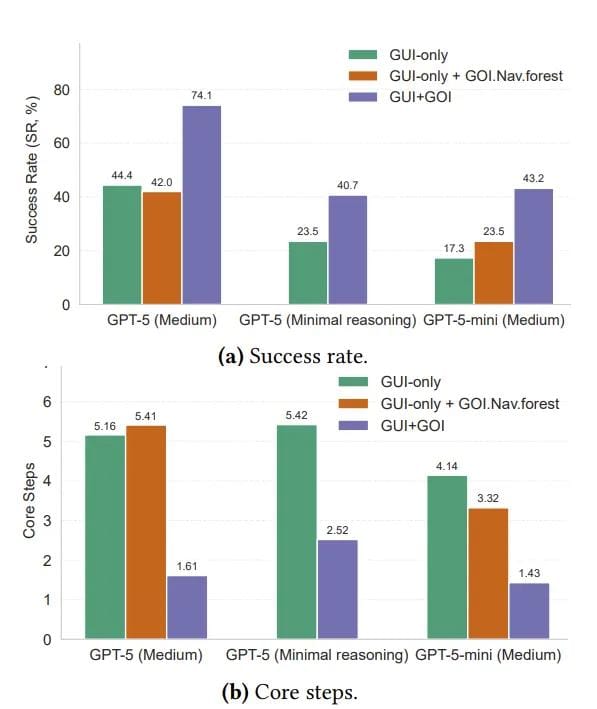

Performance Gains

Benchmark: OSWorld-W (Word, Excel, PowerPoint tasks)

- Success rate with GPT‑5 rose from 44% to 74%, a gain of 30 percentage points.

- In over 61% of successful tasks, a single LLM call was enough to finish the job.

Failure Pattern Shift:

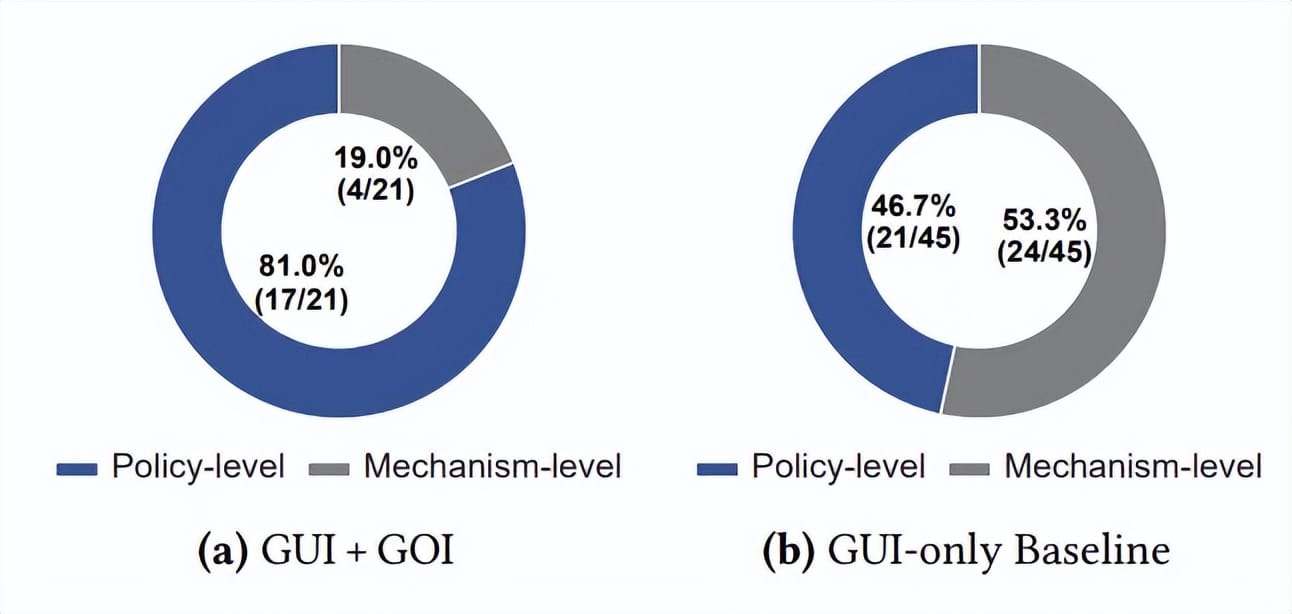

- GUI baseline: 53.3% of failures were mechanical errors (misclicks, wrong control IDs).

- GOI: 81% of failures were strategic errors (semantic misunderstandings).

👉 LLMs now fail due to reasoning problems, not navigation — a healthier bottleneck.

---

Industry Trend: Separating Strategy From Execution

Platforms like AiToEarn (official site) show the benefits of this philosophy at scale:

- Open-source platform for monetizing AI-generated content globally

- Supports publishing to Douyin, Kwai, WeChat, Bilibili, Xiaohongshu, Facebook, Instagram, LinkedIn, Threads, YouTube, Pinterest, Twitter/X

- AI focuses on creative strategy, while automation handles cross-platform content delivery.

The AiToEarn blog extends this with analytics and model rankings, an approach that echoes GOI principles in content creation.

---

Should OS and Apps Provide “LLM-Friendly” Declarative Interfaces?

Potential Benefits:

- Structured semantics – Functions and parameters defined clearly.

- Goal-driven APIs – Commands describe end states, not step-by-step actions.

- Cross-platform standards – Agents learn once, apply everywhere.

- Safety metadata – Context on permissions and side-effects for reliable execution.
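To illustrate what such an interface could publish, here is a speculative sketch of a machine-readable capability description with a goal check. Nothing here comes from an existing standard or from the paper: `CapabilitySpec`, `validate_goal`, the field names, and the `set_background` capability are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class CapabilitySpec:
    """Hypothetical description an app could publish for one function."""
    name: str
    parameters: Dict[str, str]                 # parameter name -> type label
    requires_permission: str = "none"          # safety metadata
    side_effects: List[str] = field(default_factory=list)


def validate_goal(spec: CapabilitySpec, goal: Dict[str, object]) -> List[str]:
    """Return a list of problems; an empty list means the goal matches the spec."""
    problems = [f"missing parameter: {p}" for p in spec.parameters if p not in goal]
    problems += [f"unknown parameter: {p}" for p in goal if p not in spec.parameters]
    return problems


spec = CapabilitySpec(
    name="set_background",
    parameters={"scope": "str", "color": "str"},
    requires_permission="edit_document",
    side_effects=["modifies all slides"],
)

# A goal describes the desired end state, not the clicks needed to reach it.
ok = validate_goal(spec, {"scope": "all_slides", "color": "blue"})
bad = validate_goal(spec, {"colour": "blue"})
print(ok, bad)
```

With a spec like this, a malformed request can be rejected before anything touches the UI, and the permission and side-effect fields give the agent something concrete to check before acting.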

Possible Use Case

A productivity AI could:

- Schedule meetings across calendar apps

- Draft and edit documents

- Summarize discussions

- Distribute outputs via email/messaging

All without fragile UI parsing.

---

📄 Paper link: https://arxiv.org/abs/2510.04607 — for full technical details.

---

In Short:

GOI demonstrates that freeing LLMs from mechanical tasks dramatically improves performance.

Future OSes and apps should consider declarative, LLM-friendly interfaces to unlock agents that are faster, more reliable, and better at strategic reasoning.