Goodbye GUI! CAS Team Launches "LLM-Friendly" Computer Interface

Large Model Agents Operating Your Computer — Big Dreams, Harsh Reality

Current LLM-based agents face two persistent pain points:

- Low success rate — Even slightly complex tasks can cause agents to stall or “crash,” getting stuck without knowing what to do.

- Poor efficiency — Completing simple tasks often takes dozens of unnecessary back-and-forth steps, wasting time and patience.

So, is the problem simply that large models aren’t “smart” enough?

Not exactly. Research indicates the real bottleneck is something we’ve been comfortable with for 40+ years: the graphical user interface (GUI).

---

The GUI–LLM Mismatch

The GUI revolution began in the 1980s and transformed human–computer interaction. GUIs are designed for humans, and that design philosophy clashes with how LLMs operate.

Researchers at the Institute of Software, Chinese Academy of Sciences identify the core problem: application functions are not directly accessible. Reaching one requires navigation (menus, tabs, dialogs) and interaction (scrolling, selecting text, repeated adjustments).

This imperative design assumes four human traits:

- Good eyesight — quick visual recognition of buttons and menus.

- Fast actions — humans complete “observe–act” loops rapidly.

- Limited memory — interfaces present few options at once.

- Reluctance to think deeply — humans prefer choices over learning complex rules.

How LLM Abilities Clash

- Poor visual perception — struggles with pixel-level recognition.

- Slow step execution — reasoning takes seconds to minutes per step.

- Excellent memory — capable of handling large context sizes.

- Strong structured output skills — excels at precise, structured commands.

Result: When paired with GUIs, LLMs must act as both strategist and operator, executing low-level steps they are poor at — leading to inefficiency and high error rates.

Analogy:

It’s like telling a taxi driver each turn instead of just giving the destination. One bad step and the trip fails.

---

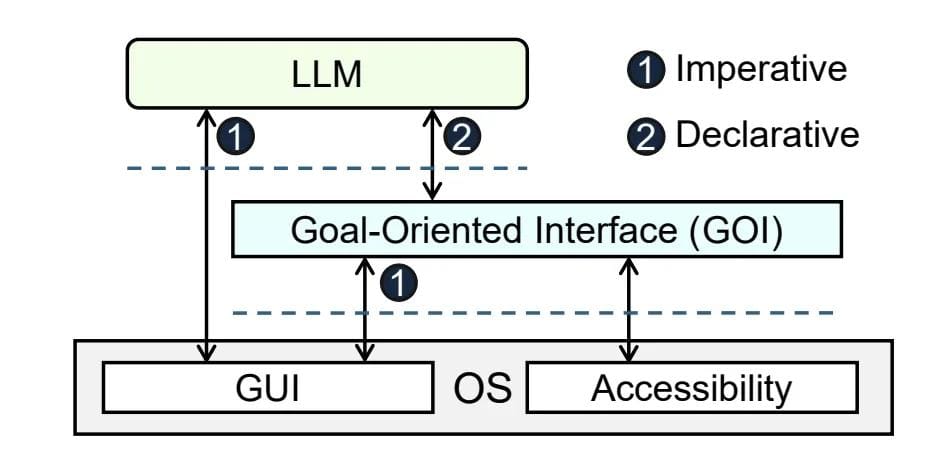

The Proposed Solution: Declarative Interaction via GOI

The researchers propose shifting the interface from imperative to declarative.

Enter the GUI-Oriented Interface (GOI), built on top of the existing GUI and OS accessibility mechanisms.

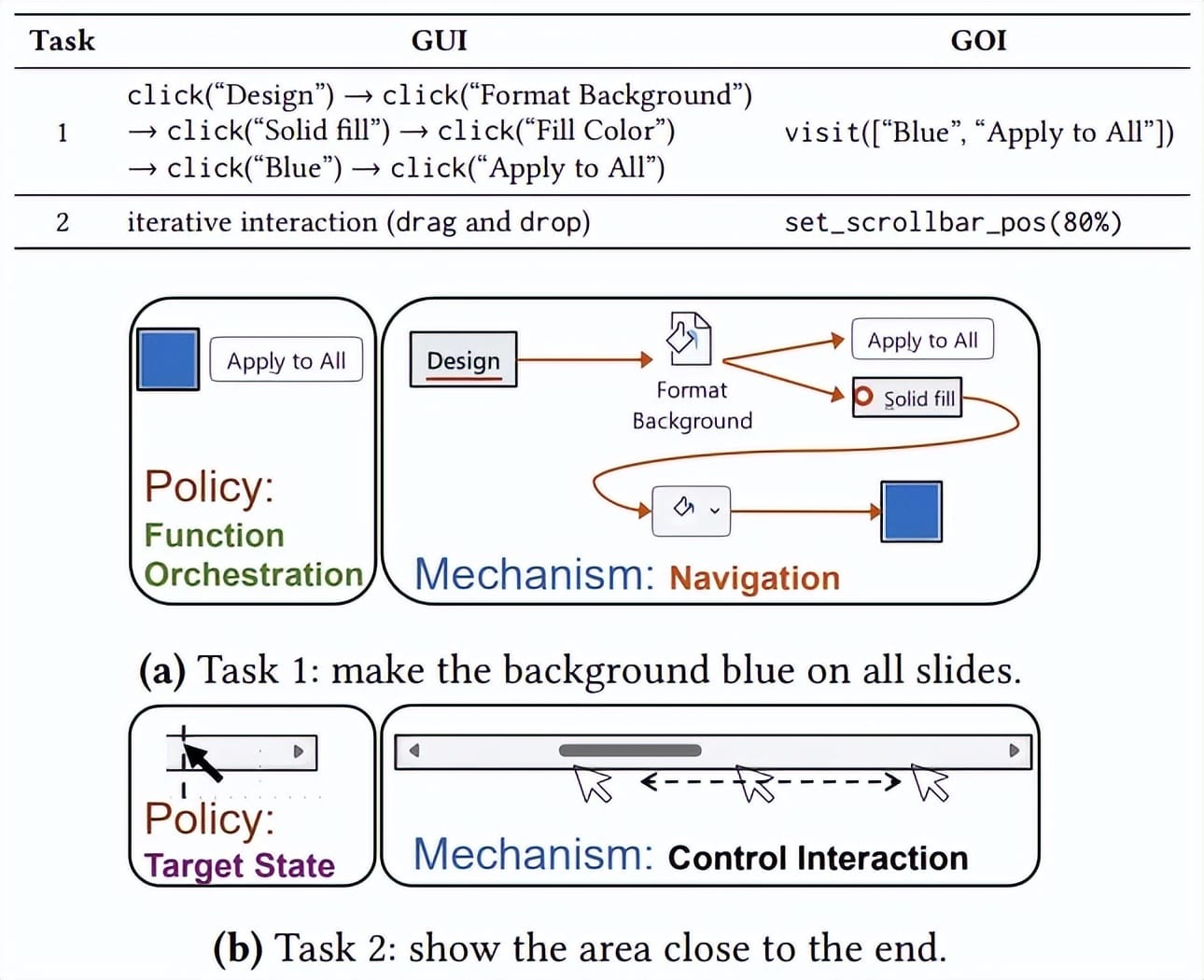

Policy–Mechanism Separation

- Policy (the “what”) — High-level semantic planning, orchestration (e.g., “Set all slide backgrounds to blue”).

- Mechanism (the “how”) — Navigation & clicking sequences (e.g., “Click ‘Design’ tab → Click ‘Format Background’…”).

With GOI, the LLM handles only the policy; GOI handles the mechanism.
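To make the split concrete, here is a minimal sketch. The names `Intent`, `CLICK_PATHS`, and `expand` are hypothetical illustrations, not part of GOI. Only the two clicks named above are included, because the rest of the sequence is elided in the source.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Intent:
    """Policy: what the LLM wants, stated without any GUI steps."""
    function: str
    value: str


# Mechanism: click sequences owned by the interface layer, never by the LLM.
# Hypothetical table; only the two steps quoted in the article are listed.
CLICK_PATHS = {
    "slide_background": ["Design", "Format Background"],
}


def expand(intent: Intent) -> list[str]:
    """Turn a declarative intent into the imperative steps that realize it."""
    steps = [f"click {name!r}" for name in CLICK_PATHS[intent.function]]
    steps.append(f"set {intent.value!r}")
    return steps


steps = expand(Intent("slide_background", "blue"))
```

The LLM only ever produces the `Intent`; everything inside `expand` is the part it no longer has to get right step by step.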

---

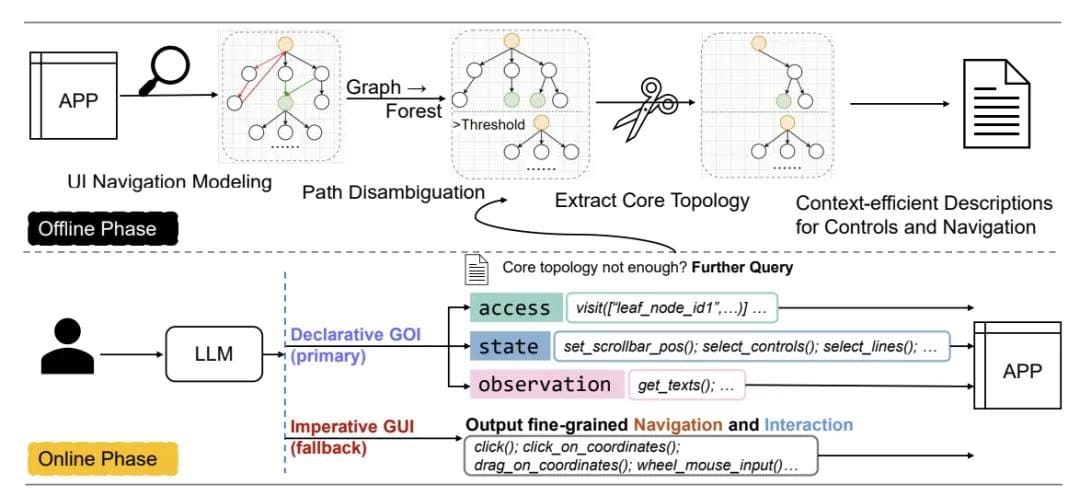

How GOI Works

Declarative Primitives

GOI reduces GUI interaction to three key primitives:

- Access — Declare the target control directly (`visit` by ID).

- State — Set end-state (`set_scrollbar_pos()`, `select_lines()`).

- Observation — Get structured info (`get_texts()`) without pixel parsing.

LLMs tell GOI the destination; GOI drives.
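The three primitives can be sketched against a toy in-memory document. This is an illustration only: the article gives the function names `visit`, `set_scrollbar_pos`, `select_lines`, and `get_texts`, but the `ToyDocument` class, the parameter lists, and the return values below are assumptions.

```python
class ToyDocument:
    """In-memory stand-in for an application window (hypothetical, not GOI)."""

    def __init__(self, lines, controls):
        self.lines = list(lines)
        self.controls = dict(controls)  # control ID -> label
        self.scroll_pos = 0.0
        self.selection = (0, 0)         # (0, 0) means nothing is selected
        self.visited = []

    # Access: name the target control by ID; the caller walks no menus.
    def visit(self, control_id):
        if control_id not in self.controls:
            raise KeyError(f"unknown control ID: {control_id}")
        self.visited.append(control_id)
        return self.controls[control_id]

    # State: declare the end state instead of dragging or scrolling repeatedly.
    def set_scrollbar_pos(self, fraction):
        if not 0.0 <= fraction <= 1.0:
            raise ValueError("fraction must be in [0, 1]")
        self.scroll_pos = fraction

    def select_lines(self, start, end):
        if not 1 <= start <= end <= len(self.lines):
            raise ValueError("line range out of bounds")
        self.selection = (start, end)

    # Observation: return the selected text as data, with no pixel parsing.
    def get_texts(self):
        start, end = self.selection
        return self.lines[start - 1:end]


doc = ToyDocument(["Title", "Intro", "Body"], {17: "Bold"})
doc.select_lines(2, 3)      # state
label = doc.visit(17)       # access
doc.set_scrollbar_pos(0.5)  # state
selected = doc.get_texts()  # observation
```

Each call states an outcome rather than a gesture, so one call stands in for what would otherwise be several observe-then-act rounds.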

---

GOI Implementation

Stage 1: Offline Mapping

- GOI explores an application's controls (e.g., in MS Word) through the OS accessibility mechanisms.

- It builds a UI Navigation Graph, then applies loop-removal and cost-based algorithms to it.

- The output is an ambiguity-free forest structure: every function is reachable via a single deterministic path.
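One way to picture the graph-to-forest step is a cheapest-path tree. The sketch below uses Dijkstra's algorithm as a stand-in; the article only says "loop-removal + cost-based algorithms", so the algorithm choice, the `build_forest` name, the edge costs, and the sample graph are all assumptions.

```python
import heapq


def build_forest(graph, roots):
    """Return {control: parent}, keeping one cheapest path to each control.

    graph: {control: [(neighbor, cost), ...]}
    roots: entry points such as top-level windows (their parent is None).
    """
    best = {root: 0 for root in roots}
    parent = {root: None for root in roots}
    heap = [(0, root) for root in roots]
    heapq.heapify(heap)
    while heap:
        cost, node = heapq.heappop(heap)
        if cost > best[node]:
            continue  # stale heap entry; a cheaper path was already found
        for neighbor, step_cost in graph.get(node, []):
            new_cost = cost + step_cost
            if new_cost < best.get(neighbor, float("inf")):
                best[neighbor] = new_cost
                parent[neighbor] = node
                heapq.heappush(heap, (new_cost, neighbor))
    return parent


# Hypothetical navigation graph with a loop and two routes to "Font dialog".
graph = {
    "Main": [("Home", 1), ("Design", 1)],
    "Home": [("Font dialog", 1), ("Main", 1)],  # back edge forms a loop
    "Design": [("Format Background", 1), ("Font dialog", 3)],
}
forest = build_forest(graph, roots=["Main"])
```

Because every control keeps exactly one parent, the loop through `Main` and the costlier route via `Design` both disappear, which is the "single deterministic path" property described above.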

Stage 2: Online Execution

- The LLM works from the textual map and issues declarative primitives.

- GOI executes navigation & interaction automatically.
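A rough sketch of the online stage, assuming the offline stage produced a `{control: parent}` forest. The helper names `render_map` and `path_to`, the indentation format of the map, and the sample controls are hypothetical; the article does not specify any of them.

```python
def render_map(parent):
    """Indent each control under its parent so the forest fits in a prompt."""
    children = {}
    for node, par in parent.items():
        children.setdefault(par, []).append(node)

    lines = []

    def walk(node, depth):
        lines.append("  " * depth + node)
        for child in sorted(children.get(node, [])):
            walk(child, depth + 1)

    for root in sorted(children.get(None, [])):
        walk(root, 0)
    return "\n".join(lines)


def path_to(parent, target):
    """Follow parent links from the target to its root, then reverse them."""
    if target not in parent:
        raise KeyError(f"unreachable control: {target}")
    path = []
    node = target
    while node is not None:
        path.append(node)
        node = parent[node]
    return path[::-1]


# Hypothetical forest; in practice it would come from the offline stage.
parent = {
    "Main": None,
    "Design": "Main",
    "Format Background": "Design",
    "Home": "Main",
}
text_map = render_map(parent)                  # what the LLM reads
route = path_to(parent, "Format Background")   # what the executor walks
```

The LLM sees only `text_map` and names a target; the route is recovered mechanically, so a wrong intermediate click cannot originate from the model.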

---

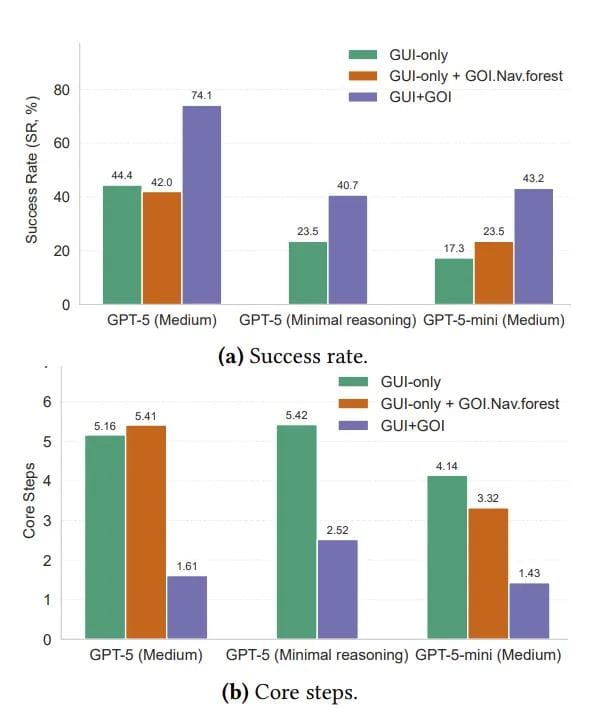

Evaluation Results

- Test suite: OSWorld-W (Word, Excel, PowerPoint).

- With GPT-5: the success rate rose from 44% to 74%, a gain of 30 percentage points.

- Efficiency: 61% of tasks succeeded with a single LLM call.

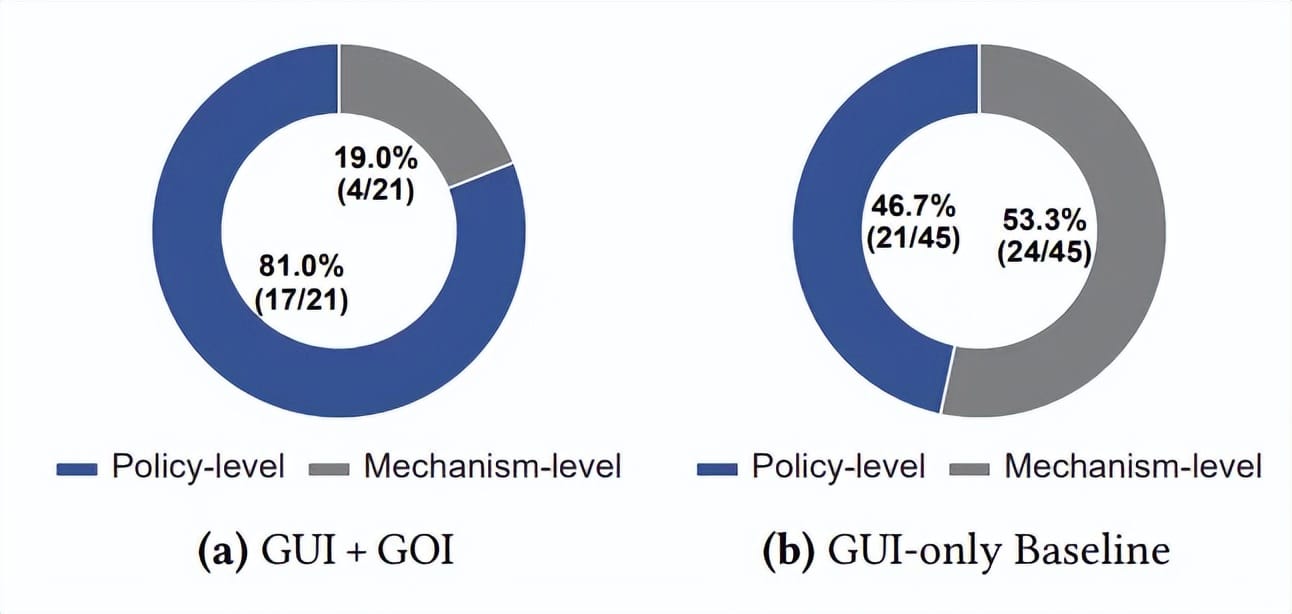

Failure Type Shift

- GUI baseline: 53.3% of failures came from mechanism-layer mistakes.

- GOI setup: 81% of failures now come from the strategy (policy) layer (misunderstanding semantics, misjudging functions).

---

Implications: Rethinking Interfaces for LLMs

Key point: GOI alleviates low-level mechanical burden, letting LLMs focus on semantics.

---

Should OSs & Apps Provide Native “LLM-Friendly” Declarative Interfaces?

Yes — potentially transformative.

Benefits

- Consistent interoperability across diverse environments.

- Higher reliability than pixel-based or parse-based automation.

- Security/governance via controlled API permission checks.

- Scalable complexity for multi-step AI tasks.

Challenges

- Standardization is needed to avoid fragmentation.

- Performance overhead is possible.

- Developer adoption and tooling support are required.

- Security risks arise if the APIs lack robust authentication and authorization.

Reference: arXiv:2510.04607

---

Final Takeaway

Embedding LLM-friendly declarative interfaces into OSs and apps could be a critical enabler for robust, general AI agents.

With GOI, the LLM names the destination and GOI drives the route.

The future may see widespread declarative APIs that lift AI control from low-level clicks to high-level commands, across productivity tools and beyond.

---

In short: GOI and declarative controls remove the GUI as a bottleneck, letting LLMs shine where they excel — semantic reasoning and strategic execution — while paving the way for smarter, faster, and more reliable AI automation.