# Datawhale Course

**Course:** AI Research Foundations

**Compiled by:** Datawhale

---

## Learn Artificial Intelligence from Top Experts

Google DeepMind, in collaboration with **University College London (UCL)**, has launched a free course — **AI Research Foundations**.

This course is designed to help learners:

- Gain a **deep understanding of Transformers**.

- Develop **hands-on experience** in building and fine-tuning modern language models.

It is now **fully available** on the Google Skills platform.

🔗 **Course Homepage:** [https://www.skills.google/collections/deepmind](https://www.skills.google/collections/deepmind)

---

## Guided by a Co-Lead of Gemini

**Oriol Vinyals**:

- Vice President of Research & Head of Deep Learning at Google DeepMind

- Co-Lead for Gemini

- Co-inventor of the *seq2seq* model

- Key contributor to TensorFlow development

- Led the **AlphaStar** project that defeated top StarCraft II players

He is widely recognized as a **leading authority** in both **deep learning** and **reinforcement learning**.

---

## Course Overview

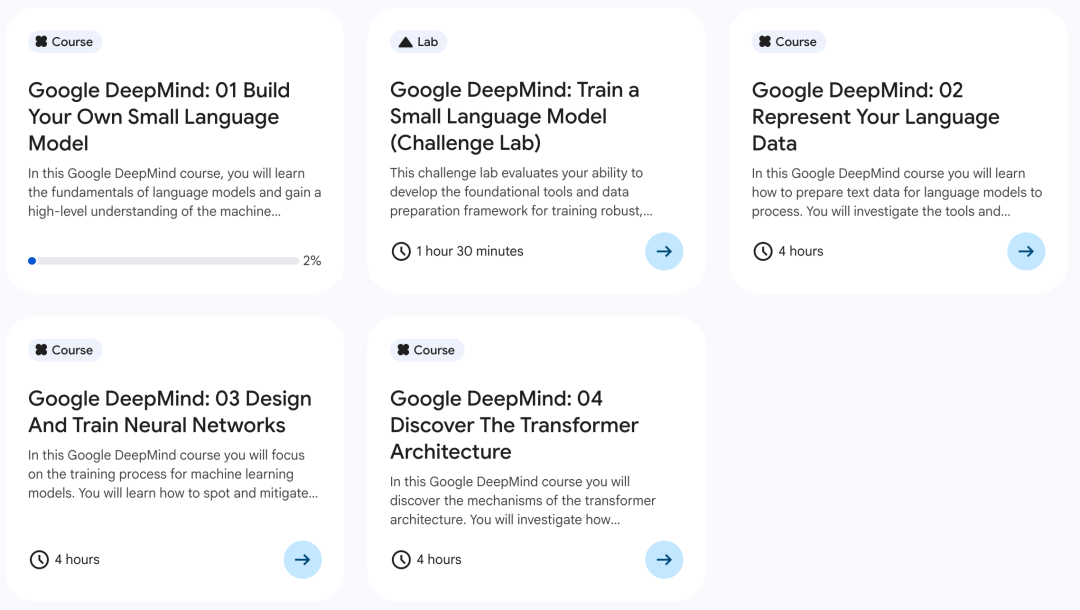

### **Course 01: Build a Small Language Model Step-by-Step**

Focus: Fundamentals of language models + complete ML development workflow.

**You will learn:**

1. **Language Model Fundamentals**

- Predicting the next word

- The role of probability in modeling language patterns

2. **Traditional Methods & Limitations: N-gram Models**

- How N-gram models work

- Limitations in capturing long-range dependencies

3. **Modern Architectures: Transformers**

- Core architecture powering today’s LLMs

- Comparison with N-grams

4. **Model Training & Evaluation**

- From data preparation to training a Small Language Model (SLM)

- Techniques for evaluating performance
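To make the next-word prediction and N-gram topics above concrete, here is a minimal sketch of a bigram model. It is not taken from the course materials; the function names `train_bigram` and `next_word_probs` and the toy corpus are assumptions made for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(tokens):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def next_word_probs(counts, prev):
    """Turn the raw follow-up counts for `prev` into probabilities."""
    followers = counts.get(prev, Counter())
    total = sum(followers.values())
    if total == 0:
        return {}  # `prev` was never seen with a following word
    return {word: c / total for word, c in followers.items()}


# Toy corpus (illustrative only).
corpus = "the cat sat on the mat and the cat slept".split()
model = train_bigram(corpus)
probs = next_word_probs(model, "the")
print(probs)
```

Because a bigram model only looks one word back, it cannot use context from earlier in the sentence, which is the long-range dependency limitation the outline mentions.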

---

### **Course 02: Representing Your Language Data**

Focus: Converting text into machine-readable formats with **Tokenization** and **Embeddings**.

**You will learn:**

1. **Data Processing Workflow** — Preprocess raw text for use in language models.

2. **Tokenization Techniques** — Characters, words, subwords; build a custom BPE tokenizer.

3. **Representing Meaning** — Map text into vectors/matrices that capture meaning and relationships.

4. **Ethics & Bias** — Detect dataset biases and apply the *data cards* process for ethical AI datasets.
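As a rough illustration of the BPE tokenizer mentioned in the list above, the sketch below learns merge rules by repeatedly fusing the most frequent adjacent symbol pair. It is a simplified version written for this article, not the course's implementation; the helper names and the toy word frequencies are assumptions.

```python
from collections import Counter


def most_frequent_pair(words):
    """Return the most frequent adjacent symbol pair, or None if there is none."""
    pairs = Counter()
    for symbols, freq in words.items():
        for a, b in zip(symbols, symbols[1:]):
            pairs[(a, b)] += freq
    return max(pairs, key=pairs.get) if pairs else None


def merge_pair(words, pair):
    """Replace every occurrence of `pair` with a single merged symbol."""
    merged = {}
    for symbols, freq in words.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        key = tuple(out)
        merged[key] = merged.get(key, 0) + freq
    return merged


def train_bpe(word_freqs, num_merges):
    """Learn up to `num_merges` merge rules, starting from single characters."""
    words = {tuple(word): freq for word, freq in word_freqs.items()}
    merges = []
    for _ in range(num_merges):
        pair = most_frequent_pair(words)
        if pair is None:
            break
        words = merge_pair(words, pair)
        merges.append(pair)
    return merges, words


# Toy word frequencies (illustrative only).
merges, vocab = train_bpe(
    {"low": 5, "lower": 2, "newest": 6, "widest": 3}, num_merges=3
)
print(merges)
print(vocab)
```

Production tokenizers typically add details this sketch leaves out, such as byte-level fallback, end-of-word markers, and applying the learned merges to unseen text.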

---


### **Course 03: Designing and Training Neural Networks**

Focus: How to design, implement, and optimize neural networks.

**You will learn:**

1. **Training Mechanisms** — Backpropagation principles and how neural networks learn.

2. **Practical Implementation** — Code and evaluate a multilayer perceptron (MLP).

3. **Optimization & Troubleshooting** — Handle **overfitting** and **underfitting** effectively.

4. **Risk Awareness** — Consider potential risks, security concerns, and societal impacts.
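The backpropagation idea in the list above can be sketched on the smallest possible network: one input, one `tanh` hidden unit, and one linear output. This is an illustrative example rather than course code, and the parameter values, learning rate, and function names are assumptions. It applies the chain rule by hand, checks the result against finite differences, and then takes a few gradient descent steps.

```python
import math


def forward(params, x):
    """One tanh hidden unit followed by a linear output."""
    w1, b1, w2, b2 = params
    h = math.tanh(w1 * x + b1)
    y_hat = w2 * h + b2
    return h, y_hat


def loss_fn(params, x, y):
    """Squared error for a single training example."""
    _, y_hat = forward(params, x)
    return (y_hat - y) ** 2


def backprop(params, x, y):
    """Apply the chain rule from the loss back to each parameter."""
    w1, b1, w2, b2 = params
    h, y_hat = forward(params, x)
    d_y_hat = 2.0 * (y_hat - y)   # dL/dy_hat
    d_w2 = d_y_hat * h            # dL/dw2
    d_b2 = d_y_hat                # dL/db2
    d_h = d_y_hat * w2            # dL/dh
    d_z = d_h * (1.0 - h * h)     # through tanh: d tanh(z)/dz = 1 - tanh(z)^2
    d_w1 = d_z * x                # dL/dw1
    d_b1 = d_z                    # dL/db1
    return [d_w1, d_b1, d_w2, d_b2]


def numeric_grad(params, x, y, eps=1e-6):
    """Central finite differences, used only to sanity-check backprop."""
    grads = []
    for i in range(len(params)):
        up, down = list(params), list(params)
        up[i] += eps
        down[i] -= eps
        grads.append((loss_fn(up, x, y) - loss_fn(down, x, y)) / (2 * eps))
    return grads


# Arbitrary starting parameters and one training example (assumptions).
params = [0.5, 0.0, -0.3, 0.1]
x, y = 1.5, 0.8

analytic = backprop(params, x, y)
numeric = numeric_grad(params, x, y)
max_gap = max(abs(a - n) for a, n in zip(analytic, numeric))

# A few plain gradient descent steps with a hypothetical learning rate of 0.1.
initial_loss = loss_fn(params, x, y)
for _ in range(20):
    grads = backprop(params, x, y)
    params = [p - 0.1 * g for p, g in zip(params, grads)]
final_loss = loss_fn(params, x, y)
print(max_gap, initial_loss, final_loss)
```

A real MLP has many units per layer and is usually trained with an automatic differentiation library, but the gradient flow follows the same chain-rule pattern.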

---

### **Course 04: Exploring the Transformer Architecture**

Focus: The heart of modern LLMs — the **Transformer**.

**You will learn:**

1. **Transformer Mechanisms** — How models process prompts and predict next tokens.

2. **Attention Mechanism** — Visualize attention weights; understand masked & multi-head attention.

3. **Neural Network Techniques** — Additional concepts to improve NLP model performance.
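The masked attention item above can be illustrated with a single-head, pure-Python sketch of causal scaled dot-product attention. It is a simplified example written for this article, not the course's code; the function names and toy vectors are assumptions, and real implementations use learned projection matrices and several heads running in parallel.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def causal_attention(queries, keys, values):
    """Single-head scaled dot-product attention with a causal mask."""
    d = len(keys[0])
    n = len(keys)
    outputs, weights = [], []
    for i, q in enumerate(queries):
        # Causal mask: position i may only attend to positions 0..i.
        scores = [
            sum(a * b for a, b in zip(q, keys[j])) / math.sqrt(d)
            for j in range(i + 1)
        ]
        # Masked (future) positions receive exactly zero weight.
        row = softmax(scores) + [0.0] * (n - i - 1)
        weights.append(row)
        outputs.append([
            sum(row[j] * values[j][k] for j in range(n))
            for k in range(len(values[0]))
        ])
    return outputs, weights


# Toy 3-token sequence with 2-dimensional vectors (illustrative only).
q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
k = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
v = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
outputs, weights = causal_attention(q, k, v)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` is the kind of attention pattern that visualizations typically plot as a heatmap: the upper triangle is zero because a token cannot look at tokens that come after it.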

---
