Google TPU Explained: How Meta’s Embrace and Nvidia’s Plunge Tie Back to This “Self-Rescue Chip”


Alphabet and Google CEO Sundar Pichai

---

With its stock "dropping relentlessly," even NVIDIA felt compelled to proclaim:

> “We are ahead of the entire industry by a generation.”

The story begins with Warren Buffett's "farewell masterpiece": Berkshire Hathaway's first purchase of shares in Alphabet, Google's parent company. Meanwhile, reports suggest that Meta, a major NVIDIA customer, may deploy Google's TPUs in its own data centers by 2027 and could start renting TPU compute through Google Cloud as early as 2026.

In an urgent statement, NVIDIA asserted that GPUs are "far superior" to ASICs (application-specific integrated circuits) in performance, flexibility, and portability, and stressed that Google's self-developed TPU cannot match the versatility of GPUs. A Google spokesperson responded that the company would keep working with NVIDIA and would continue to support both TPUs and NVIDIA GPUs.

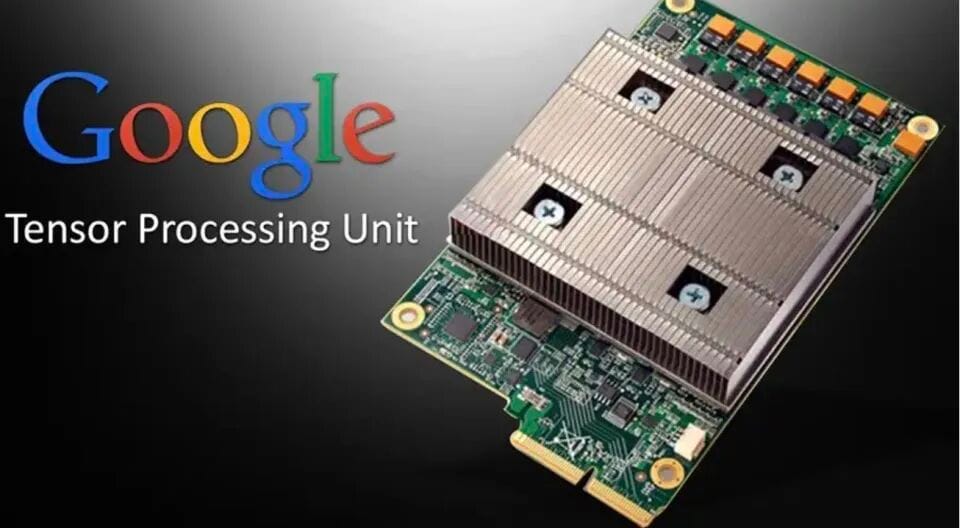

The TPU, a "lifeline project" launched a decade ago to break through AI compute bottlenecks, has become one of Google's economic pillars.

As a custom ASIC, the TPU has the potential to challenge NVIDIA's dominance. Google's approach is not to compete head-to-head on single-chip performance but to redefine AI infrastructure through ultra-large-scale systems.

---

01. TPU’s Past and Present

TPU v1

Google launched the TPU project in 2015 out of necessity:

> Without specialized hardware, future business growth would be unsustainable.

The Problem

- Core services like Search and Ads generate massive requests.

- Widespread adoption of deep learning models threatened to send data center energy costs soaring, potentially 10× globally.

- Buying ever more GPUs could not keep up with demand, and the cost would be astronomical.

- GPUs were (at the time) better suited to training, but not energy-efficient enough for real-time inference.

Strategic Decision

- Develop an ASIC accelerator focused on high‑efficiency matrix operations.

- Deploy at massive scale across data centers.

Milestones

- TPU v1 (2016): Supported Google Translate & parts of Search → ASIC approach proven viable.

- Transformer paper (2017): dense matrix operations and extreme parallelism suited the TPU perfectly, so Google committed to owning the full stack:

  - Frameworks and compilers

  - Chip architecture and topology

  - Cooling systems

- The TPU became Google's AI foundation, used both to train its largest models and to embed AI cheaply into every product.

---

Growth Phases

- TPU v2, v3: Cloud availability for external customers.

- TPU v4 (2021):

  - 4,096 chips linked into one supernode (pod)

  - 2D/3D torus topology → near-lossless inter-chip communication

  - Enabled training of the 540B-parameter PaLM model

- TPU v5p (2023–2024):

  - Roughly double the performance of v4

  - Elastic node architecture → scales to ~9,000 chips on demand

  - Enterprise adoption by Meta and Anthropic
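To make the torus idea concrete, here is a minimal Python sketch (not Google's implementation) of why wraparound links shorten paths between chips. It assumes a hypothetical 16 × 16 × 16 layout, which matches the 4,096-chip v4 pod size above but is not the actual physical arrangement, and it counts shortest-path hops with and without wraparound.

```python
def ring_distance(a: int, b: int, size: int, wrap: bool) -> int:
    """Hops between positions a and b along one axis with `size` chips."""
    d = abs(a - b)
    # A wraparound link lets traffic go "the other way round" the ring.
    return min(d, size - d) if wrap else d


def hops(src, dst, dims, wrap=True):
    """Shortest-path hop count between two chips in a 3D mesh (wrap=False) or torus."""
    return sum(ring_distance(s, d, n, wrap) for s, d, n in zip(src, dst, dims))


def diameter(dims, wrap=True):
    """Worst-case hop count between any two chips."""
    return sum(n // 2 if wrap else n - 1 for n in dims)


def neighbors(coord, dims):
    """The six directly linked chips of `coord` in a 3D torus."""
    result = []
    for axis, n in enumerate(dims):
        for step in (-1, 1):
            nxt = list(coord)
            nxt[axis] = (nxt[axis] + step) % n
            result.append(tuple(nxt))
    return result


if __name__ == "__main__":
    dims = (16, 16, 16)  # hypothetical layout: 16 * 16 * 16 = 4,096 chips
    corner, far = (0, 0, 0), (15, 15, 15)
    print(hops(corner, far, dims, wrap=False))  # 45 hops in a plain mesh
    print(hops(corner, far, dims, wrap=True))   # 3 hops via wraparound links
    print(diameter(dims, wrap=False), diameter(dims))  # 45 24
    print(neighbors(corner, dims))
```

In this toy layout, wraparound cuts the worst-case distance from 45 hops to 24. Real pods add optical switching and routing details that the sketch ignores.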

---

2024 — TPU v6 (“Trillium”): Inference Era

With Trillium, Google targets inference cost, which has become the largest expense in AI operations.

Key Improvements

- Peak compute per chip: 4.7× that of TPU v5e

- HBM capacity and bandwidth: doubled

- KV cache handling: optimized

- Inter-chip interconnect (ICI) bandwidth: doubled

- Energy efficiency: 67% higher than v5e

Goal: “the most cost‑efficient commercial engine” for inference.
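Memory capacity and KV cache handling matter for inference because a transformer keeps one key vector and one value vector per layer, per KV head, for every token in context. The sketch below applies that standard formula. The model shape (80 layers, 8 KV heads, head dimension 128) and the 128K-token context are hypothetical examples, not a Gemini configuration.

```python
def kv_cache_bytes(layers, kv_heads, head_dim, tokens, bytes_per_elem):
    # Factor 2: one key vector and one value vector per layer, head and token.
    return 2 * layers * kv_heads * head_dim * tokens * bytes_per_elem


GiB = 1024 ** 3
shape = dict(layers=80, kv_heads=8, head_dim=128)  # hypothetical model shape

for name, nbytes in (("BF16", 2), ("FP8", 1)):
    size = kv_cache_bytes(**shape, tokens=128 * 1024, bytes_per_elem=nbytes)
    print(f"{name}: {size / GiB:.0f} GiB for one 128K-token sequence")
```

Under these assumptions, one long sequence needs 40 GiB in BF16 and 20 GiB in FP8. Halving the bytes per element halves the cache, which is why low-precision formats and memory capacity tend to be discussed together for serving.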

---

02. TPU v7 (Ironwood): From Catch-Up to Offense

What Ironwood Represents

- First dedicated inference chip in TPU history.

- Targets massive‑scale online inference.

- Competes directly with NVIDIA Blackwell series.

Specs vs B200 GPU:

- FP8 compute: 4.6 petaFLOPS (B200: 4.5)

- Memory: 192 GB HBM3e at 7.4 TB/s (B200: 8 TB/s)

- Inter-chip bandwidth: 9.6 Tbps (NVIDIA: 14.4 Tbps). Ironwood is lower per chip, but Google's system topology is designed to offset the gap at scale.
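The per-chip figures can be sanity-checked with a little arithmetic. The sketch below uses only the rounded numbers from the list. It converts link bandwidth from terabits to terabytes per second, and it computes each chip's ratio of peak compute to HBM bandwidth, a roofline-style "ridge point". That ratio is the number of FLOPs a workload must perform per byte read from memory before it becomes compute-bound rather than memory-bound.

```python
SPECS = {
    # name: (FP8 petaFLOPS, HBM TB/s, inter-chip Tbps); rounded values from the text
    "Ironwood": (4.6, 7.4, 9.6),
    "B200": (4.5, 8.0, 14.4),
}


def tbps_to_tbytes(tbps: float) -> float:
    """Convert terabits per second to terabytes per second (8 bits per byte)."""
    return tbps / 8


def ridge_point(pflops: float, hbm_tbytes: float) -> float:
    """FLOPs the chip can perform per byte loaded from HBM at peak rates."""
    return (pflops * 1e15) / (hbm_tbytes * 1e12)


for name, (pf, hbm, link) in SPECS.items():
    print(f"{name}: link {tbps_to_tbytes(link):.1f} TB/s, "
          f"ridge point ~{ridge_point(pf, hbm):.0f} FLOPs/byte")
```

Both chips land at a few hundred FLOPs per byte. Token-by-token decoding usually has far lower arithmetic intensity than that, so it tends to be limited by memory bandwidth rather than peak FLOPS. This is one reason bandwidth figures feature so prominently in inference comparisons.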

---

System-Level Power

- Pods of 9,216 chips form a single supernode

- FP8 peak: 42.5 exaFLOPS per pod

- Up to 118× performance in certain workloads

- 2D/3D torus topology plus optical circuit switches (OCS):

  - Direct chip-to-chip links

  - OCS reroutes instantly around failed nodes

- 99.999% uptime (under 6 minutes of downtime per year)

- Systems liquid‑cooled for stability & efficiency.
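The pod-level headline numbers follow from the per-chip figures. This sketch multiplies the chip count by the rounded 4.6-petaFLOPS per-chip FP8 peak. It also back-solves the per-chip peak implied by the 42.5 exaFLOPS figure and converts "five nines" availability into minutes of downtime per year, assuming a 365.25-day year.

```python
CHIPS_PER_POD = 9_216
FP8_PFLOPS_PER_CHIP = 4.6  # rounded per-chip figure from the spec list


def pod_peak_exaflops(chips: int, pflops_per_chip: float) -> float:
    """Aggregate peak: chips x per-chip peak (1 exaFLOPS = 1,000 petaFLOPS)."""
    return chips * pflops_per_chip / 1_000


def downtime_minutes_per_year(availability: float, days: float = 365.25) -> float:
    """Allowed downtime for a given availability, assuming a 365.25-day year."""
    return (1 - availability) * days * 24 * 60


print(f"Pod FP8 peak: {pod_peak_exaflops(CHIPS_PER_POD, FP8_PFLOPS_PER_CHIP):.1f} EF")
# Per-chip peak implied by the 42.5 EF headline (the 4.6 above is rounded).
print(f"Implied per-chip peak: {42.5 * 1_000 / CHIPS_PER_POD:.3f} PF")
print(f"Five nines: {downtime_minutes_per_year(0.99999):.2f} min/year")
```

This prints about 42.4 EF, an implied 4.612 PF per chip, and 5.26 minutes per year. These results are consistent with the 42.5 exaFLOPS and "under 6 minutes" figures once rounding is accounted for.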

---

Inference Advantages

- 1.77 PB of shared HBM per pod

- Low communication overhead → high KV cache hit rates

- 30–40% lower inference costs vs flagship GPUs
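The 1.77 PB figure is simply the pod's chip count multiplied by the per-chip HBM. The sketch below reproduces it. It then estimates an idealized ceiling on how many tokens of KV cache the pool could hold, assuming a hypothetical 320 KiB per token (the real footprint depends on the model) and ignoring the memory that weights and activations occupy in practice.

```python
CHIPS_PER_POD = 9_216
HBM_GB_PER_CHIP = 192

# Decimal units: 1 GB = 10**9 bytes, 1 PB = 10**15 bytes.
pod_hbm_bytes = CHIPS_PER_POD * HBM_GB_PER_CHIP * 10**9
print(f"Pooled HBM per pod: {pod_hbm_bytes / 10**15:.2f} PB")  # 1.77 PB

# Hypothetical KV cache footprint per token; the real value depends on the model.
KV_BYTES_PER_TOKEN = 320 * 1024
# Idealized ceiling: pretend all HBM is free for KV cache (weights ignored).
max_tokens = pod_hbm_bytes // KV_BYTES_PER_TOKEN
print(f"Idealized KV cache capacity: {max_tokens:,} tokens")
```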

---

Software Stack

- MaxText framework

- GKE topology-aware scheduling

- Prefix-cache-aware routing → up to 96% lower time-to-first-token latency
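Prefix-cache-aware routing sends each request to the serving replica most likely to already hold the KV cache for its prompt prefix. This lets the replica skip recomputing that shared prefix, which is where the time-to-first-token savings come from. The sketch below is a simplified illustration, not the algorithm GKE actually uses. It assumes a hypothetical `Replica` type that tracks the token prefixes it has cached. Replicas are scored by their longest reusable prefix, and ties go to the replica with the lower load.

```python
from dataclasses import dataclass, field


@dataclass
class Replica:
    name: str
    load: int = 0                                               # requests in flight
    cached: list[list[int]] = field(default_factory=list)       # cached token prefixes


def common_prefix_len(a: list[int], b: list[int]) -> int:
    """Number of leading tokens two sequences share."""
    n = 0
    for x, y in zip(a, b):
        if x != y:
            break
        n += 1
    return n


def best_cached_prefix(replica: Replica, tokens: list[int]) -> int:
    return max((common_prefix_len(p, tokens) for p in replica.cached), default=0)


def route(replicas: list[Replica], tokens: list[int]) -> Replica:
    """Pick the replica with the longest reusable prefix; break ties by lower load."""
    chosen = max(replicas, key=lambda r: (best_cached_prefix(r, tokens), -r.load))
    chosen.load += 1
    chosen.cached.append(list(tokens))  # assume the replica now caches this prompt
    return chosen


if __name__ == "__main__":
    system_prompt = [101, 7, 7, 42]  # hypothetical token ids of a shared prompt
    a, b = Replica("tpu-a"), Replica("tpu-b", load=3)
    b.cached.append(system_prompt + [9])
    print(route([a, b], system_prompt + [5, 6]).name)  # tpu-b: reuses 4 cached tokens
    print(route([a, b], [1, 2, 3]).name)               # tpu-a: no overlap, lower load
```

A production router would also evict stale prefixes and weigh cache affinity against load more carefully. Without that balancing, one "hot" replica can end up receiving every request that shares a popular system prompt.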

---

Impact

Ironwood powers:

- Google Gemini series

- Anthropic's Claude models, with plans to use up to 1 million TPUs

---

03. Google–NVIDIA–Amazon: Three Paths

NVIDIA

- General-purpose GPU strategy

- CUDA ecosystem = strong lock‑in

- Weak points in inference efficiency

- Expensive → "NVIDIA tax"

Google

- Specialized TPU strategy

- Systolic array → optimized for deep learning

- Full-stack system control (chips, models, networks, data centers)

Amazon

- Cost-driven chip strategy

- Trainium & Inferentia for AWS infrastructure

- Flexible but focused on lowering cloud costs
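The systolic array at the heart of the TPU strategy is a grid of multiply-accumulate (MAC) cells through which operands flow in lockstep. Instead of returning to memory after each operation, every cell passes its data to a neighbor on each clock cycle. The cycle-by-cycle simulation below is a teaching sketch of a simple output-stationary variant, in which each cell owns one output element. It is not a model of any TPU generation's actual dataflow.

```python
def systolic_matmul(A, B):
    """Cycle-level simulation of an output-stationary systolic array computing A @ B.

    Cell (i, j) accumulates C[i][j]. Row i of A enters from the left edge delayed
    by i cycles; column j of B enters from the top edge delayed by j cycles, so
    matching A[i][k] and B[k][j] meet in cell (i, j) at cycle k + i + j.
    """
    M, K, N = len(A), len(B), len(B[0])
    acc = [[0] * N for _ in range(M)]
    a_reg = [[0] * N for _ in range(M)]  # A value currently held by each cell
    b_reg = [[0] * N for _ in range(M)]  # B value currently held by each cell
    for t in range(M + N + K - 2):
        # Shift A values one cell right and B values one cell down
        # (iterate backwards so each cell reads last cycle's neighbor value).
        for i in range(M):
            for j in range(N - 1, 0, -1):
                a_reg[i][j] = a_reg[i][j - 1]
        for j in range(N):
            for i in range(M - 1, 0, -1):
                b_reg[i][j] = b_reg[i - 1][j]
        # Feed skewed inputs at the edges; zeros outside the valid window.
        for i in range(M):
            k = t - i
            a_reg[i][0] = A[i][k] if 0 <= k < K else 0
        for j in range(N):
            k = t - j
            b_reg[0][j] = B[k][j] if 0 <= k < K else 0
        # Every cell performs one multiply-accumulate per cycle, in parallel.
        for i in range(M):
            for j in range(N):
                acc[i][j] += a_reg[i][j] * b_reg[i][j]
    return acc


if __name__ == "__main__":
    A = [[1, 2], [3, 4]]
    B = [[5, 6], [7, 8]]
    print(systolic_matmul(A, B))  # [[19, 22], [43, 50]]
```

The design point is data reuse. Each operand is fetched once and then passed cell to cell, so memory traffic stays low while all M × N cells work at the same time. This is why the structure suits the dense matrix multiplications that dominate deep learning.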

---

04. Escaping the “CUDA Tax”

The CUDA Tax

- High margins on GPU sales:

  - Manufacturing cost: a few thousand dollars

  - Sale price to cloud providers: tens of thousands of dollars

  - Gross margin: ~80%
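Gross margin is (price - cost) / price. The sketch below plugs in hypothetical round numbers that fit the ranges above: a $6,000 build cost and a $30,000 selling price. Neither figure is reported NVIDIA data. The example shows how "a few thousand" dollars of cost against "tens of thousands" of price produces a margin near 80%.

```python
def gross_margin(price: float, cost: float) -> float:
    """Fraction of the selling price left after the cost of goods sold."""
    return (price - cost) / price


price, cost = 30_000, 6_000  # hypothetical per-GPU figures, USD
print(f"Gross margin: {gross_margin(price, cost):.0%}")  # 80%
print(f"Markup over cost: {price / cost:.1f}x")         # 5.0x
```

In this example, a buyer who designs and builds its own accelerators avoids most of that 5× markup. That is the economic logic behind the "no CUDA tax" point below.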

Google’s Edge

- Own supply chain: design → manufacture → deploy

- No CUDA tax

- Pass savings to customers

- Inference cost as low as 20% of competitors

---

TPU@Premises Program

- TPUs deployed inside enterprise data centers

- Low-latency local inference

- Greater cost advantage

---

05. TPU as Google’s Economic Pillar

Evolution

From catching up in ecosystem maturity → leading in large-scale AI infrastructure.

Achievements

- Gemini 2.0 model trained entirely on TPUs

- Google Cloud AI revenue: $44 B annualized

- Increased cloud market share in AI workloads

Competitive Future

- The AI battle is shifting toward:

  - Cost

  - Inference scale

  - Infrastructure integration

Google’s decade-long TPU investment secures:

- Technical moat

- Cost leadership

- System stability advantages

---

Summary:

Google's TPUs have evolved from an internal efficiency fix into a cornerstone of its AI strategy, enabling system-level optimization, large reductions in inference cost, and competitive leadership in the AI infrastructure era. The shift reflects a broader trend in which full-stack integration becomes the decisive differentiator.