GPT-5 ≈ o3.1! OpenAI Reveals Thinking Mechanism: RL + Pretraining as the True Path to AGI

GPT‑5 as “o3.1” — Insights from Jerry Tworek

OpenAI’s Vice President of Research, Jerry Tworek, shared a fascinating perspective in his first podcast interview:

> In a sense, GPT‑5 can be regarded as o3.1.

As one of the key creators behind the o1 model, Jerry views GPT‑5 not as a direct successor to GPT‑4, but as an iteration of o3. OpenAI’s next goal is to create another “o3 miracle”: a model that thinks longer, reasons better, and autonomously interacts with multiple systems.

---

Key Themes from the Interview

Jerry’s hour‑long discussion with host Matt Turck covered:

- The evolution from o1 to GPT‑5 and approaches to reasoning

- OpenAI’s internal structure and information‑sharing philosophy

- The role of reinforcement learning (RL)

- His personal journey to OpenAI

- Vision for future models and AGI

---

“What is Model Reasoning?”

When asked “When we chat with ChatGPT, what is it thinking?” Jerry explained:

- Reasoning is the process of working toward an answer the model does not yet know, through calculation, information retrieval, or self‑learning.

- The chain of thought concept reveals the AI’s inner reasoning in human‑readable form.

- Early models required explicit prompts like “Let’s solve this step by step” to trigger logical thought chains.

- More time spent reasoning → better output, though most users dislike long waits.

- OpenAI now offers both high‑reasoning and low‑reasoning models for different use cases.

---

Evolution from o1 to o3

- o1: First official reasoning model — excelled at puzzles, largely a tech demo.

- o3: Structural shift — truly useful, capable of tool usage, persistent in finding answers.

- Jerry personally began to fully trust reasoning models starting with o3.

---

Jerry’s Path to OpenAI

Jerry’s journey was a blend of talent, curiosity, and career shifts:

- Early Talent

  - Grew up in Poland, gifted in math and science.

  - Studied math at the University of Warsaw, but grew tired of academic rigidity.

- Finance Career

  - Worked as a trader and founded a hedge fund, applying his mathematical skills to markets.

- The RL Spark

  - Inspired by DeepMind’s DQN agent, which showed true learning potential.

  - Joined OpenAI in 2019, starting on the robotic Rubik’s cube project.

- Leading o1 Development

  - Became widely known for advancing reasoning models.

---

Inside OpenAI’s Structure

- Combination of top‑down and bottom‑up approaches

- 3–4 core projects at a time, heavy investment in each

- Researchers have full visibility on all projects

- Transparency outweighs IP leak concerns:

  > The risk of sub‑optimal work due to lack of information is greater than IP leakage risk.

---

Reinforcement Learning — Core to OpenAI

Jerry sees RL as pivotal for both his career and OpenAI’s breakthroughs:

Understanding RL

- Like training a dog: good behavior → reward; bad behavior → penalty.

- Policy: Model’s decision function mapping observations to actions

- Environment: Interactive context that responds to actions
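
The reward, policy, and environment roles above can be sketched in a few lines of Python. This is a toy illustration only, not anything described in the interview: the `TwoArmedBandit` environment, the `PreferencePolicy` class, the payoff probabilities, and the epsilon‑greedy update are all hypothetical choices. The bandit has a single implicit observation, so the policy reduces to picking one of two actions.

```python
import random


class TwoArmedBandit:
    """Hypothetical environment: action 1 pays off more often than action 0."""

    def __init__(self, payoff_probs=(0.2, 0.8), seed=0):
        self.payoff_probs = payoff_probs
        self.rng = random.Random(seed)

    def step(self, action):
        # Reward of +1 for a "good" outcome, penalty of -1 otherwise.
        return 1.0 if self.rng.random() < self.payoff_probs[action] else -1.0


class PreferencePolicy:
    """Hypothetical policy: tracks the average reward seen for each action."""

    def __init__(self, n_actions=2, epsilon=0.1, seed=1):
        self.values = [0.0] * n_actions
        self.counts = [0] * n_actions
        self.epsilon = epsilon
        self.rng = random.Random(seed)

    def act(self):
        # Explore occasionally; otherwise pick the best-looking action.
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(len(self.values))
        return max(range(len(self.values)), key=lambda a: self.values[a])

    def update(self, action, reward):
        # Incremental running mean of the rewards for this action.
        self.counts[action] += 1
        self.values[action] += (reward - self.values[action]) / self.counts[action]


def train(steps=2000):
    env = TwoArmedBandit()
    policy = PreferencePolicy()
    for _ in range(steps):
        action = policy.act()          # policy chooses an action
        reward = env.step(action)      # environment responds with a reward
        policy.update(action, reward)  # rewards shift future behavior
    return policy


if __name__ == "__main__":
    trained = train()
    print("estimated value per action:", trained.values)
```

After training, the policy's estimate for the better‑paying action should be the higher of the two, which is the "reward good behavior" idea in miniature. Training a language model with RL involves far larger action spaces and different update rules.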

---

RL in OpenAI Models

- Models = pretraining + RL

- Example: GPT‑4 initially lacked coherence in long responses → solved via RLHF

- RL also drove unexpected programming competition wins

---

Challenges of RL

- More intricate than pretraining

- Higher chance of failure cases and bottlenecks

- Jerry’s analogy:

  > RL vs pretraining is like semiconductor manufacturing vs steel manufacturing: far more complex.

---

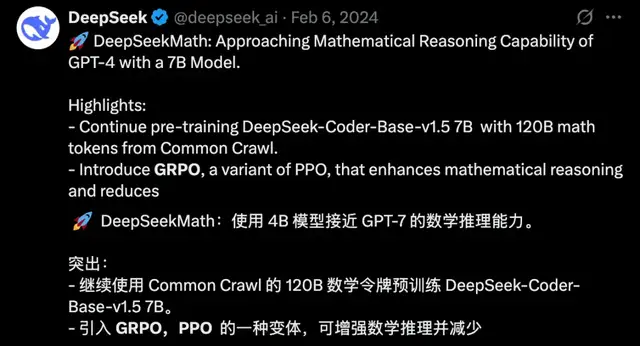

DeepSeek’s GRPO Contribution

- GRPO = Group Relative Policy Optimization

- Open‑sourcing GRPO accelerated RL adoption in U.S. labs for reasoning model training
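
The "group relative" part of GRPO can be illustrated with a short sketch. As commonly described, several responses are sampled for the same prompt, each is scored, and each response's advantage is its reward normalized against the group's mean and standard deviation, so no separate value model is needed. The function name `group_relative_advantages`, the use of the population standard deviation, and the `eps` term are assumptions made for this example; the clipped policy objective and KL penalty of the full method are not shown.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Normalize each reward against its own group (one group per prompt).

    Hypothetical helper: returns (r - group mean) / (group std + eps).
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)  # assumption: population standard deviation
    return [(r - mu) / (sigma + eps) for r in rewards]


if __name__ == "__main__":
    # Four sampled answers to one prompt, scored 1.0 if correct, else 0.0.
    print(group_relative_advantages([1.0, 0.0, 0.0, 1.0]))
```

Responses that beat their group's average receive a positive advantage and the rest receive a negative one. If every response in a group gets the same reward, all advantages are zero and that group contributes no learning signal.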

---

RL + Pretraining — Path to AGI

Jerry’s predictions:

- Agentization

  - Long‑duration reasoning enabling complex tasks

  - AI agents as inevitable trend

- Alignment as RL Problem

  - Continuous evolution alongside human civilization

- AGI Path

  - Pretraining and RL are mutually indispensable

  - Future changes will add components, not replace architectures

---


References

- https://x.com/mattturck/status/1978838545008927034

- https://www.youtube.com/watch?v=RqWIvvv3SnQ
