GPT-5.1-Codex-Max: Building Infinite Possibilities

🚀 Building More with GPT‑5.1‑Codex‑Max

Hot on the heels of yesterday’s Gemini 3 Pro release, OpenAI has introduced a new model: GPT‑5.1‑Codex‑Max. See the official announcement and the Hacker News discussion for details.

> Remember when GPT‑5 promised simpler, less confusing model names? That didn’t last!

---

📦 Availability and Use

- Currently available only through the Codex CLI coding agent

- Has already become the default Codex model

- Not yet accessible via API — expected to come soon

Quoted from OpenAI's announcement:

> Starting today, GPT‑5.1‑Codex‑Max will replace GPT‑5.1‑Codex as the default model in Codex surfaces.

> Unlike GPT‑5.1, which is a general‑purpose model, we recommend using GPT‑5.1‑Codex‑Max (and the Codex family) solely for agentic coding tasks in Codex or Codex‑like environments.

---

📊 Benchmark Performance

The release timing is notable: Gemini 3 Pro topped the benchmark charts only yesterday. This echoes a pattern from 2024, when OpenAI releases repeatedly coincided with Gemini launches.

SWE‑Bench Verified

- 76.5% (high reasoning)

- 77.9% (xhigh reasoning)

- Previous top scores: Gemini 3 Pro 76.2%, Claude Sonnet 4.5 77.2%

- OpenAI now leads by 0.7 percentage points over Claude Sonnet 4.5 (77.9% vs. 77.2%)

Terminal Bench 2.0

- 58.1% — beats Gemini 3 Pro (54.2%) and Sonnet 4.5 (42.8%)

- Full rankings are on the Terminal Bench 2.0 leaderboard

These gains strengthen GPT‑5.1‑Codex‑Max’s case for high‑complexity programming tasks, especially in agentic or automated development workflows.
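To make the margins concrete, here is a small sketch that recomputes them from the scores quoted above. The dictionary and function names are my own, chosen for illustration, and the numbers are copied from this post rather than measured independently.

```python
# Scores (percent) exactly as quoted in this post.
SWE_BENCH_VERIFIED = {
    "GPT-5.1-Codex-Max (xhigh)": 77.9,
    "GPT-5.1-Codex-Max (high)": 76.5,
    "Claude Sonnet 4.5": 77.2,
    "Gemini 3 Pro": 76.2,
}
TERMINAL_BENCH_2 = {
    "GPT-5.1-Codex-Max": 58.1,
    "Gemini 3 Pro": 54.2,
    "Claude Sonnet 4.5": 42.8,
}


def lead_over_runner_up(scores):
    """Return (leader, runner_up, margin in percentage points)."""
    ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
    (leader, top), (runner_up, second) = ranked[0], ranked[1]
    # Round to one decimal place to match the precision of the quoted scores.
    return leader, runner_up, round(top - second, 1)


for name, table in [("SWE-Bench Verified", SWE_BENCH_VERIFIED),
                    ("Terminal Bench 2.0", TERMINAL_BENCH_2)]:
    leader, runner_up, margin = lead_over_runner_up(table)
    print(f"{name}: {leader} leads {runner_up} by {margin} points")
```

Note that the SWE‑Bench Verified lead only holds at the xhigh setting: at high reasoning (76.5%), Codex‑Max sits below Claude Sonnet 4.5 (77.2%).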

---

🌍 Distribution and Monetization Synergy

Developers can pair this model’s capabilities with AiToEarn (see the official site), an open‑source framework that supports:

- Simultaneous publishing to Douyin, Kwai, WeChat, Bilibili, Rednote, Facebook, Instagram, LinkedIn, Threads, YouTube, Pinterest, and X (Twitter)

- Integration with AI generation, multi‑platform analytics, and AI model rankings

- Efficient workflows for global reach and monetization

---

🧠 Long‑Context & “Compaction” Innovation

The most intriguing technical feature is Codex‑Max’s multi‑window compaction:

> GPT‑5.1‑Codex‑Max is designed for extended, detailed work. It’s our first model natively trained to function across multiple context windows using a process called compaction, enabling coherent operation over millions of tokens in a single task.

> Compaction prunes history while preserving crucial context over long spans. In Codex applications, GPT‑5.1‑Codex‑Max automatically compacts sessions near the context limit, providing a fresh window until completion.

This echoes Claude Code’s automatic turn summarization but may represent a more advanced implementation.
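OpenAI has not published how compaction works internally, so the following is only a toy sketch of the general idea described in the quote: once a session nears its context budget, older history is collapsed into a summary and work continues in a fresh window. Everything here is an assumption for illustration, including the `Session` class, the word-count "tokenizer", the 80% threshold, and the `summarize` placeholder (a real agent would presumably ask the model itself to write the summary).

```python
from dataclasses import dataclass, field


def count_tokens(text: str) -> int:
    # Crude stand-in for a tokenizer: counts whitespace-separated words.
    return len(text.split())


def summarize(messages: list[str]) -> str:
    # Placeholder summarizer: keeps only the first three words of each message.
    heads = [" ".join(m.split()[:3]) for m in messages]
    return "SUMMARY: " + " | ".join(heads)


@dataclass
class Session:
    limit: int = 50          # hypothetical context budget, in "tokens"
    threshold: float = 0.8   # compact once usage exceeds this fraction
    keep_recent: int = 2     # newest messages carried over verbatim
    history: list[str] = field(default_factory=list)
    compactions: int = 0

    def used(self) -> int:
        return sum(count_tokens(m) for m in self.history)

    def add(self, message: str) -> None:
        self.history.append(message)
        if self.used() > self.limit * self.threshold:
            self.compact()

    def compact(self) -> None:
        # Split history into an older part to summarize and a recent tail to keep.
        cut = max(len(self.history) - self.keep_recent, 0)
        old, recent = self.history[:cut], self.history[cut:]
        if not old:
            return
        # The "fresh window" starts with one summary plus the recent tail.
        self.history = [summarize(old)] + recent
        self.compactions += 1


session = Session()
for step in range(10):
    session.add(f"step {step} " + "detail " * 8)
print(session.compactions, session.used(), session.history[0])
```

The design choice worth noticing is that the most recent messages survive verbatim while everything older is lossy. How well a real system chooses what to keep is exactly where a natively trained approach could differ from a bolt-on summarizer.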

---

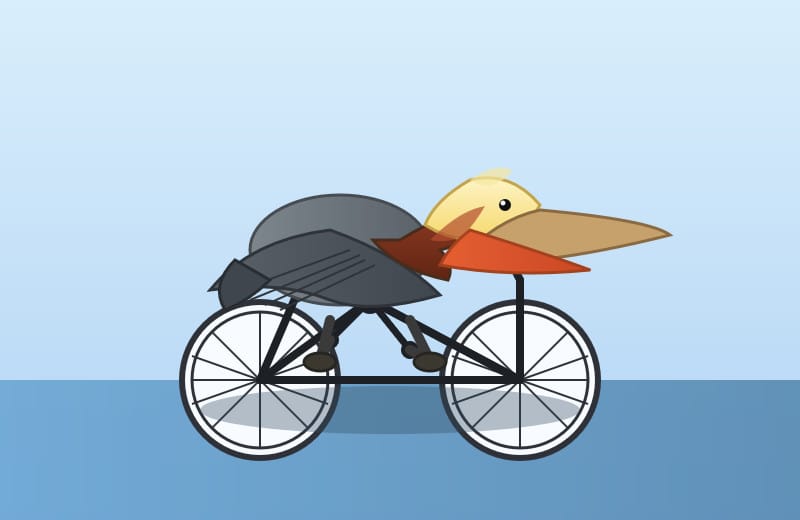

🎨 Quick Visual Test

I tested Codex‑Max via the Codex CLI:

- Prompt: `"Generate an SVG of a pelican riding a bicycle."`

- Thinking levels tested: medium and xhigh

- I also ran a longer version of the pelican benchmark prompt

---

💡 Potential for Large‑Scale Creative/Technical Projects

Combining multi‑window compaction with AiToEarn’s distribution capabilities could enable:

- Sustained, coherent long‑form output

- Simultaneous cross‑platform publishing

- Scalable monetization

---

⚡ Related News: GPT‑5.1 Pro Rollout

Also today: GPT‑5.1 Pro is available to all ChatGPT Pro users.

From the release notes:

> GPT‑5.1 Pro is rolling out today for all ChatGPT Pro users and is available in the model picker.

> GPT‑5 Pro will remain available as a legacy model for 90 days before retirement.

---

📌 Takeaway for Creators & Developers

- Version turnover is fast: GPT‑5 Pro was the current Pro model for only about three months before moving to legacy status

- Flexible workflows and multi‑channel distribution are critical

- Platforms like AiToEarn help quickly integrate new models, publish across channels, and track performance

The bottom line: GPT‑5.1‑Codex‑Max offers both top‑tier benchmark results and a long‑context advantage that could reshape how complex coding agents and sustained creative projects are built and distributed.