Helping AI Better Understand Context: Principles and Practice of MCP

MCP (Model Context Protocol): A Standard for AI Interaction with Tools and Data

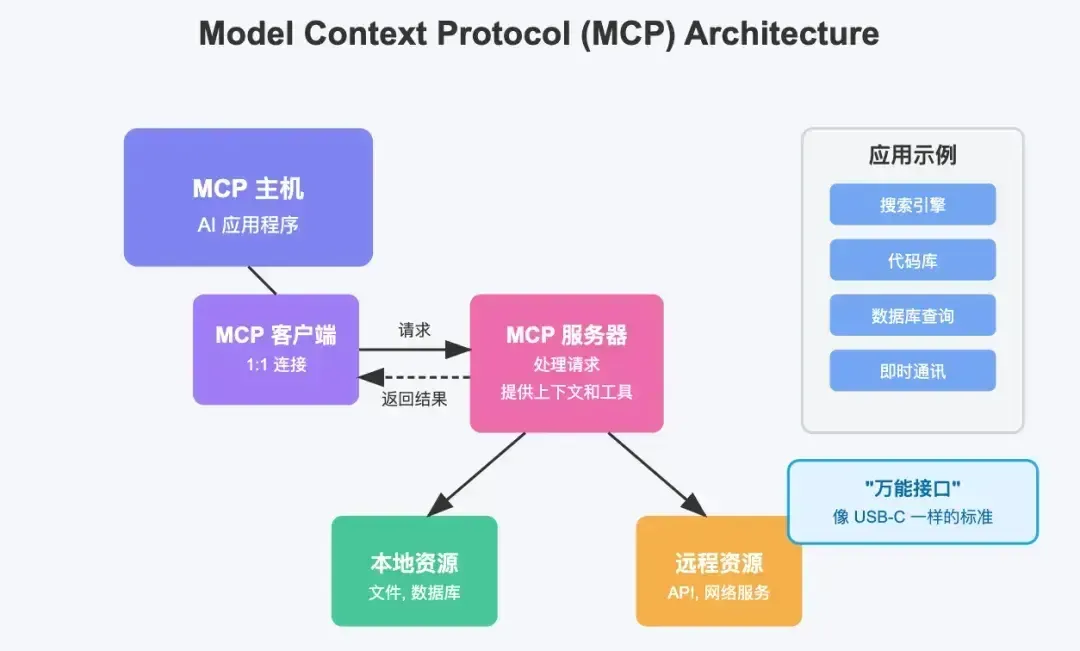

MCP is a unified protocol standard for enabling AI models to interact with external data sources and tools — similar to giving AI a USB-C–style universal interface.

This article explores the essence, value, usage, and development of MCP, complete with practical code and implementation examples.

---

I. What is MCP?

1. MCP — An Open, Consensus-Based Protocol

MCP is an open, general-purpose, consensus-based protocol led by Anthropic, the company behind Claude.

- Provides a standardized interface for AI models to connect seamlessly with various data sources and tools.

- Designed to replace fragmented agent integrations, reducing complexity and improving security.

- Helps developers avoid reinventing the wheel by leveraging a shared, open-source MCP ecosystem.

- Maintains context across apps and services for autonomous task execution.

> Analogy: MCP works like USB-C for AI — once you have a single standard, you can connect to everything.

---

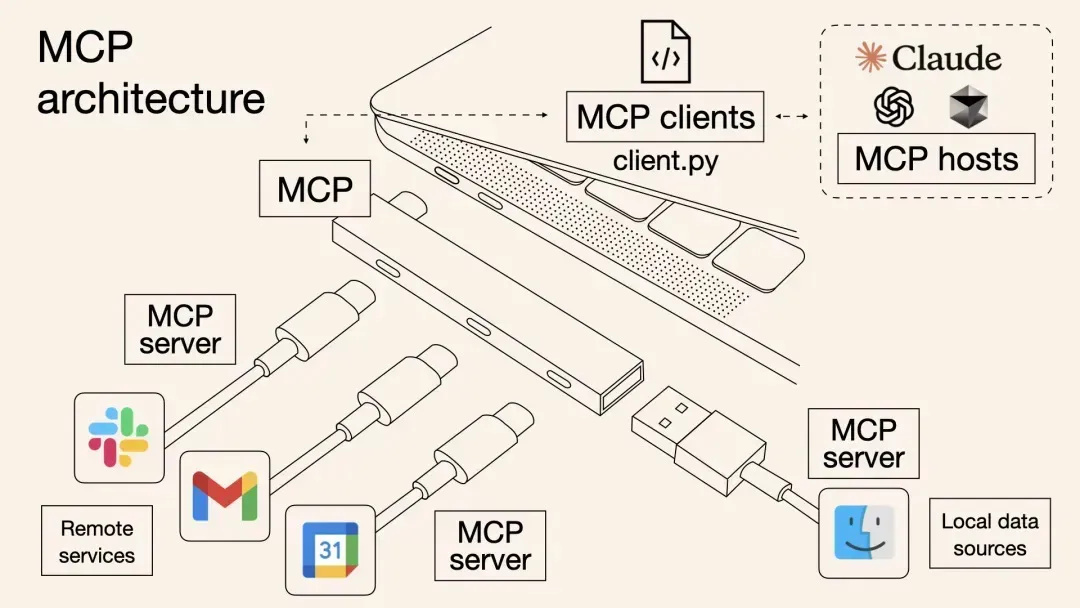

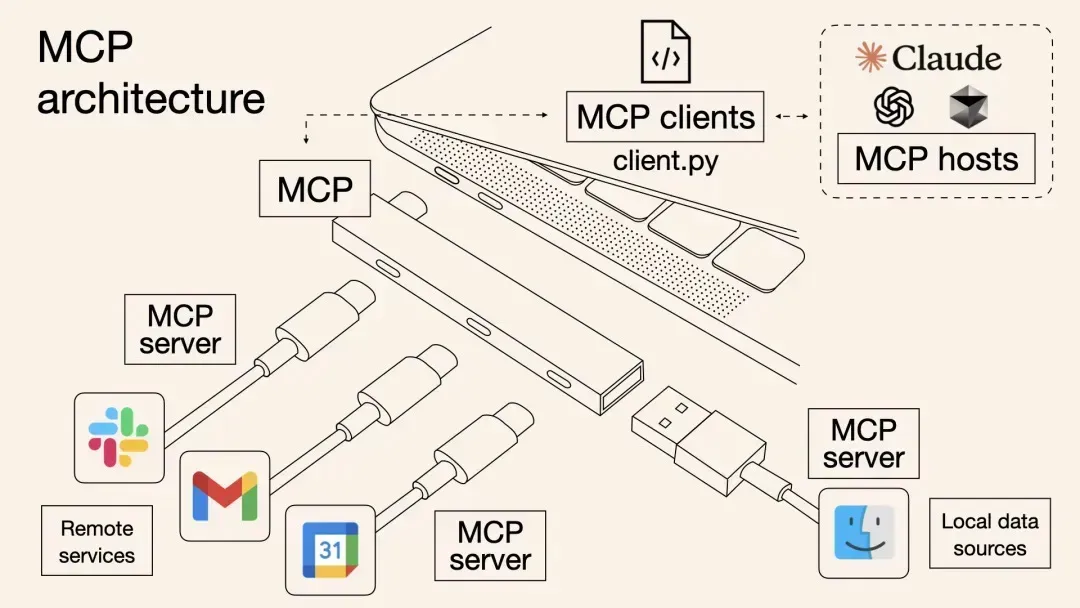

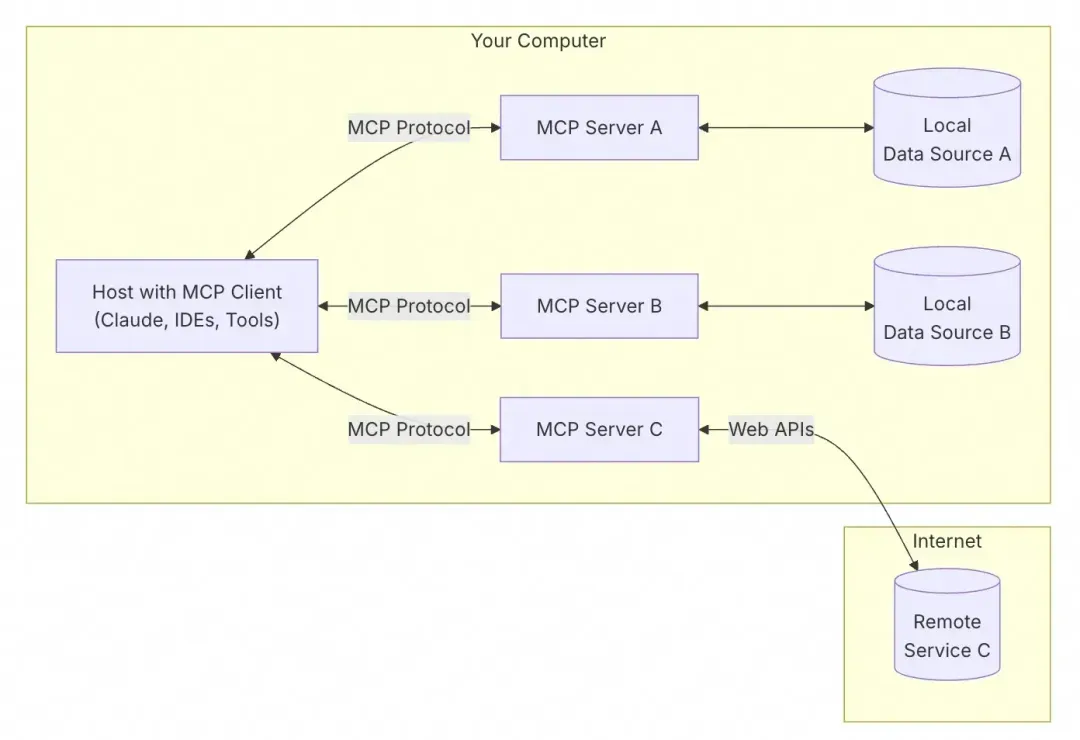

2. Core MCP Architecture

MCP follows a client–server model:

- MCP Hosts – AI applications (e.g., chatbots, AI IDEs).

- MCP Clients – Components within hosts maintaining direct server connections.

- MCP Servers – Provide context, tools, and prompts to clients.

- Local Resources – Files, databases on the local machine.

- Remote Resources – APIs, external data sources.
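To make the client-server exchange more concrete: MCP messages are JSON-RPC 2.0, and a client typically discovers a server's tools with `tools/list` and invokes one with `tools/call`. The sketch below only builds those request payloads. The tool name `query_database` and its `sql` argument are hypothetical, and the transport layer is left out.

```python
import itertools
import json
from typing import Any, Optional

# Each JSON-RPC request carries a unique id so the response can be matched to it.
_ids = itertools.count(1)


def make_request(method: str, params: Optional[dict[str, Any]] = None) -> dict[str, Any]:
    """Build a JSON-RPC 2.0 request of the kind an MCP client sends to a server."""
    request: dict[str, Any] = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        request["params"] = params
    return request


# Ask the server which tools it exposes.
list_tools = make_request("tools/list")

# Invoke one tool by name. "query_database" and its argument are hypothetical.
call_tool = make_request(
    "tools/call",
    {"name": "query_database", "arguments": {"sql": "SELECT 1"}},
)

print(json.dumps(list_tools))
print(json.dumps(call_tool, indent=2))
```

In a real host, the MCP client component would send these messages over the connection it maintains with the server and hand the responses back to the AI application.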

---

3. Why MCP is Needed

MCP solves the problems of fragmentation and integration complexity by:

- Standardizing AI–tool interoperability.

- Scaling AI-driven solutions without rewriting code for every integration.

- Enabling secure local processing without sending all data to the cloud.

---

II. Real-World Need for MCP

Without MCP, it is nearly impossible to do all of the following in one unified AI session:

- Search the web

- Send email

- Publish a blog

- Query a database

With MCP, integrating these capabilities into a single application becomes achievable.

Example Development Workflow With MCP:

- Query local database with AI in your IDE.

- Search GitHub Issues for bugs.

- Send PR feedback via Slack.

- Retrieve and modify AWS/Azure configs.

---

Everyday Example:

“Find the average score for the latest math exam, list failing students in the duty roster, and remind them about the retake in the WeChat group.”

With MCP:

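- AI reads the local Excel file and computes the average score.

- AI updates the online duty roster with the failing students.

- AI posts the retake reminder to the WeChat group.

- No manual steps are required.

- Data remains local and secure.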

---

Benefits:

- No need for separate interfaces per app.

- Works locally without cloud uploads.

- AI maintains richer context.

---

III. MCP Principles

1. MCP vs. Function Calls

Before MCP:

- Function-calling formats were tied to specific LLM platforms.

- Developers had to rewrite integration code when switching models.

- Each custom integration brought its own security and compatibility issues.

MCP Advantages:

- Ecosystem – Any service provider can add MCP-compatible tools.

- Compatibility – Works with any model that supports MCP.

---

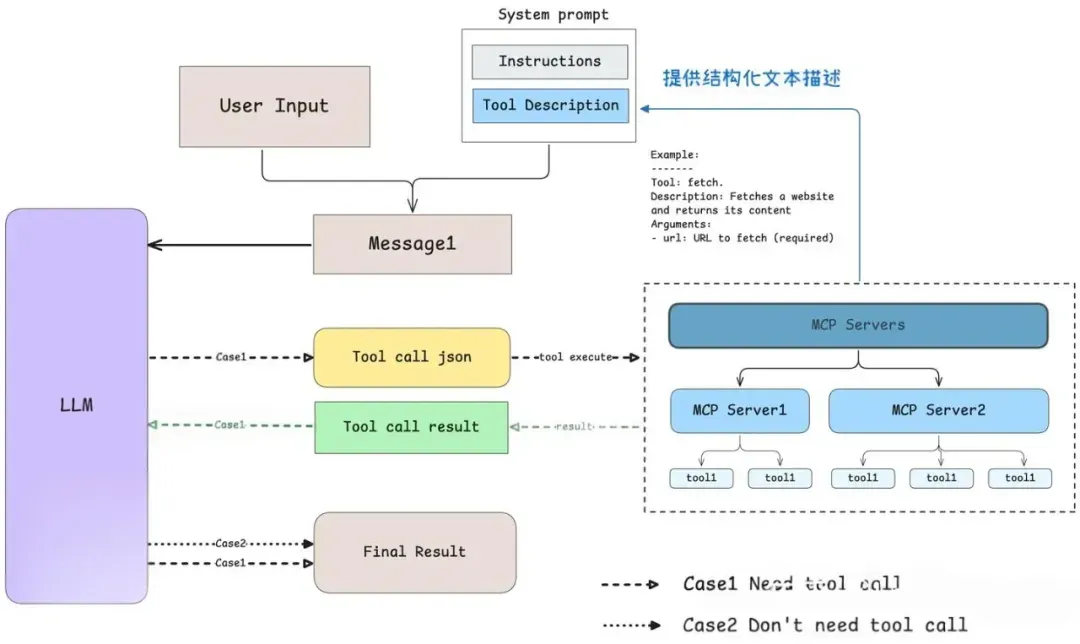

2. How Models Choose Tools in MCP

Invocation Process:

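- Client sends the user's query to the LLM, together with descriptions of the available tools.

- LLM analyzes the available tools and decides whether to use one, and which.

- Client executes the chosen tool via the MCP server.

- Execution result is returned to the LLM.

- LLM processes the result and replies in natural language.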
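The invocation process above can be sketched as a small loop. This is an illustration under stated assumptions, not the protocol's reference implementation: `fake_llm` is a stand-in for a real model, the `TOOLS` registry stands in for what an MCP server would expose, and the `{"tool": ..., "arguments": ...}` JSON shape is a convention chosen for this example.

```python
import json
from typing import Callable

# Hypothetical tool registry standing in for tools exposed by an MCP server.
TOOLS: dict[str, Callable[..., str]] = {
    "add": lambda a, b: str(a + b),
}


def fake_llm(messages: list[dict[str, str]]) -> str:
    """Stand-in for a real model: requests the `add` tool once, then answers."""
    last = messages[-1]
    if last["role"] == "user":
        return json.dumps({"tool": "add", "arguments": {"a": 2, "b": 3}})
    return f"The result is {last['content']}."


def run_turn(query: str) -> str:
    # The model sees the query together with the list of available tools.
    messages = [
        {"role": "system", "content": f"Available tools: {sorted(TOOLS)}"},
        {"role": "user", "content": query},
    ]
    reply = fake_llm(messages)
    try:
        call = json.loads(reply)
    except json.JSONDecodeError:
        return reply  # Plain-text answer: no tool was requested.

    # The client executes the chosen tool and feeds the result back to the model.
    result = TOOLS[call["tool"]](**call["arguments"])
    messages.append({"role": "assistant", "content": reply})
    messages.append({"role": "tool", "content": result})
    return fake_llm(messages)


print(run_turn("What is 2 + 3?"))  # prints "The result is 5."
```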

---

Example Code – Tool registration and description formatting:

```python
from typing import Any


class Tool:
    """Represents a tool with its properties and formatting."""

    def __init__(self, name: str, description: str, input_schema: dict[str, Any]) -> None:
        self.name = name
        self.description = description
        self.input_schema = input_schema

    def format_for_llm(self) -> str:
        """Format tool information for LLM."""
        args_desc = []
        if "properties" in self.input_schema:
            for param_name, param_info in self.input_schema["properties"].items():
                arg_desc = f"- {param_name}: {param_info.get('description', 'No description')}"
                # Flag parameters that the schema marks as required.
                if param_name in self.input_schema.get("required", []):
                    arg_desc += " (required)"
                args_desc.append(arg_desc)
        # chr(10) is a newline; f-string expressions could not contain
        # backslashes before Python 3.12.
        return f"""
Tool: {self.name}
Description: {self.description}
Arguments:
{chr(10).join(args_desc)}
"""
```

---

Best Practices:

- Precise tool names.

- Clear docstrings.

- Accurate parameter descriptions.
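Because the model chooses tools purely from their names and descriptions, it can help to check tool definitions before registering them. The helper below is a hypothetical sketch, not part of any MCP SDK: `lint_tool`, the `get_weather` example, and the 10-character minimum are all assumptions made for illustration.

```python
from typing import Any


def lint_tool(name: str, description: str, input_schema: dict[str, Any]) -> list[str]:
    """Return warnings about a tool definition that may confuse the model."""
    problems = []
    # Arbitrary threshold: a very short description rarely explains the tool.
    if len(description.strip()) < 10:
        problems.append(f"{name}: description is missing or too short")
    properties = input_schema.get("properties", {})
    for param, info in properties.items():
        if not info.get("description"):
            problems.append(f"{name}.{param}: parameter has no description")
    for param in input_schema.get("required", []):
        if param not in properties:
            problems.append(f"{name}.{param}: listed as required but not defined")
    return problems


schema = {
    "type": "object",
    "properties": {
        "city": {"type": "string", "description": "City name, e.g. 'Berlin'"},
        "units": {"type": "string"},
    },
    "required": ["city", "country"],
}

for problem in lint_tool("get_weather", "Weather", schema):
    print(problem)
```

Here the check flags the terse description, the undocumented `units` parameter, and the required `country` parameter that the schema never defines.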

---

IV. Tool Execution & Result Feedback

Execution Flow:

- If no tool is needed → reply directly.

- If a tool is needed → output JSON call → client executes tool → return result to LLM → generate final reply.

Error handling:

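- Invalid tool calls, such as malformed JSON, are skipped rather than executed.

- Hallucinated calls, such as a tool name that no server exposes, are handled safely instead of crashing the session.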
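One way to implement the error handling described above is to validate the model's output before executing anything. This is a minimal sketch assuming the model emits tool calls as JSON of the form `{"tool": ..., "arguments": {...}}`; that shape and the `parse_tool_call` helper are assumptions for illustration, not a format mandated by MCP.

```python
import json
from typing import Any, Optional


def parse_tool_call(llm_output: str, known_tools: set[str]) -> Optional[dict[str, Any]]:
    """Return a validated tool call, or None if the output should be treated as text."""
    try:
        data = json.loads(llm_output)
    except json.JSONDecodeError:
        return None  # Not JSON at all: a direct natural-language reply.
    if not isinstance(data, dict):
        return None  # Valid JSON, but not a call object (e.g. a bare number).
    tool = data.get("tool")
    if not isinstance(tool, str) or tool not in known_tools:
        return None  # Hallucinated or missing tool name: skip the call.
    if not isinstance(data.get("arguments"), dict):
        return None  # Arguments must be a JSON object.
    return data


known = {"add"}
print(parse_tool_call('{"tool": "add", "arguments": {"a": 1, "b": 2}}', known))
print(parse_tool_call("The answer is 3.", known))
print(parse_tool_call('{"tool": "delete_everything", "arguments": {}}', known))
```

Only the first call passes validation; the other two return `None`, so the client can fall back to showing the model's text or asking it to retry.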

---

V. MCP Server Development

1. MCP Server Types

- Tools – External functions or service calls.

- Resources – Structured data the LLM can read.

- Prompts – Task templates for the user.

---

2. Quick Start Example – Python MCP Server

Goal: Count `.txt` files on desktop and list their names.

Prerequisites:

- Claude Desktop

- Python 3.10+

- MCP SDK 1.2.0+

Setup:

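```shell
# Install uv
curl -LsSf https://astral.sh/uv/install.sh | sh

# Create the project and pin the Python version
uv init txt_counter
cd txt_counter
echo "3.11" > .python-version

# Create and activate a virtual environment
uv venv
source .venv/bin/activate

# Install the MCP SDK and create the server file
uv add "mcp[cli]" httpx
touch txt_counter.py
```

---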

Server Implementation:

```python
from pathlib import Path

from mcp.server.fastmcp import FastMCP

# Create the MCP server instance.
mcp = FastMCP("Desktop TXT File Counter")

# Path.home() resolves the current user's home directory, so the path is not
# hard-coded to the macOS /Users/<name> layout.
DESKTOP_PATH = Path.home() / "Desktop"


@mcp.tool()
def count_desktop_txt_files() -> int:
    """Count the number of .txt files on the desktop."""
    return len(list(DESKTOP_PATH.glob("*.txt")))


@mcp.tool()
def list_desktop_txt_files() -> str:
    """Get a list of all .txt filenames on the desktop."""
    txt_files = list(DESKTOP_PATH.glob("*.txt"))
    if not txt_files:
        return "No .txt files found on desktop."
    # One "- name" line per file.
    file_list = "\n".join(f"- {file.name}" for file in txt_files)
    return f"Found {len(txt_files)} .txt files:\n{file_list}"


if __name__ == "__main__":
    # Start the server so a client such as Claude Desktop can connect.
    mcp.run()
```

---

Testing:

```shell
$ mcp dev txt_counter.py
Starting MCP inspector...
Proxy server listening on port 3000
```

Open a browser at `http://localhost:5173` to inspect the server.

---

Claude Integration:

Add to `claude_desktop_config.json`:

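```json
{
  "mcpServers": {
    "txt_counter": {
      "command": "/opt/homebrew/bin/uv",
      "args": [
        "--directory",
        "/Users/you/mcp/txt_counter",
        "run",
        "txt_counter.py"
      ]
    }
  }
}
```

Replace the `uv` path and the project directory with the ones on your machine, then restart Claude Desktop. The MCP server is now ready for use.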
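If the config file already lists other servers, the new entry should be merged in rather than overwriting the file. The sketch below shows that merge on an in-memory dictionary; `add_server` is a hypothetical helper, `other_server` is a made-up existing entry, and reading and writing the actual file is left out.

```python
import json
from typing import Any


def add_server(
    config: dict[str, Any], name: str, command: str, args: list[str]
) -> dict[str, Any]:
    """Return a copy of `config` with one MCP server entry added or replaced."""
    servers = dict(config.get("mcpServers", {}))  # Copy so the input is untouched.
    servers[name] = {"command": command, "args": args}
    return {**config, "mcpServers": servers}


# Hypothetical existing configuration with one server already registered.
existing = {"mcpServers": {"other_server": {"command": "node", "args": ["server.js"]}}}

updated = add_server(
    existing,
    "txt_counter",
    "/opt/homebrew/bin/uv",
    ["--directory", "/Users/you/mcp/txt_counter", "run", "txt_counter.py"],
)
print(json.dumps(updated, indent=2))
```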

---

VI. Summary

MCP delivers:

- Essence: Standardized AI connection to tools and data (AI’s USB-C).

- Value: Cross-model compatibility, minimal rework, secure local execution.

- Usage: Rich ready-to-use ecosystems for users; simple dev process for programmers.

MCP’s future depends on ecosystem growth — more MCP-compliant tools increase AI’s potential.
