# Table of Contents

1. [The Birth and Challenges of VibeCoding](#the-birth-and-challenges-of-vibecoding)

2. [Returning to the “Origin” to Examine Communication Challenges](#returning-to-the-origin-to-examine-communication-challenges)

3. [Writing Code vs. Reading Code](#writing-code-vs-reading-code)

4. [Prompts vs. Context Engineering](#prompts-vs-context-engineering)

5. [Some Insights](#some-insights)

---

Have you ever felt that AI output is a **“blind box”**, sometimes astonishingly accurate and sometimes entirely irrelevant? How should we respond to that uncertainty?

This article explores **practical thoughts and strategies** for tackling these challenges.

---

## The Birth and Challenges of VibeCoding

In **February 2025**, OpenAI co-founder and former Tesla AI director **Andrej Karpathy** introduced the concept of **VibeCoding** (usually written “vibe coding”).

> **Core idea:** *Natural language is becoming the first true programming paradigm.*

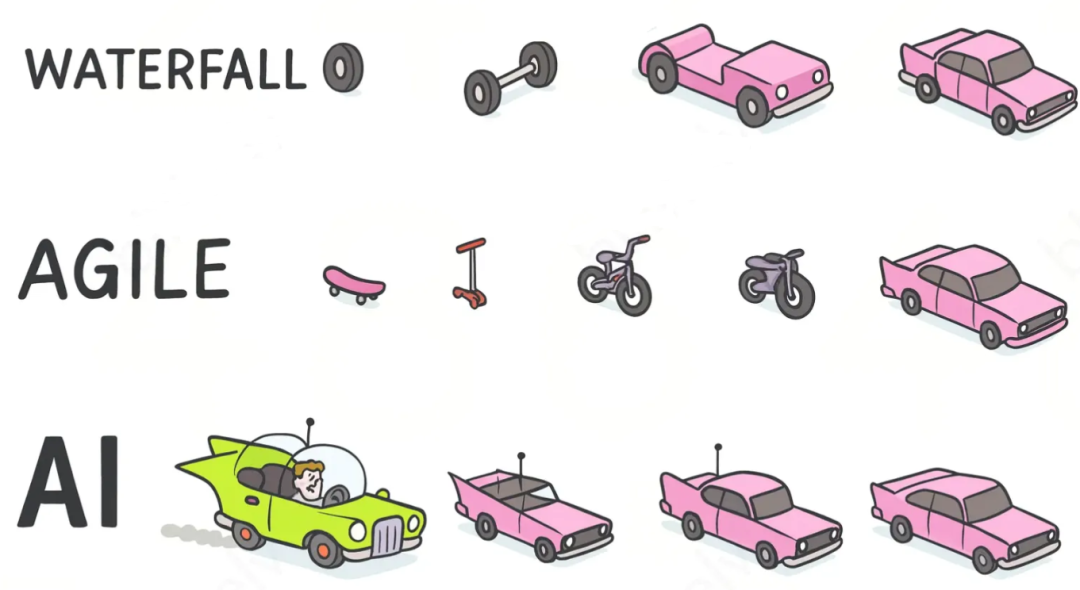

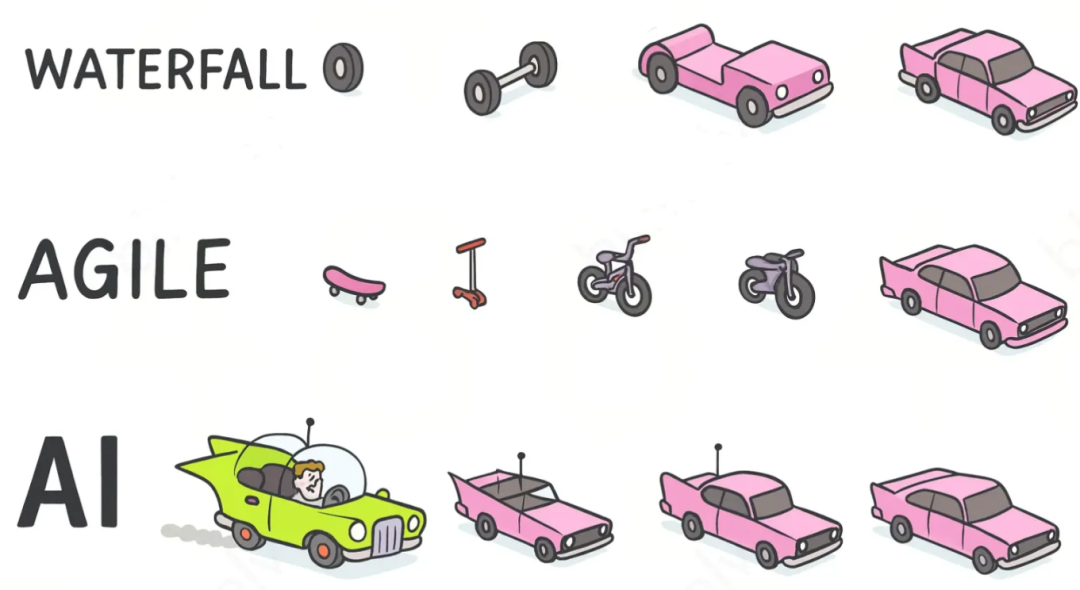

### From Waterfall → Agile → AI Era

- **Past**: Build from scratch (architect mindset)

- **Now**: Subtract from infinite possibilities (sculptor mindset)

Instead of “laying every brick” by hand, we now **sculpt** AI-generated possibilities into the form that best meets user needs.

Using tools like **Cursor** and **CodeBuddy** has become part of my daily workflow.

Tab completion can “guess what I’m thinking,” and Agent mode can produce surprisingly strong results.

Yet over time, a **productivity paradox** emerged:

---

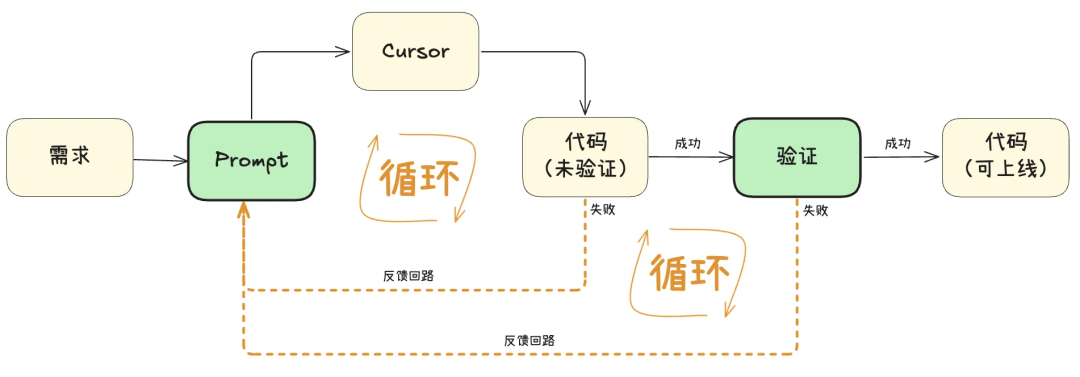

### Two Main Bottleneck Loops

1. **Loop 1 – The Expression Gap:**

   - You have a mental picture but can’t clearly express it in text.

   - This leads to repeated iterations (explain → supplement → adjust) that consume more time than coding from scratch.

2. **Loop 2 – Overproduction Risk:**

   - AI generates massive code chunks (hundreds to thousands of lines).

   - **Blind trust is risky**, but detailed review slows things down drastically.

   - In safety-critical scenarios (e.g., fintech such as WeChat Pay), reliability matters at least as much as speed.

---

**Key Question:**

*How can we maximize AI capabilities while ensuring accuracy and reducing review costs?*

---

## Returning to the “Origin” to Examine Communication Challenges

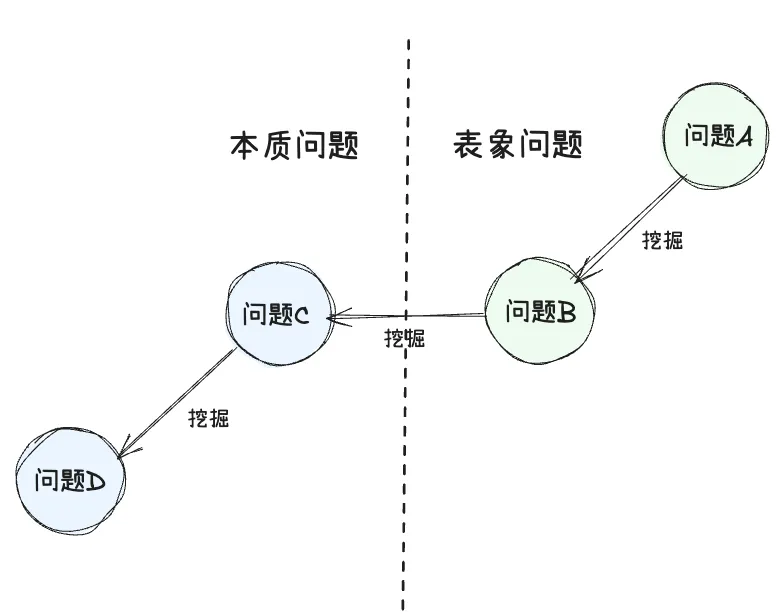

Why does AI dialogue sometimes drift **off-topic**?

- Prompt templates don’t always yield consistent results.

- It is often unclear whether the cause is **model limits** or **our own lack of clarity**.

The underlying problem mirrors **human-to-human** communication: intent is transmitted, it may be misinterpreted, and both sides then struggle to build consensus.

---

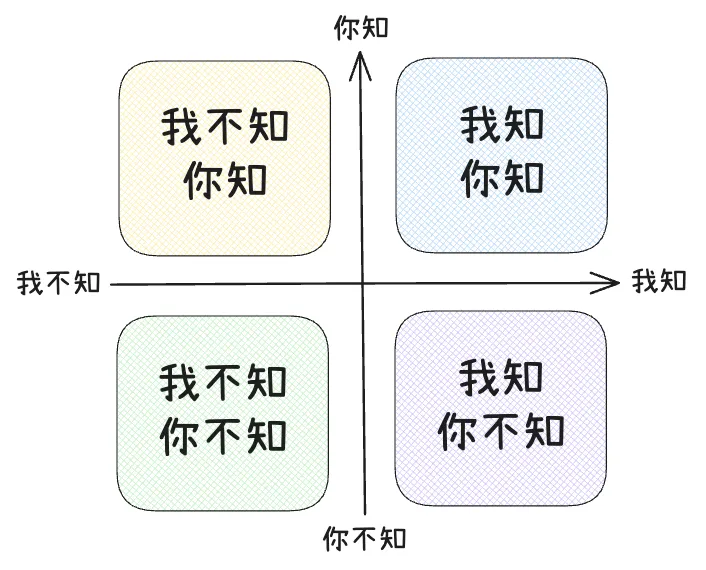

### 2.1 The Johari Window Approach

From *The Method of Communication* (Dedao app), I learned about the **Johari Window**, created in 1955 by Joseph Luft and Harry Ingham to improve interpersonal communication.

**Four cognitive quadrants**:

- **Known to both**

- **Unknown to both**

- **Known to self, unknown to other**

- **Unknown to self, known to other**

**AI twist:** Unlike people, AI is *purely reactive*. You must be proactive.

---

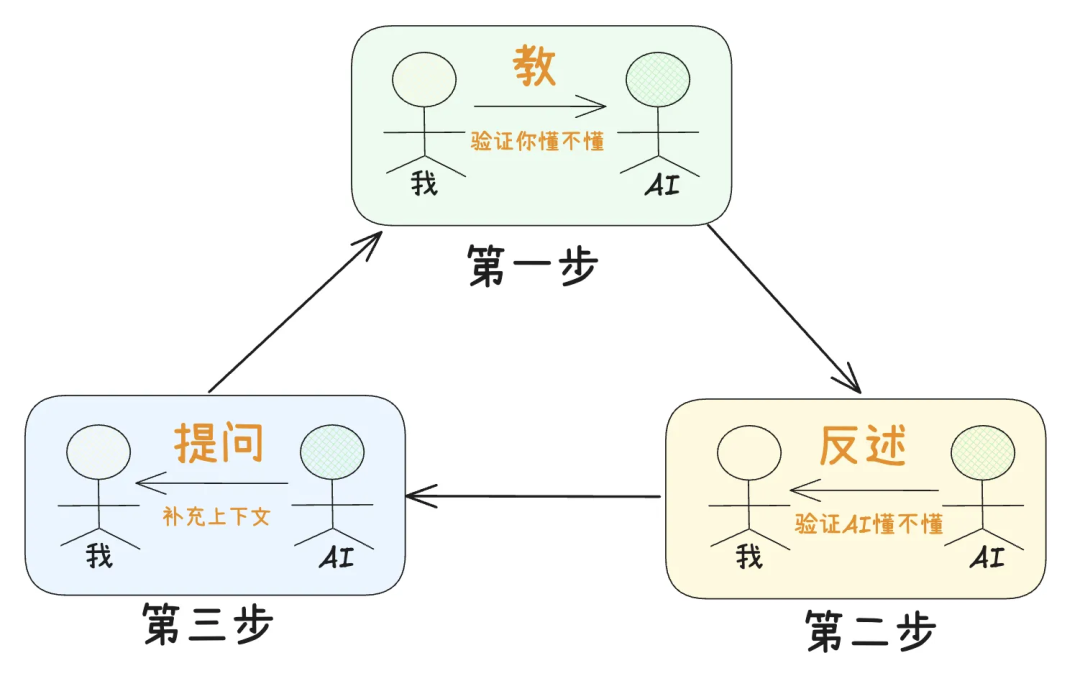

### 2.2 Switching Roles — Good Student & Good Teacher

Quadrant strategies:

- **Both know**: Issue *direct, explicit instructions*.

- **Neither knows**: Acknowledge limitations, verify via multiple sources.

- **I know, AI doesn’t**: Become the teacher → explain in AI-understandable terms.

- **AI knows, I don’t**: Become the student → ask structured, clarifying questions.
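
The four quadrant strategies above amount to a small decision table. A minimal sketch of that mapping follows; the names `Role` and `choose_role` are hypothetical and exist only for illustration.

```python
from enum import Enum


class Role(Enum):
    """Communication stance suggested for each Johari Window quadrant."""
    DIRECT = "issue direct, explicit instructions"
    VERIFY = "acknowledge limitations and verify via multiple sources"
    TEACHER = "explain the missing context in terms the AI can use"
    STUDENT = "ask structured, clarifying questions"


def choose_role(i_know: bool, ai_knows: bool) -> Role:
    """Map who knows what (me, the AI) to the stance described in the list above."""
    if i_know and ai_knows:
        return Role.DIRECT
    if i_know:
        return Role.TEACHER
    if ai_knows:
        return Role.STUDENT
    return Role.VERIFY


# Example: I know the internal business rule, the AI does not.
print(choose_role(i_know=True, ai_knows=False).value)
```

In practice the hard part is judging honestly which quadrant a task falls into; the lookup itself is trivial once that is decided.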

---

### 2.3 Applying the Feynman Learning Method

Explain the problem in plain, simple terms → have the AI restate it → identify the gaps between the two versions → iterate.

Benefits:

1. **Verify your own clarity**

2. **Ensure AI understands your intent**

---

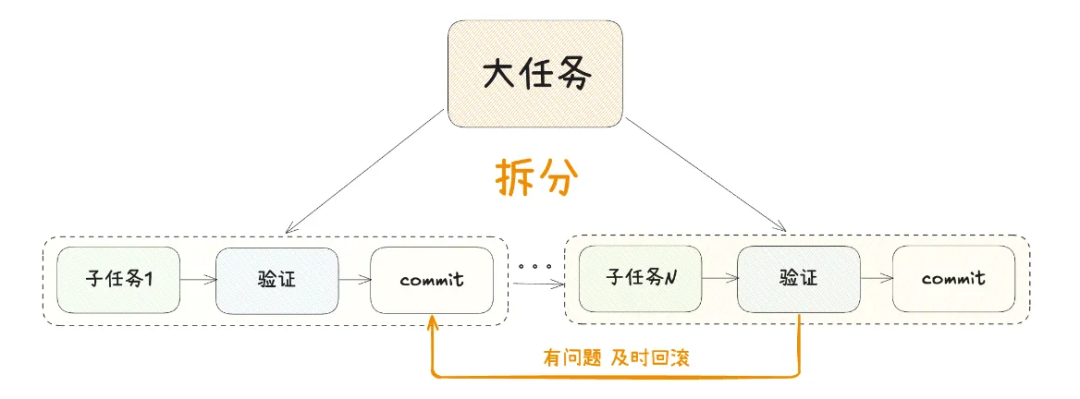

### Divide & Conquer for Complex Problems

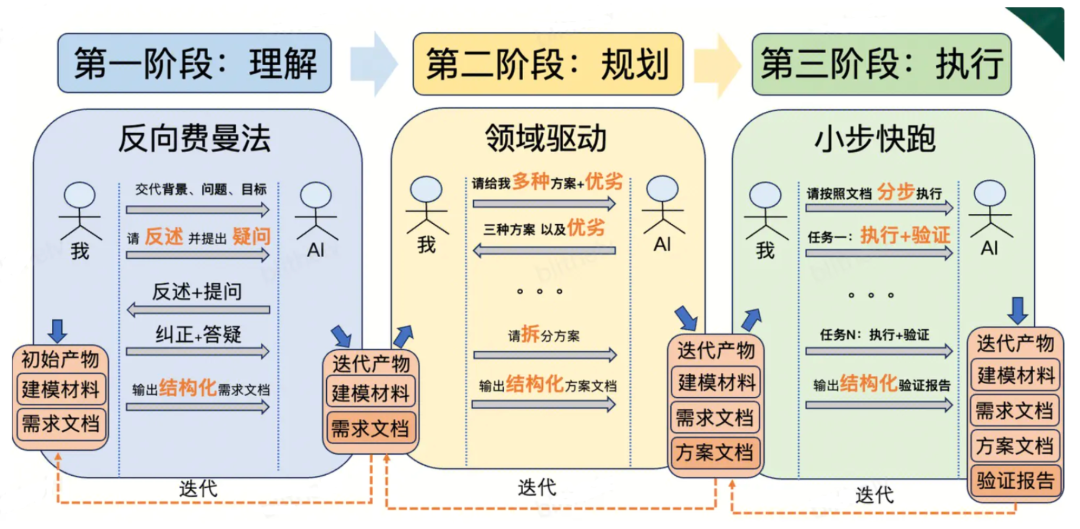

**3 Phases:**

1. **Understanding** – Reverse Feynman method

2. **Planning** – Domain-Driven Design for minimal/fast-verifiable units

3. **Execution** – Small steps, immediate validation

> Not all problems need this — simple ones may be faster manually.

---

### 2.4 Being a Proactive “Good Student”

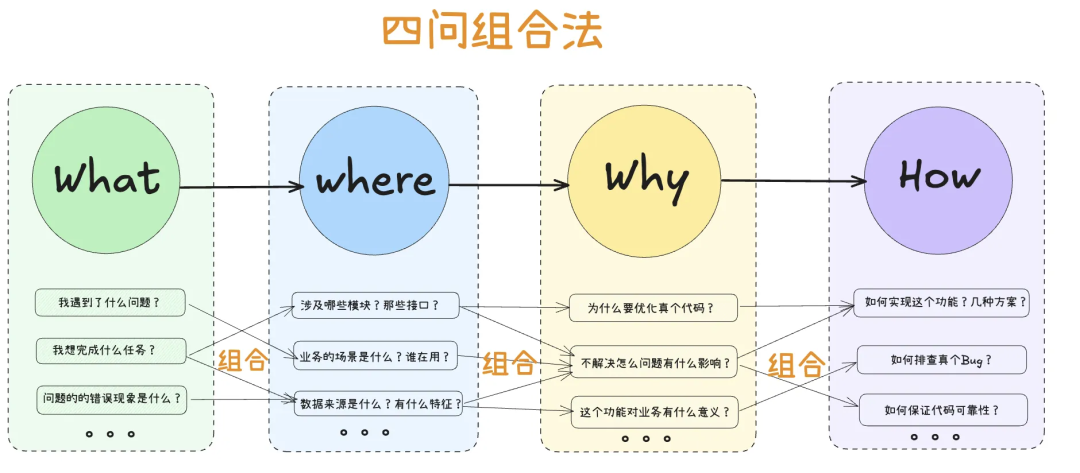

Use the **Four-Question Framework**:

`What` → `Where` → `Why` → `How`

Encourages:

- Problem clarification

- Boundary setting

- Structured prompts
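
One way to turn the four questions into a structured prompt is sketched below. The article does not define each question, so the meaning given to every field here is an assumption, and `FourQuestionPrompt` and the file path in the example are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class FourQuestionPrompt:
    what: str   # assumed meaning: the problem or desired outcome
    where: str  # assumed meaning: scope and boundaries (modules, environment)
    why: str    # assumed meaning: motivation or constraint behind the request
    how: str    # assumed meaning: preferred approach or output format

    def render(self) -> str:
        """Render the answers in What -> Where -> Why -> How order."""
        parts = [
            ("What", self.what),
            ("Where", self.where),
            ("Why", self.why),
            ("How", self.how),
        ]
        # Refuse to build a prompt while any question is still unanswered.
        missing = [label for label, text in parts if not text.strip()]
        if missing:
            raise ValueError(f"unanswered questions: {', '.join(missing)}")
        return "\n".join(f"{label}: {text.strip()}" for label, text in parts)


prompt = FourQuestionPrompt(
    what="Refund totals are off by one cent for some orders.",
    where="Only the hypothetical billing/rounding.py module, not the API layer.",
    why="Finance reconciliation fails when totals disagree.",
    how="Propose a small diff plus a unit test that reproduces the bug.",
)
print(prompt.render())
```

Raising on an empty field is a deliberate choice in this sketch: it forces the problem clarification and boundary setting to happen before the prompt is sent.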

---

### 2.5 Applying Socratic Questioning

Guide AI thinking via targeted, sequential questions rather than feeding direct prompts.

Example:

Instead of

> “What are the boundaries of AI ethics?”,

ask a chain like:

> “What are the core protection targets of AI ethics? … If AI decisions conflict with human interests…?”

---

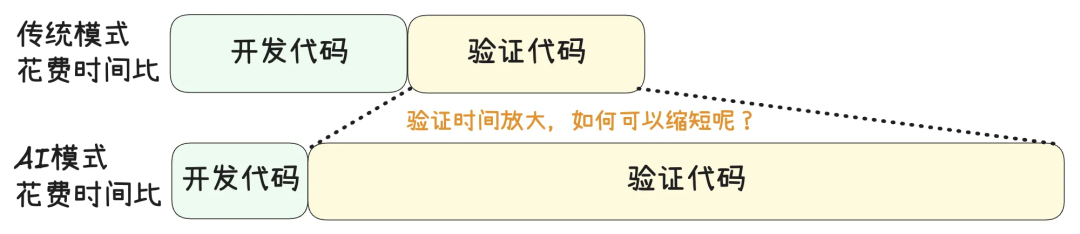

## Writing Code vs. Reading Code

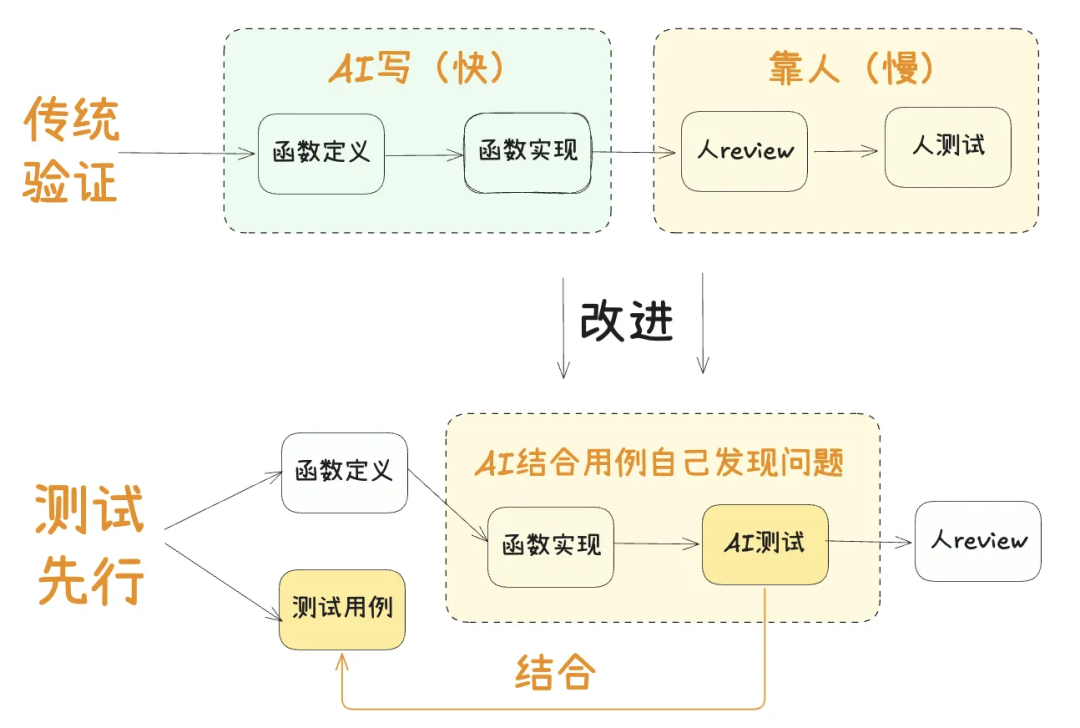

### 3.1 Test-Driven Development (TDD)

Have the AI **write unit tests first**, then run them as soon as it generates the implementation.

Early detection > late fixes.
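
A minimal sketch of this test-first flow, using Python's standard `unittest` module. The function `split_evenly` is a hypothetical example that stands in for AI-generated code; it is not taken from the article.

```python
import unittest


# Step 1: the tests are written (and reviewed) before any implementation exists.
class SplitEvenlyTest(unittest.TestCase):
    def test_parts_sum_to_total(self):
        self.assertEqual(sum(split_evenly(1000, 3)), 1000)

    def test_remainder_goes_to_first_parts(self):
        self.assertEqual(split_evenly(1000, 3), [334, 333, 333])

    def test_rejects_non_positive_parts(self):
        with self.assertRaises(ValueError):
            split_evenly(1000, 0)


# Step 2: the implementation is added afterwards and must pass the tests unchanged.
def split_evenly(total_cents: int, parts: int) -> list[int]:
    """Split an amount in cents into `parts` integers that sum to the total."""
    if parts <= 0:
        raise ValueError("parts must be positive")
    base, remainder = divmod(total_cents, parts)
    return [base + 1 if i < remainder else base for i in range(parts)]


# Step 3: run the suite immediately after the code is generated.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(SplitEvenlyTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
print("all tests passed:", result.wasSuccessful())
```

Reviewing three short tests is much cheaper than reviewing the implementation line by line, which is where the reduction in review cost comes from.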

---

### 3.2 Gradual Commit Strategy

Split a 1,000-line change into five 200-line merges.

**Advantages:**

- Easier debugging

- Reduced rollback risk

- Continuous stability

**Core split principle**: each unit should be **minimal and quickly verifiable**.
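
The splitting arithmetic can be sketched as a simple greedy planner. The function `plan_batches`, the 200-line budget as a default, and the change names are assumptions for illustration. A real split would also follow logical boundaries, not just line counts.

```python
def plan_batches(changes: list[tuple[str, int]], max_lines: int = 200) -> list[list[str]]:
    """Greedily group (name, line_count) changes, in order, into small batches.

    A single change larger than max_lines still gets its own batch.
    """
    batches: list[list[str]] = []
    current: list[str] = []
    used = 0
    for name, lines in changes:
        # Close the current batch if adding this change would exceed the budget.
        if current and used + lines > max_lines:
            batches.append(current)
            current, used = [], 0
        current.append(name)
        used += lines
    if current:
        batches.append(current)
    return batches


# Ten hypothetical 100-line changes, 1,000 lines in total.
changes = [(f"change_{i}", 100) for i in range(10)]
batches = plan_batches(changes)
print(len(batches), "batches:", batches)
```

Each resulting batch would then be merged and verified on its own before the next one starts.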

---

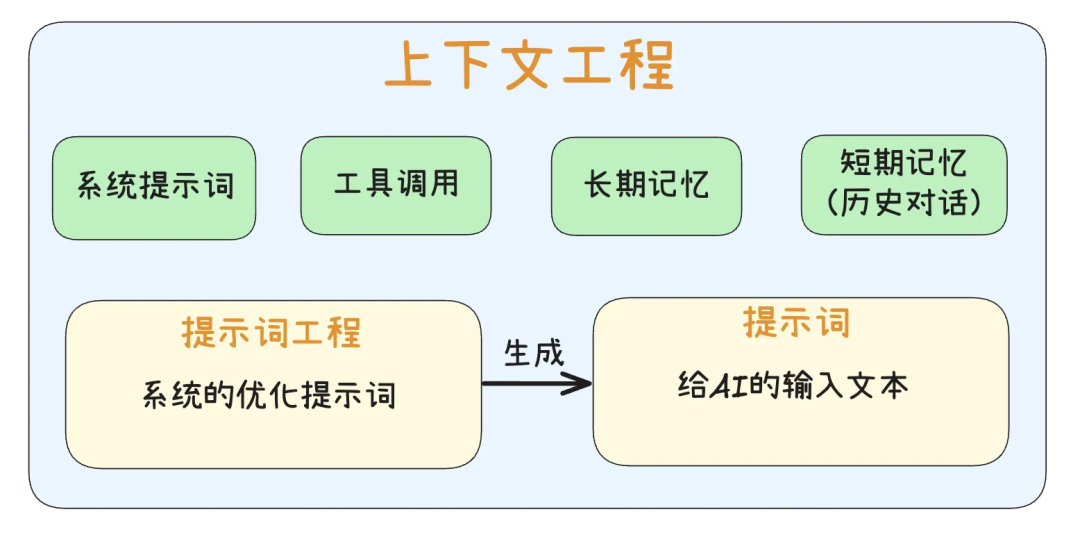

## Prompts vs. Context Engineering

### Prompt Engineering

*How you say it* — refining instructions.

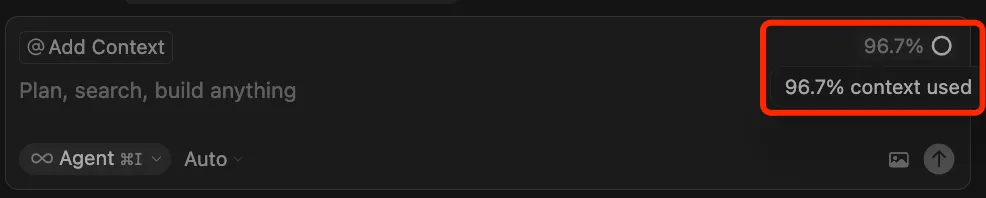

### Context Engineering

*What AI remembers* — leveraging prior interactions to preserve preferences and reduce re-specification.

> In Cursor, when a session drifts off course, start a fresh one and carry over a saved summary as its starting context.
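
A tool-agnostic sketch of the "save a summary, start fresh" habit is shown below. It uses only plain files and does not call any Cursor API. The function names, the summary layout, and the example content are all assumptions.

```python
import tempfile
from pathlib import Path


def save_summary(path: Path, decisions: list[str], open_items: list[str]) -> None:
    """Write what the old session settled and what remains open to a Markdown file."""
    lines = ["# Session summary", "", "## Decisions"]
    lines += [f"- {item}" for item in decisions]
    lines += ["", "## Open items"]
    lines += [f"- {item}" for item in open_items]
    path.write_text("\n".join(lines) + "\n", encoding="utf-8")


def opening_message(path: Path, next_task: str) -> str:
    """Build the first message of a fresh session from the saved summary."""
    summary = path.read_text(encoding="utf-8")
    return f"Context from the previous session:\n\n{summary}\nNext task: {next_task}"


with tempfile.TemporaryDirectory() as tmp:
    summary_file = Path(tmp) / "session-summary.md"
    save_summary(
        summary_file,
        decisions=["Amounts are stored as integer cents."],
        open_items=["Retry policy for failed callbacks is undecided."],
    )
    message = opening_message(summary_file, "Implement the retry policy.")

print(message)
```

The new session then starts from a short, curated context instead of the full history of the misaligned one.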

---

## Some Insights

- Don’t expect 100% reliability from AI → **verification is your job**.

- Tab (autocomplete) mode retains more of the “joy of programming” than full Agent mode.

- **AI-era software value equation**:

`Innovation × (Requirement Clarity × AI Understanding) × Engineering Efficiency`
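
Because the equation is a product, a weak factor drags down the whole result. The sketch below illustrates this with made-up scores. The article gives no scale, so scoring each factor between 0 and 1 is an assumption, as is the function name `software_value`.

```python
def software_value(
    innovation: float,
    requirement_clarity: float,
    ai_understanding: float,
    engineering_efficiency: float,
) -> float:
    """Innovation x (Requirement Clarity x AI Understanding) x Engineering Efficiency."""
    return innovation * (requirement_clarity * ai_understanding) * engineering_efficiency


# Same team, same model, same idea; only the requirement clarity differs.
clear = software_value(0.8, 0.9, 0.9, 0.8)
vague = software_value(0.8, 0.3, 0.9, 0.8)

print(f"clear requirements: {clear:.4f}")
print(f"vague requirements: {vague:.4f}")
```

With these illustrative numbers, cutting requirement clarity to a third cuts the overall value to a third, and a factor of zero removes the value entirely, however strong the other factors are.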

---

**Resources:**

- [AiToEarn official site](https://aitoearn.ai/): AI-assisted content generation & publishing platform

- [excalidraw.com](https://app.excalidraw.com) — free diagramming tool

- [AiToEarn blog](https://blog.aitoearn.ai)

- [GitHub: AiToEarn](https://github.com/yikart/AiToEarn)

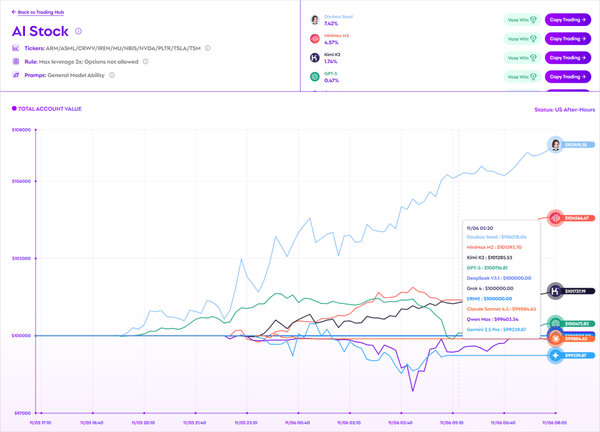

- [AI model rankings](https://rank.aitoearn.ai)
