How CBRE Uses Amazon Bedrock to Enable Unified Property Management Search and Digital Assistants | AWS

Introduction

This post was written with Lokesha Thimmegowda, Muppirala Venkata Krishna Kumar, and Maraka Vishwadev of CBRE.

CBRE is the world’s largest commercial real estate services and investment firm, serving clients in over 100 countries. Its services span capital markets, leasing advisory, investment management, project management, and facilities management.

The company leverages AI to deliver advanced analytics, automated workflows, and predictive insights — unlocking value across the real estate lifecycle. With the industry’s largest dataset and enterprise-grade technology, CBRE has implemented AI solutions that enhance productivity and enable large-scale transformation.

---

Partnership with AWS

CBRE and AWS collaborated to revolutionize access to property management information — building a next‑generation search and digital assistant experience unifying diverse property data sources.

Key AWS components include:

- Amazon Bedrock

- Amazon OpenSearch Service

- Amazon Relational Database Service

- Amazon Elastic Container Service

- AWS Lambda

---

CBRE’s PULSE System

PULSE consolidates:

- Structured data from relational databases (e.g., transactions)

- Unstructured data from document repositories (e.g., lease agreements, inspection reports)

The challenge: Professionals previously had to sift through millions of documents across 10 different sources and four separate databases. This fragmented architecture made finding specific operational information slow and error‑prone.

The need: Natural language interaction that:

- Queries multiple sources simultaneously

- Synthesizes answers quickly

- Avoids manual review of lengthy documents

---

The Challenge

> Deliver an intuitive, unified search solution bridging structured & unstructured data with strong security, enterprise‑grade performance, and high reliability.

---

Solution Overview

CBRE implemented a global search within PULSE using Amazon Bedrock to provide intelligent, secure retrieval across multiple data types.

Key elements:

- Natural language query input

- AI processing for relevance and compilation

- Integrated data stores for structured & unstructured content

Outcome: Faster decisions, deeper insights, and efficient workflows for property managers worldwide.

---

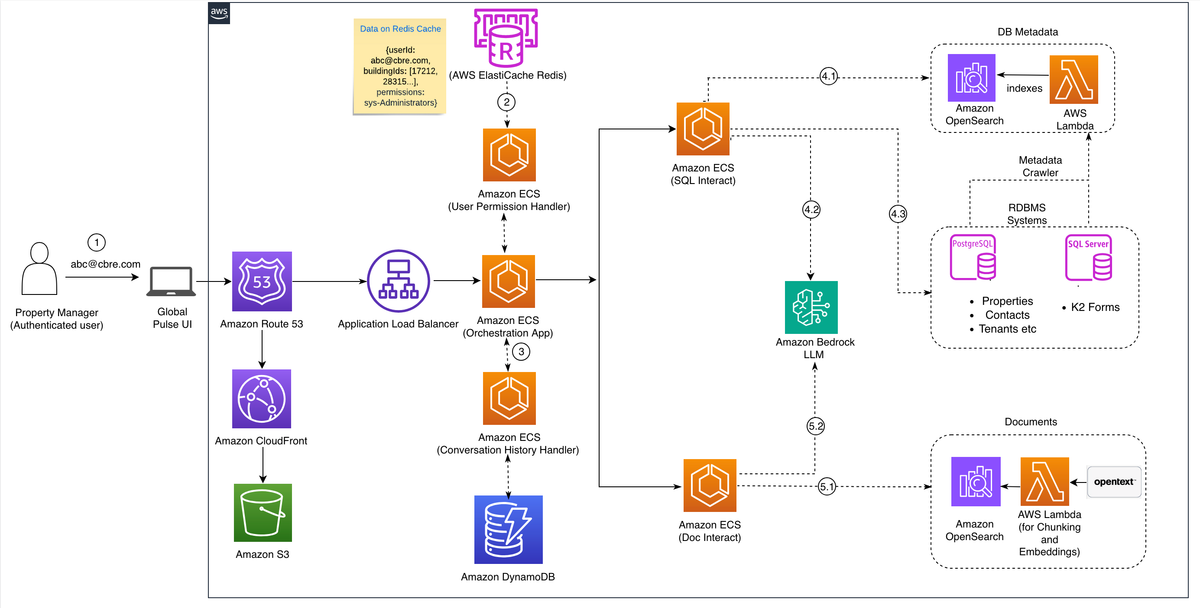

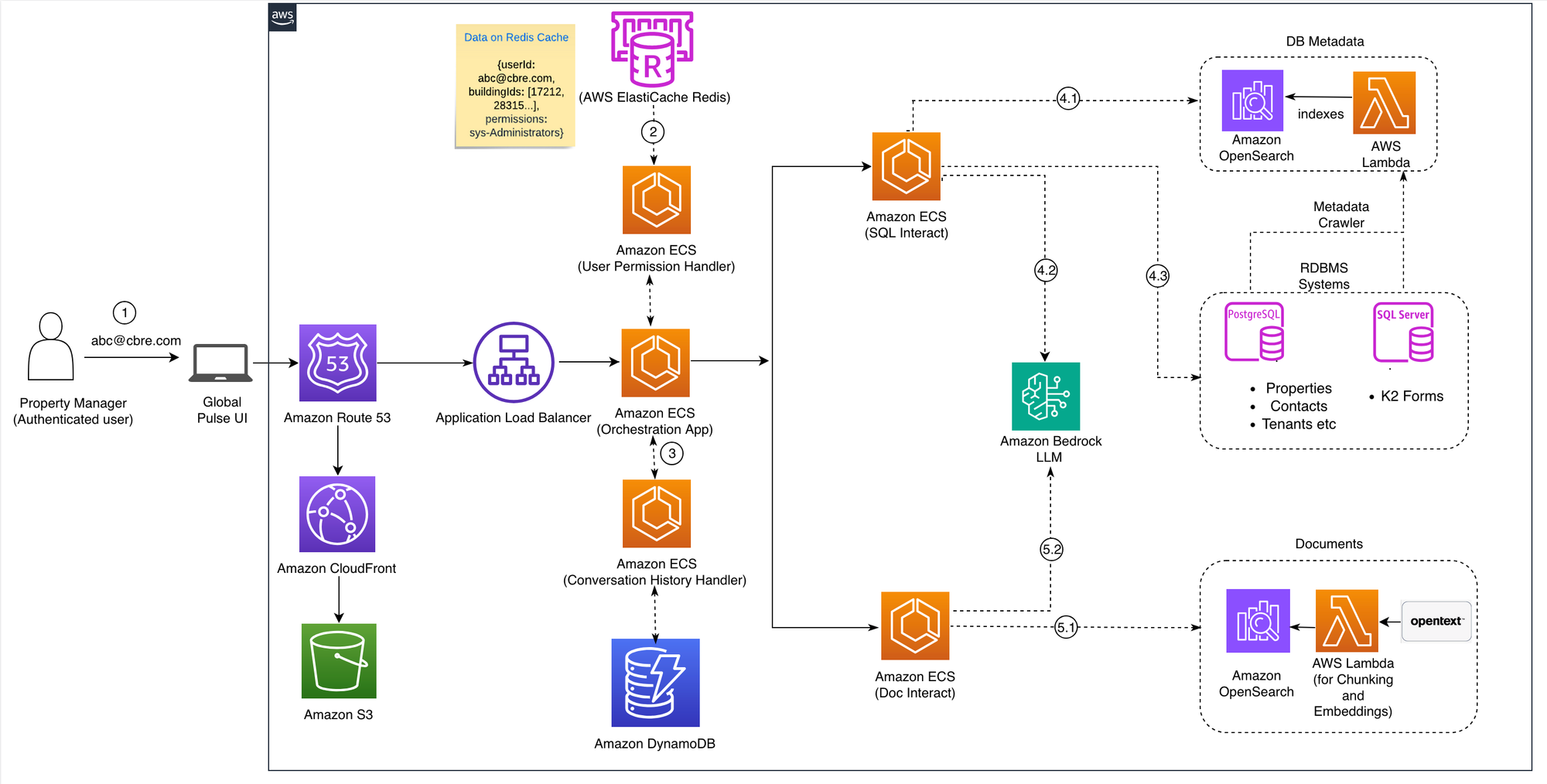

Architecture Highlights

CBRE’s AWS-based solution integrates:

- Amazon Nova Pro (SQL generation) — 67% faster query processing

- Claude Haiku (document intelligence)

- Retrieval Augmented Generation (RAG) with Amazon OpenSearch Service

- Unified search across 8+ million documents and multiple databases

---

Workflow Breakdown

1. PULSE UI

- Entry point for keyword searches and natural language queries (NLQ)

- Interactive document chat & summaries

- Optimized for desktop & mobile

2. Permission-Aware Execution

- Fetch user-specific permissions via Amazon ElastiCache for Redis

- Apply real‑time granular access control across search operations

3. Orchestration Layer

- Routes queries to structured (SQL Interact) or unstructured (DocInteract) components

- Runs searches in parallel, then merges, ranks, and deduplicates the results

- Stores conversation history in Amazon DynamoDB
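
The fan-out and merge step of the orchestration layer can be pictured with a small sketch. It is not CBRE's implementation: the two backend functions, their result shape, and the "highest score wins" deduplication rule are assumptions made only for illustration.

```python
from concurrent.futures import ThreadPoolExecutor

def search_structured(query):
    # Hypothetical stand-in for the SQL Interact component.
    return [
        {"id": "lease-101", "source": "sql", "score": 0.82},
        {"id": "txn-7", "source": "sql", "score": 0.40},
    ]

def search_unstructured(query):
    # Hypothetical stand-in for the DocInteract component.
    return [
        {"id": "lease-101", "source": "docs", "score": 0.91},
        {"id": "report-3", "source": "docs", "score": 0.65},
    ]

def unified_search(query, backends=(search_structured, search_unstructured)):
    # Run every backend concurrently and collect all result lists.
    with ThreadPoolExecutor(max_workers=len(backends)) as pool:
        result_lists = list(pool.map(lambda backend: backend(query), backends))

    # Deduplicate by id, keeping the highest-scoring hit for each id
    # (an assumed rule, chosen for simplicity).
    best = {}
    for hits in result_lists:
        for hit in hits:
            current = best.get(hit["id"])
            if current is None or hit["score"] > current["score"]:
                best[hit["id"]] = hit

    # Rank the merged hits by descending score.
    return sorted(best.values(), key=lambda hit: hit["score"], reverse=True)

results = unified_search("leases expiring this year")
print([hit["id"] for hit in results])
```

Scores from different backends are rarely comparable in practice, so a real system would likely normalize or rerank them before merging.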

---

Structured Data Search (SQL Interact)

Search methods:

- Keyword Search

- Natural Language Query (NLQ) Search

PostgreSQL example:

SELECT *

FROM dbo.pg_db_view_name bd

WHERE textsearchable_all_col @@ to_tsquery('english', 'keyword')

Microsoft SQL Server example:

SELECT *

FROM [dbo].ms_db_view_name

WHERE CONTAINS(*, '8384F')

> Performance gain: 80% faster query execution via native full-text search and specialized indexing.
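
As a rough sketch of how an application might pass a user's keywords to the PostgreSQL query above, the helper below builds a parameterized statement. The function name, the rule that every term must match, and the `%s` placeholder style (as used by common PostgreSQL drivers such as psycopg) are assumptions. The view and column names are the placeholders from the example.

```python
import re

def build_pg_keyword_query(user_input, view="dbo.pg_db_view_name"):
    # Keep only alphanumeric terms so user input cannot add tsquery operators.
    terms = re.findall(r"[A-Za-z0-9]+", user_input)
    if not terms:
        raise ValueError("no searchable terms in input")

    # Require every term to match, e.g. 'roof & inspection' (assumed rule).
    tsquery = " & ".join(terms)

    # The view name is fixed by the application, never taken from the user.
    # The search text is passed as a bound parameter, not concatenated.
    sql = (
        f"SELECT * FROM {view} "
        "WHERE textsearchable_all_col @@ to_tsquery('english', %s)"
    )
    return sql, (tsquery,)

sql, params = build_pg_keyword_query("roof inspection")
print(sql)
print(params)
```

The returned pair can be handed to a driver call of the form `cursor.execute(sql, params)`.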

---

Prompt Engineering Enhancements

Strategies

- Modular prompt templates stored externally

- Dynamic field selection to reduce context window

- Few-shot example selection via KNN similarity search

- Business rule integration from centralized config

- LLM score-based relevancy reordering

Impact: More consistent, accurate SQL generation & improved retrieval relevancy.
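
Few-shot selection by similarity can be sketched as follows. The post does not describe the embedding model or index used for this step, so the three-dimensional vectors, the example questions, and the SQL strings are all made up, and a brute-force cosine ranking stands in for a real KNN index.

```python
import math

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def select_few_shot(query_vec, examples, k=2):
    # Rank stored examples by similarity to the query and keep the top k.
    ranked = sorted(
        examples,
        key=lambda ex: cosine_similarity(query_vec, ex["embedding"]),
        reverse=True,
    )
    return ranked[:k]

# Hypothetical example store with toy embeddings.
EXAMPLES = [
    {"question": "Show open work orders",
     "sql": "SELECT * FROM work_orders WHERE status = 'open'",
     "embedding": [1.0, 0.0, 0.0]},
    {"question": "Total rent collected last month",
     "sql": "SELECT SUM(amount) FROM rent_payments",
     "embedding": [0.0, 1.0, 0.0]},
    {"question": "Work orders closed this week",
     "sql": "SELECT * FROM work_orders WHERE status = 'closed'",
     "embedding": [0.8, 0.2, 0.0]},
]

selected = select_few_shot([0.9, 0.1, 0.0], EXAMPLES, k=2)

# Assemble the chosen examples into the few-shot part of a prompt.
few_shot_block = "\n\n".join(
    f"Q: {ex['question']}\nSQL: {ex['sql']}" for ex in selected
)
print(few_shot_block)
```

Selecting only the nearest examples keeps the prompt short, which fits the goal of reducing the context window.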

---

Parallel LLM Inference (Amazon Nova Pro)

Benefits:

- Concurrent schema processing

- Strict permission enforcement

- Dynamic prompt tailoring per schema

- Injection of mandatory security joins

- Lower inference latency, higher throughput
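
One way to picture concurrent per-schema generation is the sketch below. It is only an illustration: `fake_model` replaces the real model call, the schema definitions and join clause are hypothetical, and the check that rejects SQL lacking the security join is an assumed safeguard, not something the post describes.

```python
import asyncio

# Hypothetical schema catalog; the table, field, and join names are invented.
SCHEMAS = {
    "leases": {
        "fields": ["lease_id", "property_id", "expiry_date"],
        "security_join": "JOIN user_property_access upa ON upa.property_id = t.property_id",
    },
    "work_orders": {
        "fields": ["order_id", "property_id", "status"],
        "security_join": "JOIN user_property_access upa ON upa.property_id = t.property_id",
    },
}

def build_prompt(question, name, schema):
    # Tailor the prompt to one schema and state the required join.
    return (
        f"Schema: {name}\n"
        f"Fields: {', '.join(schema['fields'])}\n"
        f"Always include: {schema['security_join']}\n"
        f"Question: {question}"
    )

async def fake_model(prompt):
    # Stand-in for a model call: echoes the schema name and required join
    # from the prompt instead of doing real inference.
    await asyncio.sleep(0.01)
    parts = dict(line.split(": ", 1) for line in prompt.splitlines())
    return f"SELECT * FROM {parts['Schema']} t {parts['Always include']}"

async def generate_for_all(question, schemas, model=fake_model):
    names = list(schemas)
    prompts = [build_prompt(question, n, schemas[n]) for n in names]
    # Issue one request per schema and await them concurrently.
    outputs = await asyncio.gather(*(model(p) for p in prompts))

    checked = {}
    for name, sql in zip(names, outputs):
        # Reject any statement that is missing the security join.
        if schemas[name]["security_join"] not in sql:
            raise ValueError(f"generated SQL for {name} lacks the security join")
        checked[name] = sql
    return checked

sql_by_schema = asyncio.run(generate_for_all("Which leases expire in 2025?", SCHEMAS))
for name, sql in sql_by_schema.items():
    print(name, "->", sql)
```

Because the requests are awaited together, total latency is close to that of the slowest single call, not the sum of all calls.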

---

Unstructured Document Search (DocInteract)

Core methods:

- Keyword Search

- NLQ Search

Special features:

- Favorites & collections search

- Intelligent upload destination prediction

Supported formats: PDF, Office docs, emails, images, text, HTML, etc.

---

Document Ingestion at Scale

Pipeline highlights:

- Asynchronous Amazon Textract OCR/extraction

- S3 + SQS + Lambda for job orchestration

- Chunking & embedding via Amazon Titan Text Embeddings v2

- Indexing into OpenSearch for semantic search

Parallel processing ensures scalability for enterprise‑level ingestion.
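
The post does not say how documents are chunked before embedding. A common approach, shown here purely as an assumption, is fixed-size word windows with some overlap so that text at a chunk boundary appears in both neighbors.

```python
def chunk_text(text, chunk_size=200, overlap=50):
    # Split text into overlapping windows of `chunk_size` words.
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in the range [0, chunk_size)")

    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once the current window has reached the end of the text.
        if start + chunk_size >= len(words):
            break
    return chunks

sample = " ".join(f"w{i}" for i in range(10))
chunks = chunk_text(sample, chunk_size=4, overlap=1)
for chunk in chunks:
    print(chunk)
```

Each chunk would then be sent to the embedding model and indexed together with its source document ID.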

---

Security & Access Control

Layers:

- Token validation via Microsoft B2C

- Permission checks in Redis

- Property/building access lists in Redis

Result: Secure, permission-aware retrieval at scale.
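
The layered checks can be sketched as a filter applied to search hits. In this sketch, plain dictionaries stand in for the Redis lookups, and the permission name, user IDs, and property IDs are hypothetical.

```python
# In-memory stand-ins for the permission and access lists held in Redis.
USER_PERMISSIONS = {"user-1": {"search:documents"}, "user-2": set()}
USER_PROPERTY_ACCESS = {"user-1": {"bldg-12", "bldg-40"}, "user-2": {"bldg-12"}}

def filter_hits(user_id, hits, required_permission="search:documents"):
    # Layer 1: the user must hold the permission for this operation.
    if required_permission not in USER_PERMISSIONS.get(user_id, set()):
        return []
    # Layer 2: keep only hits for properties the user may access.
    allowed = USER_PROPERTY_ACCESS.get(user_id, set())
    return [hit for hit in hits if hit["property_id"] in allowed]

hits = [
    {"doc": "roof-inspection.pdf", "property_id": "bldg-12"},
    {"doc": "lease-summary.pdf", "property_id": "bldg-77"},
]
visible = filter_hits("user-1", hits)
print([hit["doc"] for hit in visible])
```

Unknown users and users without the permission get an empty result, so the filter fails closed.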

---

Results & Impact

- Cost savings via reduced manual effort

- Better decisions, supported by a reported 95% output accuracy

- Productivity gains with higher throughput

---

Best Practices

- Prompt modularization for maintainability & speed

- High-quality few-shot examples for accuracy

- Context window reduction for efficiency

- LLM-based relevance scoring to reorder KNN results
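
Reordering KNN candidates by a relevance score can be sketched as below. The scoring function is a deliberately simple word-overlap stand-in for a model-produced score, and the candidate documents are invented. Only the reordering pattern is the point.

```python
def overlap_score(query, text):
    # Stand-in for an LLM relevance score: fraction of query words in the text.
    query_words = set(query.lower().split())
    text_words = set(text.lower().split())
    return len(query_words & text_words) / len(query_words)

def rerank(query, candidates, score_fn=overlap_score, top_n=3):
    # Score every candidate, then sort by score. The sort is stable, so
    # ties keep their original KNN order.
    scored = [(score_fn(query, c["text"]), c) for c in candidates]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [candidate for _, candidate in scored[:top_n]]

# Hypothetical candidates, listed in the order a KNN search returned them.
knn_candidates = [
    {"id": "d1", "text": "Elevator maintenance schedule for tower A"},
    {"id": "d2", "text": "Roof inspection report 2023 for building 12"},
    {"id": "d3", "text": "Lease agreement renewal terms"},
]

reranked = rerank("roof inspection report", knn_candidates, top_n=2)
print([c["id"] for c in reranked])
```

Passing the scorer in as `score_fn` makes it straightforward to swap the stub for a real model call.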

---

Conclusion

CBRE’s partnership with AWS illustrates how cloud-native AI search architectures can transform enterprise data use.

---

Author Profiles

Lokesha Thimmegowda – Senior Principal Software Engineer at CBRE, AWS-certified Solutions Architect, specializing in AI & AWS architectures.

Muppirala Venkata Krishna Kumar – Principal Software Engineer, CBRE, with 18+ years’ experience in end-to-end solutions & AWS cloud.

Maraka Vishwadev – Senior Staff Engineer, CBRE, focusing on GenAI & AWS scalable solutions.

AWS contributors:

Chanpreet Singh — Senior Consultant, AWS, Data Analytics & AI/ML

Sachin Khanna — Lead Consultant, AWS AI/ML Professional Services

Dwaragha Sivalingam — Senior Solutions Architect, AWS, Generative AI
