How Do Videos Work Explained Through Science and Technology

Learn how videos work through science and technology, covering motion perception, frame rates, resolution, aspect ratios, color encoding, and compression.

Introduction: Understanding "How Do Videos Work"

When we watch a movie, stream a YouTube clip, or join a video conference, it feels like we’re viewing continuous motion. In reality, videos are built from a sequence of static images shown in quick succession to trick our brains into perceiving movement. This phenomenon is deeply tied to human motion perception and the science of visual persistence.

In this blog post, we'll break down how videos work, drawing on science and technology, from classical film reels to modern immersive formats.

---

A Brief History of Video Technology

Video as an entertainment and communication medium has evolved drastically:

- Late 1800s: Motion photography began with experiments by pioneers like Eadweard Muybridge and Thomas Edison.

- Early 1900s: Silent films, hand-cranked cameras, and mechanical projectors dominated theaters.

- Mid 20th century: Analog television broadcast brought moving pictures into homes.

- Late 20th century: VHS and camcorder technology empowered consumers to record and replay moments.

- 2000s onwards: Transition to digital video, DVDs, Blu-ray, and internet streaming reshaped consumption habits.

Digital transformation brought higher quality, greater portability, and instant global distribution, forever changing how videos are produced and consumed.

---

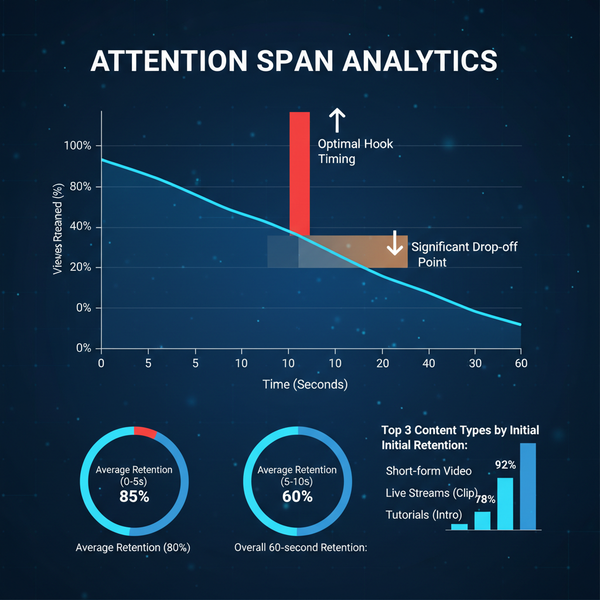

How Frame Rates Create the Illusion of Motion

The illusion of motion hinges on frame rate — the number of images (frames) displayed per second.

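- Persistence of vision: The traditional explanation is that the eye retains each image for a fraction of a second, so frames that follow one another rapidly blend into smooth motion. Perception research now attributes the effect mainly to apparent motion (beta movement and the phi phenomenon), in which the brain infers movement between successive still images.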

- Common frame rates:

  - 24 fps: Traditional cinema standard.

  - 30 fps: Television and many online videos.

  - 60 fps: High-definition sports broadcasts and gaming.

Higher frame rates yield smoother motion but also require more storage/bandwidth. For artistic purposes, filmmakers may stick to lower rates for a "cinematic" feel.
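To make the trade-off concrete, here is a minimal Python sketch that computes how long each frame stays on screen and how many frames a minute of footage needs. The function names `frame_duration_ms` and `frames_needed` are illustrative, not part of any library.

```python
def frame_duration_ms(fps: float) -> float:
    """Return how long a single frame is shown, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def frames_needed(fps: float, seconds: float) -> int:
    """Return the number of frames required to cover the given duration."""
    return round(fps * seconds)


# Compare the three frame rates listed above.
for fps in (24, 30, 60):
    print(f"{fps} fps: {frame_duration_ms(fps):.2f} ms per frame, "
          f"{frames_needed(fps, 60)} frames per minute")
```

Doubling the frame rate halves the time each frame is shown and doubles the number of frames to store or transmit, which is why higher rates cost more before compression.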

---

Understanding Video Resolution and Aspect Ratios

Resolution defines how detailed a video is, measured in pixels (width × height).

Examples:

- 1920×1080 (Full HD)

- 3840×2160 (4K)

Aspect ratio expresses width-to-height proportion. Common ratios:

- 4:3: Classic TV.

- 16:9: Widespread for HD content.

| Resolution | Aspect Ratio | Usage |
|---|---|---|
| 1280×720 | 16:9 | HD streaming, lower bandwidth |
| 1920×1080 | 16:9 | Full HD TV, most online videos |
| 3840×2160 | 16:9 | 4K UHD televisions |
| 4096×2160 | 17:9 | Digital cinema projection |

Aspect ratio selection affects how the video fills screens and how immersive the visual experience feels.
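The relationship between resolution and aspect ratio is simple arithmetic: divide width and height by their greatest common divisor. The sketch below does this for the resolutions discussed in this section; the helper names are illustrative.

```python
from math import gcd


def aspect_ratio(width: int, height: int) -> tuple[int, int]:
    """Reduce width:height to its smallest whole-number ratio."""
    d = gcd(width, height)
    return width // d, height // d


def megapixels(width: int, height: int) -> float:
    """Total pixel count of one frame, in millions of pixels."""
    return width * height / 1_000_000


for w, h in [(1280, 720), (1920, 1080), (3840, 2160), (4096, 2160)]:
    rw, rh = aspect_ratio(w, h)
    print(f"{w}x{h}: {rw}:{rh}, {megapixels(w, h):.2f} MP")
```

Note that 4096×2160 reduces exactly to 256:135 (about 1.90:1); "17:9" is the conventional rounded label for it.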

---

Role of Color Encoding and Compression Formats

Raw video data is massive. Without processing, video would be impractical to store or stream. Color encoding and compression formats solve this:

- Color spaces: YUV or RGB define how colors are represented numerically.

- Chroma subsampling: Reduces color detail while keeping luminance detail sharp (e.g., 4:2:0 format).

- Compression: Uses algorithms to remove redundant information.
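To see why raw video is impractical and how much chroma subsampling alone saves, here is a rough Python estimate of the uncompressed data rate. It assumes one luma sample per pixel plus two chroma channels whose sample count is scaled by the subsampling scheme; the function name and the default of 8 bits per sample are assumptions made for illustration.

```python
# Fraction of chroma samples kept per pixel, relative to full resolution.
CHROMA_FRACTION = {"4:4:4": 1.0, "4:2:2": 0.5, "4:2:0": 0.25}


def raw_bytes_per_second(width: int, height: int, fps: float,
                         bits_per_sample: int = 8,
                         subsampling: str = "4:4:4") -> float:
    """Estimate the uncompressed data rate of a video stream in bytes/second."""
    # One luma sample per pixel plus two (possibly subsampled) chroma samples.
    samples_per_pixel = 1 + 2 * CHROMA_FRACTION[subsampling]
    bits = width * height * samples_per_pixel * bits_per_sample * fps
    return bits / 8


full = raw_bytes_per_second(1920, 1080, 30)
sub = raw_bytes_per_second(1920, 1080, 30, subsampling="4:2:0")
print(f"4:4:4: {full / 1e6:.1f} MB/s, 4:2:0: {sub / 1e6:.1f} MB/s")
```

Under these assumptions, Full HD at 30 fps needs roughly 187 MB every second before compression, and 4:2:0 subsampling halves that before any codec is applied.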

Common compression formats (codecs) include:

- H.264/AVC: Balanced quality and size; widely supported.

- HEVC/H.265: Better compression efficiency for 4K and higher.

- VP9/AV1: Open, royalty-free alternatives optimized for web streaming.
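The codecs above are far more sophisticated than anything that fits in a blog post: they combine motion-compensated prediction, transforms, and entropy coding. Still, the core idea of removing redundancy between consecutive frames can be sketched with a toy example. The code below is not how any real codec works; it simply stores only the pixels that changed since the previous frame, with frames modeled as flat lists of brightness values.

```python
def encode_delta(prev: list[int], curr: list[int]) -> list[tuple[int, int]]:
    """Record (index, new_value) only for pixels that differ from prev."""
    if len(prev) != len(curr):
        raise ValueError("frames must have the same number of pixels")
    return [(i, c) for i, (p, c) in enumerate(zip(prev, curr)) if p != c]


def decode_delta(prev: list[int], delta: list[tuple[int, int]]) -> list[int]:
    """Rebuild the current frame by applying the changes to a copy of prev."""
    frame = list(prev)
    for index, value in delta:
        frame[index] = value
    return frame


previous = [10, 10, 10, 10, 10, 10, 10, 10]
current = [10, 10, 12, 10, 10, 10, 10, 99]

delta = encode_delta(previous, current)
print(f"stored {len(delta)} changes instead of {len(current)} pixels")
assert decode_delta(previous, delta) == current
```

When most of the picture stays the same between frames, as in a talking-head video, the list of changes is much smaller than the full frame.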

---

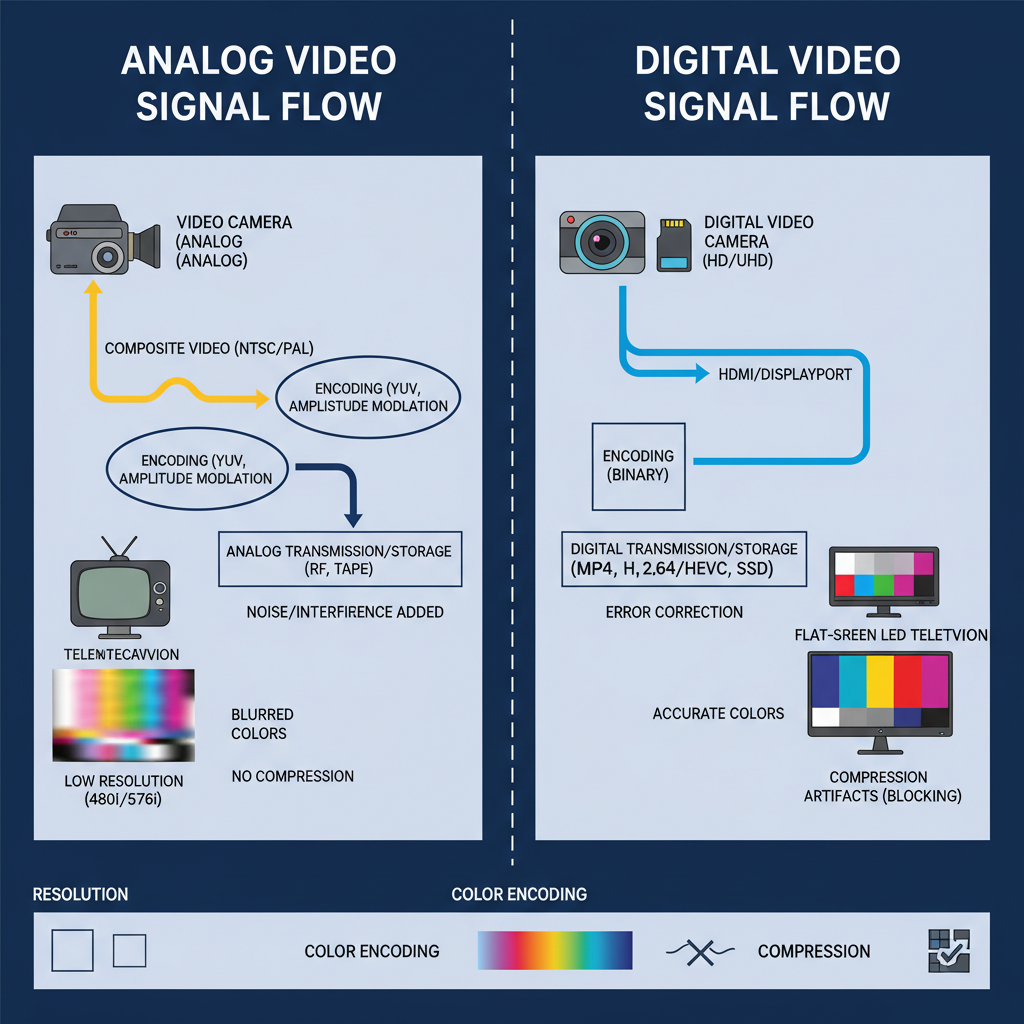

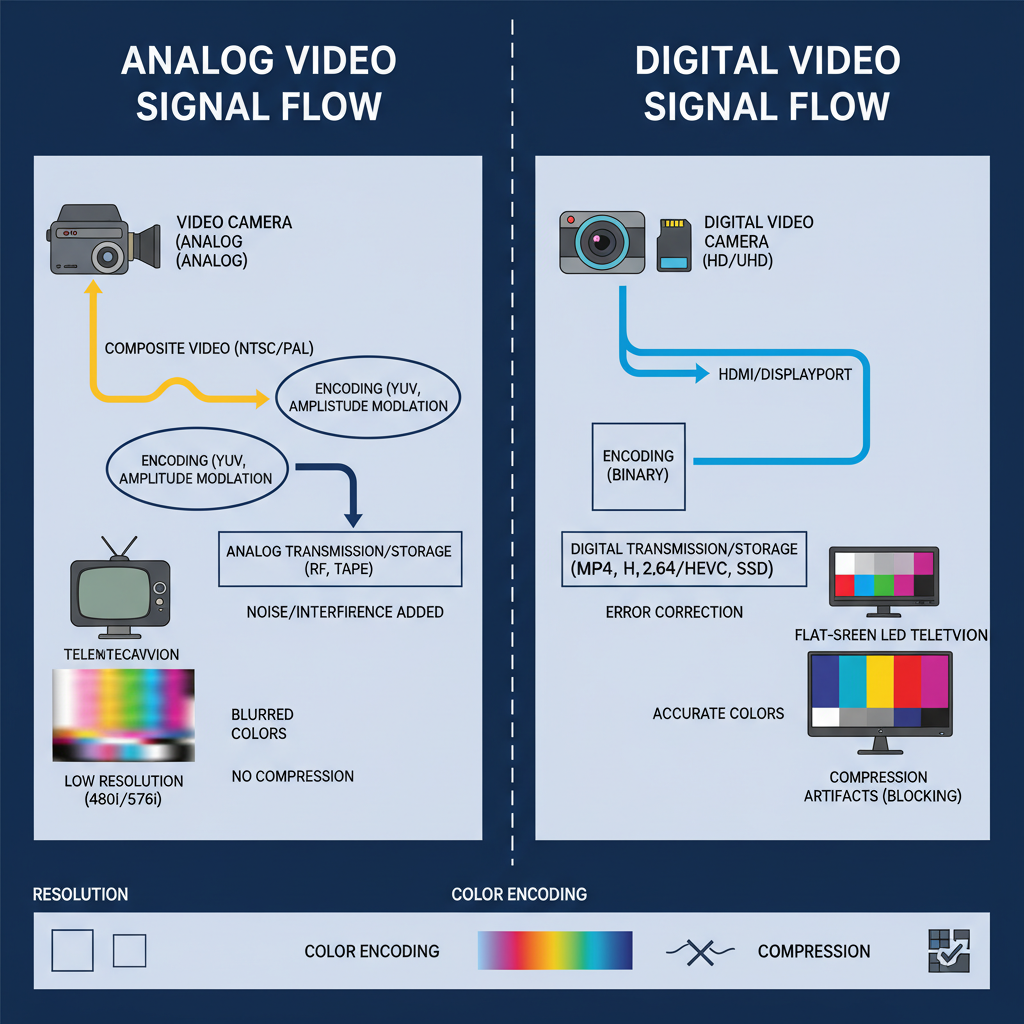

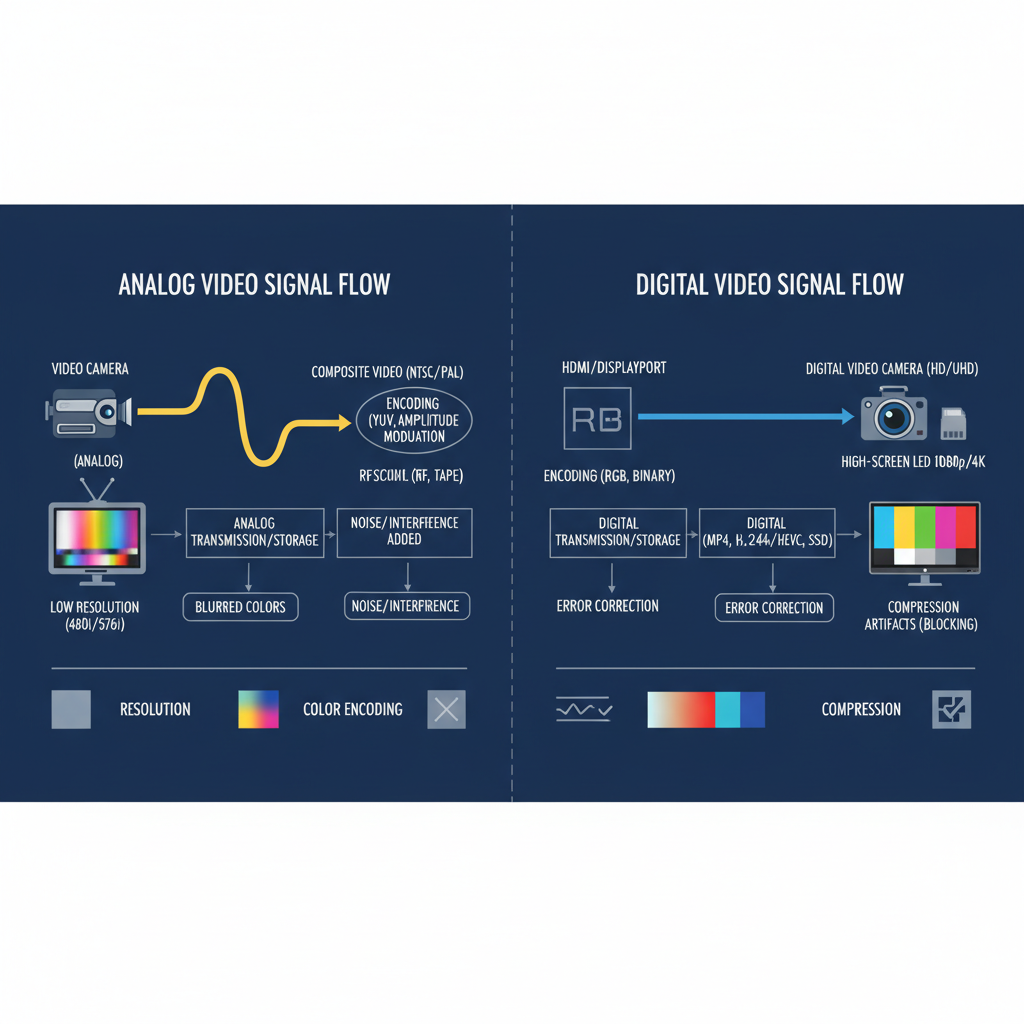

Analog vs Digital Video: Key Differences

Analog video stores and transmits continuous signals, while digital video represents images as discrete pixels.

Analog video characteristics:

- Continuous electrical signals correspond to brightness and color.

- Susceptible to noise and signal degradation during storage/transfer.

Digital video characteristics:

- Encoded as binary data.

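- Easier to compress, store, and transmit; copies are bit-for-bit identical, so quality does not degrade from one generation to the next (although the compression itself is usually lossy).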

Digital formats overcame analog issues and enabled editing, replication, and global distribution.
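The difference in how noise affects the two kinds of signal can be illustrated with a small simulation. This is a simplified model, not a description of real hardware: the `NOISE` values are hypothetical, every copy is assumed to add the same small error to each sample, and the digital receiver is assumed to decide between 0 and 1 with a threshold of 0.5.

```python
# Hypothetical noise added to each sample every time the signal is copied.
NOISE = [0.03, -0.02, 0.04, -0.01]


def analog_copy(signal: list[float]) -> list[float]:
    """An analog copy keeps whatever noise was added; errors accumulate."""
    return [s + n for s, n in zip(signal, NOISE)]


def digital_copy(bits: list[int]) -> list[int]:
    """A digital copy re-decides each bit, discarding noise below the threshold."""
    received = [b + n for b, n in zip(bits, NOISE)]
    return [1 if level >= 0.5 else 0 for level in received]


def copy_generations(signal, copy_fn, generations: int):
    """Copy a signal repeatedly, each time copying the previous copy."""
    for _ in range(generations):
        signal = copy_fn(signal)
    return signal


analog = copy_generations([0.2, 0.5, 0.7, 0.9], analog_copy, 10)
digital = copy_generations([0, 1, 1, 0], digital_copy, 10)
print("analog after 10 copies: ", [round(v, 2) for v in analog])
print("digital after 10 copies:", digital)
```

In this model the analog values drift further from the original with every generation, while the digital bits survive unchanged. That only holds as long as the noise stays below the decision threshold.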

---

How Video Cameras Capture and Store Footage

Video cameras work by transducing light into an electronic signal.

- Lens focuses light onto the sensor.

- Image sensor (CCD or CMOS) converts photons into electrical charges.

- Analog-to-digital conversion turns those charges into pixel data.

- Processing unit applies compression and stores data onto memory cards or storage media.
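The analog-to-digital step in the list above can be sketched as a quantizer. The code assumes the sensor reading has already been normalized to the range 0.0 (no light) to 1.0 (sensor saturated); the function name `quantize` and that normalization are illustrative assumptions, not a description of a specific camera.

```python
def quantize(level: float, bits: int = 8) -> int:
    """Map a normalized sensor level in [0.0, 1.0] to an integer pixel code."""
    # Clip readings outside the valid range, as a saturated sensor would.
    level = min(max(level, 0.0), 1.0)
    max_code = (1 << bits) - 1  # 255 for 8 bits, 1023 for 10 bits
    return round(level * max_code)


readings = [0.0, 0.25, 1.0, 1.7]  # the last value is overexposed
print([quantize(r) for r in readings])
print(quantize(1.0, bits=10))
```

More bits per sample give finer brightness steps, which is one reason HDR and raw recording modes use 10 bits or more.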

Modern devices — from cinema cameras to smartphones — can shoot in resolutions from HD to 8K, often with advanced image stabilization, HDR recording, and raw footage capabilities.

---

How Video Playback Works on Screens and Devices

When a video is played back:

- The decoder reads compressed video data.

- The device’s processor reconstructs frames from the compressed stream.

- Display hardware (LCD, OLED) presents frames at specified refresh rates.

- Audio/video synchronization ensures lips match speech and sound effects align with visuals.

In LCD screens, light from a backlight passes through liquid crystals that control how much of it reaches each pixel; in OLED screens, each pixel emits its own light, which improves contrast and color vibrancy.
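The synchronization step can be sketched as a lookup from the audio clock to a frame index. This assumes a constant frame rate and that the audio clock is the timing reference; real players instead compare presentation timestamps stored in the stream, so treat `frame_for_time` as a hypothetical helper.

```python
def frame_for_time(audio_clock_s: float, fps: float, frame_count: int) -> int:
    """Return the index of the frame that should be on screen at the given time."""
    if fps <= 0 or frame_count <= 0:
        raise ValueError("fps and frame_count must be positive")
    index = int(audio_clock_s * fps)
    # Clamp so that times before the start or after the end stay in range.
    return min(max(index, 0), frame_count - 1)


# One second into a 24 fps clip, frame 24 (the 25th frame) should be visible.
print(frame_for_time(1.0, fps=24, frame_count=100))
```

If decoding falls behind, a player built this way would skip ahead to the frame the clock asks for instead of letting the picture lag behind the sound.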

---

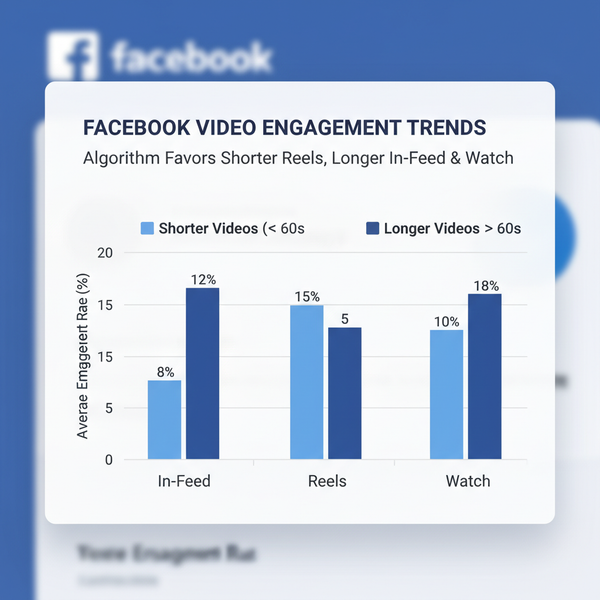

Role of Codecs and Streaming Technology in Modern Video Delivery

Streaming platforms like Netflix, YouTube, and Twitch rely on:

- Codecs: Transform raw video into formats suitable for bandwidth constraints.

- Adaptive bitrate streaming: Adjusts quality according to network conditions.

- Content delivery networks (CDNs): Distribute video files geographically to minimize latency.

These technologies ensure seamless playback even across diverse devices and varying internet speeds.
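As an illustration of adaptive bitrate streaming, the sketch below picks the highest-quality rendition that fits within the measured bandwidth. The `RENDITIONS` ladder, its bitrates, and the 0.8 safety factor are made-up values for the example; real services use their own ladders and more elaborate heuristics.

```python
# Hypothetical bitrate ladder: (label, required bandwidth in kbps), low to high.
RENDITIONS = [
    ("360p", 800),
    ("720p", 2500),
    ("1080p", 5000),
    ("2160p", 16000),
]


def pick_rendition(measured_kbps: float, safety: float = 0.8) -> str:
    """Choose the best rendition whose bitrate fits within a safety margin."""
    budget = measured_kbps * safety
    best = RENDITIONS[0]  # fall back to the lowest quality if nothing fits
    for rendition in RENDITIONS:
        if rendition[1] <= budget:
            best = rendition
    return best[0]


for bandwidth in (500, 4000, 25000):
    print(f"{bandwidth} kbps -> {pick_rendition(bandwidth)}")
```

A player would rerun this choice as its bandwidth estimate changes, switching renditions at segment boundaries.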

---

Video File Formats and Their Uses

A video file format wraps the encoded video stream along with metadata and audio tracks.

Popular formats:

- MP4: Near-universal compatibility; commonly carries H.264 or HEVC video, with the codec rather than the container doing the compression.

- MKV: Supports multiple audio tracks and subtitles.

- MOV: Preferred in professional editing on macOS.

- WebM: Optimized for web; uses VP8, VP9, or AV1 codecs.

Choosing the right format depends on the intended use, compatibility, and desired balance between size and quality.
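Because a container is a wrapper, it can often be recognized from its first few bytes, whatever the file extension says. The sketch below checks two well-known signatures: the `ftyp` box used by MP4-family files (with the `qt  ` brand indicating QuickTime MOV) and the EBML header used by Matroska and WebM. It is a simplified check, and `sniff_container` is an illustrative name: older MOV files may lack an `ftyp` box, and telling MKV from WebM requires parsing further into the header.

```python
def sniff_container(header: bytes) -> str:
    """Guess the container family from the first bytes of a video file."""
    # ISO base media files start with a box: 4-byte size, then the type "ftyp",
    # then a 4-byte major brand.
    if len(header) >= 12 and header[4:8] == b"ftyp":
        brand = header[8:12]
        return "MOV (QuickTime)" if brand == b"qt  " else "MP4 family"
    # Matroska and WebM files start with the EBML magic number.
    if header[:4] == b"\x1a\x45\xdf\xa3":
        return "Matroska or WebM"
    return "unknown"


print(sniff_container(b"\x00\x00\x00\x18ftypisom\x00\x00\x02\x00"))
print(sniff_container(b"\x1a\x45\xdf\xa3\x9f\x42\x86\x81"))
```

To use it on a real file, read the first dozen bytes in binary mode (for example `open(path, "rb").read(12)`) and pass them in.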

---

Emerging Technologies: HDR, 8K, VR Video

The future is ushering in richer and more immersive video experiences:

- HDR (High Dynamic Range): Expands contrast and color gamut for lifelike visuals.

- 8K resolution: Offers incredible detail, useful for large displays and post-production cropping.

- Virtual reality (VR) video: Records 360-degree environments, allowing viewers to explore scenes interactively.

These innovations require advanced capture, storage, and playback capabilities, as well as robust data pipelines.
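For 360-degree video, one common way to store the full sphere in an ordinary rectangular frame is the equirectangular projection, where horizontal position encodes the viewing direction's yaw and vertical position encodes its pitch. The sketch below assumes that projection, with yaw 0 and pitch 0 mapped to the center of the frame; other layouts (such as cube maps) exist, and the function name is illustrative.

```python
from math import floor


def direction_to_pixel(yaw_deg: float, pitch_deg: float,
                       width: int, height: int) -> tuple[int, int]:
    """Map a viewing direction to a pixel in an equirectangular frame."""
    # Yaw spans 360 degrees across the width; pitch spans 180 across the height.
    u = (yaw_deg / 360.0 + 0.5) * width
    v = (0.5 - pitch_deg / 180.0) * height
    x = floor(u) % width                      # yaw wraps around horizontally
    y = min(max(floor(v), 0), height - 1)     # pitch is clamped at the poles
    return x, y


# Looking straight ahead lands in the middle of a 3840x1920 frame.
print(direction_to_pixel(0, 0, 3840, 1920))
```

A VR player runs a mapping like this for every pixel of the viewport as the viewer turns their head, which is part of why 360-degree playback is demanding.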

---

Conclusion: The Future of Video Technology

From flickering film reels to breathtaking VR landscapes, the science of motion perception and the engineering of video capture and delivery continue to advance rapidly.

The interplay of frame rates, resolution, color encoding, and compression forms the foundation for understanding how videos work. Emerging formats and ultra-high-definition standards are pushing boundaries, promising even more lifelike and immersive experiences.

As storage capacities grow, processing power increases, and network infrastructures improve, video will keep moving closer to lifelike realism, blurring the lines between virtual and physical worlds.