How to Build Your Own MCP Server with Python

# Building an MCP Server in Python with FastMCP

Artificial intelligence is evolving rapidly. Modern AI models can **reason**, **write**, **code**, and **analyze** in ways that once seemed impossible.

However, there’s still one important limitation: **context**.

Most AI models cannot directly access your **local files**, **APIs**, or **real‑time data**. Their knowledge is limited to their training data and whatever you put in the prompt.

The [Model Context Protocol](https://www.turingtalks.ai/p/how-model-context-protocol-works) (**MCP**) solves this challenge. MCP securely connects AI models to your tools, APIs, and systems through **MCP servers**.

In this guide, you’ll learn to **build your own MCP server using Python**, step‑by‑step.

By the end, you’ll have a server capable of:

- **Adding numbers**

- **Returning random secret words**

- **Fetching live weather data**

You’ll also learn how to **deploy your MCP server to the cloud**.

---

## 📚 Table of Contents

- [Understanding the Model Context Protocol](#understanding-the-model-context-protocol)

- [Setting Up Your Environment](#setting-up-your-environment)

- [Creating the Project](#creating-the-project)

- [Configuring Logging](#configuring-logging)

- [Creating the MCP Server](#creating-the-mcp-server)

- [Defining Tools](#defining-tools)

- [Example 1: Adding Two Numbers](#example-1-adding-two-numbers)

- [Example 2: Returning a Random Secret Word](#example-2-returning-a-random-secret-word)

- [Example 3: Fetching Weather Data](#example-3-fetching-weather-data)

- [Running the Server](#running-the-server)

- [Testing the Tools](#testing-the-tools)

- [Deploying to Sevalla](#deploying-to-sevalla)

- [Why Build an MCP Server?](#why-build-an-mcp-server)

- [Expanding the Server](#expanding-the-server)

- [Conclusion](#conclusion)

---

## Understanding the Model Context Protocol

**MCP** is an open standard defining how AI models communicate with external systems.

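It works like an API, but one designed specifically for AI assistants: a client (the AI application) discovers the tools a server offers and calls them with structured arguments.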

With MCP, you can:

- Build a server so ChatGPT can read local files

- Expose internal APIs to AI models

- Make Python functions callable by AI

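MCP keeps all communication **structured**: messages are JSON-RPC 2.0 requests and responses, so every tool call has a well-defined shape. The protocol supports several transports. This guide uses [Server-Sent Events (SSE)](https://developer.mozilla.org/en-US/docs/Web/API/Server-sent_events/Using_server-sent_events), which lets the server stream messages to the client over one long-lived HTTP connection instead of the client polling for updates.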
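
To make the SSE part concrete, here is a minimal sketch of how a client might split a raw event stream into events. The `parse_sse` helper and the sample stream are illustrative assumptions, not part of any MCP library, and the parser handles only the `event` and `data` fields.

```python
def parse_sse(stream: str) -> list:
    """Split a raw SSE stream into a list of {"event", "data"} dicts."""
    events = []
    # Events are separated by a blank line.
    for block in stream.split("\n\n"):
        event_type, data_lines = "message", []  # "message" is the default type
        for line in block.splitlines():
            if line.startswith(":"):
                continue  # comment line, often used as a keep-alive
            field, _, value = line.partition(":")
            value = value.removeprefix(" ")  # one optional leading space is dropped
            if field == "event":
                event_type = value
            elif field == "data":
                data_lines.append(value)
        # Blocks without any data lines do not produce an event.
        if data_lines:
            events.append({"event": event_type, "data": "\n".join(data_lines)})
    return events


# Hypothetical stream: a named event followed by a two-line default event.
sample = (
    "event: endpoint\ndata: /messages/?session_id=abc\n\n"
    "data: line one\ndata: line two\n\n"
)
for ev in parse_sse(sample):
    print(ev)
```

A real client would read the stream incrementally from the HTTP response rather than from one complete string, but the framing rules are the same.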

---

## Setting Up Your Environment

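**Requirements:**

- **Python 3.10+** (the MCP Python SDK does not support older versions)

Install the required packages:

```bash
pip install mcp requests
```

We’ll use:

- **FastMCP**: the high-level server class that ships with the `mcp` package and is imported from `mcp.server.fastmcp`

- **Requests**: for API calls (e.g., weather data)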

Source code available on [GitHub](https://github.com/sevalla-templates/python-demo-mcp-server).

---

## Creating the Project

Create `server.py`:

```python
import logging
import os
import random
import sys

import requests
from mcp.server.fastmcp import FastMCP
```

---

## Configuring Logging

```python
name = "demo-mcp-server"

logging.basicConfig(
    level=logging.INFO,
    format='%(name)s - %(levelname)s - %(message)s',
    handlers=[logging.StreamHandler()]
)

logger = logging.getLogger(name)
```

With this format, each log line shows the logger name, the level, and the message:

```text
demo-mcp-server - INFO - Tool called: add(3, 5)
```
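
To check the format without starting the server, a small sketch like this sends one record through the same format string into an in-memory buffer. The `demo_logger` and `buffer` names are illustrative choices, not part of the server code.

```python
import io
import logging

LOG_FORMAT = "%(name)s - %(levelname)s - %(message)s"

# Write log records into memory instead of the terminal.
buffer = io.StringIO()
handler = logging.StreamHandler(buffer)
handler.setFormatter(logging.Formatter(LOG_FORMAT))

demo_logger = logging.getLogger("demo-mcp-server")
demo_logger.setLevel(logging.INFO)
demo_logger.addHandler(handler)
demo_logger.propagate = False  # keep the demo record away from the root logger

demo_logger.info("Tool called: add(3, 5)")
demo_logger.debug("not shown")  # DEBUG is below INFO, so it is filtered out

print(buffer.getvalue(), end="")
```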

---

## Creating the MCP Server

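```python
port = int(os.environ.get('PORT', 8080))

# If your SDK version raises a TypeError on `logger`, drop that argument;
# the tools below log through `logger` directly either way.
mcp = FastMCP(name, logger=logger, port=port)
```

- The port defaults to `8080` if the `PORT` environment variable is not set

- The `FastMCP` object holds the server's settings and registered tools; it only starts serving when you call `mcp.run()`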
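
If `PORT` might be set to something unexpected, a slightly more defensive version of the lookup could look like the sketch below. The `resolve_port` helper is a hypothetical addition, not part of FastMCP or the original template.

```python
import os
from typing import Mapping, Optional


def resolve_port(env: Optional[Mapping[str, str]] = None, default: int = 8080) -> int:
    """Return the port from PORT, or the default when it is unset or invalid."""
    env = os.environ if env is None else env
    raw = env.get("PORT")
    if raw is None:
        return default
    try:
        port = int(raw)
    except ValueError:
        return default
    # Valid TCP ports are 1-65535.
    return port if 1 <= port <= 65535 else default


print(resolve_port({}))                # unset: falls back to the default
print(resolve_port({"PORT": "9000"}))  # valid value is used
print(resolve_port({"PORT": "oops"}))  # not a number: falls back to the default
```

Falling back silently keeps the server starting in more situations. Raising an error instead would surface a misconfigured environment earlier, so which behavior is better depends on your deployment.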

---

## Defining Tools

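Every function decorated with `@mcp.tool()` becomes a tool that clients can discover and call. FastMCP uses the function's type hints to build the input schema and its docstring as the tool description.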
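
To build intuition for what a decorator like this does, here is a toy registry in plain Python. It is not FastMCP's actual implementation; `ToolRegistry` and its methods are made up for illustration. It only shows the general idea of recording a function under its name together with its docstring and type hints.

```python
import inspect
from typing import Any, Callable, Dict


class ToolRegistry:
    """Toy stand-in for a tool server: stores functions by name."""

    def __init__(self) -> None:
        self.tools: Dict[str, Callable[..., Any]] = {}

    def tool(self) -> Callable[[Callable[..., Any]], Callable[..., Any]]:
        def decorator(func: Callable[..., Any]) -> Callable[..., Any]:
            self.tools[func.__name__] = func
            return func  # the function itself is left unchanged
        return decorator

    def describe(self, name: str) -> dict:
        """Summarize a tool from its signature and docstring."""
        func = self.tools[name]
        params = inspect.signature(func).parameters
        return {
            "name": name,
            "description": inspect.getdoc(func),
            "parameters": {p: v.annotation.__name__ for p, v in params.items()},
        }

    def call(self, name: str, arguments: dict) -> Any:
        """Look up a tool by name and call it with keyword arguments."""
        return self.tools[name](**arguments)


registry = ToolRegistry()


@registry.tool()
def add(a: int, b: int) -> int:
    """Add two numbers"""
    return a + b


print(registry.describe("add"))
print(registry.call("add", {"a": 5, "b": 7}))
```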

### Example 1: Adding Two Numbers

```python
@mcp.tool()
def add(a: int, b: int) -> int:
    """Add two numbers"""
    logger.info(f"Tool called: add({a}, {b})")
    return a + b
```

---

### Example 2: Returning a Random Secret Word

```python
@mcp.tool()
def get_secret_word() -> str:
    """Get a random secret word"""
    logger.info("Tool called: get_secret_word()")
    return random.choice(["apple", "banana", "cherry"])
```

---

### Example 3: Fetching Weather Data

```python
@mcp.tool()
def get_current_weather(city: str) -> str:
    """Get current weather for a city"""
    logger.info(f"Tool called: get_current_weather({city})")
    try:
        endpoint = "https://wttr.in"
        response = requests.get(f"{endpoint}/{city}", timeout=10)
        response.raise_for_status()
        return response.text
    except requests.RequestException as e:
        logger.error(f"Error fetching weather data: {str(e)}")
        return f"Error fetching weather data: {str(e)}"
```
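
City names can contain spaces or non-ASCII characters, so it can help to percent-encode them before building the request URL. The sketch below does that and also asks wttr.in for its compact one-line output through the `format=3` query parameter. The `build_weather_url` helper is a hypothetical name, not part of the original server.

```python
from urllib.parse import quote, urlencode

WTTR_ENDPOINT = "https://wttr.in"


def build_weather_url(city: str, one_line: bool = True) -> str:
    """Build a wttr.in URL with the city safely percent-encoded."""
    city = city.strip()
    if not city:
        raise ValueError("city must not be empty")
    # safe="" also encodes "/" so the city cannot add extra path segments.
    url = f"{WTTR_ENDPOINT}/{quote(city, safe='')}"
    if one_line:
        url += "?" + urlencode({"format": "3"})
    return url


print(build_weather_url("London"))
print(build_weather_url("New York"))
```

Inside `get_current_weather`, the returned URL would take the place of the `f"{endpoint}/{city}"` expression passed to `requests.get`.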

---

## Running the Server

```python
if __name__ == "__main__":
    logger.info(f"Starting MCP Server on port {port}...")
    try:
        mcp.run(transport="sse")
    except Exception as e:
        logger.error(f"Server error: {str(e)}")
        sys.exit(1)
    finally:
        logger.info("Server terminated")
```

Run:

```bash
python server.py
```

---

## Testing the Tools

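In an **MCP-compatible client** (for example, the MCP Inspector), call the `add` tool with `a = 5` and `b = 7`. On the wire, the client sends a JSON-RPC `tools/call` request:

```json
{
  "jsonrpc": "2.0",
  "id": 1,
  "method": "tools/call",
  "params": {
    "name": "add",
    "arguments": {"a": 5, "b": 7}
  }
}
```

The response carries the result as text content and looks roughly like this:

```json
{
  "jsonrpc": "2.0",
  "id": 1,
  "result": {
    "content": [{"type": "text", "text": "12"}],
    "isError": false
  }
}
```

The server does not expose a plain REST route per tool, so a single cURL request cannot call a tool. You can still use cURL to check that the SSE endpoint is reachable (assuming FastMCP's default `/sse` path):

```bash
curl -N http://localhost:8080/sse
```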
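
MCP clients and servers exchange JSON-RPC 2.0 messages, and a tool invocation uses the `tools/call` method. Here is a small Python sketch that builds such a request and reads the text out of a reply. The helper names and the sample response are illustrative assumptions; a real client library handles this for you.

```python
import json
from itertools import count

_ids = count(1)  # JSON-RPC requests carry an id so replies can be matched


def make_tool_call(name: str, arguments: dict) -> dict:
    """Build a JSON-RPC 2.0 request that calls an MCP tool."""
    return {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


def read_text_result(response: dict) -> str:
    """Join the text items of a tools/call response, raising on errors."""
    if "error" in response:
        raise RuntimeError(response["error"].get("message", "unknown error"))
    result = response["result"]
    text = "".join(c["text"] for c in result["content"] if c.get("type") == "text")
    if result.get("isError"):
        raise RuntimeError(text)
    return text


request = make_tool_call("add", {"a": 5, "b": 7})
print(json.dumps(request, indent=2))

# Hand-written sample of what a server might send back for that request.
sample_response = {
    "jsonrpc": "2.0",
    "id": request["id"],
    "result": {"content": [{"type": "text", "text": "12"}], "isError": False},
}
print(read_text_result(sample_response))
```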

---

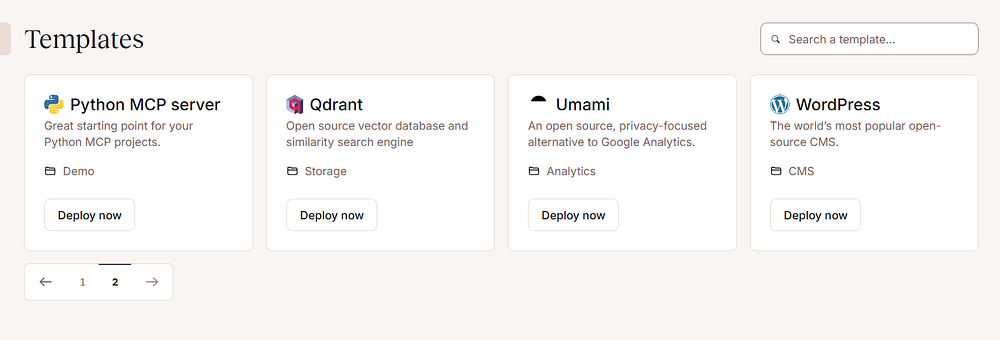

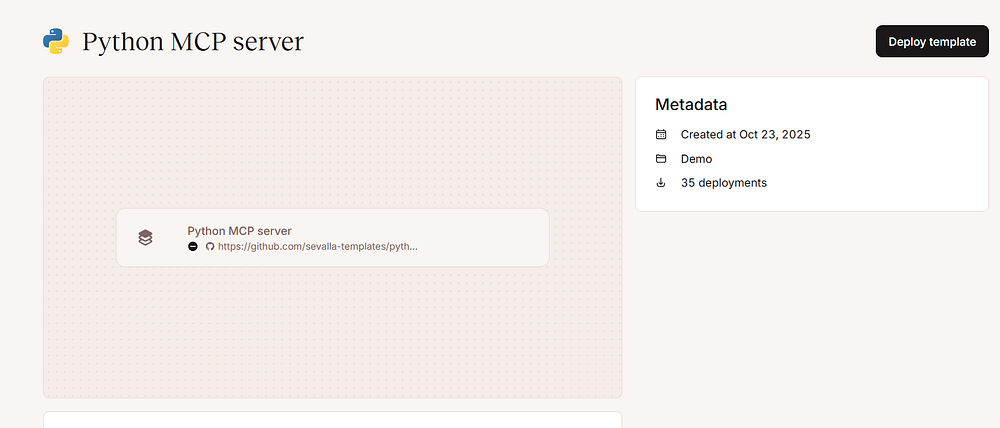

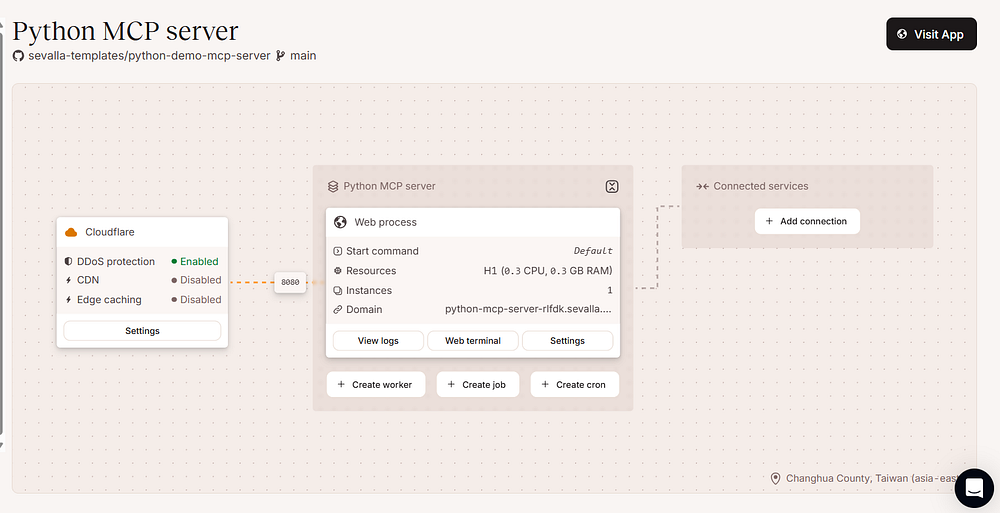

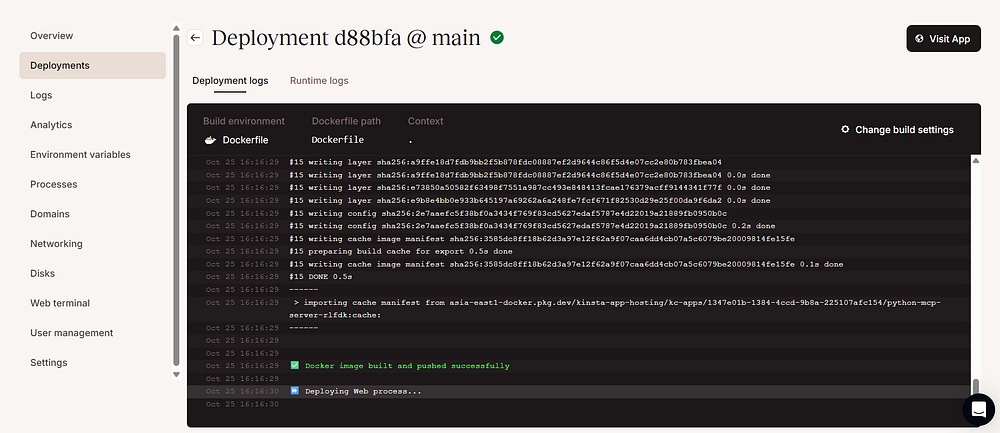

## Deploying to Sevalla

[Sevalla](https://sevalla.com/) offers quick, affordable MCP deployment.

Steps:

1. Log in to [Sevalla](https://app.sevalla.com/login)

2. Open **Templates**

3. Select **Python MCP Server**

4. Click **Deploy Template**

5. Wait for the green checkmark ✅

6. Click **Visit app** and use the URL it opens as your production server address

---

## Why Build an MCP Server?

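- **Direct AI-to-database connectivity**: let a model query your data through tools you define

- **Automation**: let a model trigger scripts and workflows on your behalf

- **Data governance**: decide exactly which data and actions are exposed

- **Rapid experimentation**: add a new capability by writing one Python function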

---

## Expanding the Server

Ideas:

- File operations

- Database queries

- Integrations with GitHub, Slack APIs

- System monitoring

Each becomes an MCP tool available to your AI.
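
As one example of the file-operations idea, a read-only file tool could start from a function like the sketch below. `BASE_DIR` and `read_text_file` are hypothetical names. The main design choice is to resolve the requested path and refuse anything outside the allowed directory.

```python
from pathlib import Path

# Assumption: only files under this directory may be read.
BASE_DIR = Path.cwd()


def read_text_file(
    relative_path: str, base_dir: Path = BASE_DIR, max_bytes: int = 100_000
) -> str:
    """Read a UTF-8 text file located inside base_dir."""
    base = base_dir.resolve()
    target = (base / relative_path).resolve()
    # Reject paths such as "../secrets.txt" that escape the base directory.
    if base not in target.parents:
        raise ValueError(f"{relative_path!r} is outside the allowed directory")
    if not target.is_file():
        raise FileNotFoundError(relative_path)
    # Cap the size so a huge file cannot flood the model's context.
    data = target.read_bytes()[:max_bytes]
    return data.decode("utf-8", errors="replace")
```

In `server.py`, decorating this function with `@mcp.tool()` would expose it to clients like the other tools.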

---

## Conclusion

You now know how to:

- Build an MCP server with **FastMCP**

- Add functional tools

- Run and test locally

- Deploy via **Sevalla**

The **Model Context Protocol** lets AI models interact with real‑world systems. With just a few Python functions, you can expand your AI’s capabilities in meaningful, safe ways.