How to Report a Facebook Post on Desktop and Mobile

Learn when and how to report a Facebook post on desktop and mobile, including steps for posts from pages, groups, and profiles.

Introduction to Facebook Reporting Tools

Facebook hosts billions of posts from users, pages, and groups every day. While most are harmless, some may contain harmful, misleading, or inappropriate material. Thankfully, Facebook offers built‑in reporting tools that let you flag questionable content for moderator review.

Learning how to report a Facebook post is essential for maintaining a safe online environment, protecting your mental wellbeing, and supporting Facebook’s Community Standards. This guide explains why and when to report a post, gives step‑by‑step instructions for desktop and mobile, shows how to report posts from different sources, and explains how to track your report’s status afterward.

---

Reasons You Might Need to Report a Facebook Post

You may encounter posts that break Facebook rules or local laws. Reporting is appropriate in scenarios such as:

- Harassment or bullying – Posts that target, demean, or threaten individuals.

- Hate speech or discrimination – Content promoting prejudice or violence against groups.

- Graphic violence – Images or videos showing extreme violence.

- Misinformation – False news or health information that may harm others.

- Spam or scams – Fraudulent links, clickbait, or multi‑level marketing schemes.

- Sexual content – Explicit material prohibited by Facebook policy.

Acting quickly helps prevent the spread of harmful content.

---

Understanding Facebook’s Community Standards

Before you report, it’s useful to know what Facebook considers a violation. The Community Standards outline rules for acceptable behavior and content designed to:

- Protect user safety.

- Promote respectful communication.

- Prevent harmful misinformation.

The main policy categories include:

- Violence and criminal behavior

- Safety (self‑injury, child exploitation)

- Objectionable content

- Integrity and authenticity

- Respecting intellectual property

Violations can lead to post removal or account suspension.

---

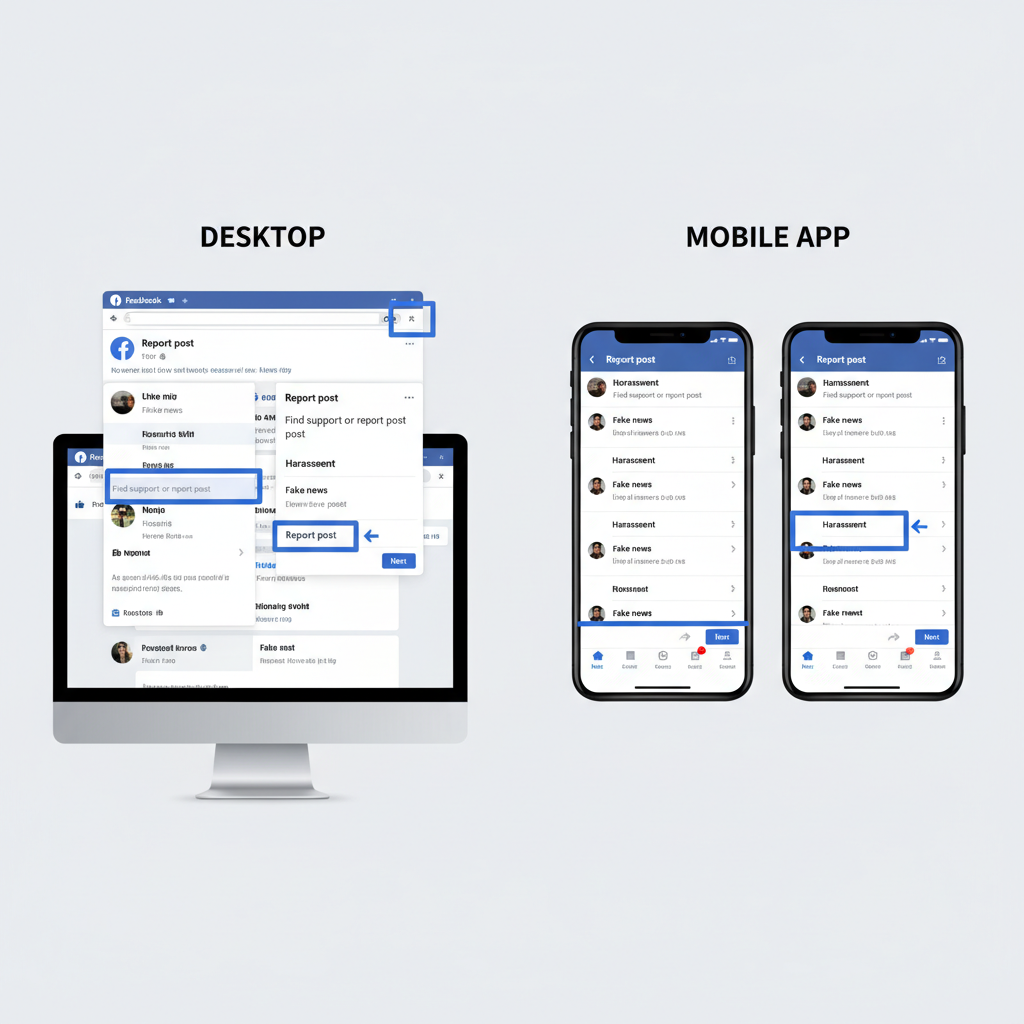

Step‑by‑Step Guide to Reporting a Post on Desktop

Reporting from a desktop computer is simple:

1. Locate the post – Navigate to the post you believe violates Facebook’s standards.

2. Open the options menu – Click the three dots (•••) in the upper‑right corner of the post.

3. Select “Report post” – Choose Report post from the dropdown menu.

4. Pick a reason – Facebook presents categories; select the one that best fits.

5. Submit your report – Follow the on‑screen prompts, answer any follow‑up questions, and submit.

Example (reporting hate speech):

Step 3: Select "Report post"

Step 4: Choose "Hate speech"

Step 5: Click "Submit"

---

Step‑by‑Step Guide to Reporting a Post on Mobile App

On the mobile app (iOS and Android), the process is similar but optimized for touchscreens:

1. Find the post – Scroll through your feed or open the relevant profile or Page.

2. Tap the options icon – Usually three dots (•••) in the upper‑right corner of the post.

3. Tap “Report post” – In some app versions this option is labeled “Find support or report post.” Facebook then shows report categories.

4. Select the relevant category – Choose the most accurate match.

5. Confirm and submit – Send your report for review.

App layouts can vary slightly depending on the version.

---

How to Report Posts from Pages, Groups, and Profiles

Facebook posts originate from different sources, and the reporting steps differ slightly for each:

| Source | Reporting Steps | Notes |
|---|---|---|
| Page Posts | Open post → Click/Tap three dots → Select “Report post” | Page admins may be notified. |
| Group Posts | Open post → Options menu → “Report to admin” or “Report post” | You can report to both the group admin and Facebook. |
| Profile Posts | Go to profile → Find post → Options → “Report post” | Reports are reviewed by Facebook’s moderation team. |

Choosing the right channel can speed up resolution, especially in private groups, where a group admin can remove a post that breaks the group’s rules.

---

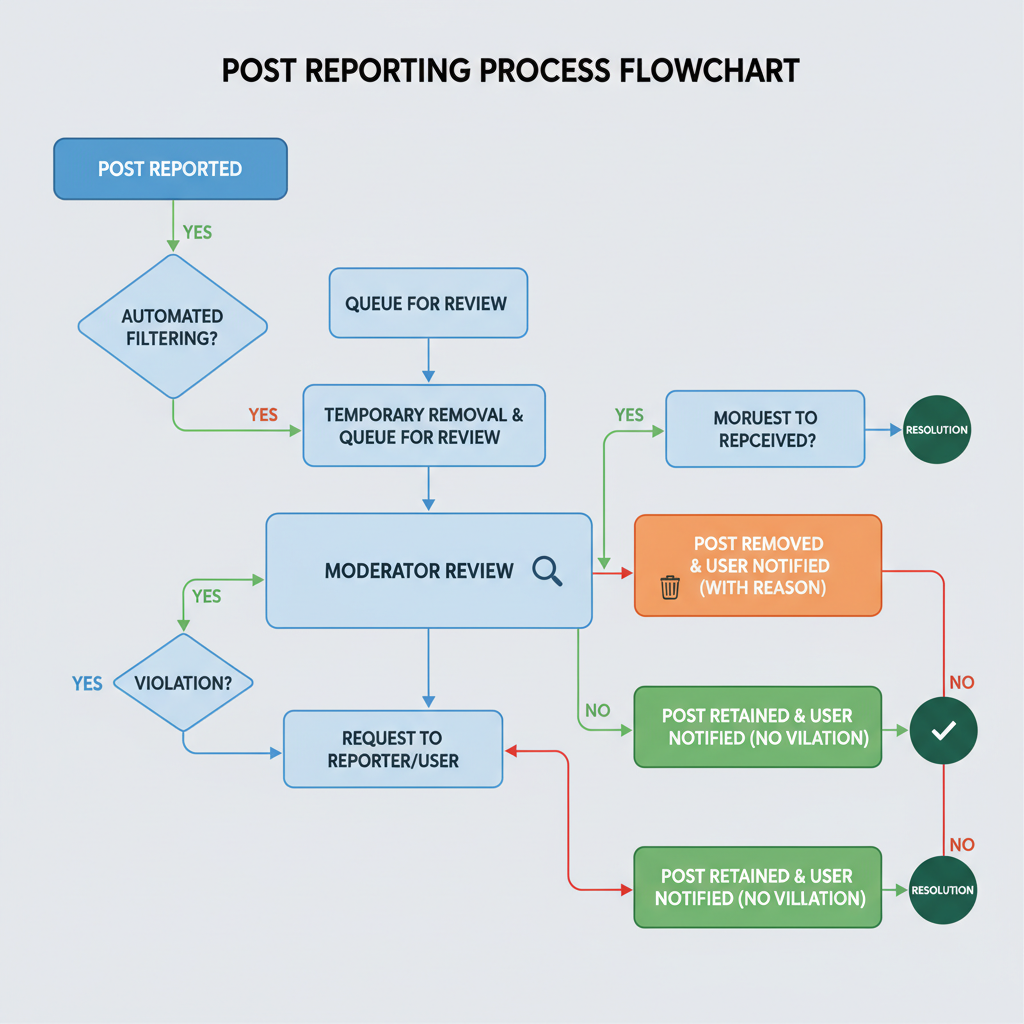

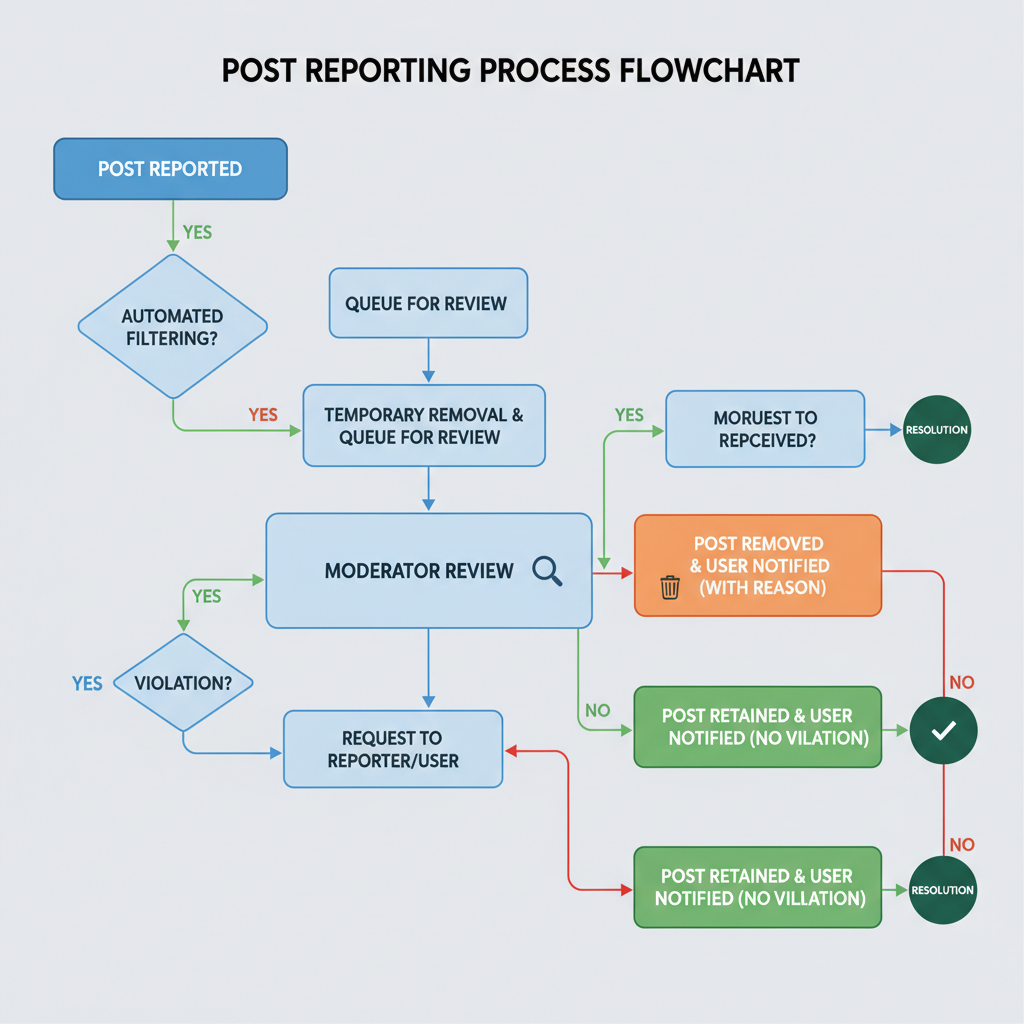

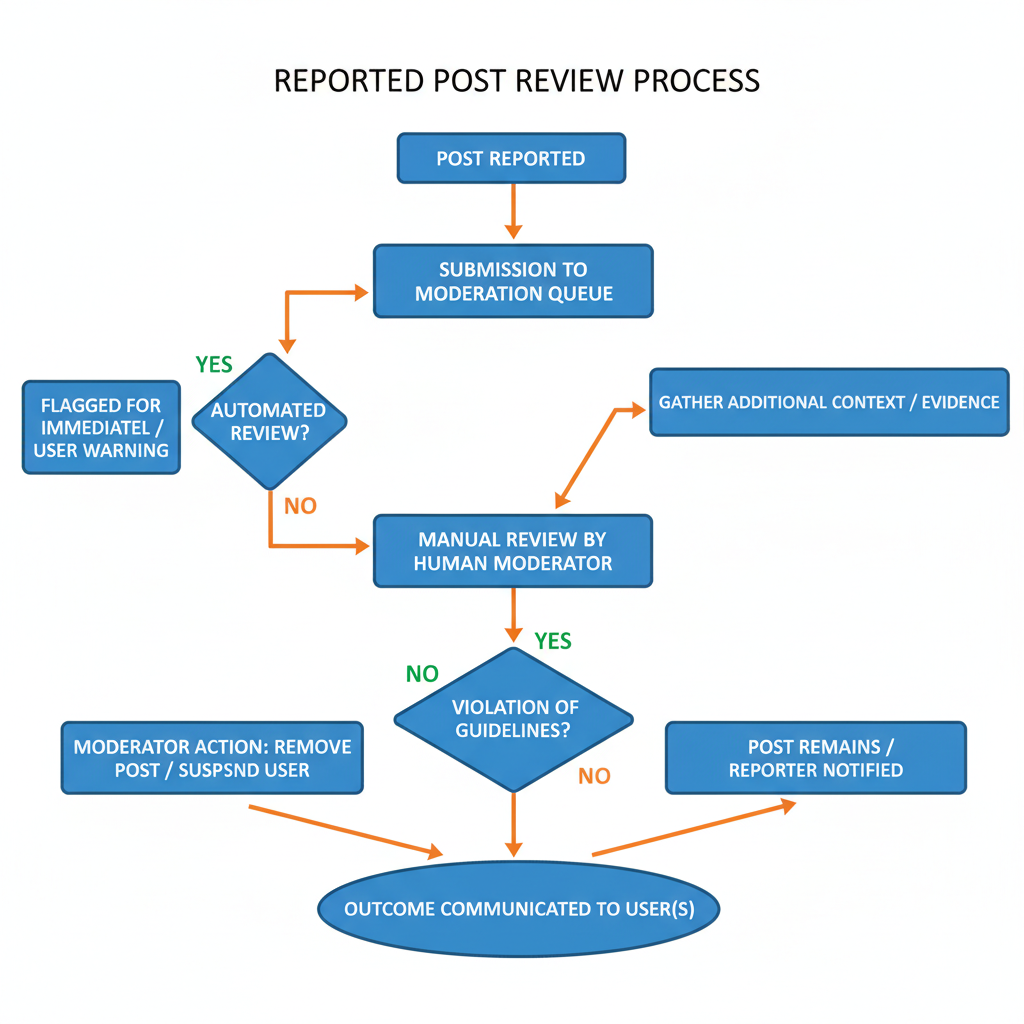

What Happens After You Report a Post

After submitting a report:

- Initial review – Automated systems and human moderators assess your concern.

- Action taken – Violating posts may be removed; accounts may be warned or disabled.

- Notification – A resolution message appears in your Support Inbox.

If Facebook finds no violation, the post remains live. Your report is kept confidential.

---

Tips for Effective and Accurate Reporting

Boost your report’s impact by:

- Being specific – Select the category that best matches the violation.

- Avoiding false reports – Report only content you believe genuinely violates the standards; repeated false reports may undermine your credibility.

- Adding context – Provide details when prompted.

- Acting promptly – Report problems as soon as you see them, before the content spreads further.

Remember: reporting exists to keep communities safe, not to suppress differing opinions.

---

How to Track the Status of Your Report

You can check the outcome of each report:

- Open “Settings & privacy” – Click your profile picture, then choose “Settings & privacy.”

- Select “Support Inbox” – Lists recent reports.

- View results – See whether action was taken for each.

The Support Inbox is your central hub for content‑violation updates.

---

Additional Safety and Privacy Measures on Facebook

Beyond reporting posts, you can protect yourself by:

- Adjusting privacy settings – Control who views your content or contacts you.

- Blocking or unfollowing – Remove toxic sources from your feed.

- Enabling two‑factor authentication – Secure access to your account.

- Reviewing activity regularly – Check your Activity Log to make sure nothing was posted from your account without your knowledge.

These proactive steps help you enjoy Facebook with greater peace of mind.

---

Conclusion and Next Steps

Knowing how to report a Facebook post lets you play an active role in maintaining respectful digital spaces. Whether you use desktop or mobile, the process is quick, confidential, and central to enforcing the Community Standards.

Understand the policies, use precise categories when reporting, and follow up in the Support Inbox. By taking these actions, you contribute to a safer community for all users.

If you found this guide helpful, share it with friends to encourage responsible content reporting on Facebook.