Step-by-Step Guide to Report a Facebook Post

Learn how to report a Facebook post step-by-step on desktop or mobile, choose the right category, and understand the review and follow-up process.

Reporting inappropriate content on Facebook is an important way to help maintain a safe, respectful online community. If you’ve come across harassment, hate speech, spam, or misinformation, knowing the exact steps to **report a Facebook post** helps your concerns reach the review team in the right category and be assessed against Facebook’s Community Standards.

---

## Understanding Facebook’s Community Standards and Reporting Guidelines

Before starting the reporting process, it helps to know what Facebook defines as a policy violation. Facebook’s **Community Standards** detail acceptable behavior and content, with enforcement aimed at keeping the platform secure for all users.

**Common violations include:**

- Hate speech or discriminatory language.

- Graphic violence or explicit content.

- Harassment or bullying.

- False information affecting safety, health, or elections.

- Spam or scams.

**Tip:** Knowing these guidelines helps you select the correct category when reporting, which can improve the chances of Facebook taking meaningful action.

---

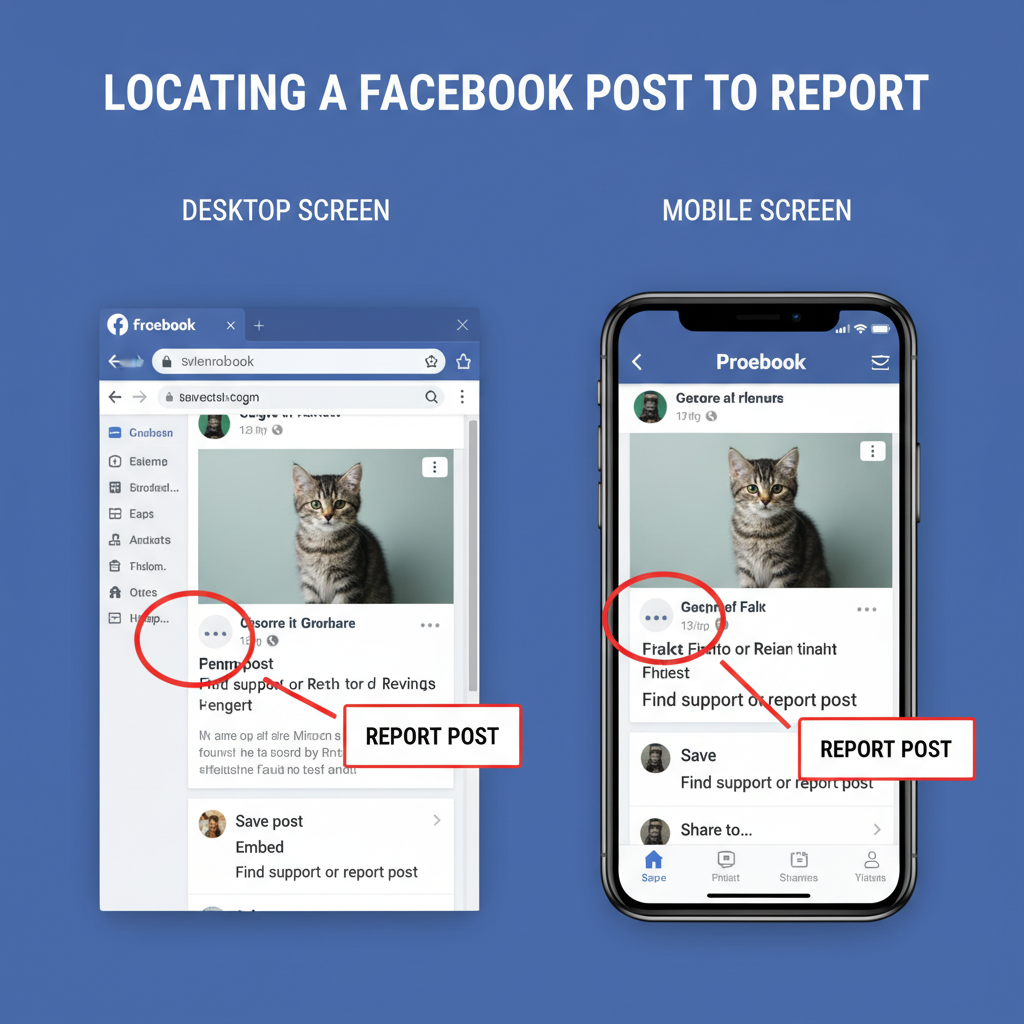

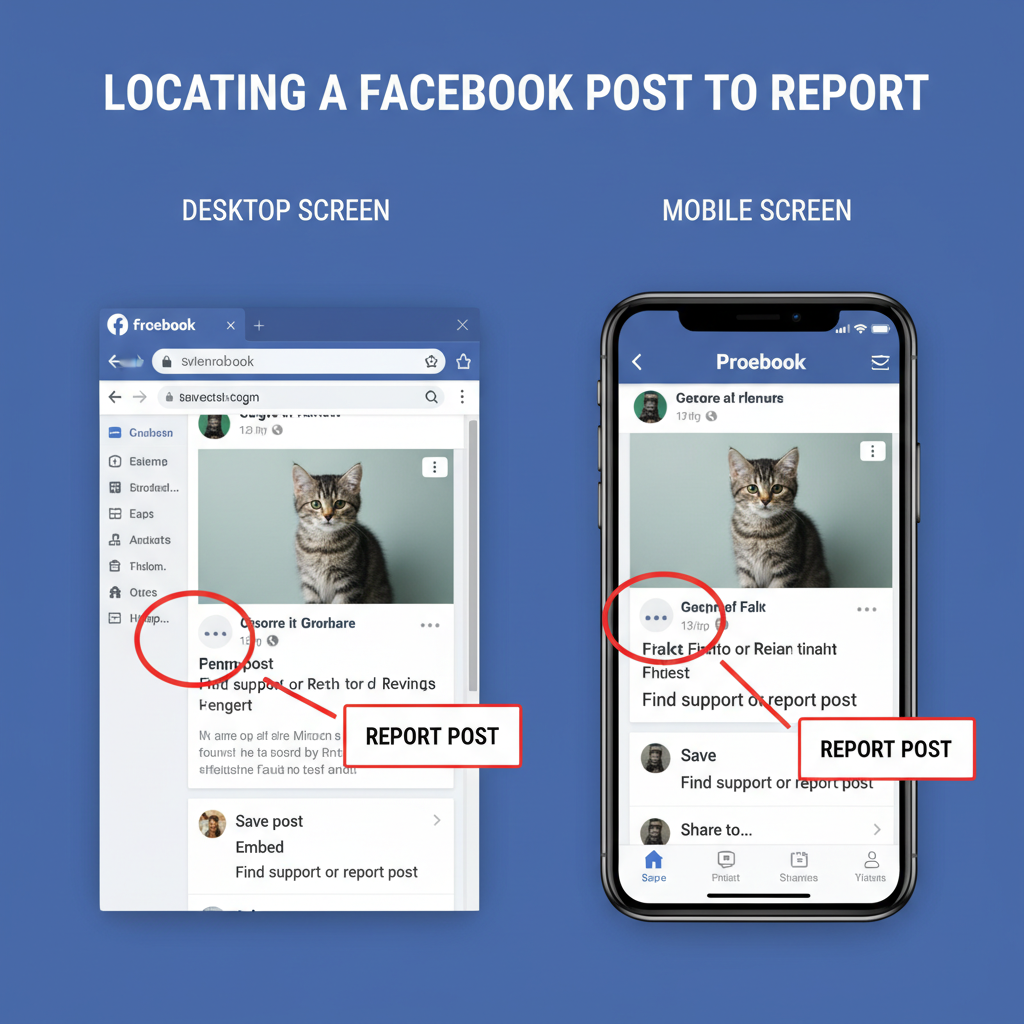

## Locate the Post You Want to Report (Desktop and Mobile Instructions)

Finding the right post is the foundation for an accurate report.

### On Desktop

1. Navigate to Facebook in your web browser.

2. Use the search bar to find the post or scroll your feed/timeline.

3. Identify and open the exact post you want to report.

### On Mobile App

1. Open the Facebook app.

2. Scroll through your news feed or visit the relevant profile/page.

3. Tap the post to open and review it in full.

---

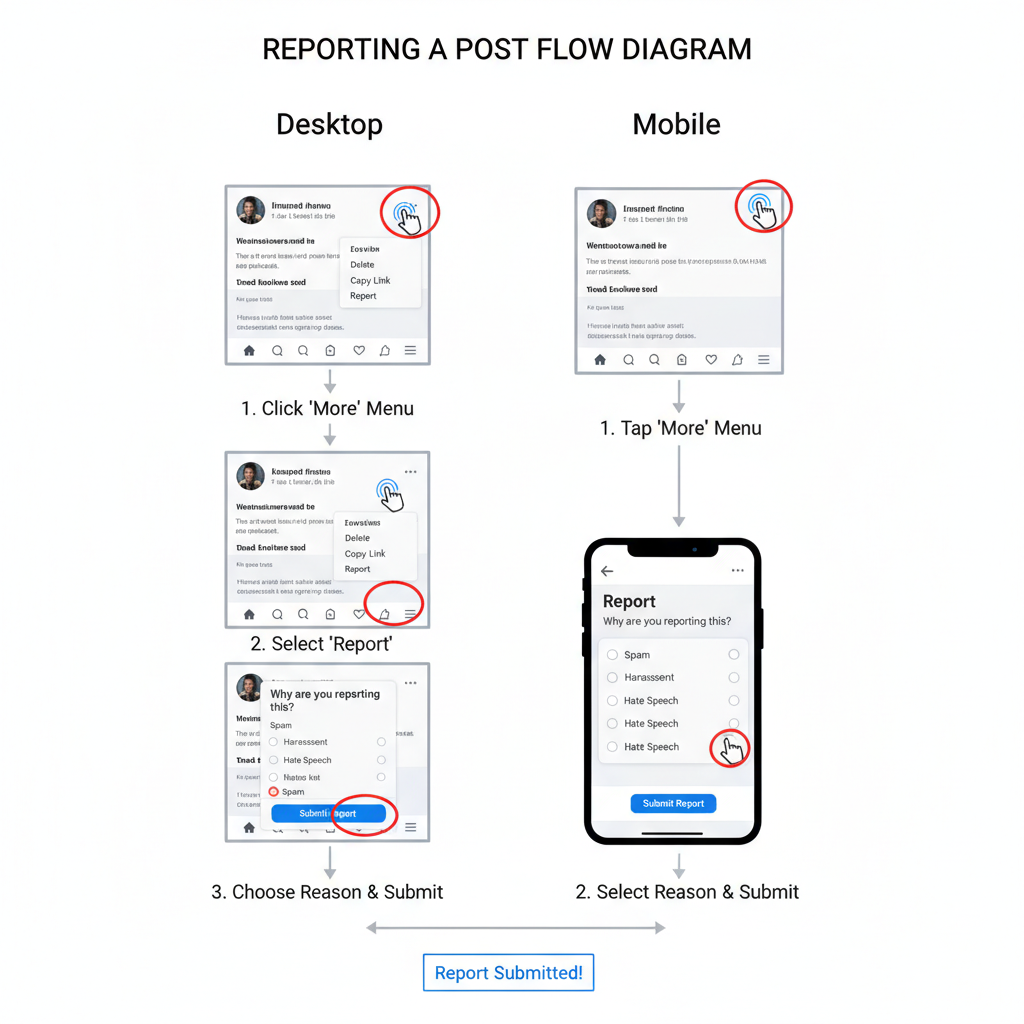

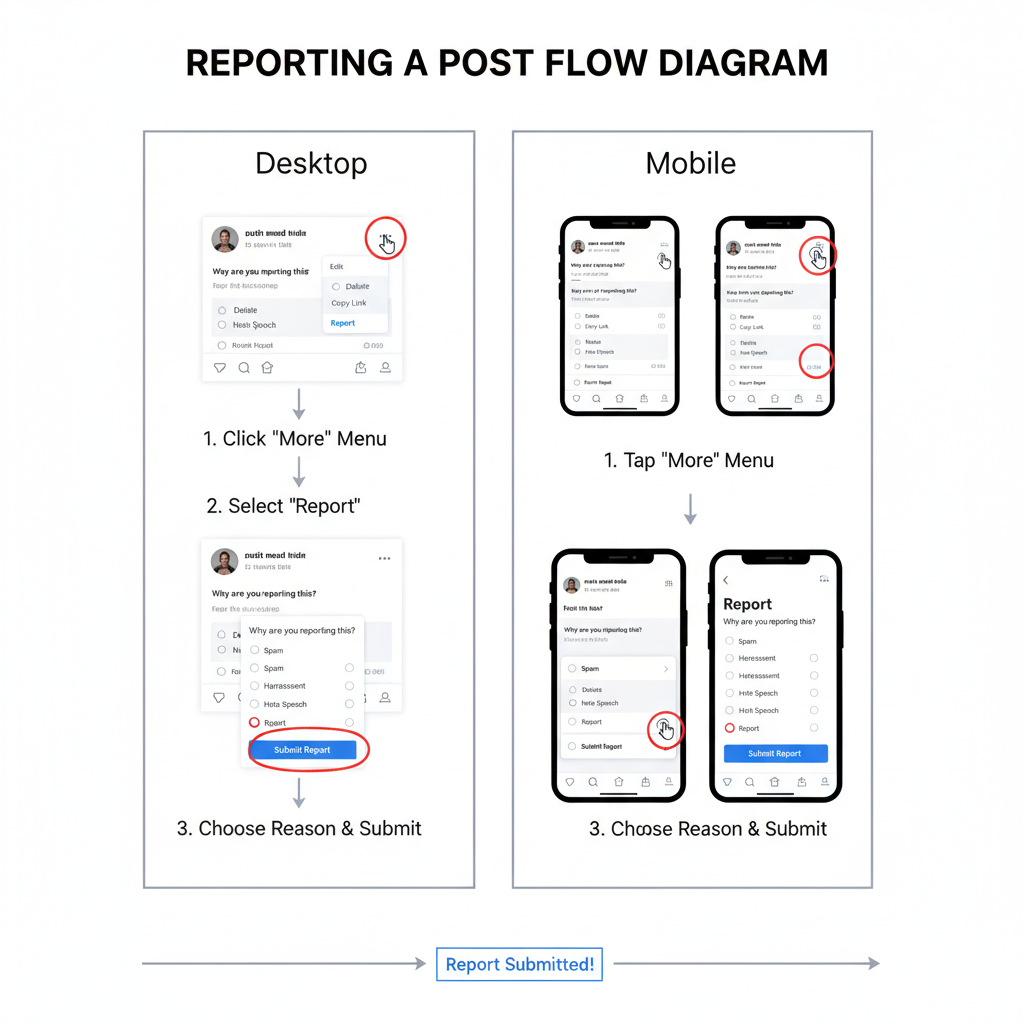

## Step-by-Step Guide to Reporting a Post on Desktop

Once you have located the post via your desktop browser:

1. Hover over the post with your mouse pointer.

2. Click the **three-dot menu** (“More options”) at the top right of the post.

3. Select **Report post** from the drop-down.

4. Choose the most accurate reporting reason.

5. Follow the on-screen guidance to submit.

**Example Desktop Flow:** Post → Three-dot menu → Report post → Select category → Submit

| Report Category | When to Use |
|---|---|
| Harassment | For targeted bullying or abusive content aimed at you or others. |
| Hate Speech | Content attacking people based on race, religion, gender, etc. |
| Violence or Threats | Posts inciting violence or containing threats. |
| Misinformation | False claims affecting health, safety, or elections. |
| Spam/Scam | Irrelevant links, fake offers, or fraudulent content. |
| Nudity/Explicit | Sexually explicit or pornographic material. |

---

## Step-by-Step Guide to Reporting a Post on Mobile App

For mobile users, the reporting process is similar but adapted to the app interface:

1. Tap the **three-dot icon** in the upper-right corner of the post.

2. Choose **Find support or report post**.

3. Select the matching category (e.g., Harassment).

4. Follow prompts to add details.

5. Confirm and submit your report.

---

## Choosing the Correct Report Category for Accuracy

Selecting the right category helps the review team understand the exact nature of your concern. The category table in the desktop section above summarizes the main options and when to use each one.

---

## What Happens After You Submit a Report (Review Process)

Once your report is submitted, Facebook’s process generally involves:

1. Sending your report to the review team.

2. Assessing the content against Community Standards.

3. Taking appropriate action:

   - Removing the post.

   - Disabling the account/page.

   - Issuing warnings.

Automated detection systems help human reviewers respond quickly and consistently.

---

## How to Check the Status of Your Report

Checking the progress of your report helps you understand Facebook’s decision.

### On Desktop

1. Click your **profile icon → Help & Support → Support Inbox**.

2. Review the status, resolution, and any action taken.

### On Mobile

1. From the main menu, scroll down to **Help & Support → Support Inbox**.

2. Review the status, resolution, and any action taken.

---

## Tips for Reporting Harassment, Hate Speech, or Misinformation

For sensitive reports, consider these best practices:

- **Document evidence:** Take screenshots before reporting.

- **Be precise:** Select the most relevant category.

- **Do not engage:** Avoid commenting or reacting to harmful content.

- **Act quickly:** Reporting early can help prevent harm.

---

## What to Do if the Post is Not Taken Down (Alternative Actions)

If Facebook doesn’t remove the post, you still have measures you can take:

- **Block the user:** Stops them from contacting you or appearing in your feed.

- **Tighten privacy settings:** Restrict who can see and interact with your profile.

- **Contact authorities:** If threats are involved, contact local law enforcement or seek legal help.

- **Check other platforms:** Report similar content if it has been shared elsewhere.

---

## Preventing Exposure to Harmful Content in the Future

Proactive measures can reduce unwanted material in your feed:

1. **Adjust feed preferences:** Hide posts or snooze users who post harmful content.

2. **Update privacy filters:** Limit access from strangers.

3. **Block keywords:** Filter out certain words or phrases in groups/pages.

4. **Review policies regularly:** Stay updated on Facebook’s evolving Community Standards.

---

## Summary and Next Steps

By learning how to **report a Facebook post** effectively, you’re contributing to a safer digital community. Understanding policies, selecting accurate categories, and following up in your Support Inbox are all part of responsible social media use.

**Call to Action:** If you see content that violates Facebook’s rules, take immediate action by reporting it. Together, we can make the platform a safer space for all.