How Uber Built a Conversational AI Agent for Financial Analysis: The Finch Case Study

Disclaimer

The details in this post are derived from publicly shared information by the Uber Engineering Team.

All credit for the technical content belongs to them.

Links to original articles and sources can be found in the References section.

We have analyzed the details and added our own insights.

If you notice any inaccuracies or omissions, please leave a comment so we can update promptly.

---

The Challenge: Slow & Fragmented Financial Data Access

For a company operating at Uber’s scale, agile decision-making depends on fast and accurate data access.

Delays in retrieving reports can stall actions that affect millions of transactions worldwide.

Observations by Uber Engineering

Uber found that finance teams spent excessive time retrieving the right data before beginning analysis:

- Analysts used multiple platforms: Presto, IBM Planning Analytics, Oracle EPM, Google Docs

- Manual searches increased the risk of outdated or inconsistent data

- Complex data retrieval required SQL queries, demanding technical knowledge and time

- Requests to data science teams could take hours or days

---

The Need: Streamlined Data Access

Challenges like these are common across large enterprises.

AI-powered data assistants and centralized dashboards can reduce these bottlenecks by giving analysts a single place to ask for data.

---

Uber’s Solution: Finch

Objective:

Build a secure, real-time financial data access layer integrated into the finance team's daily workflow.

Vision:

- Analysts ask questions in plain language

- Answers delivered in seconds inside Slack

- No SQL, no platform switching

Result: Finch — Uber’s conversational AI data agent, embedded in Slack.

---

How Finch Works

Finch lets users type natural language requests in Slack.

It:

- Translates requests into structured SQL queries

- Maps terms to correct data sources & filters

- Checks permissions via role-based access control (RBAC)

- Retrieves live data in real time

- Returns results in Slack, or exports to Google Sheets for large datasets

Example:

> “What was the GB value in US&C in Q4 2024?”

Finch:

- Identifies the relevant table, reading “GB” as gross bookings and “US&C” as US and Canada

- Constructs query

- Executes & returns answer instantly
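The permission check and the choice between a Slack reply and a Google Sheets export can be sketched as below. This is a minimal illustration, not Uber's implementation: the role names, the `gross_bookings` table, the `MAX_ROWS_IN_CHAT` cutoff, and the sample numbers are all assumptions, and an in-memory SQLite database stands in for the real data store.

```python
import sqlite3
from dataclasses import dataclass

# Hypothetical role-to-table grants; Uber's actual RBAC model is not public.
ROLE_GRANTS = {
    "finance_analyst": {"gross_bookings"},
    "intern": set(),
}

# Assumed cutoff above which results go to a spreadsheet instead of chat.
MAX_ROWS_IN_CHAT = 20


@dataclass
class Reply:
    channel: str  # "slack" or "sheets"
    rows: list


def can_read(role, table):
    """Return True if the role has been granted access to the table."""
    return table in ROLE_GRANTS.get(role, set())


def answer(conn, role, table, sql, params=()):
    """Check permissions, run the query, and pick a delivery channel."""
    if not can_read(role, table):
        raise PermissionError(f"role {role!r} may not read {table!r}")
    rows = conn.execute(sql, params).fetchall()
    channel = "slack" if len(rows) <= MAX_ROWS_IN_CHAT else "sheets"
    return Reply(channel=channel, rows=rows)


def demo_connection():
    """Build an in-memory table with made-up numbers for illustration."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE gross_bookings (region TEXT, quarter TEXT, gb REAL)")
    conn.executemany(
        "INSERT INTO gross_bookings VALUES (?, ?, ?)",
        [
            ("US&C", "2024-Q4", 100.0),
            ("US&C", "2024-Q3", 90.0),
            ("LatAm", "2024-Q4", 40.0),
        ],
    )
    return conn
```

Running the permission check before the query means a user without a grant never triggers a query against the data store at all.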

---

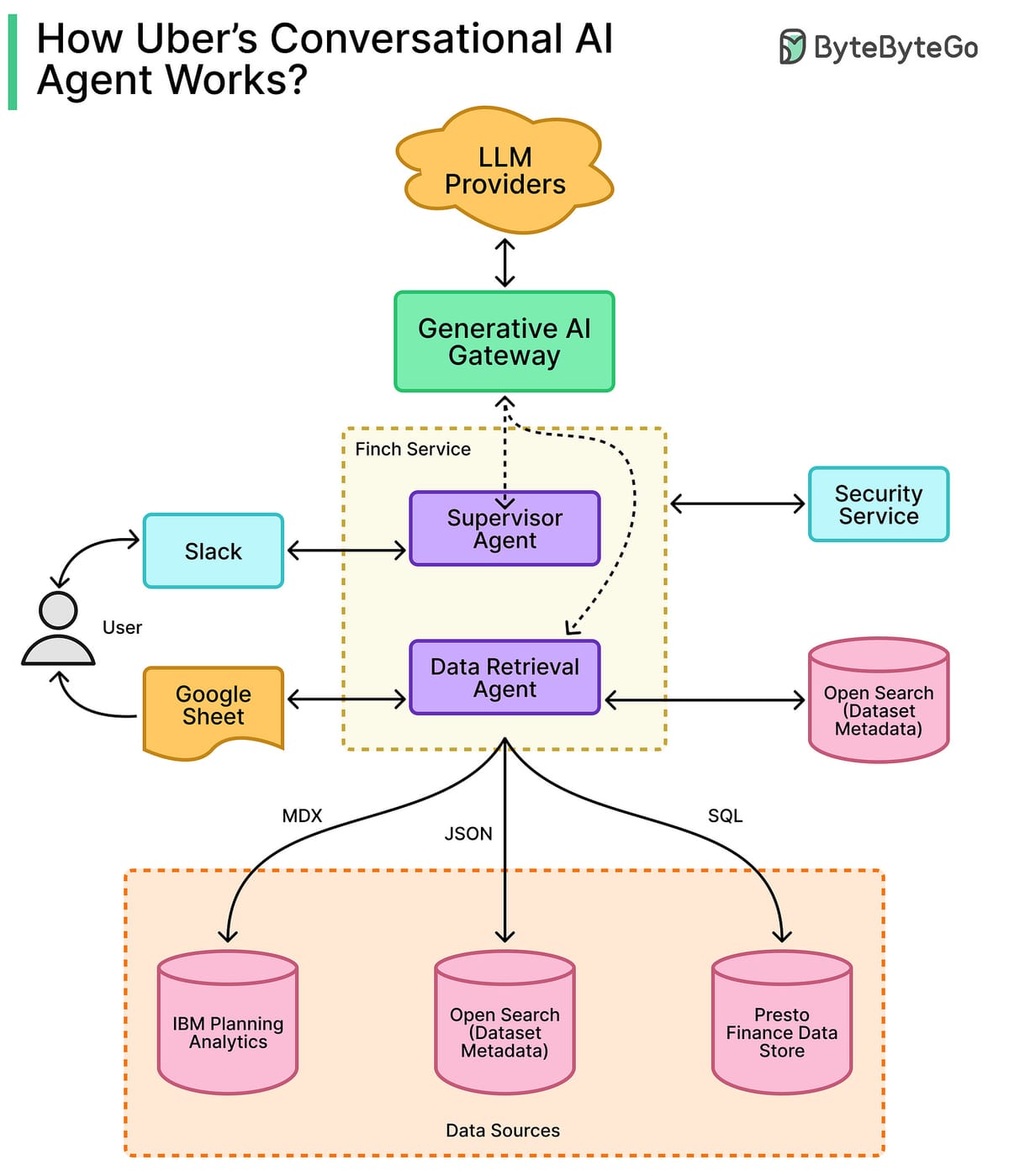

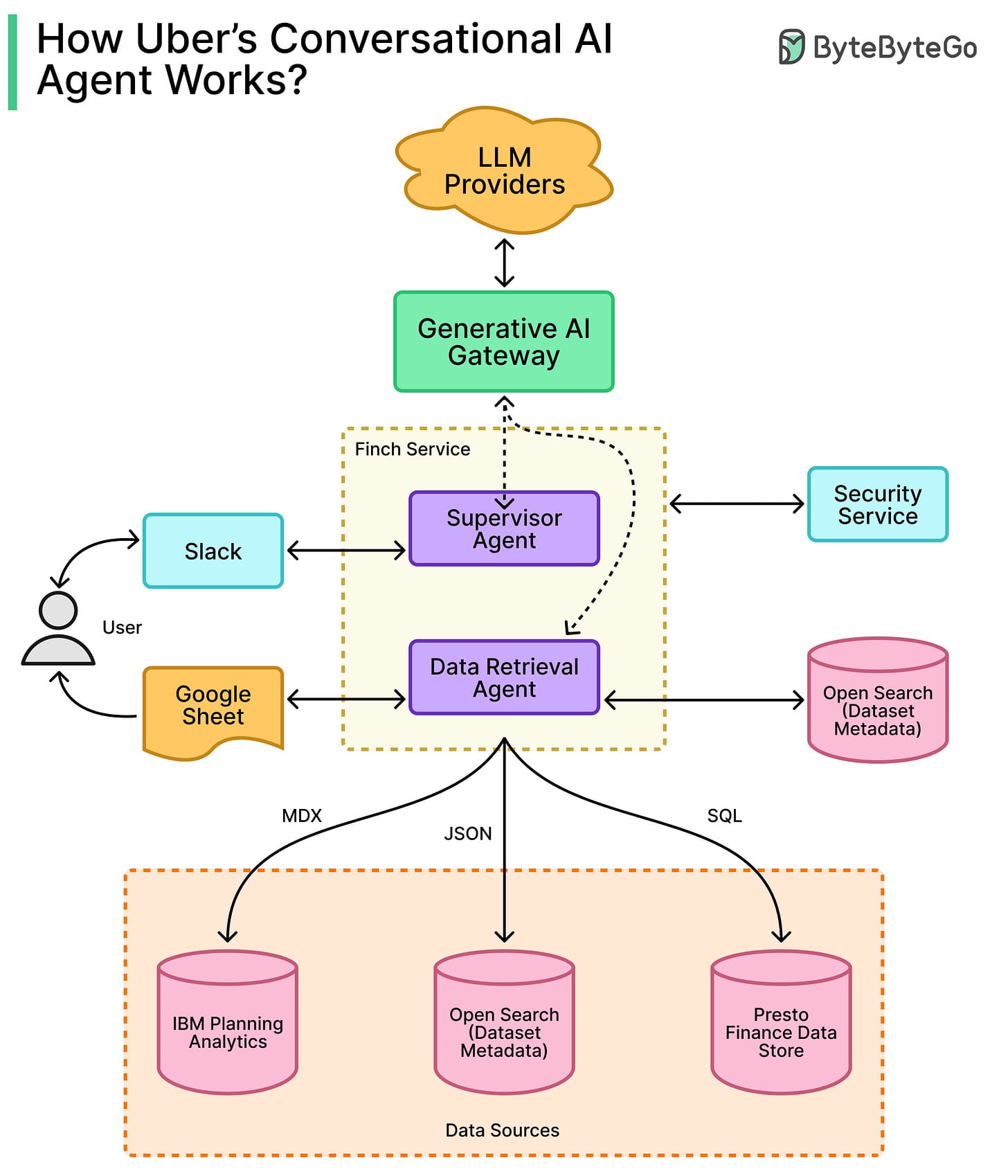

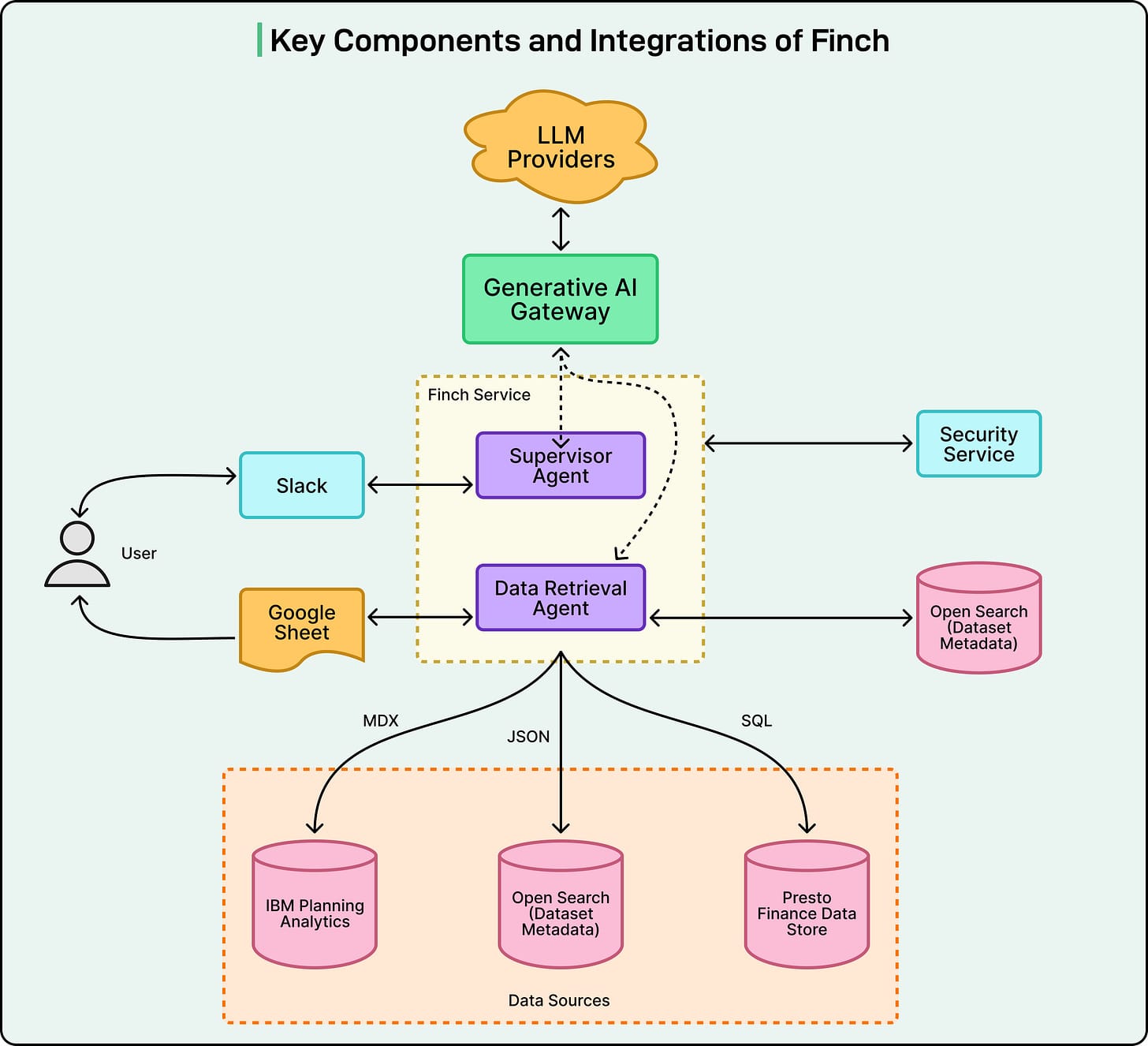

Architecture Overview

Design Goals:

- Modularity — independent but integrated components

- Security — strong access controls

- Accuracy — reliable LLM-generated queries

Data Layer:

- Uber uses single-table data marts for speed & clarity

- Semantic Layer via OpenSearch for natural language term matching

- Fuzzy matching improves accuracy in SQL `WHERE` clauses
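To make the fuzzy-matching idea concrete, here is a small sketch that maps a loosely typed term to the exact value stored in a column before it is placed in a `WHERE` clause. It uses Python's standard `difflib` as a stand-in for OpenSearch, and the alias table, column name, and similarity cutoff are illustrative assumptions rather than details from Uber's system.

```python
from difflib import get_close_matches

# Hypothetical semantic-layer entries: alias -> (column, stored value).
ALIASES = {
    "us&c": ("region", "US&C"),
    "us and canada": ("region", "US&C"),
    "latam": ("region", "LatAm"),
    "latin america": ("region", "LatAm"),
}


def resolve_term(term, cutoff=0.6):
    """Map a user-supplied term to a (column, value) pair, or None."""
    key = term.strip().lower()
    matches = get_close_matches(key, list(ALIASES), n=1, cutoff=cutoff)
    return ALIASES[matches[0]] if matches else None


def where_clause(term):
    """Build a parameterized WHERE fragment for a resolved term."""
    resolved = resolve_term(term)
    if resolved is None:
        raise ValueError(f"no match for {term!r}")
    column, value = resolved
    # The column name comes from the trusted alias table; the value is bound
    # as a parameter rather than interpolated into the SQL string.
    return f"WHERE {column} = ?", (value,)
```

With this approach a misspelled input such as "US and Cananda" still resolves to the stored value `US&C`, so the generated filter matches rows instead of silently returning nothing.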

---

Core Technologies:

- Generative AI Gateway — Access multiple LLMs (internal & third-party)

- LangChain + LangGraph — Orchestration of specialized agents

  - SQL Writer Agent

  - Supervisor Agent

- OpenSearch — Metadata indexing for language-schema mapping

- Slack SDK + Slack AI Assistant APIs — Real-time chat interface within Slack

- Google Sheets Exporter — Automatic export for large datasets

---

Agentic Workflow

- User asks question in Slack

  - e.g., “GB values for US&C in Q4 2024?”

- Supervisor Agent determines request type

  - Assigns to SQL Writer Agent

- SQL Writer Agent pulls metadata from OpenSearch

  - Builds correct SQL query

  - Executes query

- Slack updates in real time:

  - “Identifying data source”

  - “Building SQL”

  - “Executing query”

- Returns answer in Slack or exports to Google Sheets

- Follow-up context preserved for additional queries
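The workflow above can be approximated in plain Python as a supervisor that routes the request and a SQL writer that reports progress as it goes. This sketch deliberately avoids LangGraph and any LLM call: the keyword-based routing, the `METADATA` and `REGIONS` lookups, and the `2024-Q4` quarter format are assumptions made for illustration, and query execution is left out.

```python
import re

# Hypothetical metadata the SQL writer would fetch from a search index.
METADATA = {"gb": {"table": "gross_bookings", "column": "gb"}}
REGIONS = {"us&c": "US&C"}


def supervisor(question):
    """Classify the request; a real system would likely use an LLM here."""
    tokens = question.lower().split()
    return "sql_writer" if any(t in METADATA for t in tokens) else "unsupported"


def sql_writer(question, notify):
    """Build a parameterized query, emitting status updates along the way."""
    lowered = question.lower()
    tokens = lowered.split()
    notify("Identifying data source")
    meta = next(METADATA[t] for t in tokens if t in METADATA)
    region = next((REGIONS[t] for t in tokens if t in REGIONS), None)
    quarter = re.search(r"q([1-4])\s+(\d{4})", lowered)

    notify("Building SQL")
    sql = f"SELECT SUM({meta['column']}) FROM {meta['table']}"
    conditions, params = [], []
    if region:
        conditions.append("region = ?")
        params.append(region)
    if quarter:
        conditions.append("quarter = ?")
        params.append(f"{quarter.group(2)}-Q{quarter.group(1)}")
    if conditions:
        sql += " WHERE " + " AND ".join(conditions)
    return sql, tuple(params)


def handle(question, notify, history):
    """Route one question and keep it in the conversation history."""
    history.append(question)  # retained so follow-ups can refer back
    if supervisor(question) != "sql_writer":
        return None
    sql, params = sql_writer(question, notify)
    notify("Executing query")  # execution itself is omitted in this sketch
    return sql, params
```

Passing `notify` as a callback keeps the agents independent of the chat client: in a Slack integration it would post a status message, while in a test it can simply append to a list.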

---

Accuracy & Performance Evaluation

Critical at Uber’s Scale

Continuous Evaluation:

- Sub-agent testing with golden queries

- Routing accuracy evaluation for Supervisor Agent

- End-to-end validation with real-world simulations

- Regression testing for historical accuracy
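One way to picture golden-query testing is to compare the rows returned by a generated query with the rows returned by a trusted reference query for the same question. The sketch below assumes that approach; the `evaluate` helper, the `generate_sql` callable, and the use of SQLite are hypothetical stand-ins, since the source does not describe how Uber scores its agents.

```python
import sqlite3


def result_set(conn, sql):
    """Run a query and return its rows in a canonical, order-independent form."""
    return sorted(conn.execute(sql).fetchall())


def evaluate(conn, cases, generate_sql):
    """Return the fraction of cases whose generated SQL matches the golden result.

    `cases` is a list of (question, golden_sql) pairs and `generate_sql`
    is any callable that turns a question into a SQL string.
    """
    passed = 0
    for question, golden_sql in cases:
        try:
            generated = result_set(conn, generate_sql(question))
            expected = result_set(conn, golden_sql)
            ok = generated == expected
        except sqlite3.Error:
            ok = False  # SQL that fails to run counts as a miss
        passed += ok
    return passed / len(cases)
```

Comparing result sets rather than SQL text lets two differently written but equivalent queries both pass, and tracking the pass rate over time gives a simple regression signal.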

Performance Optimization:

- Efficient SQL to minimize DB load

- Parallel agents for reduced latency

- Pre-fetch metrics for instant responses
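Two of these optimizations, running independent work in parallel and pre-fetching common metrics, can be illustrated with the standard library. The `run_parallel` helper and `MetricCache` class are hypothetical names for this sketch, and the source does not say how Uber implements either technique.

```python
from concurrent.futures import ThreadPoolExecutor


def run_parallel(tasks):
    """Run independent zero-argument callables concurrently.

    Results are returned in the same order as the tasks were given.
    """
    with ThreadPoolExecutor(max_workers=len(tasks) or 1) as pool:
        futures = [pool.submit(task) for task in tasks]
        return [future.result() for future in futures]


class MetricCache:
    """Holds pre-computed answers for frequently requested metrics."""

    def __init__(self, loader):
        self._loader = loader  # callable that fetches one metric by key
        self._values = {}

    def prefetch(self, keys):
        """Load the given metrics ahead of time."""
        for key in keys:
            self._values[key] = self._loader(key)

    def get(self, key):
        """Return a cached value, loading it on first use if needed."""
        if key not in self._values:
            self._values[key] = self._loader(key)
        return self._values[key]
```

Threads suit this kind of work because the agents mostly wait on network or database calls; a cache like this would also need an expiry policy in practice so that pre-fetched numbers do not go stale.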

---

Conclusion

Finch drastically improves speed, accuracy, and efficiency for Uber’s finance teams.

Key Benefits:

- Real-time, natural-language access to curated financial data

- Modular, scalable architecture

- Integrated testing for consistent accuracy

- Low-latency responses with performance tuning

Future Enhancements:

- Wider FinTech integration

- Human-in-the-loop validation for sensitive queries

- Expanded user intents and specialized agents

- Advanced capabilities: forecasting, reporting, automated analysis

---

References

---


