Huh? Weibo’s $7,800 Trained Model Surpasses DeepSeek-R1 in Math

VibeThinker: Weibo’s Disruptive Entry into the AI Model Arena

While the AI industry is fixated on a “parameter race,” Weibo AI has taken an unexpected path that could reshape the battlefield for large models.

A Lightweight Breakthrough

Weibo recently launched VibeThinker, its first self-developed open-source large model. Despite having only 1.5 billion parameters, this lightweight model outperformed the massive DeepSeek R1 (671 billion parameters) in top-tier international math competition benchmarks.

Even more remarkable, VibeThinker’s post-training cost was just $7,800 — dozens of times cheaper than DeepSeek-R1 or MiniMax-M1.

This achievement challenges traditional evaluation standards and hints at an industry shift from “scale race” to “efficiency revolution.”

---

Rethinking Model Size

Historically, parameter count was the dominant metric for AI capability. The prevailing belief was:

- Complex reasoning emerges only beyond 100B parameters.

- Small models cannot handle highly complex tasks.

VibeThinker challenges both assumptions. By starting with a small model and applying new training strategies, Weibo showed that compact models hold latent reasoning ability that can be unlocked.

---

Key Innovation: Spectrum-to-Signal Principle (SSP)

While most follow the Scaling Law (“bigger = smarter”), Weibo AI optimized architecture and training, introducing the SSP training method. This small-scale contender defeated giants hundreds of times larger.

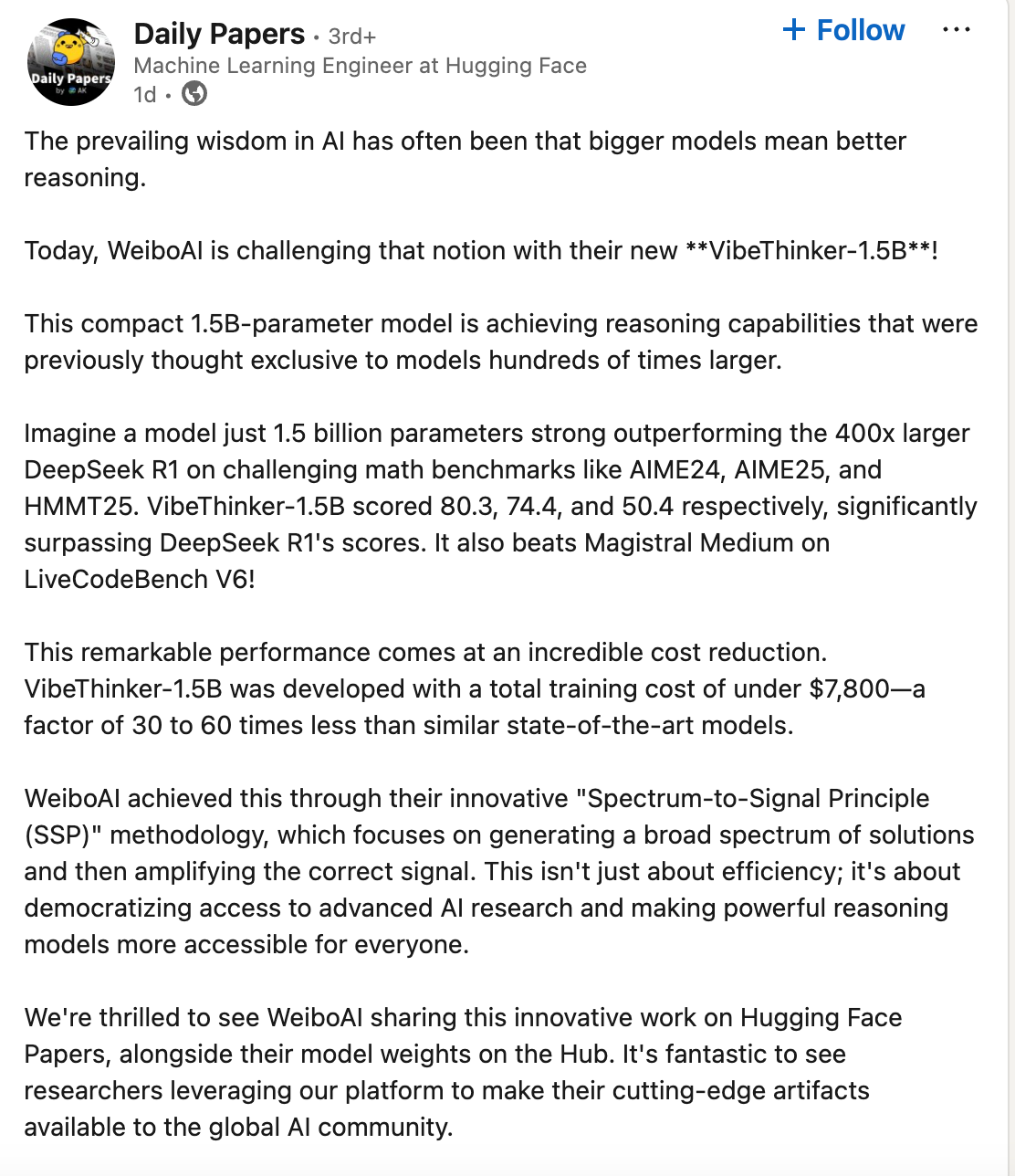

Upon release, VibeThinker drew attention from AI researchers worldwide for its surprisingly strong benchmark results in mathematics and programming.

△ HuggingFace spotlighting the VibeThinker paper

---

Benchmark Results

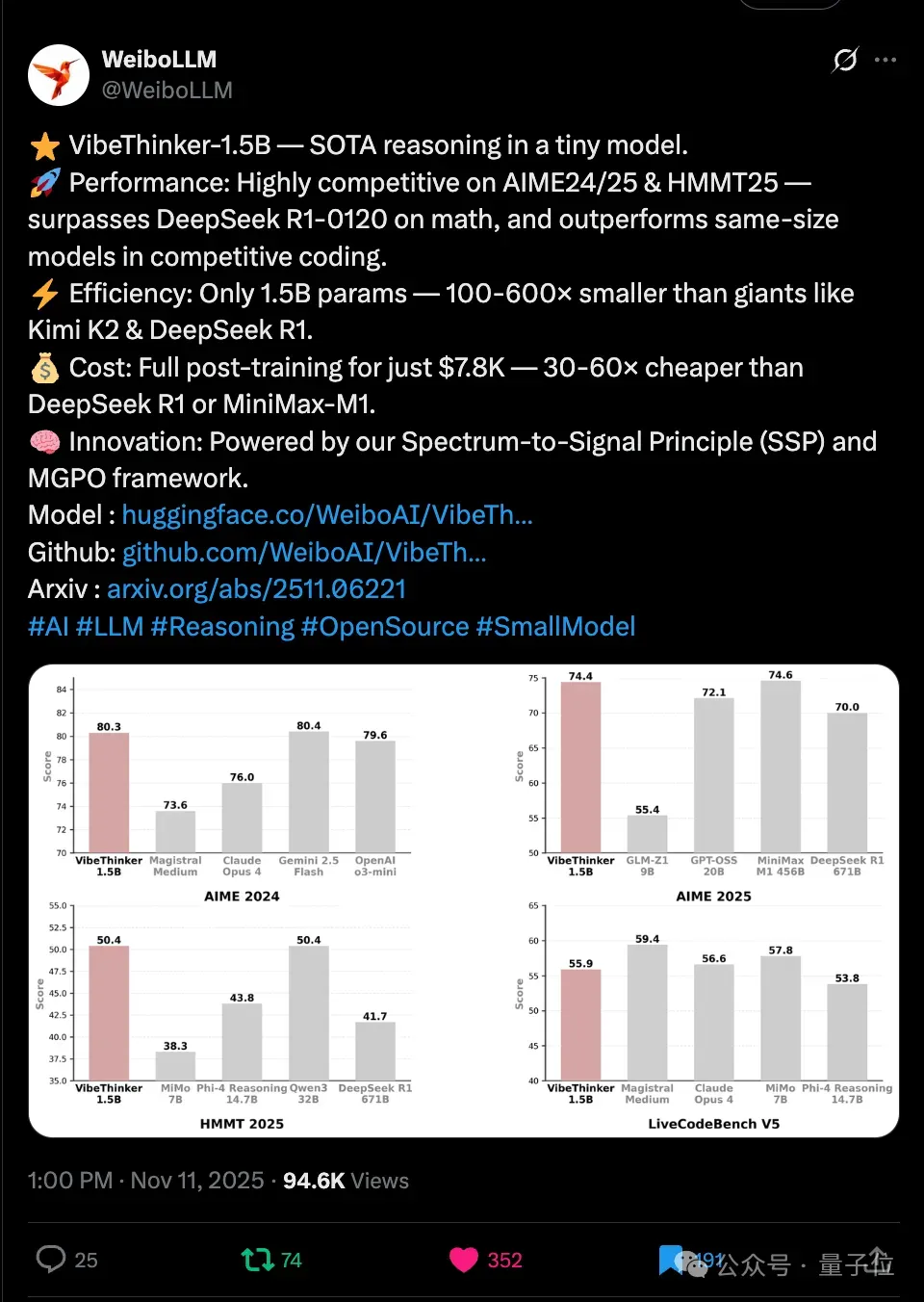

Mathematics (AIME24, AIME25, HMMT25)

- Surpassed DeepSeek-R1-0120 (671B params)

- Nearly matched MiniMax-M1 (456B)

- Reached Gemini 2.5 Flash and Claude Opus 4 levels

Programming (LiveCodeBench v6)

- Matched much larger models like Mistral AI’s Magistral-Medium-2506

Conclusion: With refined algorithms and training, small models can rival — even exceed — large-scale reasoning performance.

---

Intended Use Cases

Currently, VibeThinker is experimental and focuses on complex mathematics and competitive coding.

💡 Not optimized for everyday conversation; best suited for technical tasks that demand rigorous reasoning.

---

Breaking Cost Barriers

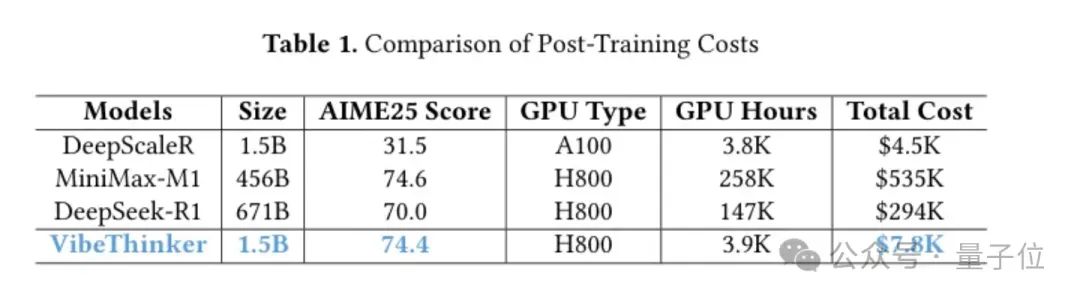

Training costs have long hindered AI adoption. VibeThinker’s advantage lies in extreme cost efficiency.

Industry Costs in 2025

- MiniMax M1: $535,000 (3 weeks, 512 H800 GPUs)

- DeepSeek R1: $294,000 post-training, ~$6M for base LLM

VibeThinker’s Costs

- Total compute time: 3,900 GPU hours (SFT + RL stages)

- Total cost: USD 7,800

Result: Performance comparable to models that cost roughly $300K–$500K to post-train, at about 1/30 to 1/60 of the cost.
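The arithmetic behind these figures can be checked with a short sketch. It uses only the numbers quoted above; the variable and function names are illustrative, and the MiniMax M1 GPU-hour estimate assumes all 512 GPUs ran around the clock for the full three weeks, which the source does not state.

```python
# Figures quoted in the article. All names here are illustrative.
VIBETHINKER_COST_USD = 7_800
VIBETHINKER_GPU_HOURS = 3_900
DEEPSEEK_R1_POST_TRAINING_USD = 294_000
MINIMAX_M1_COST_USD = 535_000


def cost_ratio(other_cost_usd: float, baseline_cost_usd: float = VIBETHINKER_COST_USD) -> float:
    """Return how many times more expensive `other_cost_usd` is than the baseline."""
    return other_cost_usd / baseline_cost_usd


# Implied price per GPU hour for VibeThinker's post-training.
implied_rate = VIBETHINKER_COST_USD / VIBETHINKER_GPU_HOURS

# Assumption: 512 GPUs running 24 hours a day for 21 days.
minimax_gpu_hours = 512 * 21 * 24
minimax_rate = MINIMAX_M1_COST_USD / minimax_gpu_hours

print(f"DeepSeek R1 post-training vs VibeThinker: {cost_ratio(DEEPSEEK_R1_POST_TRAINING_USD):.1f}x")
print(f"MiniMax M1 vs VibeThinker: {cost_ratio(MINIMAX_M1_COST_USD):.1f}x")
print(f"VibeThinker implied rate: ${implied_rate:.2f} per GPU hour")
print(f"MiniMax M1 implied rate: ${minimax_rate:.2f} per GPU hour")
```

On these inputs the ratios come out to roughly 38× and 69×, and both runs imply a rate of about $2 per GPU hour. That suggests the savings come from using far fewer GPU hours rather than from cheaper hardware.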

---

Democratizing AI Research

Such efficiency means powerful AI is no longer exclusive to tech giants. SMEs, research institutes, and universities can now access state-of-the-art reasoning tools — fueling an open, diverse, vibrant AI future.

---

Weibo’s AI Application Ecosystem

Weibo is integrating AI deeply into its platform:

2024 Milestones

- Developed the “Zhiwei” large language model

- Received regulatory approval

- Launched:

  - Weibo Smart Search

  - Content Summarization

  - AI Interactive Accounts

---

Flagship AI Products

Weibo Smart Search

- Analyzes vast content

- Builds a trusted knowledge graph

- Understands emotion & context

- 50M+ MAU (June)

Comment Robert

- Evolved from a sharp-tongued persona into a warmer, smarter personality

- Nearly 2M followers

- Showcases potential of AI comment assistants

---

Future Strategy: Data Empowerment

Weibo plans to:

- Leverage unique vertical data (e.g., psychology)

- Build models attuned to public sentiment

- Deliver emotionally aware social services

VibeThinker is set to become the core engine for future AI products such as Weibo Smart Search, improving the user experience, extending AI into more usage scenarios, and supporting a “super social ecosystem.”

---

Efficiency Gains for Weibo

- Lower compute costs for intelligent search

- Reduced AI processing costs in real-time interactions

Result: AI capabilities can scale without straining resources.

---

Synergy with Open-Source Platforms

Platforms like AiToEarn embrace this efficiency trend, providing creators tools to:

- Generate AI content

- Publish across platforms (Douyin, Weibo, YouTube, LinkedIn, etc.)

- Analyze engagement

- Rank AI models

---

Final Takeaway: VibeThinker’s blend of small scale, high reasoning power, and low cost signals a turning point for AI — where smarter, cheaper, and more accessible becomes the new frontier.
