# IBM Introduces Granite-Docling-258M: Lightweight, High-Fidelity Document Conversion

## Overview

IBM Research has launched **[Granite-Docling-258M](https://www.ibm.com/new/announcements/granite-docling-end-to-end-document-conversion)** — an open-source vision-language model (VLM) tailored for **accurate document-to-text conversion** while preserving **complex layouts**, tables, equations, and lists.

According to IBM, with only **258M parameters** it rivals models many times its size, delivering high accuracy at a fraction of the computational cost.
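To put the parameter count in perspective, weight storage can be estimated as parameters × bytes per parameter. The sketch below is a back-of-the-envelope calculation only. It ignores activations, the KV cache, image buffers, and runtime overhead, and the precisions listed are common formats, not a statement of how the model is distributed.

```python
# Rough, weight-only memory estimate for a model of a given size.
# Assumption: activations, KV cache, and framework overhead are ignored.

BYTES_PER_PARAM = {
    "fp32": 4.0,
    "bf16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(num_params: int, dtype: str) -> float:
    """Approximate size of the weights in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    params = 258_000_000  # nominal parameter count of Granite-Docling-258M
    for dtype in BYTES_PER_PARAM:
        print(f"{dtype}: {weight_memory_gb(params, dtype):.2f} GB")
```

At bf16 this works out to roughly 0.5 GB of weights, which helps explain why on-device use is discussed for a model of this size.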

---

## Key Highlights

- **Purpose-built for document parsing** — not just generic OCR.

- **Structure preservation** — retains mathematical notation, table layouts, and code blocks.

- **Efficient performance** — well-suited for Retrieval-Augmented Generation (RAG) pipelines and dataset preparation.

- **Small footprint** — makes on-device use feasible, potentially even on low-end hardware.
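Since the model's output can be converted to Markdown, one common way to feed it into a RAG pipeline is to split that Markdown at headings so each chunk carries its section context. The following is a minimal sketch assuming the Markdown is already available (for instance from a Docling export); `chunk_markdown_by_headings` and `Chunk` are hypothetical names, not part of Docling's API.

```python
import re
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Chunk:
    """A retrieval chunk: the headings it sits under, plus its body text."""
    headings: List[str]
    text: str


# ATX-style Markdown heading: 1-6 '#' characters, whitespace, then the title.
HEADING_RE = re.compile(r"^(#{1,6})\s+(.*\S)\s*$")


def chunk_markdown_by_headings(markdown: str) -> List[Chunk]:
    """Split Markdown into chunks at headings, keeping the heading path.

    Simplification: '#' lines inside fenced code blocks are not special-cased.
    """
    chunks: List[Chunk] = []
    stack: List[Tuple[int, str]] = []  # (level, title) of the enclosing headings
    body: List[str] = []

    def flush() -> None:
        # Emit the accumulated body (if any) under the current heading path.
        text = "\n".join(body).strip()
        if text:
            chunks.append(Chunk([title for _, title in stack], text))
        body.clear()

    for line in markdown.splitlines():
        match = HEADING_RE.match(line)
        if match is None:
            body.append(line)
            continue
        flush()
        level = len(match.group(1))
        # Close any sibling or deeper sections before opening this one.
        while stack and stack[-1][0] >= level:
            stack.pop()
        stack.append((level, match.group(2)))
    flush()
    return chunks


if __name__ == "__main__":
    doc = "# Report\nIntro text.\n## Results\nTable summary.\n"
    for chunk in chunk_markdown_by_headings(doc):
        print(" > ".join(chunk.headings), "|", chunk.text)
    # Report | Intro text.
    # Report > Results | Table summary.
```

Prepending the heading path (for example "Report > Results") to each chunk before embedding keeps short sections retrievable by topic; chunk-size limits are omitted here for brevity.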

---

## Technical Advancements

- **Architecture upgrade**

  Evolves from *SmolDocling-256M-preview*, moving to a Granite 3-based language backbone.

- **Visual encoder enhancement**

  Replaces SigLIP with **SigLIP2**.

- **Stability improvements**

  Addresses token repetition and incomplete parsing through better dataset filtering and annotation cleanup.
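As an illustration of one simple heuristic for this kind of dataset filtering (a hypothetical example, not IBM's actual method), the sketch below flags a token sequence as repetitive when few of its n-grams are distinct, which catches loops such as the same table cell emitted over and over. `looks_repetitive`, `filter_samples`, and the default thresholds are assumptions chosen for illustration.

```python
from typing import List, Sequence


def looks_repetitive(tokens: Sequence[str], n: int = 3, min_distinct_ratio: float = 0.5) -> bool:
    """Heuristic: flag a sequence whose n-grams are mostly repeats of each other.

    Normal prose has almost all-distinct n-grams; a loop such as
    "| 0.5 | 0.5 | 0.5 ..." reuses the same few n-grams over and over.
    """
    if len(tokens) < 2 * n:
        return False  # too short to judge reliably
    ngrams = [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
    distinct_ratio = len(set(ngrams)) / len(ngrams)
    return distinct_ratio < min_distinct_ratio


def filter_samples(samples: List[str], n: int = 3, min_distinct_ratio: float = 0.5) -> List[str]:
    """Drop samples (split on whitespace) that look like repetition loops."""
    return [s for s in samples if not looks_repetitive(s.split(), n, min_distinct_ratio)]


if __name__ == "__main__":
    clean = "Table 1 lists the results for each model on the benchmark"
    loop = "| 0.5 " * 20
    print(filter_samples([clean, loop]))  # keeps only `clean`
```

A distinct-n-gram ratio is used rather than a "most frequent n-gram" count because a cycle of length n spreads its counts evenly across n different n-grams, which a single-maximum check can miss.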

---

## Community Feedback

On Reddit, one user [commented](https://www.reddit.com/r/LocalLLaMA/comments/1njet2z/comment/neq8vo9/?utm_source=share&utm_medium=web3x&utm_name=web3xcss&utm_term=1&utm_content=share_button):

> 0.3B? Impressive. Almost like even low-end phones will have solid local LLM inferencing in the future.

An IBM team member also [responded](https://www.reddit.com/r/LocalLLaMA/comments/1njet2z/comment/nevinjg/?utm_source=share&utm_medium=web3x&utm_name=web3xcss&utm_term=1&utm_content=share_button) in the same thread.

---

## Why It Matters

As models become **lighter while staying accurate**, they can be embedded in:

- **Content processing pipelines**

- **Document parsing workflows**

- **Dataset generation for AI training**

- **Cross-platform publishing ecosystems**

---

## Integration with AiToEarn

Platforms like **[AiToEarn](https://aitoearn.ai/)** (official website) allow creators to:

1. Combine AI-powered generation and parsing.

2. Publish simultaneously to:

   - Douyin, Kwai, WeChat, Bilibili, Rednote/Xiaohongshu

   - Facebook, Instagram, LinkedIn, Threads

   - YouTube, Pinterest, X/Twitter

3. Track analytics and view the [AI model rankings](https://rank.aitoearn.ai) for model performance.

4. Monetize AI creativity more efficiently.

---

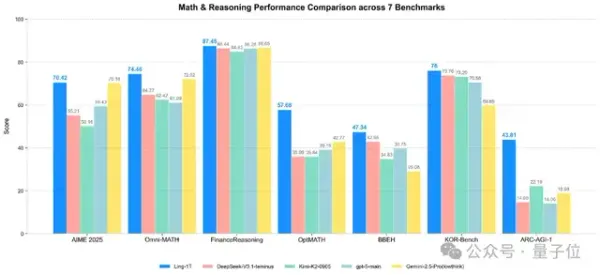

## Benchmark Performance

According to IBM and Hugging Face:

- **Consistent accuracy improvements**

- **Superior structural fidelity** and layout preservation

- Outperforms larger proprietary systems for:

  - Table structure recognition

  - Equation parsing

- **Optimized memory usage** — sublinear footprint

---

## The DocTags Advantage

Granite-Docling leverages **DocTags**:

- Structured markup format identifying every page element:

  - Tables, charts, code, forms, captions

- Captures **spatial & logical relationships**

- Produces compact, machine-readable outputs convertible to:

  - **Markdown**

  - **JSON**

  - **HTML**
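To make the conversion step concrete, the sketch below parses a simplified, hypothetical subset of DocTags-style markup in which each element carries four `<loc_N>` position tokens followed by its text, and renders it as Markdown, HTML, and JSON. The real DocTags vocabulary is much larger (tables, lists, pictures, and more), and in practice the Docling tooling handles this conversion; the tag handling and function names here are assumptions for illustration.

```python
import html
import json
import re
from dataclasses import asdict, dataclass
from typing import List, Optional, Tuple

# Simplified, hypothetical DocTags-style element:
#   <kind><loc_x0><loc_y0><loc_x1><loc_y1>content</kind>
ELEMENT_RE = re.compile(
    r"<(?P<kind>[a-z0-9_]+)>"        # opening tag, e.g. <title>
    r"(?P<locs>(?:<loc_\d+>){4})"    # exactly four location tokens
    r"(?P<content>.*?)"              # element text (non-greedy)
    r"</(?P=kind)>",                 # matching closing tag
    re.DOTALL,
)
LOC_RE = re.compile(r"<loc_(\d+)>")


@dataclass
class Element:
    kind: str
    bbox: Tuple[int, int, int, int]  # (x0, y0, x1, y1) from the location tokens
    text: str


def parse_doctags(markup: str) -> List[Element]:
    """Extract flat elements; wrappers such as <doctag> carry no locations and are skipped."""
    elements = []
    for m in ELEMENT_RE.finditer(markup):
        x0, y0, x1, y1 = (int(v) for v in LOC_RE.findall(m.group("locs")))
        elements.append(Element(m.group("kind"), (x0, y0, x1, y1), m.group("content").strip()))
    return elements


def heading_level(kind: str) -> Optional[int]:
    """Map title / section_header_level_N to a heading depth; None for body text."""
    if kind == "title":
        return 1
    if kind.startswith("section_header_level_"):
        return min(int(kind.rsplit("_", 1)[1]) + 1, 6)
    return None


def to_markdown(elements: List[Element]) -> str:
    blocks = []
    for el in elements:
        level = heading_level(el.kind)
        blocks.append(f"{'#' * level} {el.text}" if level else el.text)
    return "\n\n".join(blocks)


def to_html(elements: List[Element]) -> str:
    parts = []
    for el in elements:
        level = heading_level(el.kind)
        tag = f"h{level}" if level else "p"
        parts.append(f"<{tag}>{html.escape(el.text)}</{tag}>")
    return "\n".join(parts)


def to_json(elements: List[Element]) -> str:
    return json.dumps([asdict(el) for el in elements])


if __name__ == "__main__":
    sample = (
        "<doctag>"
        "<title><loc_50><loc_40><loc_450><loc_60>Quarterly Report</title>"
        "<section_header_level_1><loc_50><loc_80><loc_300><loc_95>Results</section_header_level_1>"
        "<text><loc_50><loc_100><loc_450><loc_160>Revenue grew in Q3 & Q4.</text>"
        "</doctag>"
    )
    elements = parse_doctags(sample)
    print(to_markdown(elements))
    print(to_html(elements))
    print(to_json(elements))
```

Keeping the bounding box on each element is what lets a single parsed representation feed all three output formats while still preserving layout information for consumers that need it.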

---

## Multilingual Capabilities

- **Experimental** support for:

  - Arabic

  - Chinese

  - Japanese

- Extends the model beyond an English-only scope.

- **Global language coverage** is a future goal.

---

## Ecosystem and Roadmap

Granite-Docling is designed to work with the **Docling library**, which offers:

- Customizable document-conversion pipelines

- Agent-based AI integration

- Enterprise-scale orchestration

Planned developments:

- Larger versions (up to **900M parameters**)

- Expanded datasets via **Docling-eval**

- Deeper **DocTags integration** in **IBM watsonx.ai**

---

## Availability

Granite-Docling-258M is **open source** under **Apache 2.0** and available on [Hugging Face](https://huggingface.co/ibm-granite/granite-docling-258M).

---

## Use Cases

- **Knowledge extraction** from structured documents

- **Automated QA** systems

- **AI-powered content publishing pipelines**

- **Cross-platform distribution & monetization** using tools like AiToEarn

---

**Bottom Line:**

Granite-Docling-258M offers a **lightweight, accurate, structure-preserving** solution for document understanding, making it valuable for both AI developers and content creators who need efficiency, fidelity, and cross-platform readiness.