# Introduction

IDC recently released a report titled *"AI-Native Cloud / New Cloud Vendors Restructuring Agentic Infrastructure"*.

Key survey highlights include:

- **87% of Asia-Pacific enterprises** had deployed at least 10 GenAI scenarios in 2024, a share expected to rise to **94% in 2025**.

- **Daily GenAI usage among consumers** increased from 19% (2024) to 30% (2025).

- By 2025, **65% of enterprises** will have more than 50 GenAI scenarios in production, and **26%** will have more than 100.

- By 2028, medium-to-large enterprises will run **hundreds of agents concurrently**.

This trend forces enterprises to reconsider whether **existing AI infrastructure** can keep pace with the coming era of **agentic collaboration**, with implications for **business models**, **market structures**, and **global strategy**.

To dissect these findings, InfoQ invited:

- **Lu Yanxia** — Research Director at IDC China

- **Alex Yeh** — Founder & CEO of GMI Cloud

They shared both data insights and hands-on perspectives on emerging AI infrastructure trends in the Asia-Pacific region.

---

## 1. The Surge in Demand: Global-Scale AI Applications Fuel the Rise of **AI-Native Cloud**

### The Shift from Training to Inference

- Since ChatGPT's launch in 2022, large companies have focused on **training foundation models**.

- From 2022 to 2024, AI infrastructure investment was **training-heavy**.

- By 2025, **pre-training converges** and the market pivots to **model inference**.

- In Asia-Pacific, AI inference infrastructure adoption rises from **40% in 2023 to 84% in 2025**.

> **Result**: Industry shifts from development to **large-scale deployment**.

---

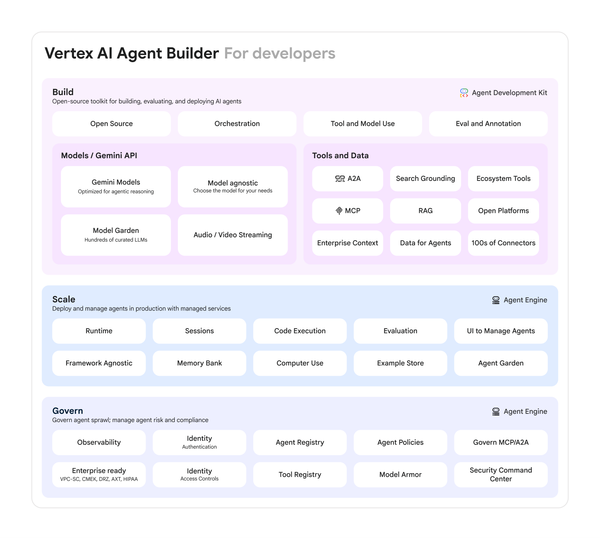

### Defining AI-Native Cloud

IDC describes it as:

> *"High-density GPU computing, ultra-low-latency networking, orchestration and cooling optimized for GenAI."*

**Lu Yanxia’s perspective**:

> Enterprises may run thousands of interacting agents — requiring **new distributed computing capabilities** and **fast model-to-model communication**.

---

### Technical Barriers & Insights (Alex Yeh)

1. **Efficient GPU Cluster Scheduling**

   - Uses Kubernetes for dynamic resource allocation.

   - Sustains **98%+ utilization** across Asia-Pacific nodes.

   - Leverages **time zone differences** to reuse idle compute globally.

2. **Computational Power Adaptability**

   - Different AI scenarios require different GPU profiles.

   - The *Inference Engine* bridges hardware layers; customers pay by **API token usage** rather than per GPU card.

3. **End-to-End Optimization**

   - Moves beyond “cards + storage” to distributed inference architectures.

   - Optimizes TTFT (Time To First Token) through tighter model and hardware integration.
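The time-zone idea in item 1 can be illustrated with a minimal sketch. It is not GMI Cloud's scheduler: the `Region` type, the region names, the fixed UTC offsets, and the 22:00 to 06:00 "overnight" window are all assumptions chosen for illustration. The sketch only shows how a scheduler might identify regions that are likely off-peak at a given moment, so that their idle GPUs become candidates for work from busier regions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Region:
    name: str
    utc_offset_hours: int  # fixed offset; daylight saving is ignored here


def local_hour(utc_hour: int, region: Region) -> int:
    """Local wall-clock hour (0-23) in the region for a given UTC hour."""
    return (utc_hour + region.utc_offset_hours) % 24


def off_peak_regions(utc_hour: int, regions: list[Region],
                     night_start: int = 22, night_end: int = 6) -> list[str]:
    """Names of regions whose local time is inside the assumed overnight window."""
    result = []
    for region in regions:
        hour = local_hour(utc_hour, region)
        if hour >= night_start or hour < night_end:
            result.append(region.name)
    return result


# Hypothetical regions, used only for illustration.
REGIONS = [
    Region("tokyo", 9),
    Region("singapore", 8),
    Region("us-west", -8),
]

if __name__ == "__main__":
    # 15:00 UTC is 00:00 in Tokyo, 23:00 in Singapore, 07:00 in us-west.
    print(off_peak_regions(15, REGIONS))
```

A production scheduler would also weigh network latency, data-residency rules, and actual measured load rather than clock time alone.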

---

### Paradigm Shifts in AI Cloud

- **From Virtualization → Bare Metal**

  Direct hardware access ensures peak performance (critical for training & low-latency inference).

- **From Remote Support → Companion-Style Service**

  On-site, rapid-response SLA (10 min response; 1 hr diagnosis; 2 hr recovery).

- **Global Compliance & Operations**

  Localized clusters for complex regulations & latency optimization.

**GMI Cloud’s Plan**: Launch Asia’s largest **10,000-card liquid-cooled GB300 cluster**, with expansions in Southeast Asia, Japan, the Middle East, and the U.S.

---

## 2. Efficiency Revolution: From “Compute Supplier” to “Value Co-Creation Partner”

### Multi-Cloud Challenges

- Enterprises use multiple clouds for **cost-performance**, **vendor diversity**, and **data localization**.

- Problem: **Fragmented resources, varied interfaces, and management complexity** → **hidden cost black hole**.

**Lu Yanxia’s analysis**:

- Heterogeneous stacks → complex integration.

- Non-standardized interfaces increase technical barriers.

---

### GMI Cloud’s Unified Compute Management

- **GPU Hardware Layer** — Bare metal + high-end GPU cloud partnerships.

- **IaaS: Cluster Engine** — Kubernetes-based global scheduling (98%+ utilization).

- **MaaS: Inference Engine** — Multimodal inference with unified API & per-token billing.
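To make the difference between per-token billing and per-card billing concrete, here is a small sketch of the arithmetic. The function names and all prices are hypothetical and are not GMI Cloud's actual rates; prices are assumed to be quoted per one million tokens and per GPU-hour respectively.

```python
from decimal import Decimal

MILLION = Decimal(1_000_000)


def token_bill(input_tokens: int, output_tokens: int,
               input_price: Decimal, output_price: Decimal) -> Decimal:
    """Cost under per-token billing; prices are per one million tokens."""
    return (Decimal(input_tokens) * input_price
            + Decimal(output_tokens) * output_price) / MILLION


def gpu_bill(gpu_count: int, hours: int, hourly_price: Decimal) -> Decimal:
    """Cost under per-card billing; the customer pays for reserved time, used or not."""
    return Decimal(gpu_count) * Decimal(hours) * hourly_price


if __name__ == "__main__":
    # Hypothetical daily workload and prices.
    by_token = token_bill(2_000_000, 500_000, Decimal("0.50"), Decimal("1.50"))
    by_card = gpu_bill(1, 24, Decimal("2.00"))
    print(f"per-token: {by_token}, per-card: {by_card}")
```

Under per-token billing the cost scales with traffic, whereas a reserved card costs the same on a quiet day as on a busy one.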

---

### Overcoming Idle Resource Dilemma

- Legacy model: Heavy **upfront GPU purchase** → idle during demand dips.

- AI chip cycles now **~3 years** → depreciation risk.

- Solution: **3-Year Rental Model** with upgrade paths — turning CapEx into OpEx.
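The idle-resource problem can be expressed as simple arithmetic: a purchased GPU costs the same whether or not it is busy, so its effective cost per utilized hour rises as utilization falls. The sketch below assumes straight-line depreciation over the roughly 3-year cycle mentioned above and ignores power, hosting, staffing, and resale value. The purchase price is a hypothetical figure picked so the numbers come out round.

```python
HOURS_PER_YEAR = 24 * 365


def owned_cost_per_used_hour(purchase_price: float,
                             lifetime_years: float,
                             utilization: float) -> float:
    """Purchase price spread over the hours the GPU is actually busy."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    used_hours = lifetime_years * HOURS_PER_YEAR * utilization
    return purchase_price / used_hours


if __name__ == "__main__":
    price = 26_280.0  # hypothetical purchase price per GPU
    for utilization in (1.0, 0.5, 0.25):
        cost = owned_cost_per_used_hour(price, 3, utilization)
        print(f"utilization {utilization:.0%}: {cost:.2f} per used hour")
```

Comparing this figure with a rental rate shows the utilization level below which renting becomes cheaper than owning, under these simplified assumptions.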

---

## 3. Market Shift: **Inference Demand Explosion** Reshapes Competition

### Key Growth Sectors

- **General Internet** (social platforms, consumer-facing AIGC apps, overseas content creation)

- **Manufacturing** (smart plants abroad, AI-driven industrial equipment)

- **Embodied Intelligence** (robotics)

---

### Modality Breakdown

- **Voice** — voice conversion, call centers, companion bots.

- **Video + Image** — e-commerce asset creation.

- **Text** — Copilot tools, meeting summaries.

---

### Technical Trends

- **Real-Time Video Generation**: the B200 chip cuts generation time from 30 s to **0.4 s**.

- Open-source + closed-source mix lowers entry barriers for **fine-tuning**.

---

### Competition & Collaboration

- **GPU Clouds** could capture **15%** of GenAI infrastructure market.

- Shift from zero-sum to **collaboration**:

  - Companies rent or lend GPUs to each other.

  - Smaller vendors can set up clusters faster for niche deployments.

---

### Strategic Recommendations for Global Expansion

1. **Responsible AI Framework**

   Ethics, compliance, full-process governance.

2. **Track Large Model Evolution**

   Avoid redundant tool development; focus on industry-specific differentiation.

3. **Prioritize AI-Optimized Infrastructure**

   Integrated training-inference systems & low-latency networks.

---

## Conclusion

Asia-Pacific AI infrastructure is undergoing **dual reconstruction** in **technology** and **industry dynamics**.

The old “resource seller” cloud model is fading — AI-native clouds deliver:

- **Bare-metal architectures**

- **Elastic Kubernetes scheduling**

- **Closed-loop training-inference optimization**

---

**References**:

[Read the original](https://www.infoq.cn/minibook/fQRodO3b2d1XePXOG137)
