# 43 Talks Salon: Ancient Wisdom Meets AI Thinking

At the **43 Talks** offline salon, conversations usually revolve around **technology, trends, and products**. But in a recent session, **Tech Commander**, an AIGC content creator with 250,000 followers, took the discussion somewhere entirely unexpected.

> “Let me be clear: what I’m about to share has no scientific basis.”

Then, in complete seriousness, he introduced *xiuxian*, the ancient Chinese practice of “cultivating immortality.”

This was neither superstition nor parody. Instead, it became a **hard-core theoretical dissection of ancient wisdom**, reframed through the same mental tools we use to understand large AI models.

---

## Diverging from Binary Thinking

The talk felt less like a tech presentation and more like a **thought experiment spanning thousands of years**.

Listeners were encouraged to:

- Suspend **binary thinking** (true vs. false, science vs. superstition)

- Ask deeper questions, such as:

  **Is our scientific paradigm simply one possible model among many for understanding the world?**

---

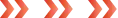

## Prediction: Prompting the Universe’s Large Model

**Core question:** How could shaking a few copper coins make the universe “respond”?

Tech Commander's **bold hypothesis**:

1. **Universe as a Pre-trained Supermodel:**

   - Parameters: beyond any imaginable scale

   - Dataset: everything from the Big Bang to now

   - Operation: runs continuously, following unknown rules that “generate” reality

2. **Divination as Prompt Engineering:**

   - Rituals supply structured input (time, location, ceremonial steps) aimed at a specific kind of output

   - The resulting hexagram is the model’s **returned output tokens**

   - The reading does not predict a single future; it **samples from the probability distribution** over possible futures

3. **Practical Approach:**

   - Skip the debate over “is it scientific?”

   - Instead, ask: **Does it work?**

   - Even if the model’s mechanics are a black box (much like how GPT writes poetry), outputs that prove meaningful again and again make it a “usable technology”
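To make the coin ritual concrete, the traditional three-coin method can be read as a small sampling procedure: each toss of three coins draws one line from a fixed probability distribution, and six draws build a hexagram from the bottom up. The Python sketch below is an illustration, not something presented in the talk. It assumes fair coins and one common convention in which one face counts 3 and the other counts 2 (traditions differ on which face is which); the helper names `line_distribution` and `cast_hexagram` are hypothetical.

```python
import random
from collections import Counter
from fractions import Fraction
from itertools import product

# Assumed convention: one coin face counts 2, the other counts 3.
# Which physical face maps to which value varies by tradition.
FACE_VALUES = (2, 3)

# Sum of three coins -> traditional line type; 6 and 9 are "changing" lines.
LINE_TYPES = {6: "old yin", 7: "young yang", 8: "young yin", 9: "old yang"}


def line_distribution():
    """Exact probability of each line value, enumerating all 8 equally likely tosses."""
    counts = Counter(sum(faces) for faces in product(FACE_VALUES, repeat=3))
    total = sum(counts.values())
    return {value: Fraction(n, total) for value, n in sorted(counts.items())}


def cast_hexagram(rng=None):
    """Sample six line values, bottom line first, by tossing three fair coins per line."""
    rng = rng or random.Random()
    return [sum(rng.choice(FACE_VALUES) for _ in range(3)) for _ in range(6)]


if __name__ == "__main__":
    # The fixed distribution every toss samples from.
    for value, p in line_distribution().items():
        print(value, LINE_TYPES[value], p)

    lines = cast_hexagram(random.Random(42))
    # Print the top line first so the hexagram appears as it is usually drawn.
    # Odd sums (7, 9) are solid yang lines; even sums (6, 8) are broken yin lines.
    for value in reversed(lines):
        print("---" if value % 2 else "- -", LINE_TYPES[value])
```

Enumerating the eight equally likely coin outcomes gives probabilities of 1/8, 3/8, 3/8 and 1/8 for sums of 6, 7, 8 and 9. In the talk’s framing, that is the sense in which the ritual “samples” from a distribution rather than returning one fixed answer.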

---

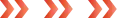

## Compute Power & Algorithms: Who Holds the API Key?

If the **universe is a large model**, who can run it and interpret its results?

Tech Commander’s answer: it depends on **hardware** and **algorithms**.

### Hardware Hypothesis

- **Most brains** = CPUs (general-purpose, survival-focused processing)

- **Some brains** = GPUs or TPUs (parallel computation, specialized processing)

- Such an architecture might let certain people naturally process the complex outputs of the “universe model”

### Ancient Algorithms

- **Four Pillars of Destiny**, **Zi Wei Dou Shu** = optimized specialist models

- Iterated over centuries to accurately parse certain “data types” from the universe model

- When **high-end hardware** runs **refined algorithms**, exceptional insights can emerge

> Genius may not be divine inspiration; it might be advanced hardware running an algorithm the rest of us have yet to master.

---

## Life Optimization: From External Query to Internal Fine-Tuning

The most exciting extension of this thinking is **turning the model inward**: treating **yourself** as the most complex, yet most accessible, model.

### Cultivation as Self-Optimization

Tech Commander’s view: cultivation (*xiuxian*) is the **deep optimization and fine-tuning of your own life system**.

- **Default state:** Like a model with factory presets: basic functions only, and no access to the source code

- **Goal:** Gain higher system permissions and become your own “model trainer”

---

### Key Process Stages

1. **Inner Observation**

   Similar to **model interpretability**: turning inward to understand your body’s parameters and weights.

2. **Repairing Leaks & Transforming Desires**

   Comparable to **model optimization and regularization**: limiting overfitting to external stimuli and redirecting energy toward improving core health and efficiency.

3. **Foundation Building**

   Achieving a **low-consumption, high-efficiency, stable new version** of yourself.

---

## Practical Links Between Ancient Theory and Modern Tools

Modern open-source platforms such as [AiToEarn (official site)](https://aitoearn.ai/) map onto the same layers:

- **Hardware:** Our brains and devices

- **Algorithms:** AI models and workflows

- **Model:** Integrated systems for creation and monetization

With the [AiToEarn open-source repository](https://github.com/yikart/AiToEarn) and its [AI model rankings](https://rank.aitoearn.ai), creators can:

- Generate AI content

- Publish cross-platform

- Apply analytics for optimization

- Monetize creative output across Douyin, Kwai, WeChat, Bilibili, Rednote, YouTube, Facebook, Instagram, Pinterest, LinkedIn, Threads, and X

---

## Epilogue: Creation, Emergence, and New Perspectives

At the end of the session, an audience member asked:

**Was Newton’s theory of gravity invented or discovered?**

Tech Commander’s reply: *“It just appeared out of nowhere.”*

This echoes **emergence** in AI: abilities that appear seemingly spontaneously once a model grows past a certain scale threshold.

Whether in Newton’s breakthrough or in AI’s emergent skills, **creation is often a phase shift** rather than a step-by-step derivation.

---

**Final Thought:**

Perhaps the most vital question of the AI era isn’t just *What can AI do for us?* but *What can AI help us understand* about the universe, about creation, and ultimately about ourselves?

By connecting **ancient wisdom** and **modern AI cognition**, we open doors both forward and backward in time, turning understanding itself into a shared global dialogue.