Ilya Speaks Out: The Scaling Era Is Over and Our Definition of AGI May Be Completely Wrong

The End of the Scaling Era — Ilya Sutskever’s Vision for AI’s Future

Fixing a bug can easily reintroduce the same error, creating a frustrating loop where development ends exactly where it began.

For years, almost every AI company believed in a simple formula:

> Bigger models + more data + more GPU power = more intelligence

This belief, known as the Scaling Law, was once Silicon Valley’s most unshakable creed.

After stepping away from the spotlight and founding SSI (Safe Superintelligence), former OpenAI Chief Scientist Ilya Sutskever calmly declared:

> "The era of Scaling is over — we are back to the research era."

In an in-depth conversation with Dwarkesh Patel, he laid out his technical roadmap and addressed a key question: why does current AI, however powerful, still not feel human?

🔗 Podcast link: https://x.com/dwarkesh_sp/status/1993371363026125147

---

Why AI Is Like a High-Score, Low-Ability Honor Student

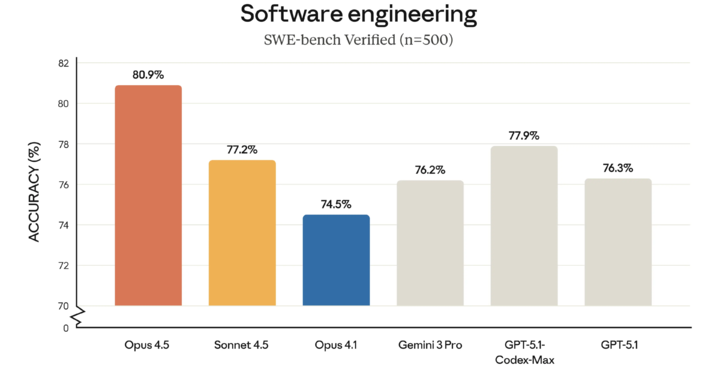

Modern AI can crush programming contests, math competitions, and benchmark leaderboards — shattering records with each new release. Yet Ilya highlights an odd reality.

▲ Claude Opus 4.5 scored 80.9% on the SWE-bench Verified coding benchmark.

The Problem: "Vibe Coding"

- AI writes code → encounters a bug

- You point it out → AI fixes it

- Fix introduces another bug

- You point that out → AI reintroduces the first bug

- Loop repeats endlessly

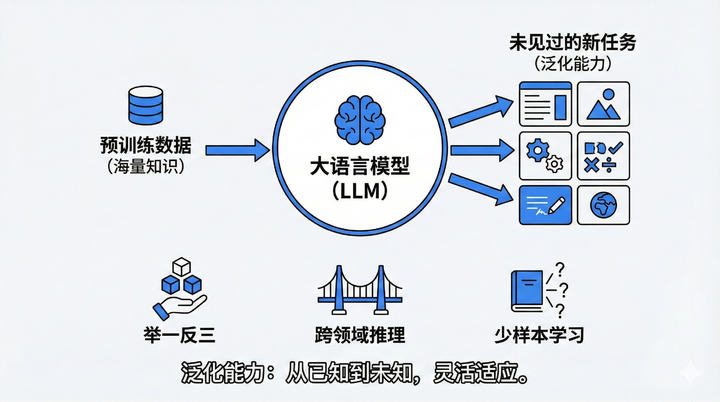

This reflects a lack of generalization — the ability to adapt flexibly to new situations.

---

Two Student Archetypes

- Student A (AI): Practices 10,000 hours, memorizes solutions, scores perfectly if problems are familiar.

- Student B (Human): Practices only 100 hours, but truly understands logic and adapts to new problems.

Long-term, B outperforms A. Today’s AI resembles Student A — optimized for training data, not for intellectual transfer between tasks.

---

From Scaling to Creativity

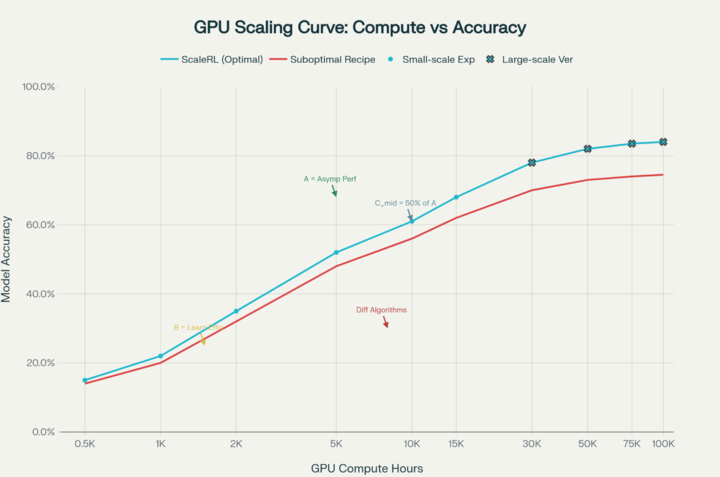

For years, the recipe for success was simple:

- Maximum compute

- Maximum data

- Larger neural networks

- Pre-train on everything available

Why Scaling Became Comfortable — and Risky

- Predictable results

- Low-risk for big companies

- No need for novel methods

But now:

- Pre-training data is finite and nearly exhausted

- Returns are diminishing: even 100× more parameters would yield only modest gains
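To make the diminishing-returns point concrete, here is a minimal sketch that assumes loss falls as a power law in parameter count, roughly L(N) ∝ N^(-alpha). The exponent 0.076 is an illustrative assumption of the size reported in published scaling-law studies, not a figure from the interview, and `loss_ratio` is a hypothetical helper name.

```python
def loss_ratio(scale_factor, alpha=0.076):
    """Return new_loss / old_loss when parameter count grows by `scale_factor`,
    assuming loss is proportional to N ** -alpha (alpha is an assumed value)."""
    return scale_factor ** -alpha


# Under this assumption, how much loss remains after scaling up?
for factor in (10, 100, 10_000):
    print(f"{factor}x parameters -> loss multiplied by {loss_ratio(factor):.3f}")
```

Under these assumptions, a 100× larger model still keeps about 70% of the original loss, and each further 100× buys the same fractional reduction at 100× the cost.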

---

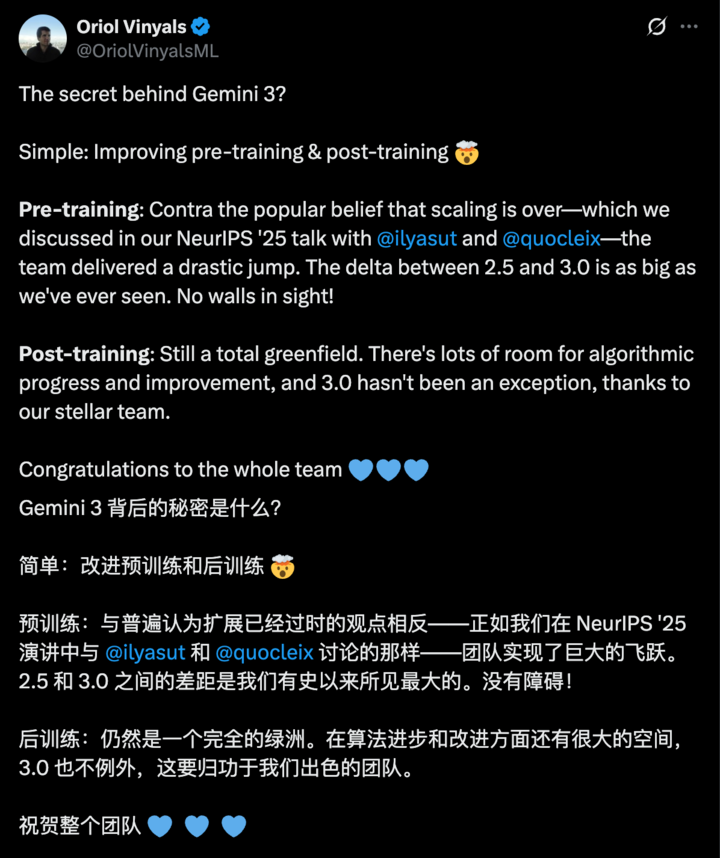

Gemini vs GPT-5

- Gemini 3: Reportedly made its gains through improvements to pre-training.

- GPT-5: Adding data no longer boosts intelligence substantially.

▲ Google DeepMind VP Oriol Vinyals credits Gemini’s leap to improvements in pre-training.

---

Back to the Research Era — With Supercomputers

Ilya divides AI history into:

- 2012–2020: Research Era — exploration and invention

- 2020–2025: Scaling Era — brute-force expansion

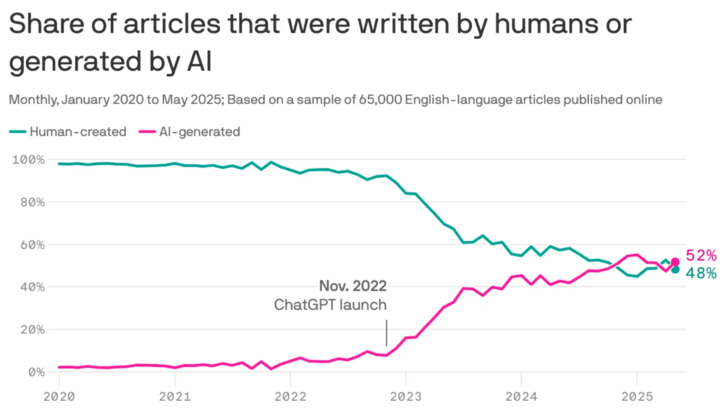

Now, we’re entering a Compute-Rich Research Era: using massive infrastructure from scaling to power new, creative methods.

---

Seeking Intuition — The Missing Piece

If stacking data isn't enough, what’s humanity’s secret?

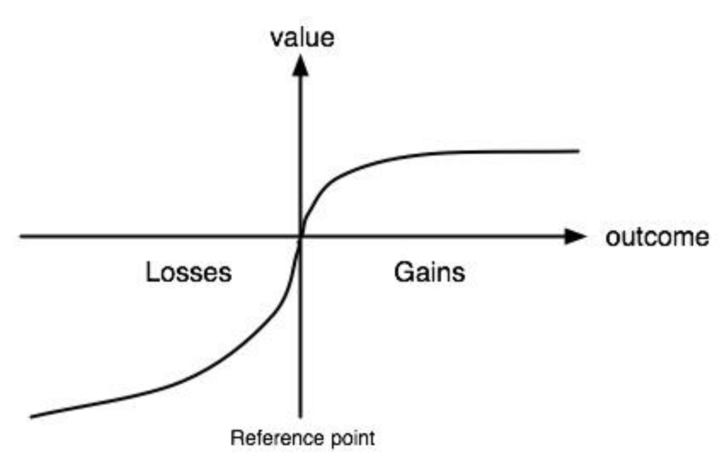

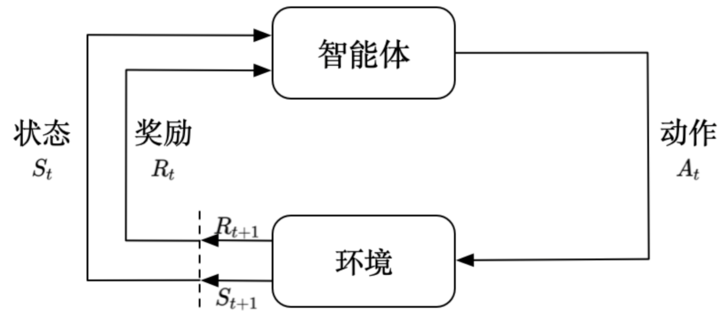

Emotions as a "Value Function."

Emotions as Real-Time Learning Signals

- Driving example: Humans learn to drive in hours because emotions (nervousness, excitement) provide immediate feedback on each action.

- In AI, the outcome-based RL used to train today's models scores an attempt only after the task finishes, which wastes compute and slows learning.

---

Value Function Advantage:

- Feedback during intermediate steps

- Signals at every stage guide direction

- Faster learning with fewer trials
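The contrast between outcome-only scoring and a value function can be sketched in a few lines of Python. This is a toy illustration, not SSI's or Ilya's method: the five-state corridor, the hand-written `value` function, the discount factor, and the names `outcome_signal` and `step_signals` are all assumptions made for the example. The per-step signal used here is the standard temporal-difference (TD) error.

```python
GAMMA = 0.9   # discount factor (assumed)
GOAL = 4      # rightmost state of a toy corridor 0..4 (assumed)


def value(state):
    """Hypothetical value function: discounted reward expected from `state`
    if the agent walks straight to the goal."""
    return GAMMA ** (GOAL - state - 1)


def outcome_signal(states):
    """Outcome-only scoring: one number, known only after the episode ends."""
    return 1.0 if states[-1] == GOAL else 0.0


def step_signals(states):
    """Per-step feedback: TD error r + GAMMA * V(next) - V(current)."""
    signals = []
    for current, nxt in zip(states, states[1:]):
        reward = 1.0 if nxt == GOAL else 0.0
        next_value = 0.0 if nxt == GOAL else value(nxt)  # goal is terminal
        signals.append(reward + GAMMA * next_value - value(current))
    return signals


trajectory = [0, 1, 2, 1, 2, 3, 4]  # the third move is a step backwards
print(outcome_signal(trajectory))
print([round(s, 3) for s in step_signals(trajectory)])
```

The outcome score only says that the episode eventually succeeded. The per-step signal is negative at the backward move and close to zero everywhere else, so the learner can tell which action was the mistake without waiting for the end of the task.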

---

SSI’s Mission — Building Safe Superintelligence

Ilya criticizes the industry’s rat race of half-finished product releases. SSI will work privately until it achieves safe superintelligence.

However, he admits that gradual release might be safer — people believe warnings only when they see AI’s unsettling abilities firsthand.

---

Redefining AGI — A 15-Year-Old Genius

Forecast: AGI in 5–20 years.

- In Ilya’s mind: Superintelligence = brilliant, adaptable teenager

- Capable of mastering medicine in days and performing surgeries immediately after

---

The Power of "Amalgamation"

Unlike humans, AI can:

- Learn in parallel across millions of domains

- Merge all experiences into one shared brain

- Achieve instant collective evolution

---

Humanity’s Path Forward

Two safeguards:

- Empathy for all sentient beings — not just humans

- Merge with AI via Brain–Computer Interfaces — to remain relevant post-singularity

---

Ilya’s Guiding Principle

> "Seek beauty."

When data runs dry, only intuition and belief in beauty, simplicity, and biological plausibility sustain progress.

---

