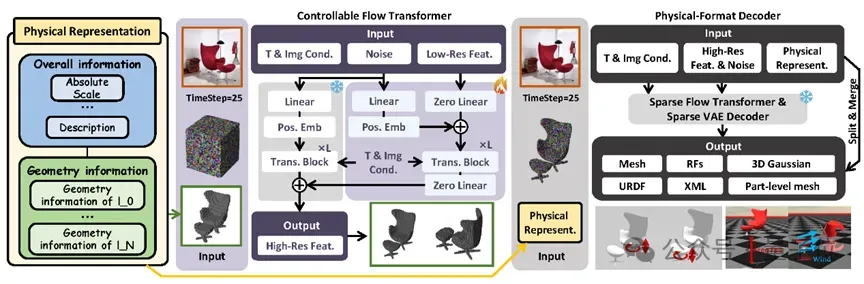

From Image to Simulation: This AI Generates “Ready-to-Use” 3D Assets for Immediate Robot Training

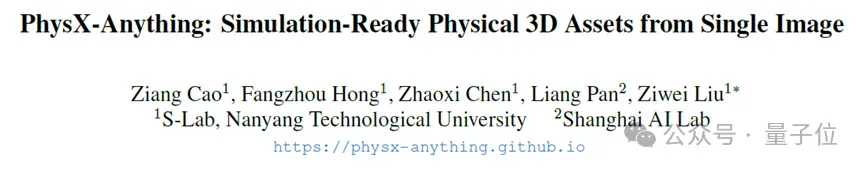

PhysX-Anything: Single-Image to Simulation-Ready 3D Assets

A single photo can now be transformed into simulation-ready 3D assets with realistic physical and joint properties.

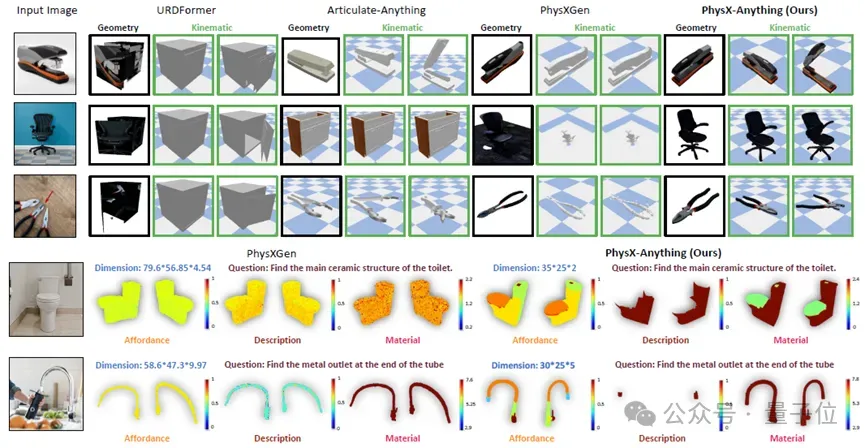

(Yes — in the image below, almost all objects are generated by AI.)

---

Why Simulation-Oriented 3D Generation Matters

As 3D modeling expands beyond static visual effects toward physically interactive assets, developers need objects that can be simulated and manipulated directly. These assets are crucial for building the next generation of embodied AI.

Current challenges:

- Most existing methods ignore physical and motion characteristics.

- Common outputs lack density, absolute scale, and joint constraints, making them unsuitable for integration into robotics or realistic simulators.

- Even physics-enabled methods like PhysXGen don't offer plug-and-play compatibility with mainstream physics engines.

---

Introducing PhysX-Anything

Researchers from Nanyang Technological University and the Shanghai Artificial Intelligence Laboratory have developed PhysX-Anything, which they describe as the first simulation-oriented framework for generating physical 3D assets.

Key features:

- Works from a single image.

- Generates high-quality 3D assets with explicit geometry, articulated joints, and physical parameters.

- Assets are ready for industry-standard simulators and control workflows.

---

How PhysX-Anything Works

1. Coarse-to-Fine Generation Framework

From a real-world image:

- Multi-turn dialogue generates:

  - Global physical descriptions.

  - Component-level geometric information.

- Physical representations are decoded into 3D assets in six common formats.
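The global-then-per-part flow can be pictured as a conversation that keeps its history across turns. The sketch below is only an illustration under stated assumptions: the prompts, the `ask` callable, and the idea of passing part names in by hand are all hypothetical, and the image input is omitted entirely. It is not the authors' prompting scheme.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def coarse_to_fine(ask: Callable[[List[Message]], str], part_names: List[str]) -> Dict[str, object]:
    """Run one global turn, then one turn per part, sharing the same history."""
    # Hypothetical prompt wording; a real pipeline would also attach the image.
    history: List[Message] = [
        {"role": "user", "content": "Describe the object's overall physical properties."}
    ]
    overall = ask(history)
    history.append({"role": "assistant", "content": overall})

    parts: Dict[str, str] = {}
    for name in part_names:
        history.append({"role": "user", "content": f"Give the coarse geometry of part '{name}'."})
        reply = ask(history)
        history.append({"role": "assistant", "content": reply})
        parts[name] = reply
    return {"overall": overall, "parts": parts, "turns": len(history)}


def fake_model(history: List[Message]) -> str:
    """Stand-in for a VLM: reports how many messages it has seen so far."""
    return f"reply after {len(history)} message(s)"


result = coarse_to_fine(fake_model, ["door", "handle"])
print(result["turns"])  # 6: three user turns and three replies
```

Because each per-part question is asked with the full history, the model answering it can see the global description it produced earlier, which is the point of the multi-turn setup.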

---

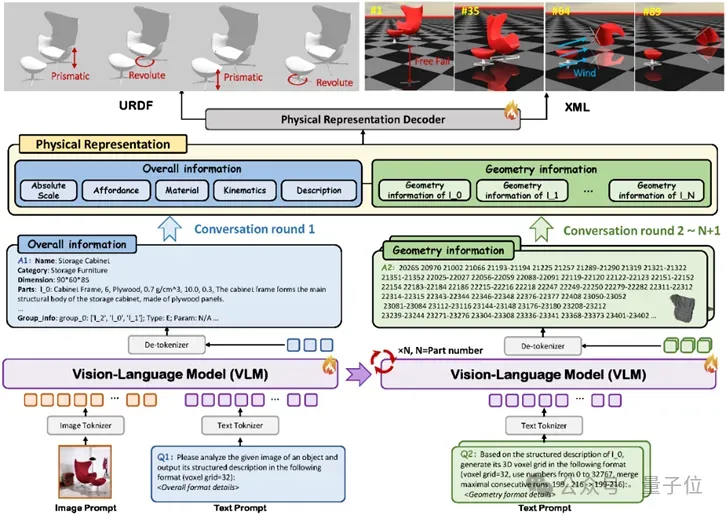

2. Novel Token-Efficient 3D Representation

Traditional VLM-based 3D generation struggles with long mesh token sequences. Solutions like 3D VQ-GAN require extra special tokens and increase complexity.

PhysX-Anything's approach:

- Voxel-based representation (32×32×32 grid).

- Coarse geometry modeled by VLM.

- Downstream decoder refines geometry into high-fidelity assets.
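To see why a coarse voxel grid is friendlier to a language model than a raw mesh, consider the sketch below. It voxelizes points into a 32×32×32 occupancy grid and writes only the occupied cells as text, merging consecutive indices into ranges. The serialization format (`start-end` ranges over linear indices) and the function names are assumptions made for illustration; the article does not spell out the exact encoding.

```python
import numpy as np

GRID = 32


def voxelize(points: np.ndarray, grid: int = GRID) -> np.ndarray:
    """Map points in the unit cube [0, 1)^3 to a boolean occupancy grid."""
    idx = np.clip((points * grid).astype(int), 0, grid - 1)
    occ = np.zeros((grid, grid, grid), dtype=bool)
    occ[idx[:, 0], idx[:, 1], idx[:, 2]] = True
    return occ


def to_text(occ: np.ndarray) -> str:
    """Serialize occupied cells as linear indices, merging consecutive runs."""
    flat = np.flatnonzero(occ.ravel())
    if flat.size == 0:
        return ""
    pieces = []
    start = prev = int(flat[0])
    for i in flat[1:]:
        i = int(i)
        if i != prev + 1:
            # Close the current run before starting a new one.
            pieces.append(str(start) if start == prev else f"{start}-{prev}")
            start = i
        prev = i
    pieces.append(str(start) if start == prev else f"{start}-{prev}")
    return " ".join(pieces)


occ = np.zeros((GRID, GRID, GRID), dtype=bool)
occ[0, 0, 0:4] = True   # four neighbouring cells along the last axis
occ[1, 0, 0] = True     # one isolated cell
print(to_text(occ))     # 0-3 1024
```

Empty space costs nothing in this encoding, and solid runs collapse to a single range, so the sequence length tracks the object's occupied structure rather than the full 32,768-cell grid.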

---

3. Physical Asset Schema

- Tree-like JSON-style format replaces standard URDF.

- Captures richer attributes and textual metadata for better VLM reasoning.

- Maps key kinematic parameters (e.g., motion range, axis positions) directly into voxel space.
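A tree-like schema of this kind might look like the following. Every field name here (`extent_m`, `density_kg_m3`, `axis_voxel`, and so on) is hypothetical, as is the convention that the grid spans a cube of side `extent_m`; the sketch only shows how part attributes, joints, and voxel-space coordinates can sit in one nested structure and be converted back to metric units.

```python
import json

GRID = 32

# Hypothetical asset description; keys and values are illustrative only.
asset = {
    "name": "cabinet",
    "extent_m": 0.8,  # assumed: side length of the cube the grid spans, in metres
    "root": {
        "name": "body",
        "material": "wood",
        "density_kg_m3": 700.0,
        "children": [
            {
                "name": "door",
                "material": "wood",
                "density_kg_m3": 700.0,
                "joint": {
                    "type": "revolute",
                    "axis_dir": [0, 0, 1],
                    "axis_voxel": [0, 31, 16],  # hinge position as a grid cell
                    "range_deg": [0, 90],
                },
                "children": [],
            }
        ],
    },
}


def voxel_to_metres(voxel, extent_m, grid=GRID):
    """Centre of a voxel cell, in metres measured from the grid corner."""
    return [(v + 0.5) * extent_m / grid for v in voxel]


def list_joints(node, extent_m, parent=None):
    """Walk the tree depth-first and yield (parent, child, type, axis position)."""
    joint = node.get("joint")
    if joint is not None:
        yield parent, node["name"], joint["type"], voxel_to_metres(joint["axis_voxel"], extent_m)
    for child in node["children"]:
        yield from list_joints(child, extent_m, parent=node["name"])


joints = list(list_joints(asset["root"], asset["extent_m"]))
text = json.dumps(asset)  # plain text that a language model can read or emit
```

Keeping joint positions in the same integer grid as the geometry means the model never has to reason about two coordinate systems at once; the metric conversion happens once, outside the model.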

---

4. Model Architecture and Training

- Fine-tuned Qwen2.5 on a custom physical 3D dataset.

- Multi-turn dialogue ensures accurate global descriptions and local details.

---

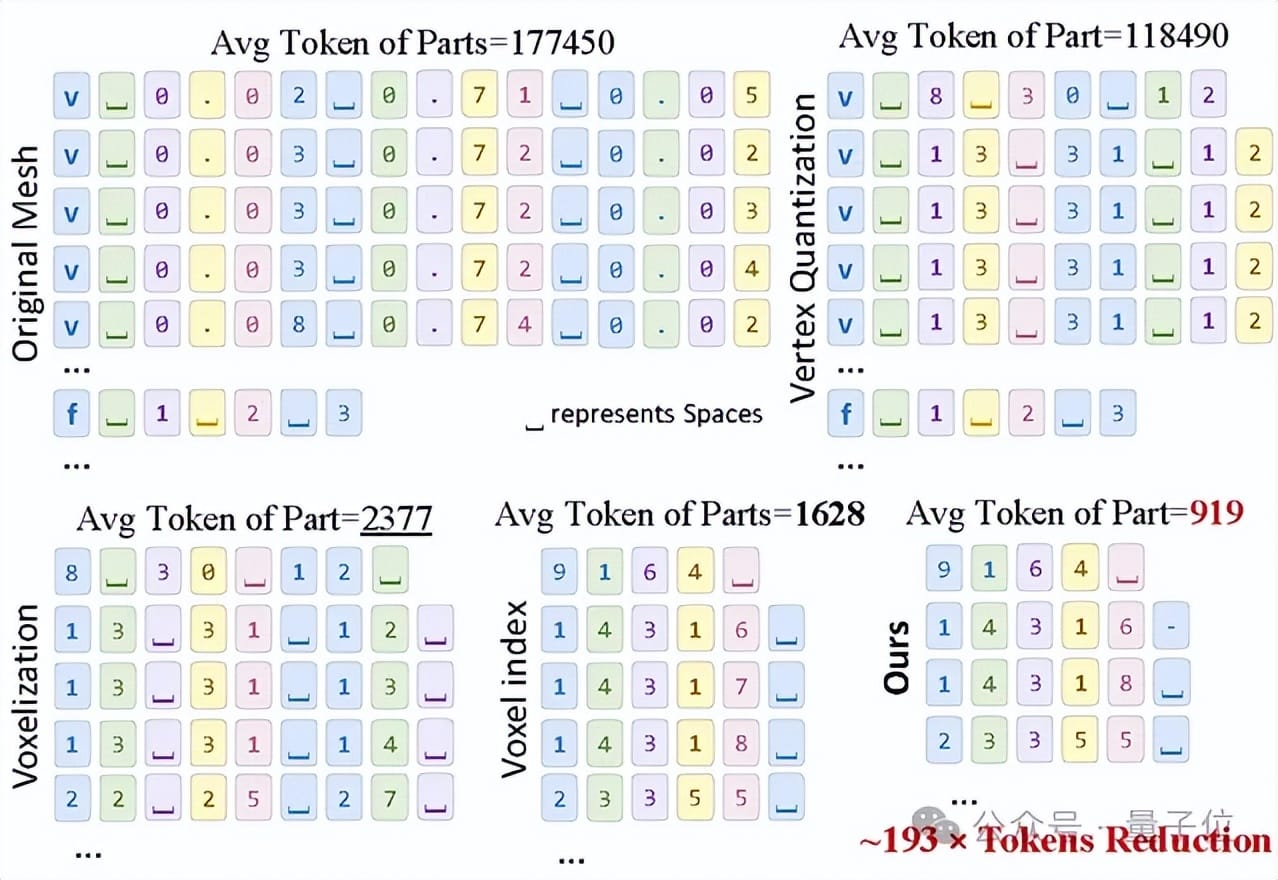

Controllable Fine Detail Generation

Inspired by ControlNet, PhysX-Anything uses:

- Coarse voxel guidance to steer fine-grained diffusion model generation.

- Structured latent diffusion decoding to produce multiple formats:

  - Mesh surfaces

  - Radiance fields

  - 3D Gaussians

- Nearest neighbor segmentation to split meshes into part-level components.

- Global structure and fine geometry are then combined to output URDF, XML, and mesh files ready for simulation.
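The nearest-neighbor segmentation step can be sketched as follows: each vertex of the refined mesh takes the part label of the closest labelled coarse voxel, and each triangle then goes to the part that most of its vertices belong to. This is a minimal illustration, not the authors' code; the function names, the brute-force distance computation, and the majority-vote rule for faces are assumptions.

```python
import numpy as np


def label_vertices(vertices: np.ndarray, voxel_centres: np.ndarray, voxel_labels: np.ndarray) -> np.ndarray:
    """Give each vertex the part label of its nearest labelled voxel centre."""
    # Pairwise squared distances, shape (num_vertices, num_voxels).
    diff = vertices[:, None, :] - voxel_centres[None, :, :]
    d2 = np.einsum("ijk,ijk->ij", diff, diff)
    return voxel_labels[np.argmin(d2, axis=1)]


def split_faces(faces: np.ndarray, vertex_labels: np.ndarray) -> dict:
    """Group triangles by the majority label of their three vertices."""
    face_labels = vertex_labels[faces]  # shape (num_faces, 3)
    parts = {}
    for face, labels in zip(faces, face_labels):
        values, counts = np.unique(labels, return_counts=True)
        # On a three-way tie, argmax picks the smallest label.
        parts.setdefault(int(values[np.argmax(counts)]), []).append(face.tolist())
    return parts


voxel_centres = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
voxel_labels = np.array([0, 1])
vertices = np.array([[0.1, 0.0, 0.0], [0.2, 0.1, 0.0], [0.9, 0.0, 0.0], [0.8, 0.1, 0.0]])
faces = np.array([[0, 1, 2], [1, 2, 3]])

parts = split_faces(faces, label_vertices(vertices, voxel_centres, voxel_labels))
print(parts)  # {0: [[0, 1, 2]], 1: [[1, 2, 3]]}
```

The all-pairs distance matrix is fine for a toy example; for a high-resolution mesh a spatial index such as a k-d tree would be the usual replacement.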

---

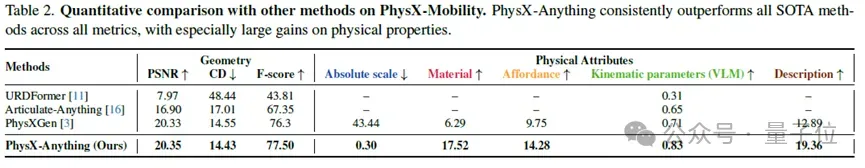

Benchmarking Against State-of-the-Art

Compared with URDFormer, Articulate-Anything, and PhysXGen, PhysX-Anything showed:

- Best scores in geometry and physical metrics.

- Lowest absolute scale error.

- Most coherent part-level textual descriptions.

---

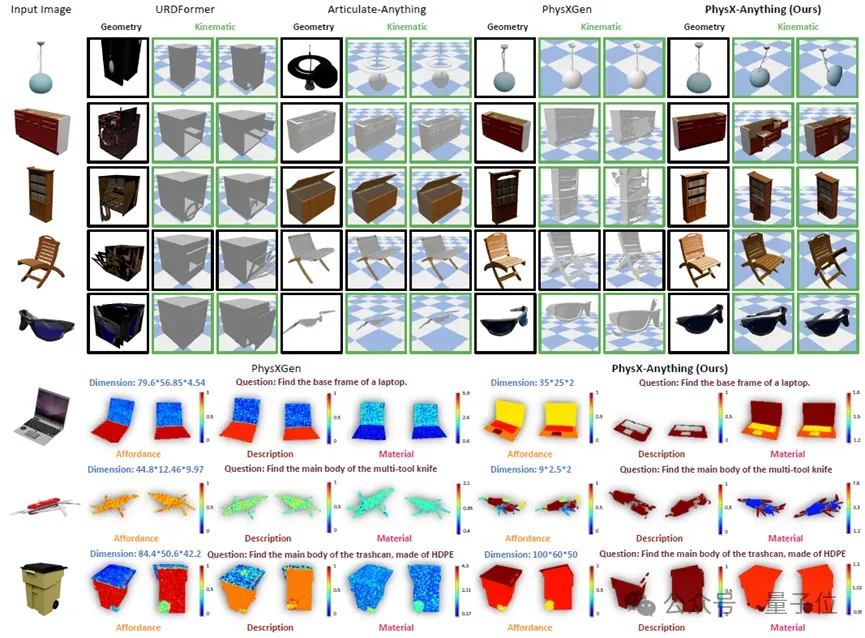

Qualitative Advantages

- Strong generalization ability beyond retrieval-based methods.

- More credible and reasonable physical properties than PhysXGen.

---

Evaluation

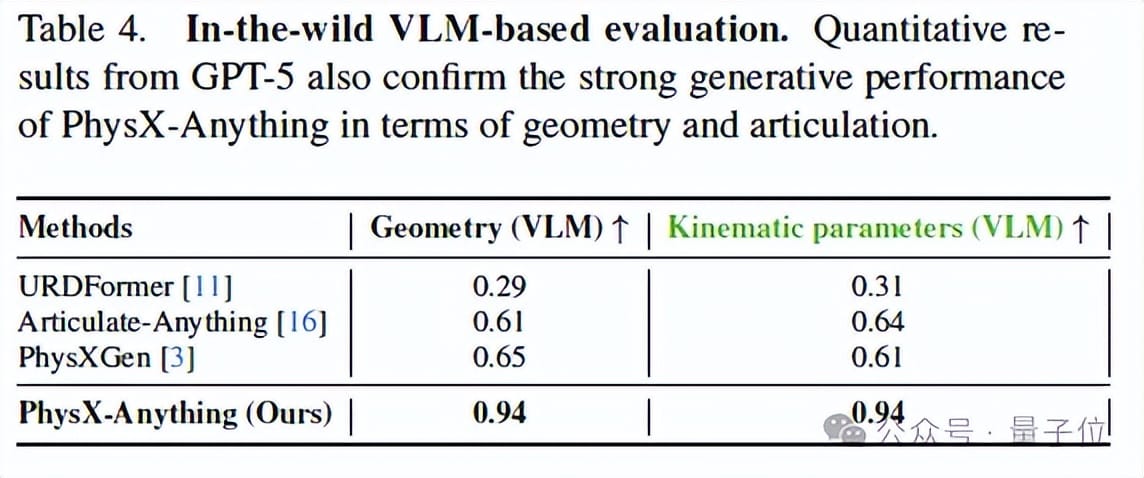

VLM-Based Tests

- Real-world everyday images used.

- Focused on geometry + joint motion quality.

- PhysX-Anything scored clearly higher than the baselines on kinematic accuracy and generalization.

---

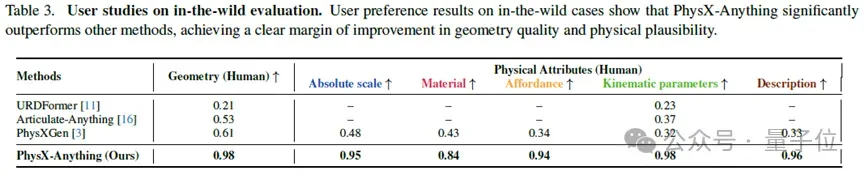

Human Judgments

- Volunteers scored generated structures on geometry and physical realism.

- PhysX-Anything ranked highest in both categories.

---

Real-World Visualization

PhysX-Anything produces:

- Accurate geometry.

- Realistic joint motion.

- Plausible physical attributes.

---

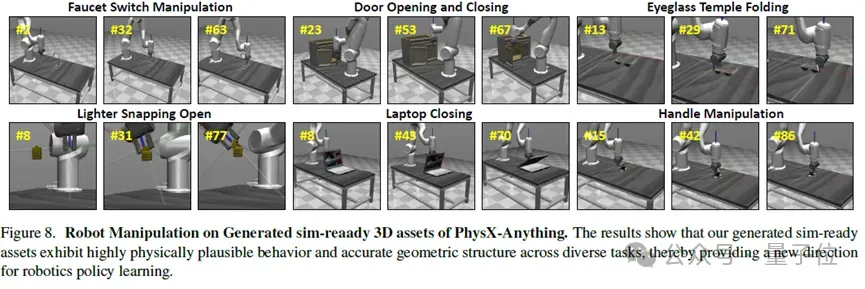

Downstream Simulation Tests

Using a MuJoCo-style simulator, generated assets such as faucets, cabinets, lighters, and glasses were:

- Directly imported into simulations.

- Applied successfully to robotic policy learning.
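For readers unfamiliar with what "directly imported" involves, the sketch below writes a minimal two-link URDF with one revolute joint using only the Python standard library. It is a hand-built illustration of the file format, not output from PhysX-Anything: the function name is hypothetical, the inertia values are placeholders, and visual and collision geometry are left out.

```python
import xml.etree.ElementTree as ET


def make_urdf(name, parent, child, axis, lower, upper, mass):
    """Build a two-link URDF with one revolute joint and return it as a string."""
    robot = ET.Element("robot", name=name)
    for link_name in (parent, child):
        link = ET.SubElement(robot, "link", name=link_name)
        inertial = ET.SubElement(link, "inertial")
        ET.SubElement(inertial, "mass", value=str(mass[link_name]))
        # Placeholder inertia tensor; a real asset would derive it from geometry and density.
        ET.SubElement(inertial, "inertia", ixx="0.01", ixy="0", ixz="0",
                      iyy="0.01", iyz="0", izz="0.01")
    joint = ET.SubElement(robot, "joint", name=f"{parent}_to_{child}", type="revolute")
    ET.SubElement(joint, "parent", link=parent)
    ET.SubElement(joint, "child", link=child)
    ET.SubElement(joint, "axis", xyz=" ".join(str(a) for a in axis))
    # Joint limits are in radians; effort and velocity are placeholder values.
    ET.SubElement(joint, "limit", lower=str(lower), upper=str(upper),
                  effort="10", velocity="1")
    return ET.tostring(robot, encoding="unicode")


urdf = make_urdf("cabinet", "body", "door", (0, 0, 1), 0.0, 1.57,
                 {"body": 10.0, "door": 2.0})
print(urdf)
```

Mass, joint axis, and joint limits are exactly the quantities that purely visual 3D generators leave out, which is why their meshes cannot be loaded into a physics engine without manual annotation.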

---

Key Contributions

- First simulation-oriented physical 3D generation paradigm.

- Unified VLM pipeline with a custom 3D representation that compresses the token count by 193×.

- New dataset PhysX-Mobility with 47 categories & rich annotations.

- Proven generalization and simulation readiness in robotics tasks.

---

Impact

PhysX-Anything marks a paradigm shift from “visual modeling” to “physical modeling”, unlocking new directions in:

- 3D vision

- Embodied intelligence

- Robotics research

---

Video: https://mp.weixin.qq.com/s/gUooZUSc1yWQlf4NpViZrA

Paper: https://arxiv.org/abs/2511.13648

Project: https://physx-anything.github.io/

GitHub: https://github.com/ziangcao0312/PhysX-Anything

Authors:

- First author: Cao Ziang, NTU PhD student (Computer Vision, 3D AIGC, Embodied Intelligence)

- Collaborators: Hong Fangzhou, Chen Zhaoxi (NTU), Pan Liang (Shanghai AI Lab)

- Corresponding author: Professor Liu Ziwei (NTU)
