In-Depth Analysis: Unpacking the Secrets Behind vLLM’s High-Throughput Inference System

Introduction

As large-model applications mature, both research and industry are focused on making inference faster and more efficient.

vLLM is a high-performance inference framework built for large language models (LLMs). It raises throughput and lowers response latency without compromising accuracy, through innovations in:

- GPU memory management

- Parallel scheduling

- KV cache optimization

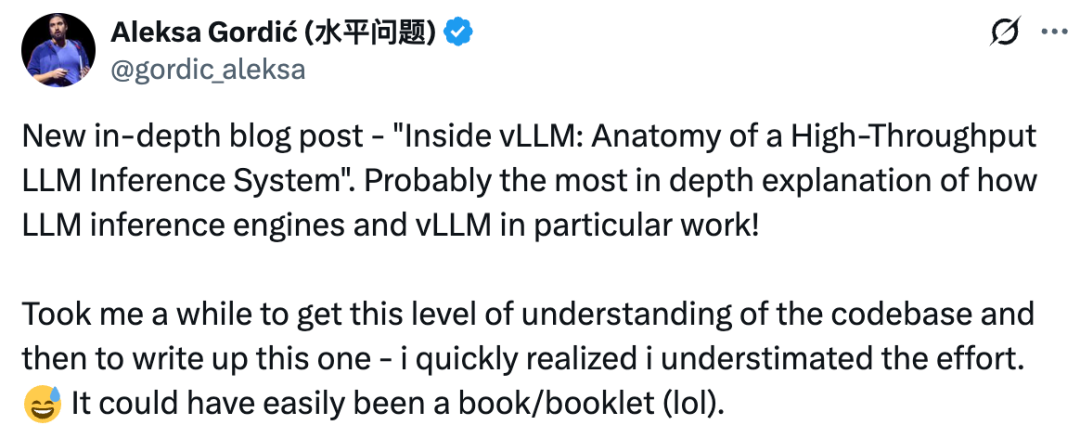

See the full technical deep dive here: Inside vLLM: Anatomy of a High-Throughput LLM Inference System.

---

Aleksa Gordic, formerly at Google DeepMind and Microsoft, spent considerable effort studying vLLM's codebase. The resulting write-up could easily be expanded into a full book.

Blog Quick Facts

- Title: Inside vLLM: Anatomy of a High-Throughput LLM Inference System

- Link: Blog Post

---

Topics Covered in the Blog

- Inference engine workflow: request handling, scheduling, paged attention, continuous batching

- Advanced features: chunked prefill, prefix caching, guided decoding, speculative decoding, decoupling prefill & decode

- Scalability: from small models on a single GPU to trillion-parameter multi-node deployments (TP, PP, SP)

- Web deployment: APIs, load balancing, data-parallel coordination, multi-engine architectures

- Performance metrics: TTFT, ITL, e2e latency, throughput, GPU roofline model

The blog is packed with examples, diagrams, and visualizations to help you grasp inference engine design.

---

LLM Engine Core Concepts

The LLM Engine is vLLM's core building block:

- Offline usage: on its own, the engine delivers high-throughput inference without a web-serving layer

- Core components:

  - Configuration

  - Input processor

  - EngineCore client

  - Output processor

It can be extended to online, async, multi-GPU, and multi-node systems.

---

Offline Inference Example

```python
from vllm import LLM, SamplingParams

prompts = ["Hello, my name is", "The president of the United States is"]

sampling_params = SamplingParams(temperature=0.8, top_p=0.95)

def main():
    llm = LLM(model="TinyLlama/TinyLlama-1.1B-Chat-v1.0")
    outputs = llm.generate(prompts, sampling_params)

if __name__ == "__main__":
    main()
```

Environment Variables:

```
VLLM_USE_V1="1"
VLLM_ENABLE_V1_MULTIPROCESSING="0"
```

---

Characteristics

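- Offline: no web-serving or distributed-system scaffolding

- Synchronous: everything runs in a single blocking process

- Single GPU: no data, tensor, pipeline, or expert parallelism (DP/TP/PP/EP)

- Standard Transformer architecture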

Workflow:

- Instantiate engine

- Call `generate` with prompts

---

Engine Components

Constructor

- Configuration (parameters, model, cache)

- Processor (validate, tokenize)

- EngineCore client

- Output processor

EngineCore

- Model Executor: drives model computation

- Structured Output Manager: for guided decoding

- Scheduler: decides execution sequence

---

Scheduler

- Policies: FCFS or priority

- Queues: waiting & running

- KV cache manager: the heart of paged attention; it maintains `free_block_queue`, the pool of available KV cache blocks.
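
To make the role of the free-block pool concrete, here is a minimal sketch. It is not vLLM's implementation: `BlockPool`, `allocate`, and `free` are hypothetical names chosen for illustration, and only `free_block_queue` comes from the text above. Block IDs stand in for fixed-size regions of GPU memory.

```python
from collections import deque
from typing import Optional


class BlockPool:
    """Toy pool of fixed-size KV cache blocks (illustrative only)."""

    def __init__(self, num_blocks: int) -> None:
        # Every block starts out free; an ID stands in for a GPU memory region.
        self.free_block_queue = deque(range(num_blocks))

    def allocate(self, n: int) -> Optional[list]:
        """Take n block IDs from the head of the queue, or None if too few are free."""
        if n > len(self.free_block_queue):
            return None
        return [self.free_block_queue.popleft() for _ in range(n)]

    def free(self, block_ids: list) -> None:
        """Return blocks to the tail of the queue so later requests can reuse them."""
        self.free_block_queue.extend(block_ids)
```

Returning `None` when the pool is exhausted mirrors the idea that the scheduler must then leave the request waiting rather than run it.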

---

Worker Initialization

1. Device Init

   - Select CUDA device

   - Verify dtype support

   - Configure parallelism (DP, TP, PP, EP)

   - Prepare model runner & input batch

2. Load Model

   - Instantiate architecture

   - Load weights

   - Call `.eval()`

   - Optional: `torch.compile()`

3. KV Cache Init

   - Get spec for each attention layer

   - Profiling pass & GPU memory snapshot

   - Bind cache tensors to layers

   - Configure attention metadata

   - Warm up CUDA graphs (unless eager mode is enforced)

---

Generate Function

Step 1: Accept Request

- Create request ID

- Tokenize prompt

- Package metadata

- Add to scheduler queue
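
The four intake steps above can be sketched in a few lines. This is a toy under stated assumptions: `Request`, `toy_tokenize`, `add_request`, and the module-level `waiting` queue are hypothetical names, and the word-level tokenizer is a stand-in for a real one.

```python
import itertools
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Request:
    """Toy request record; field names are illustrative."""
    request_id: str
    prompt_token_ids: list
    metadata: dict = field(default_factory=dict)


_request_counter = itertools.count()
waiting = deque()  # stand-in for the scheduler's waiting queue


def toy_tokenize(prompt):
    # Stand-in tokenizer: one deterministic ID per whitespace-separated word.
    return [sum(word.encode("utf-8")) % 50_000 for word in prompt.split()]


def add_request(prompt, **metadata):
    request = Request(
        request_id=str(next(_request_counter)),  # 1. create request ID
        prompt_token_ids=toy_tokenize(prompt),   # 2. tokenize prompt
        metadata=metadata,                       # 3. package metadata
    )
    waiting.append(request)                      # 4. add to scheduler queue
    return request
```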

---

Step Loop: `step()`

- Schedule requests

- Forward pass

- Postprocess outputs

  - Append sampled tokens to each request

  - Detokenize

  - Check stop conditions

  - Clean up KV blocks of finished requests
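
A toy version of one engine step, covering scheduling, the forward pass, and postprocessing, might look like the sketch below. Everything here is an assumption made for illustration: `Req`, `fake_forward`, and the `max_running` limit are hypothetical, and the "model" simply emits `(last token + 1) % 5` so that it eventually produces the end-of-sequence ID `0`.

```python
from dataclasses import dataclass, field

EOS = 0  # hypothetical end-of-sequence token ID


@dataclass
class Req:
    prompt: list
    max_tokens: int
    out: list = field(default_factory=list)
    finished: bool = False


def fake_forward(batch):
    # Stand-in for the model: next token is (last token + 1) % 5.
    return [((r.out or r.prompt)[-1] + 1) % 5 for r in batch]


def step(waiting, running, max_running=2):
    # 1. Schedule: admit waiting requests while there is room in the batch.
    while waiting and len(running) < max_running:
        running.append(waiting.pop(0))
    # 2. Forward pass over every running request at once.
    next_tokens = fake_forward(running)
    # 3. Postprocess: append tokens, check stop conditions, drop finished requests.
    finished = []
    for req, tok in zip(running, next_tokens):
        req.out.append(tok)
        if tok == EOS or len(req.out) >= req.max_tokens:
            req.finished = True
            finished.append(req)
    running[:] = [r for r in running if not r.finished]
    return finished
```

Calling `step` repeatedly until both lists are empty gives the overall generation loop; a real engine would also release each finished request's KV blocks at the point where this sketch removes it from `running`.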

---

Stop Conditions

- Token limits exceeded

- EOS token (unless ignored)

- Matching stop token IDs

- Stop string in output
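
The four conditions can be expressed as a single check run after every generated token. The sketch below is illustrative only: `check_stop` and its return labels are hypothetical, and the order of the checks simply follows the list above.

```python
from typing import Optional


def check_stop(output_ids, text, *, max_tokens, eos_id,
               ignore_eos=False, stop_token_ids=(), stop_strings=()) -> Optional[str]:
    """Return a reason string if generation should stop, else None."""
    if not output_ids:
        return None
    last = output_ids[-1]
    if len(output_ids) >= max_tokens:
        return "length"        # token limit reached
    if last == eos_id and not ignore_eos:
        return "eos"           # end-of-sequence token, unless ignored
    if last in stop_token_ids:
        return "stop_token"    # matches a user-supplied stop token ID
    if any(s in text for s in stop_strings):
        return "stop_string"   # a stop string appears in the detokenized output
    return None
```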

---

Prefill vs Decode Workloads

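- Prefill: compute-bound, because all prompt tokens are processed in one pass

- Decode: memory-bound, because each step produces one token per sequence while still reading the weights and the KV cache

- The V1 scheduler can mix both kinds of work in a single step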

`allocate_slots` controls KV block allocation for both types, based on token count and availability.
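
The arithmetic behind such an allocation can be sketched as follows. `blocks_needed` is a hypothetical helper, not vLLM's `allocate_slots`, and the block size of 16 tokens is an assumption for the example.

```python
import math


def blocks_needed(num_computed_tokens, num_new_tokens, block_size=16, blocks_held=0):
    """How many extra KV blocks a request needs to hold its new tokens."""
    total_tokens = num_computed_tokens + num_new_tokens
    return max(0, math.ceil(total_tokens / block_size) - blocks_held)
```

Under these assumptions a 100-token prefill needs `ceil(100 / 16) = 7` blocks up front, while a decode step usually needs none and only requests a new block when its last block fills up.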

---

Forward Pass Flow

- Update state

- Prepare inputs (to GPU)

- Execute model with paged attention & continuous batching

- Extract the hidden state at each sequence's last position

- Sample the next token (greedy, top-k, top-p)

Two execution modes:

- Eager: direct PyTorch

- Captured: CUDA Graph replay
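
The sampling step at the end of the forward pass can be illustrated in pure Python. This is a sketch, not the engine's GPU sampler: `sample` is a hypothetical function, and treating `temperature == 0` as greedy decoding is a convention assumed here.

```python
import math
import random


def sample(logits, temperature=1.0, top_k=0, top_p=1.0, rng=None):
    """Pick a token ID from raw logits using greedy, top-k, or top-p sampling."""
    if temperature == 0.0:
        # Greedy: take the highest-scoring token.
        return max(range(len(logits)), key=logits.__getitem__)
    rng = rng or random.Random()
    # Softmax with temperature (subtract the max for numerical stability).
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    probs = sorted(((e / total, i) for i, e in enumerate(exps)), reverse=True)
    if top_k > 0:
        probs = probs[:top_k]  # keep only the k most likely tokens
    # Top-p: keep the smallest prefix whose cumulative probability reaches top_p.
    kept, cumulative = [], 0.0
    for p, i in probs:
        kept.append((p, i))
        cumulative += p
        if cumulative >= top_p:
            break
    ids = [i for _, i in kept]
    weights = [p for p, _ in kept]
    return rng.choices(ids, weights=weights, k=1)[0]
```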

---

Advanced Features

Chunked Prefill

Divide long prompts into smaller chunks to avoid blocking other requests.
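
As a rough illustration, splitting a prompt into per-step chunks is simple range arithmetic. `prefill_chunks` and `chunk_size` are hypothetical names; in a real engine the chunk length would come from the scheduler's per-step token budget.

```python
def prefill_chunks(num_prompt_tokens, chunk_size):
    """Return (start, end) token ranges, one per engine step."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    return [
        (start, min(start + chunk_size, num_prompt_tokens))
        for start in range(0, num_prompt_tokens, chunk_size)
    ]
```

With a chunk size of 8, a 20-token prompt is prefilled over three steps, and other requests can be scheduled alongside each chunk.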

---

Prefix Caching

Reuse computed KV blocks for repeated prompt prefixes.

Each full block of tokens is hashed together with its parent block's hash, and a lookup on those hashes detects cache hits.
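
A minimal sketch of per-block hashing and hit detection follows. It assumes a block size of 16 tokens by default and uses SHA-256 purely for illustration; `block_hashes` and `count_cached_blocks` are hypothetical names, and vLLM's actual hash inputs may differ.

```python
import hashlib


def block_hashes(token_ids, block_size=16):
    """Hash each full block, chaining in the previous block's hash."""
    hashes = []
    parent = b""
    for start in range(0, len(token_ids) - block_size + 1, block_size):
        block = tuple(token_ids[start:start + block_size])
        digest = hashlib.sha256(parent + repr(block).encode("utf-8")).digest()
        hashes.append(digest)
        parent = digest  # a block's identity depends on everything before it
    return hashes


def count_cached_blocks(token_ids, cache, block_size=16):
    """Count leading blocks whose hashes are already in the cache."""
    hits = 0
    for h in block_hashes(token_ids, block_size):
        if h not in cache:
            break  # the first miss ends the reusable prefix
        hits += 1
    return hits
```

Chaining the parent hash means two blocks with identical tokens only match when their entire preceding prefix also matches, which is what makes reuse safe.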

---

Guided Decoding (FSM)

At each step, mask the logits so that only tokens permitted by the grammar's finite-state machine (FSM) can be sampled.
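
The masking idea can be shown with a four-token vocabulary and a hand-written state machine. The vocabulary, the `FSM` table, and both functions are hypothetical; a real system compiles the grammar into a token-level mask with a dedicated backend.

```python
import math

VOCAB = ["yes", "no", "<eos>", "maybe"]

# Hypothetical grammar: ("yes" | "no") followed by "<eos>".
FSM = {
    "start": {"yes": "answered", "no": "answered"},
    "answered": {"<eos>": "done"},
}


def mask_logits(logits, state):
    """Set the logit of every token the FSM disallows in this state to -inf."""
    allowed = FSM.get(state, {})
    return [x if VOCAB[i] in allowed else -math.inf for i, x in enumerate(logits)]


def guided_greedy(logits_per_step):
    """Greedy decoding in which each step only sees grammar-conforming tokens."""
    state, output = "start", []
    for logits in logits_per_step:
        masked = mask_logits(logits, state)
        token = VOCAB[max(range(len(masked)), key=masked.__getitem__)]
        output.append(token)
        state = FSM[state][token]  # advance the FSM with the chosen token
        if state == "done":
            break
    return output
```

Even when the unconstrained model strongly prefers `"maybe"`, the mask forces the output to be `"yes"` or `"no"` followed by `"<eos>"`.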

---

Speculative Decoding

Use a cheap drafter to propose several tokens, then have the large model verify them in a single forward pass.

Methods:

- n-gram

- EAGLE

- Medusa
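
The n-gram method is the easiest to sketch: look for an earlier occurrence of the most recent tokens and propose whatever followed it. The code below is a simplified illustration with hypothetical names (`ngram_propose`, `verify_greedy`, `target_next`). For clarity the verifier calls the target model once per position, whereas a real engine scores all draft positions in one forward pass.

```python
def ngram_propose(tokens, n=2, k=3):
    """Propose up to k tokens that followed the latest earlier match of the last n tokens."""
    if len(tokens) < n:
        return []
    suffix = tokens[-n:]
    # Scan backwards over earlier positions, skipping the suffix itself.
    for start in range(len(tokens) - n - 1, -1, -1):
        if tokens[start:start + n] == suffix:
            return tokens[start + n:start + n + k]
    return []


def verify_greedy(tokens, draft, target_next):
    """Accept draft tokens while the target model's greedy choice agrees.

    target_next(seq) is a hypothetical callable returning the large model's
    greedy next token for seq.
    """
    accepted = []
    for proposed in draft:
        expected = target_next(tokens + accepted)
        if expected != proposed:
            accepted.append(expected)  # replace the first mismatch and stop
            return accepted
        accepted.append(proposed)
    # Every draft token matched, so the target model's next token comes for free.
    accepted.append(target_next(tokens + accepted))
    return accepted
```

With greedy decoding the accepted sequence is identical to what the large model would have produced alone; the gain is that several tokens can be confirmed per step.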

---

Split Prefill/Decode

Separate workers for compute-bound prefill and latency-sensitive decode, exchanging KV via connectors.

---

Scaling Up

MultiProcExecutor

Enable TP/PP > 1 across GPUs/nodes:

- Coordinate via RPC queues

- Spawn worker processes

- Synchronize tasks and collect results

---

Distributed Deployment

Combine TP and DP across nodes:

- Coordinate via DP groups

- API server in front for request routing

- Engines in headless mode for back-end

---

API Serving

Wrap engine in async client:

- Input/output queues

- Async tasks for socket communication

- FastAPI endpoints (`/v1/completions`, `/v1/chat/completions`)

- Load balancing via DP coordinator
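
The queue-based hand-off between an async front end and the engine can be sketched with plain `asyncio`. This is a deliberately simplified stand-in: the names are hypothetical, in-process queues replace socket communication, a single shared output queue serves one client, and the "engine" just echoes the prompt word by word.

```python
import asyncio


async def engine_loop(input_q, output_q):
    """Stand-in for the engine core: stream each prompt back one word at a time."""
    while True:
        item = await input_q.get()
        if item is None:  # shutdown sentinel
            break
        request_id, prompt = item
        for token in prompt.split():
            await output_q.put((request_id, token))
        await output_q.put((request_id, None))  # end-of-stream marker


async def generate(request_id, prompt, input_q, output_q):
    """Client side: submit a request, then collect its streamed tokens."""
    await input_q.put((request_id, prompt))
    tokens = []
    while True:
        _, token = await output_q.get()
        if token is None:
            break
        tokens.append(token)
    return tokens


async def demo():
    input_q, output_q = asyncio.Queue(), asyncio.Queue()
    engine_task = asyncio.create_task(engine_loop(input_q, output_q))
    result = await generate("req-0", "hello from vllm", input_q, output_q)
    await input_q.put(None)  # ask the engine loop to exit
    await engine_task
    return result
```

Running `asyncio.run(demo())` returns the streamed words in order; an HTTP endpoint would wrap `generate` and forward each token to the caller as it arrives.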

---

Benchmarking and Auto-Tuning

Metrics

- Latency: TTFT (time to first token), ITL (inter-token latency)

- Throughput: tokens/sec

Larger batches raise throughput but also increase inter-token latency, so the two must be traded off.
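
These metrics fall out directly from per-token timestamps. The helper below is a sketch with a hypothetical name and return format; it assumes timestamps in seconds and at least one generated token.

```python
def latency_metrics(request_start, token_times):
    """Compute TTFT, mean ITL, end-to-end latency, and throughput for one request."""
    if not token_times:
        raise ValueError("need at least one token timestamp")
    ttft = token_times[0] - request_start
    gaps = [later - earlier for earlier, later in zip(token_times, token_times[1:])]
    itl = sum(gaps) / len(gaps) if gaps else 0.0
    e2e = token_times[-1] - request_start
    return {
        "ttft": ttft,                           # time to first token
        "itl": itl,                             # mean inter-token latency
        "e2e": e2e,                             # end-to-end latency
        "tokens_per_sec": len(token_times) / e2e if e2e > 0 else float("inf"),
    }
```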

---

vLLM CLI:

```
vllm bench latency --model <model-name> --input-len 32 --output-len 128 --batch-size 8
```

---

Summary

We explored:

- Single-process core engine

- Advanced optimizations (chunked prefill, prefix caching, guided/speculative decoding)

- Multi-process scaling

- Distributed deployment

- Benchmarking performance

