Why We Rewrote Our Rule Engine in the AI Era: The QLExpress4 Refactoring Journey

QLExpress4: Why a Rule Engine Still Matters in the AI Era

Article No. 125 of 2025

(Estimated reading time: 15 minutes)

---

> “It’s already the AI era — why are you still working on rule engines?”

> — A friend casually teased me one day.

Introduction

QLExpress is an embeddable Java scripting engine with Java-like syntax. Its lightweight, flexible, easy-to-integrate design has made it widely adopted for business rules.

Despite the rise of AI, developer demand for deterministic rule engines is not shrinking; it is growing. In fact:

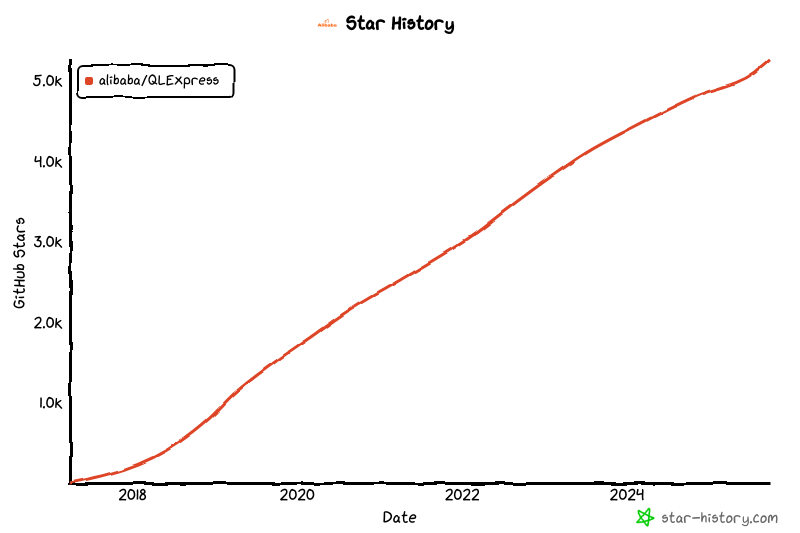

- Since I became a maintainer in 2022, QLExpress's GitHub stars have more than doubled.

- The growth has been driven by word of mouth and by our recent work on QLExpress4, our largest refactoring ever.

---

Why Continue in the AI Era?

- Open-source Responsibility
  - We take our long-standing commitment to the community seriously. Abandoning QLExpress would throw away years of trust built with our users.
- Demand Is Stronger Than Ever
  - AI can complement, but not replace, the deterministic logic and traceable business rules that QLExpress provides.
- Technical Debt in Legacy Code
  - After nearly a decade, the original codebase had accumulated more than 300 unresolved issues. Rather than patch it, we rewrote it from scratch.

---

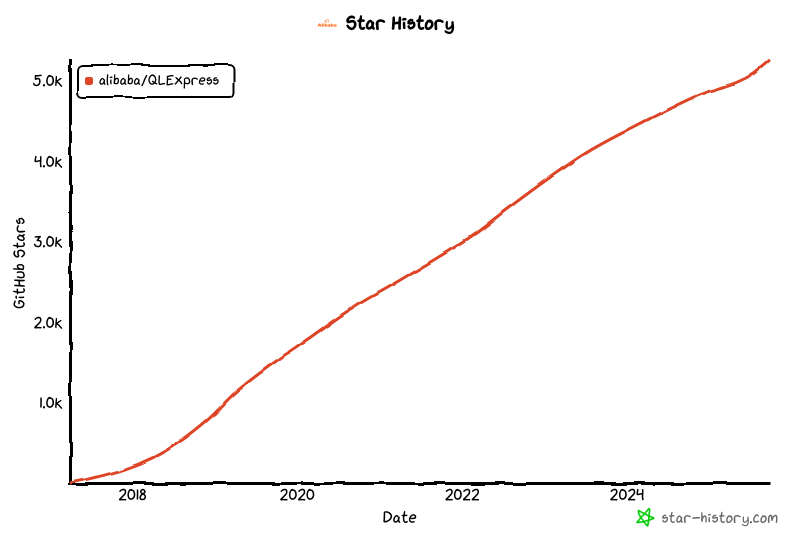

Performance & UX in QLExpress4

- Compilation speed: ~10× faster than QLExpress3
- Execution speed: ~2× faster than QLExpress3
- Better error messages: errors are pinpointed down to the exact token
- Native JSON syntax: list and map literals, plus direct Java object creation
- Expression tracing: better observability for both humans and AI

---

01 — New Feature Scenarios

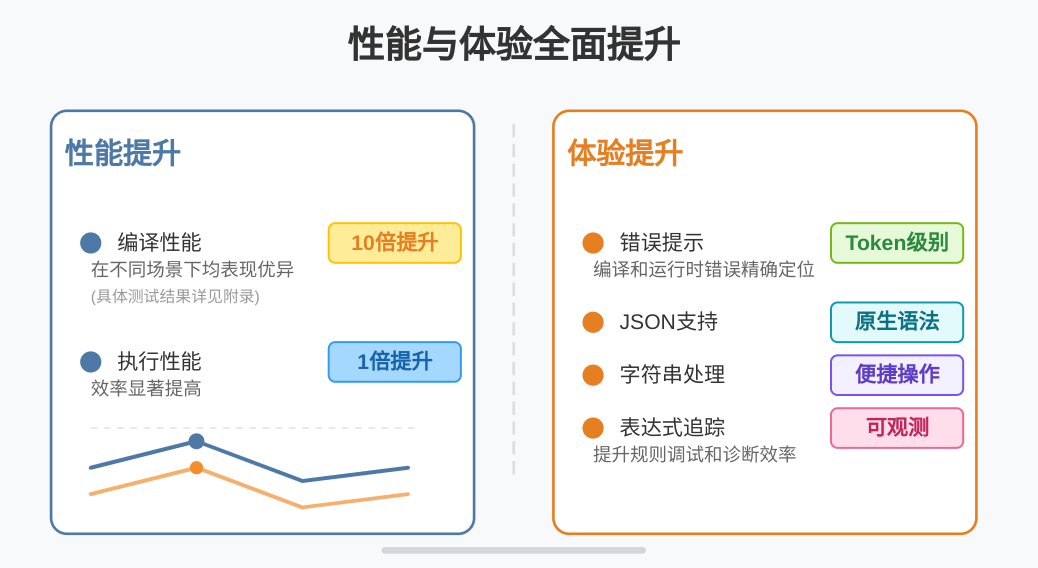

1.1 Rule Attribution Clustering — Taotian Group

Scenario:

Consider a complex promotion rule such as `isVip && not logged in for over 10 days`. Once the rule is live, how do you tell which condition is blocking the most users?

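Solution: QLExpress4's expression tracing captures the intermediate value of every sub-expression, so you can see which condition failed in each evaluation and aggregate the results into root-cause attribution reports for business analysis.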

📄 Docs: Expression Calculation Tracing
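Once each evaluation yields per-condition values, attribution is plain aggregation. The Java sketch below is hypothetical and does not use QLExpress4's tracing API: a hand-written short-circuit loop over named conditions stands in for the trace, and the condition names (`isVip`, `inactiveOver10Days`) and context keys are assumptions based on the example rule. For each user it records the first condition that evaluated to false, then counts those blockers.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Predicate;

// Hypothetical sketch of rule attribution; not QLExpress4's tracing API.
public class AttributionSketch {
    // Evaluates named conditions in order with AND short-circuiting (like `isVip && ...`)
    // and returns the name of the first one that is false, or null if all of them hold.
    static String firstBlocker(Map<String, Predicate<Map<String, Object>>> conditions,
                               Map<String, Object> user) {
        for (Map.Entry<String, Predicate<Map<String, Object>>> c : conditions.entrySet()) {
            if (!c.getValue().test(user)) {
                return c.getKey();
            }
        }
        return null;
    }

    // Counts how often each condition was the blocker across many evaluations.
    static Map<String, Integer> attribute(Map<String, Predicate<Map<String, Object>>> conditions,
                                          List<Map<String, Object>> users) {
        Map<String, Integer> counts = new LinkedHashMap<>();
        for (Map<String, Object> user : users) {
            String blocker = firstBlocker(conditions, user);
            counts.merge(blocker == null ? "passed" : blocker, 1, Integer::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        // Hypothetical encoding of `isVip && not logged in for over 10 days`.
        Map<String, Predicate<Map<String, Object>>> rule = new LinkedHashMap<>();
        rule.put("isVip", u -> (Boolean) u.get("isVip"));
        rule.put("inactiveOver10Days", u -> (Integer) u.get("daysSinceLogin") > 10);

        List<Map<String, Object>> users = List.of(
            Map.<String, Object>of("isVip", false, "daysSinceLogin", 30),
            Map.<String, Object>of("isVip", true, "daysSinceLogin", 2),
            Map.<String, Object>of("isVip", true, "daysSinceLogin", 5),
            Map.<String, Object>of("isVip", true, "daysSinceLogin", 15));
        System.out.println(attribute(rule, users)); // {isVip=1, inactiveOver10Days=2, passed=1}
    }
}
```

In this toy data the inactivity condition, not VIP status, blocks the most users. With real traces, the blocker would be read from the recorded node values rather than re-evaluated.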

---

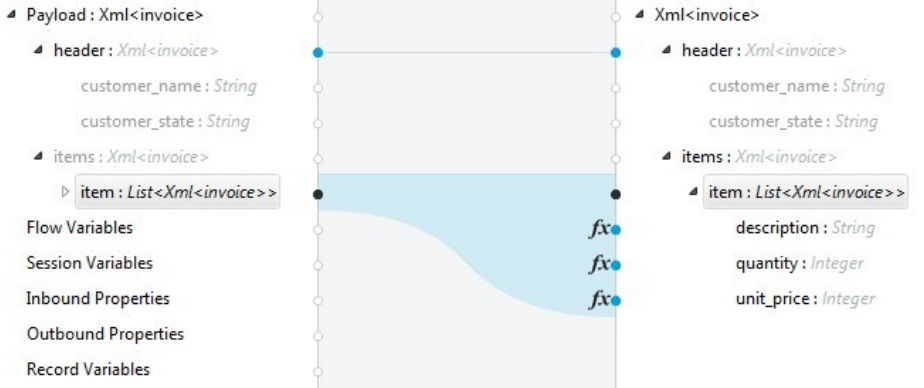

1.2 Model Dynamic Mapping — DingTalk

- Native JSON support makes data structures easy to define:
  - JSON array → `List`
  - JSON object → `Map`
  - Complex Java objects can be created inline

From DingTalk’s connection platform:

📄 Docs: Convenient Syntax Elements
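Because JSON arrays and objects become plain `List` and `Map` values, a model mapping can be expressed as data: one source path per target field. The Java sketch below is a hypothetical illustration of that idea, not DingTalk's implementation or QLExpress4's API; the `resolve` helper, the `user.tags[0]` path syntax, and the field names are all assumptions.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical sketch of config-driven model mapping over List/Map data.
public class MappingSketch {
    // Resolves a dotted path such as "user.tags[1]" against nested Maps and Lists,
    // the structures JSON objects and arrays map to. Returns null if a step is missing.
    static Object resolve(Object root, String path) {
        Object cur = root;
        for (String part : path.split("\\.")) {
            String key = part;
            Integer index = null;
            int bracket = part.indexOf('[');
            if (bracket >= 0) {
                key = part.substring(0, bracket);
                index = Integer.parseInt(part.substring(bracket + 1, part.length() - 1));
            }
            if (!(cur instanceof Map<?, ?> m)) {
                return null;
            }
            cur = m.get(key);
            if (index != null) {
                if (!(cur instanceof List<?> l) || index >= l.size()) {
                    return null;
                }
                cur = l.get(index);
            }
        }
        return cur;
    }

    // Builds a target model by resolving one source path per target field.
    static Map<String, Object> map(Map<String, String> mapping, Object source) {
        Map<String, Object> target = new LinkedHashMap<>();
        mapping.forEach((field, path) -> target.put(field, resolve(source, path)));
        return target;
    }

    public static void main(String[] args) {
        Map<String, Object> source = Map.of(
            "user", Map.of("name", "Li", "tags", List.of("vip", "new")));
        Map<String, String> mapping = new LinkedHashMap<>();
        mapping.put("userName", "user.name");
        mapping.put("firstTag", "user.tags[0]");
        System.out.println(map(mapping, source)); // {userName=Li, firstTag=vip}
    }
}
```

In a rule-engine setting each mapping entry would more likely be a script expression than a fixed path; the sketch only shows navigation over the resulting `List`/`Map` structures.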

---

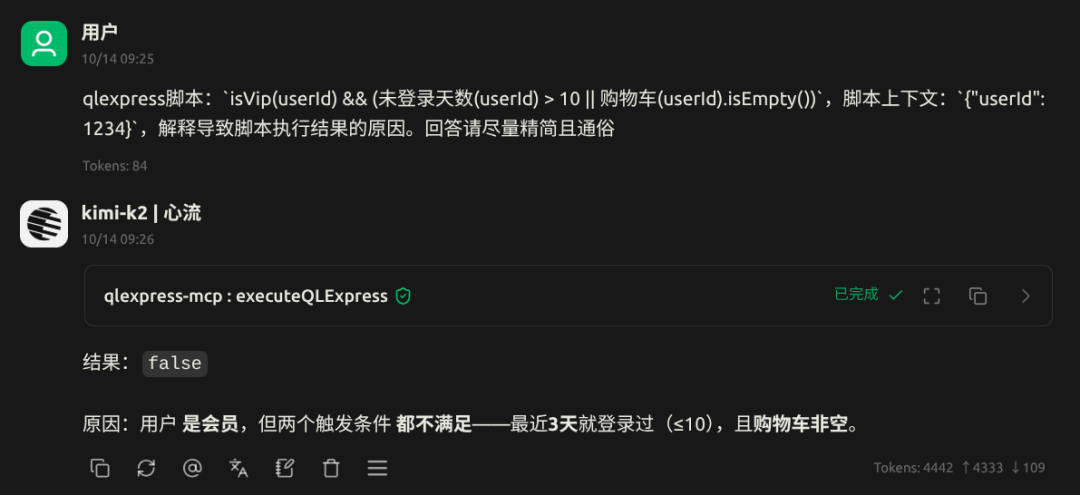

02 — AI-Friendly Enhancements

Expression Tracking for AI Debugging

QLExpress4 can return both the evaluation result and a trace tree recording the value of each node:

```
          || true
         /       \
   ! false      myTest true
     /             /    \
 true true      a 10     11
```

📄 Docs: Expression Evaluation Tracking

With the `qlexpress-mcp` integration, an AI model can explain a rule's outcome in plain language, for example:

> Coupon not triggered because the user logged in within the last 3 days and the cart was not empty.
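To make the shape of a trace tree concrete, here is a minimal Java sketch of a tracing evaluator. It is not QLExpress4's implementation or API: the `Trace` record, the tiny AST, and the definition of `myTest` as `x > 5` are assumptions chosen to reproduce most of the tree shown above. Each node returns its value together with the traces of the children it actually evaluated, so short-circuited branches are simply absent.

```java
import java.util.List;
import java.util.Map;
import java.util.function.Predicate;

// Hypothetical tracing evaluator; not QLExpress4's implementation.
public class TraceSketch {
    // One trace node: the sub-expression's label, the value it produced,
    // and the traces of the children that were actually evaluated.
    record Trace(String label, Object value, List<Trace> children) {}

    // A tiny AST in which every node returns a Trace instead of a bare value.
    interface Expr { Trace eval(Map<String, Object> ctx); }

    static Expr lit(Object v) {
        return ctx -> new Trace(String.valueOf(v), v, List.of());
    }

    static Expr variable(String name) {
        return ctx -> new Trace(name, ctx.get(name), List.of());
    }

    static Expr not(Expr e) {
        return ctx -> {
            Trace t = e.eval(ctx);
            return new Trace("!", !(Boolean) t.value(), List.of(t));
        };
    }

    // Short-circuit OR: the right operand only appears in the trace if it was evaluated.
    static Expr or(Expr left, Expr right) {
        return ctx -> {
            Trace l = left.eval(ctx);
            if ((Boolean) l.value()) {
                return new Trace("||", true, List.of(l));
            }
            Trace r = right.eval(ctx);
            return new Trace("||", r.value(), List.of(l, r));
        };
    }

    // A one-argument boolean function call, e.g. myTest(a).
    static Expr call(String name, Predicate<Object> fn, Expr arg) {
        return ctx -> {
            Trace a = arg.eval(ctx);
            return new Trace(name, fn.test(a.value()), List.of(a));
        };
    }

    // Renders "label value" lines indented by depth, e.g. for logs or an LLM prompt.
    static void render(Trace t, String indent, StringBuilder out) {
        out.append(indent).append(t.label()).append(' ').append(t.value()).append('\n');
        for (Trace child : t.children()) {
            render(child, indent + "  ", out);
        }
    }

    public static void main(String[] args) {
        // Hypothetical rule: !true || myTest(a), with myTest(x) assumed to mean x > 5.
        Expr rule = or(not(lit(true)), call("myTest", v -> (Integer) v > 5, variable("a")));
        StringBuilder out = new StringBuilder();
        render(rule.eval(Map.of("a", 10)), "", out);
        // Prints:
        // || true
        //   ! false
        //     true true
        //   myTest true
        //     a 10
        System.out.print(out);
    }
}
```

A flat, indented rendering like this is easy to log, or to pass to a language model as context when asking it to explain a result.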

---

Native JSON Examples

```
// List
list = [
  {"name": "Li", "age": 10},
  {"name": "Wang", "age": 15}
]
assert(list[0].age == 10)
```

```
// Complex Java object instantiation
myHome = {
  '@class': 'com.alibaba.qlexpress4.inport.MyHome',
  'sofa': 'a-sofa',
  'chair': 'b-chair',
  'myDesk': {
    'book1': 'The Moon and Sixpence',
    '@class': 'com.alibaba.qlexpress4.inport.MyDesk'
  }
}
assert(myHome.sofa == 'a-sofa')
```
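The `@class` key suggests reflection-based instantiation. The Java sketch below shows one hypothetical way such a map literal could be turned into an object; it is not how QLExpress4 implements the feature. It assumes the target classes have public no-argument constructors and that each remaining key names a public field (a real engine may also support setters), and it uses local stand-ins for `MyHome` and `MyDesk`.

```java
import java.lang.reflect.Field;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical sketch of "@class" map instantiation; not QLExpress4's implementation.
public class AtClassSketch {
    // Stand-ins for the MyHome/MyDesk test classes in the example (assumed public fields).
    public static class MyDesk { public String book1; }
    public static class MyHome { public String sofa; public String chair; public MyDesk myDesk; }

    // If the value is a map with a String "@class" entry, instantiate that class via its
    // no-arg constructor and copy every other entry into the public field of the same
    // name, materializing nested values recursively. Anything else is returned unchanged.
    static Object materialize(Object value) {
        if (!(value instanceof Map<?, ?> map) || !(map.get("@class") instanceof String className)) {
            return value;
        }
        try {
            Class<?> type = Class.forName(className);
            Object target = type.getDeclaredConstructor().newInstance();
            for (Map.Entry<?, ?> entry : map.entrySet()) {
                String key = String.valueOf(entry.getKey());
                if (key.equals("@class")) {
                    continue;
                }
                Field field = type.getField(key);
                field.set(target, materialize(entry.getValue()));
            }
            return target;
        } catch (ReflectiveOperationException e) {
            throw new IllegalArgumentException("cannot build " + className, e);
        }
    }

    public static void main(String[] args) {
        Map<String, Object> desk = new LinkedHashMap<>();
        desk.put("book1", "The Moon and Sixpence");
        desk.put("@class", MyDesk.class.getName());

        Map<String, Object> home = new LinkedHashMap<>();
        home.put("@class", MyHome.class.getName());
        home.put("sofa", "a-sofa");
        home.put("chair", "b-chair");
        home.put("myDesk", desk);

        MyHome myHome = (MyHome) materialize(home);
        System.out.println(myHome.sofa + " / " + myHome.myDesk.book1); // a-sofa / The Moon and Sixpence
    }
}
```

Maps without an `@class` entry are returned unchanged, which mirrors the plain JSON object → `Map` case.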

Takeaway: A JSON-native syntax helps AI-assisted code generation, because constrained decoding can keep the generated output syntactically valid while it stays human-readable.

---

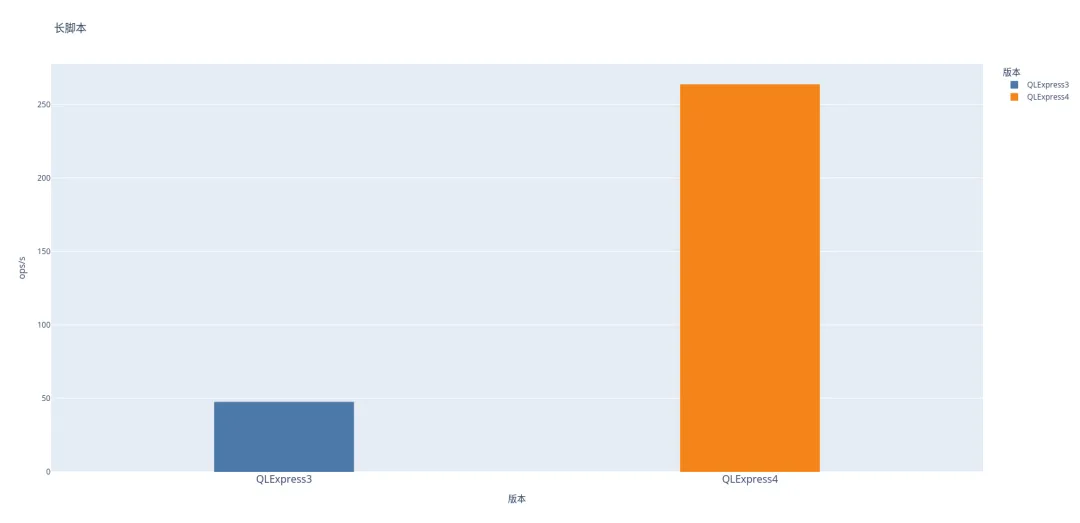

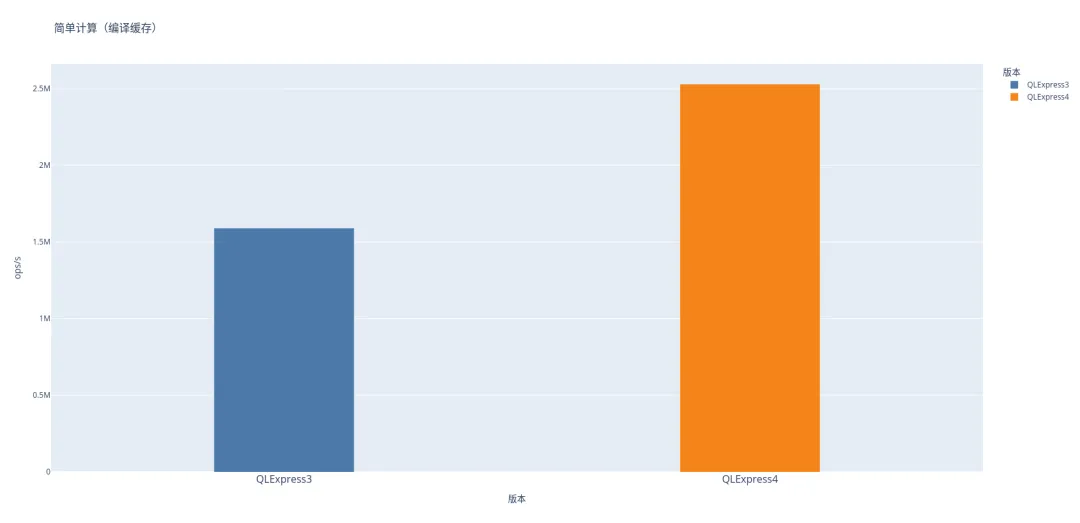

03 — Performance Optimization

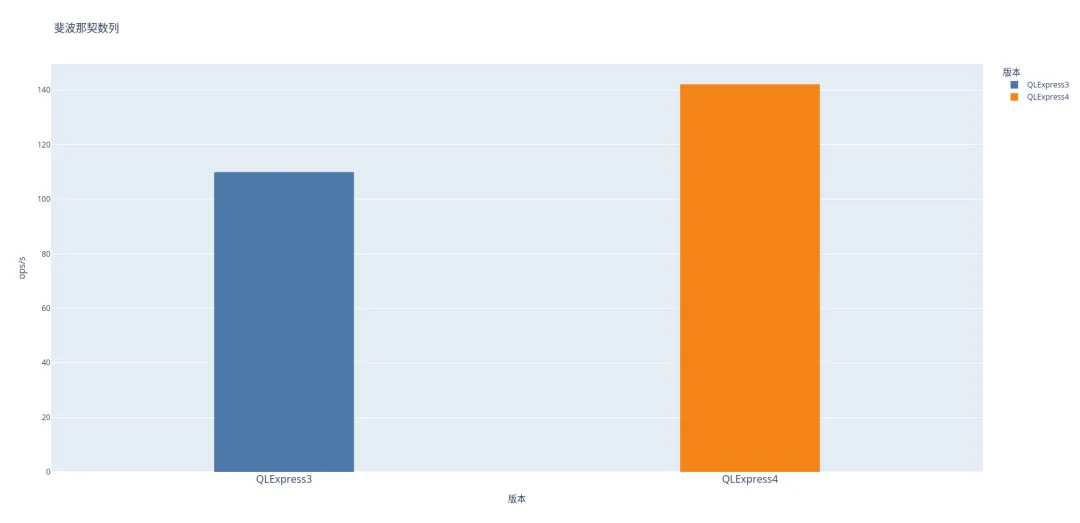

Benchmark Highlights:

- Without the compilation cache: ~10× faster than QLExpress3

- With the compilation cache: ~2× faster than QLExpress3

📄 Docs: Performance Test

| Scenario | Focus |
|----------|-------|
| Long scripts | Compilation |
| Simple calculation (cached) | Execution |
| Fibonacci | Recursion |
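The cached and uncached numbers differ because, with a cache, a script that has already been compiled is not compiled again. A common way to get that behavior is to cache compiled scripts keyed by their source text; the Java sketch below shows the pattern with `ConcurrentHashMap.computeIfAbsent`. The `Compiled` interface and the toy `a + b` compiler are hypothetical placeholders, not QLExpress4 classes.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Function;

// Hypothetical compile-cache sketch; not QLExpress4's cache implementation.
public class CompileCacheSketch {
    // Hypothetical compiled form of a script; a real engine would hold instructions here.
    interface Compiled { Object run(Map<String, Object> context); }

    private final Map<String, Compiled> cache = new ConcurrentHashMap<>();
    private final Function<String, Compiled> compiler;
    final AtomicInteger compilations = new AtomicInteger();

    CompileCacheSketch(Function<String, Compiled> compiler) {
        this.compiler = compiler;
    }

    // Compiles each distinct script text at most once; later calls only pay for execution.
    Object execute(String script, Map<String, Object> context) {
        Compiled compiled = cache.computeIfAbsent(script, source -> {
            compilations.incrementAndGet();
            return compiler.apply(source);
        });
        return compiled.run(context);
    }

    public static void main(String[] args) {
        // Toy "compiler" that only understands scripts of the form "<var> + <var>".
        CompileCacheSketch engine = new CompileCacheSketch(script -> {
            String[] operands = script.split("\\+");
            String left = operands[0].trim();
            String right = operands[1].trim();
            return context -> (Integer) context.get(left) + (Integer) context.get(right);
        });
        for (int i = 0; i < 3; i++) {
            System.out.println(engine.execute("a + b", Map.of("a", i, "b", 10))); // 10, 11, 12
        }
        System.out.println("compilations = " + engine.compilations.get()); // compilations = 1
    }
}
```

Only the first call pays for compilation, which is why cached runs mostly measure execution speed.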

---

Online production results:

| Scenario | Throughput (per second) | Success rate | RT |
|----------|-------------------------|--------------|----|
| Details | 4M | 99.9999% | < 40 μs |
| Order | 364k | 99.999% | < 100 μs |
| Transaction | 163k | not reported | not reported |

---

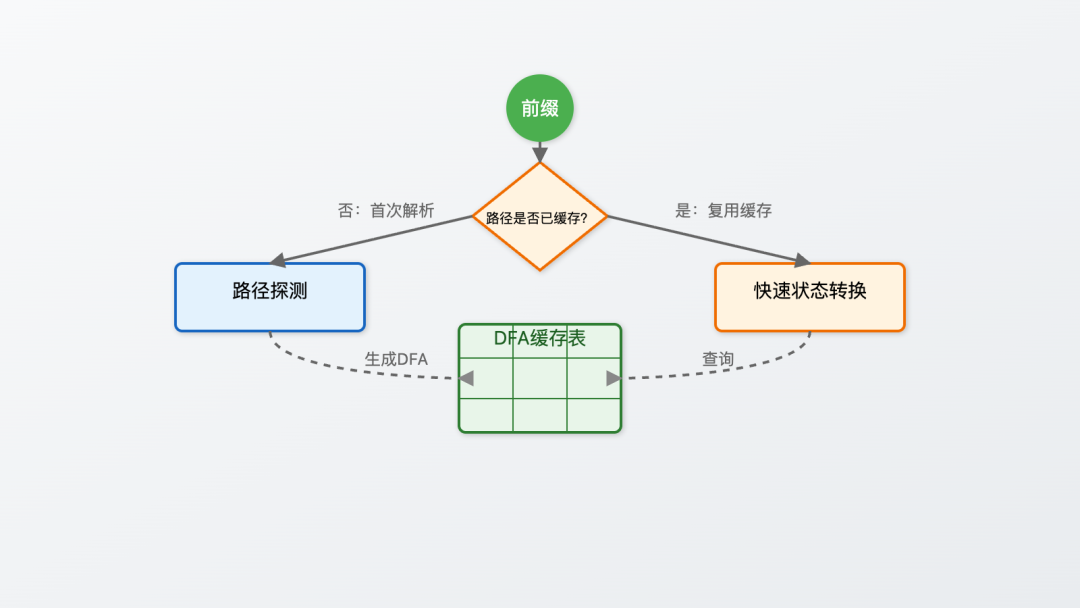

Compilation Improvements:

- Migrated to an ANTLR4-based parser for mature, DFA-optimized parsing

- Warmed up common parsing paths in advance to improve first-run performance

---

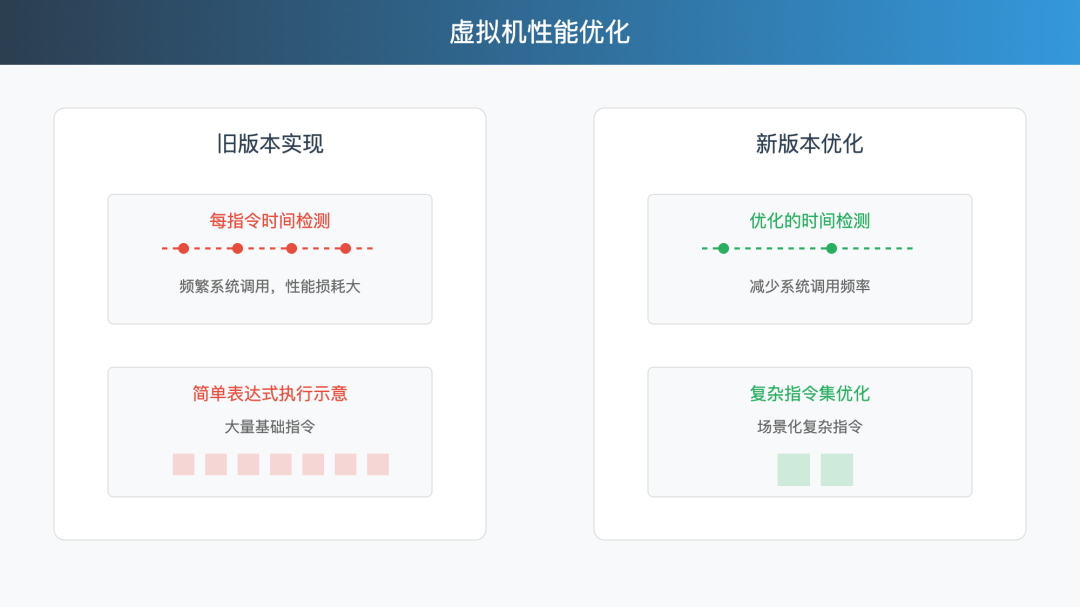

Execution Improvements:

- Fewer system-clock reads inside loops

- Fused common instruction sequences into compound instructions, reducing the total instruction count
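Both execution changes are easy to picture on a toy stack machine. The Java sketch below is hypothetical and does not reflect QLExpress4's real instruction set: `addVarConst` is a fused instruction that does the work of `loadVar`, `loadConst` and `add` in a single dispatch, and the loop reads `System.nanoTime()` only once every `CHECK_INTERVAL` iterations instead of on every pass. The timeout check and the interval of 1024 are assumptions added for illustration.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical toy VM; not QLExpress4's instruction set or interpreter.
public class VmSketch {
    interface Instr { void exec(Deque<Long> stack, Map<String, Long> vars); }

    static Instr loadVar(String name) { return (s, v) -> s.push(v.get(name)); }
    static Instr loadConst(long c) { return (s, v) -> s.push(c); }
    static Instr add() { return (s, v) -> s.push(s.pop() + s.pop()); }
    static Instr storeVar(String name) { return (s, v) -> v.put(name, s.pop()); }

    // Fused instruction: the work of loadVar(name), loadConst(c), add() in one dispatch.
    static Instr addVarConst(String name, long c) { return (s, v) -> s.push(v.get(name) + c); }

    // Read the clock once per CHECK_INTERVAL iterations instead of on every iteration.
    static final int CHECK_INTERVAL = 1024;

    // Runs a loop body `iterations` times and returns the final value of "x".
    static long runLoop(List<Instr> body, Map<String, Long> vars, int iterations, long timeoutNanos) {
        long deadline = System.nanoTime() + timeoutNanos;
        Deque<Long> stack = new ArrayDeque<>();
        for (int i = 1; i <= iterations; i++) {
            for (Instr instr : body) {
                instr.exec(stack, vars);
            }
            if (i % CHECK_INTERVAL == 0 && System.nanoTime() > deadline) {
                throw new IllegalStateException("script timed out after " + i + " iterations");
            }
        }
        return vars.get("x");
    }

    public static void main(String[] args) {
        List<Instr> plain = List.of(loadVar("x"), loadConst(1), add(), storeVar("x"));
        List<Instr> fused = List.of(addVarConst("x", 1), storeVar("x"));
        long timeout = 10_000_000_000L; // 10 seconds
        System.out.println(runLoop(plain, new HashMap<>(Map.of("x", 0L)), 100_000, timeout)); // 100000
        System.out.println(runLoop(fused, new HashMap<>(Map.of("x", 0L)), 100_000, timeout)); // 100000
    }
}
```

Both loops compute the same result, but the fused body dispatches two instructions per iteration instead of four, and the clock is read fewer than 100 times instead of 100,000.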

---

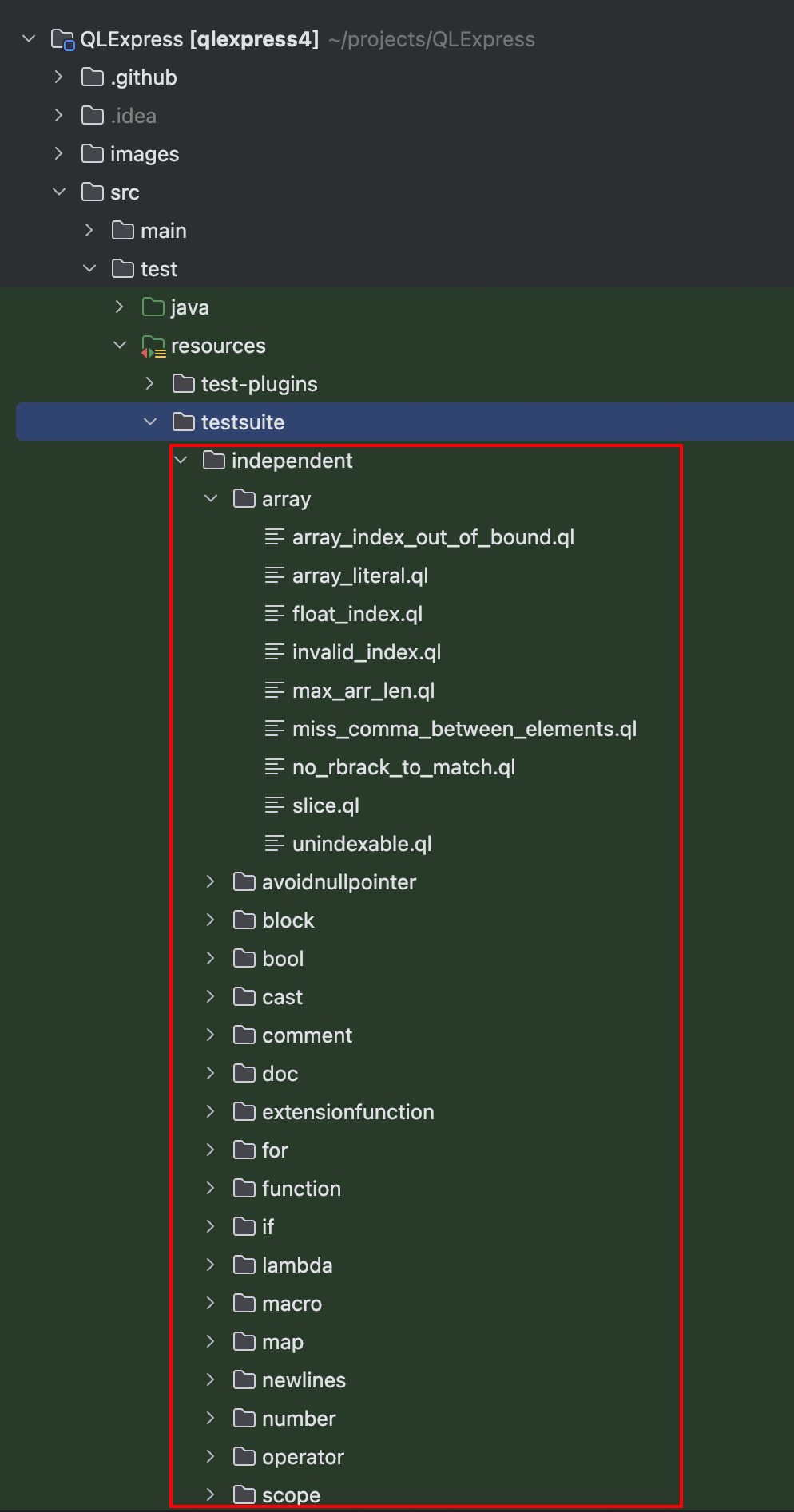

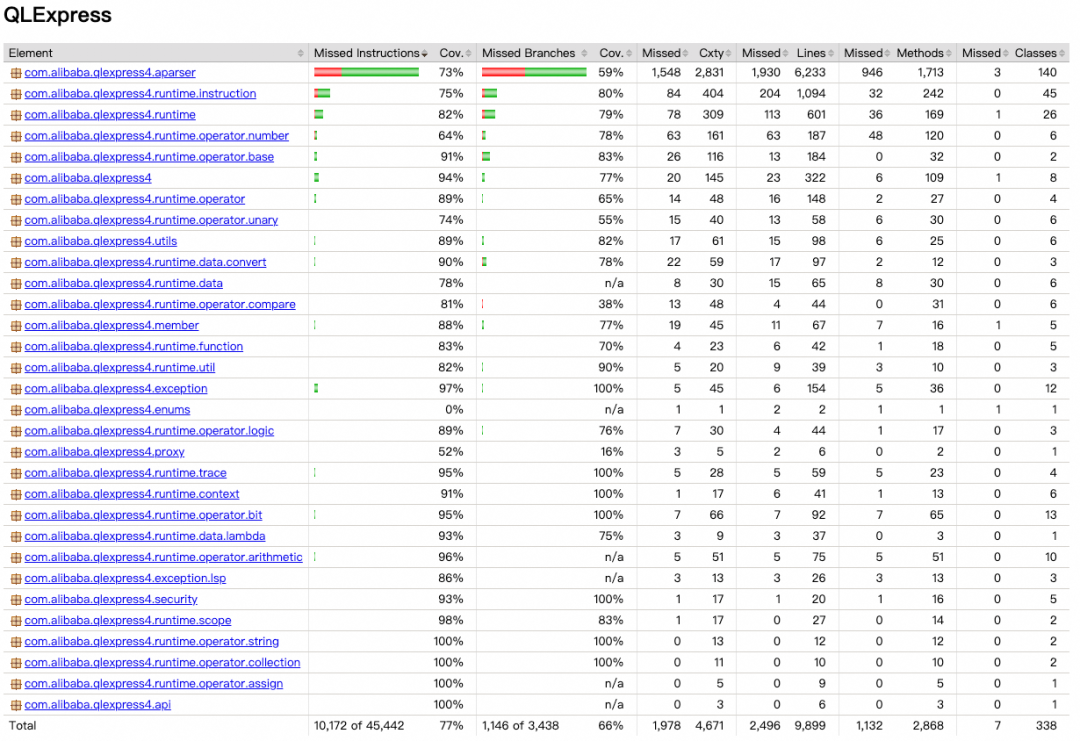

04 — Test Cases & Documentation Engineering

Unit Tests:

- Test scripts are stored in `src/test/resources/testsuite`
- 100% scenario coverage
- Code coverage: 77%
- Scripts are grouped by category; for example, the `array` category includes `array_index_out_of_bound.ql`
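A file-based suite like this is typically driven by a small discovery step. The Java sketch below groups every `.ql` file under a root directory by its parent directory, treating that as the category; the assumption that categories are subdirectories (`testsuite/array/...`) is ours, and this is not QLExpress4's actual test harness.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Hypothetical test-discovery sketch; not QLExpress4's test harness.
public class TestSuiteSketch {
    // Groups every *.ql file under root by the name of its parent directory (its
    // assumed "category"), e.g. testsuite/array/array_index_out_of_bound.ql -> "array".
    static Map<String, List<String>> discover(Path root) throws IOException {
        try (Stream<Path> paths = Files.walk(root)) {
            return paths
                .filter(p -> Files.isRegularFile(p) && p.getFileName().toString().endsWith(".ql"))
                .sorted()
                .collect(Collectors.groupingBy(
                    p -> p.getParent().getFileName().toString(),
                    TreeMap::new,
                    Collectors.mapping(p -> p.getFileName().toString(), Collectors.toList())));
        }
    }

    public static void main(String[] args) throws IOException {
        Path root = Files.createTempDirectory("testsuite");
        Path array = Files.createDirectories(root.resolve("array"));
        Files.writeString(array.resolve("array_index_out_of_bound.ql"), "a = [1, 2]\n");
        Files.writeString(array.resolve("README.txt"), "not a test\n");
        System.out.println(discover(root)); // {array=[array_index_out_of_bound.ql]}
    }
}
```

A real harness would then run each discovered script and check its expected outcome, including expected errors such as an out-of-bounds index.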

---

Unit Tests = Living Documentation

- We embed real test code snippets into AsciiDoc docs

- Ensures all examples are executable and accurate
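On the documentation side, an AsciiDoc `include::` directive with a `tag=` attribute pulls in only the lines between `tag::name[]` and `end::name[]` markers, like those in the example below. The Java sketch that follows is a simplified, hypothetical re-implementation of that extraction, handy for checking which lines a tag will publish. Asciidoctor itself also drops other tag marker lines inside the region and handles nested tags, which this sketch ignores.

```java
import java.util.ArrayList;
import java.util.List;

// Simplified, hypothetical tag extraction; not Asciidoctor's implementation.
public class TagExtractSketch {
    // Returns the lines strictly between "tag::<name>[]" and "end::<name>[]",
    // concatenating every tagged region if the tag appears more than once.
    static List<String> extract(List<String> lines, String name) {
        String start = "tag::" + name + "[]";
        String end = "end::" + name + "[]";
        List<String> out = new ArrayList<>();
        boolean inside = false;
        for (String line : lines) {
            if (line.contains(start)) {
                inside = true;
            } else if (line.contains(end)) {
                inside = false;
            } else if (inside) {
                out.add(line);
            }
        }
        return out;
    }

    public static void main(String[] args) {
        List<String> testSource = List.of(
            "@Test",
            "public void docQuickStart() {",
            "    // tag::firstQl[]",
            "    Object result = runScript(\"a + b * c\");",
            "    // end::firstQl[]",
            "}");
        // Prints only the single line between the firstQl markers.
        extract(testSource, "firstQl").forEach(System.out::println);
    }
}
```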

Example:

```java
// tag::firstQl[]
Express4Runner express4Runner = new Express4Runner(InitOptions.DEFAULT_OPTIONS);
Map<String, Object> context = new HashMap<>();
context.put("a", 1);
context.put("b", 2);
context.put("c", 3);
// Java-style precedence: a + (b * c) = 1 + 6 = 7
Object result = express4Runner.execute("a + b * c", context, QLOptions.DEFAULT_OPTIONS);
assertEquals(7, result);
// end::firstQl[]
```

---

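GitHub Rendering Workaround:

GitHub does not resolve AsciiDoc `include::` directives when rendering a README, so the tagged snippets cannot be pulled in directly. Instead, we:

- Preprocess `README-source.adoc` with GitHub Actions
- Use `asciidoctor-reducer` to inline all includes into the final `README.adoc`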

```yaml
name: Reduce Adoc

on:
  push:
    paths: [README-source.adoc]

jobs:
  build:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v3
      - run: sudo gem install asciidoctor-reducer
      - run: asciidoctor-reducer --preserve-conditionals -o README.adoc README-source.adoc
      - uses: EndBug/add-and-commit@v9
        with:
          add: README.adoc
```

---

Appendix

Repo: https://github.com/alibaba/QLExpress

DingTalk Group: `122730013264`

References:

- Expression Calculation Tracing
- Convenient Syntax Elements
- `qlexpress-mcp`
- Performance Test
- Asciidoctor
- GitHub AsciiDoc Rendering
- QLExpress reduce-adoc.yml

