Inspired by the Human Brain’s Hippocampus-Cortex Mechanism, Red Bear AI Rebuilt Its Memory System

Memory — The Key Breakthrough for AI to Evolve from “Instant Q&A Tool” to “Personalized Super Assistant”

Memory is emerging as the pivotal breakthrough that can transform AI from a mere instant answer tool into a truly personalized super assistant.

---

From Transformers to Nested Learning

Recently, Google Research published a paper titled “Nested Learning: The Illusion of Deep Learning Architectures.” Many see it as a “V2 successor” to the landmark paper “Attention Is All You Need.”

That 2017 paper introduced the Transformer architecture, igniting the large language model (LLM) revolution.

What makes Nested Learning a spiritual sequel is its paradigm-shifting proposal: a machine learning approach that allows LLMs to acquire new skills without forgetting the old — moving toward brain-like memory and continual evolution.

This signals a strategic shift in AI:

- The industry is slowing the “bigger and faster” race.

- Leading figures such as Ilya Sutskever have argued that the era of pure scaling is coming to an end.

- Focus is shifting toward memory capability and deeper user understanding.

---

Why Memory Is the Missing Piece

Over the past year, LLM apps have gone mainstream, spawning AI agents and “super assistants.” Yet none offer true personalization — most are still instant answer tools.

The key shortcoming: AI forgets too easily — lacking long-term memory.

Everyday Problems

- Opening a new chat window = starting from scratch.

- Multi-agent workflows lack persistent shared memory.

- Enterprises cannot build AI that learns continuously from experience.

All stem from fundamental defects in current LLM memory design.

---

Current Memory Limitations

- Context Window Limits

  - Many LLMs work with context windows of roughly 8k–32k tokens; larger windows exist but are still finite.

  - Long conversations push early information out of the window, so key details are lost.

  - Example: In round one, you say “I’m allergic to seafood.” By round five, it’s forgotten.

- Attention Decay (Recency Bias)

  - Transformers tend to weight recent input more heavily than earlier facts.

  - In practice, the architecture is biased toward the short term.
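To make the context-window problem concrete, here is a minimal sketch of how a fixed token budget can drop the seafood-allergy statement from the example above. The function name `fit_to_window`, the whitespace-based token count, and the 20-token budget are all illustrative assumptions; real systems use model-specific tokenizers and far larger budgets.

```python
from collections import deque


def fit_to_window(messages, max_tokens, count_tokens=lambda text: len(text.split())):
    """Keep the most recent messages whose combined token count fits the budget.

    count_tokens is a crude whitespace counter, used here only for illustration.
    """
    kept = deque()
    used = 0
    # Walk backwards from the newest message and stop once the budget is spent.
    for message in reversed(messages):
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break
        kept.appendleft(message)
        used += cost
    return list(kept)


conversation = [
    "I'm allergic to seafood.",
    "What should I cook for dinner tonight?",
    "Something quick, I only have thirty minutes.",
    "I also have guests coming over.",
    "Can you suggest a main course?",
]

# Hypothetical tiny budget so the effect is visible with five short messages.
window = fit_to_window(conversation, max_tokens=20)
print(window)
```

With this budget only the three most recent messages survive, so the model answering the final question never sees the allergy. This is the behavior that an external long-term memory is meant to compensate for.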

---

Fragmented Memory Across Agents

- Different agents maintain isolated memories.

- Switching agents feels like talking to a different AI.

- Users must repeat information.

---

Semantic Drift and Changing Preferences

- Ambiguous references, jargon, multi-language switching → misinterpretations.

- AI’s static knowledge base struggles to match evolving user needs.

---

The Race Toward Long-Term Memory

Research like Google’s Nested Learning points toward continuous learning without forgetting — essential for building AI that acts as a true personal assistant.

Memory advances will enable:

- Consistent conversations

- Continuous workflows

- Personalized recommendations

- Cross-agent cooperation

Platforms such as AiToEarn (official site) show how open-source ecosystems can leverage “remembering” AI for multi-platform publishing, analytics, and monetization.

---

Red Bear AI’s “Memory Bear” — Giving AI Human-like Memory

Red Bear AI’s move into memory was driven by real-world customer problems.

Founded in April 2024, the company initially built base-level AI platforms. In September, during an intelligent customer service project, the team hit a “knowledge forgetting” issue. Multiple attempted fixes (context optimization, external knowledge bases, hyperparameter tuning, long-term memory hacks) all failed.

CEO Wen Deliang realized: memory deficiency may be the core bottleneck stopping AI from evolving beyond instant answers.

---

Strategic Refocus: Multimodal + Memory Science

Red Bear AI switched to a multimodal + memory science path, launching Memory Bear after a year of R&D.

Memory Bear addresses long-term memory issues such as:

- Low accuracy

- High cost

- Frequent hallucinations

- High latency

---

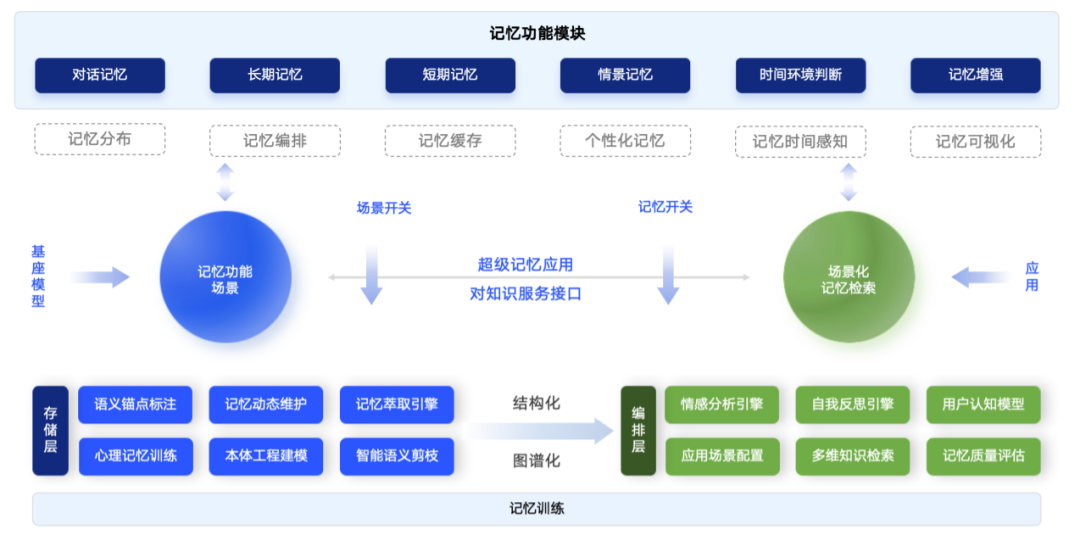

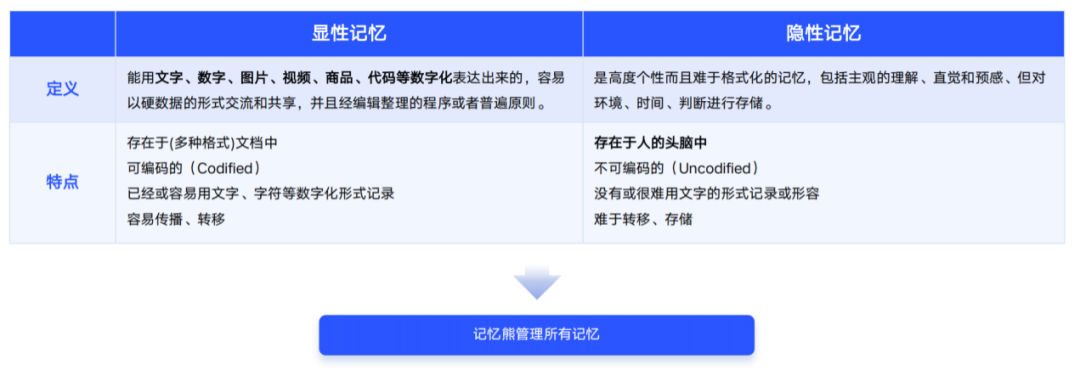

A Human-like Memory Architecture

Inspired by the brain’s hippocampus–cortex mechanism:

Human analogy:

- Hippocampus: temporary library & index builder

- Cortex: permanent distributed library for consolidation & association

Memory Bear mirrors this with:

Explicit Memory Layer

- Structured DB storage

- Episodic (conversation history)

- Semantic (domain-specific KB)

Implicit Memory Layer

- External, model-independent

- Behavioral habits, strategies, decision preferences

Emotional Weighting

- Prioritizes emotionally significant or recurring info

- Mimics human vivid memory in emotionally charged situations
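The layering described above can be sketched as a small data structure. This is only an illustrative toy under stated assumptions, not Memory Bear’s actual implementation: the class names `MemoryItem` and `MemoryStore`, the word-overlap relevance measure, and the weighting formula are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class MemoryItem:
    text: str
    kind: str             # "episodic" (conversation) or "semantic" (knowledge base)
    emotion: float = 0.0  # assumed scale: 0.0 neutral to 1.0 highly charged
    recurrences: int = 1  # how many times this item has been seen


@dataclass
class MemoryStore:
    explicit: list = field(default_factory=list)  # episodic + semantic items
    implicit: dict = field(default_factory=dict)  # habits, strategies, preferences

    def remember(self, text, kind, emotion=0.0):
        # Repeated information strengthens an existing item instead of duplicating it.
        for item in self.explicit:
            if item.text == text:
                item.recurrences += 1
                item.emotion = max(item.emotion, emotion)
                return item
        item = MemoryItem(text, kind, emotion)
        self.explicit.append(item)
        return item

    def recall(self, query, top_k=3):
        query_words = set(query.lower().split())

        def score(item):
            # Toy relevance: shared words, boosted by emotion and recurrence.
            overlap = len(query_words & set(item.text.lower().split()))
            weight = 1.0 + item.emotion + 0.1 * (item.recurrences - 1)
            return overlap * weight

        ranked = sorted(self.explicit, key=score, reverse=True)
        return [item for item in ranked if score(item) > 0][:top_k]


store = MemoryStore()
store.remember("user is allergic to seafood", "episodic", emotion=0.9)
store.remember("user asked about seafood restaurants", "episodic", emotion=0.1)
store.remember("refund policy allows returns within 30 days", "semantic")
store.implicit["tone"] = "concise"  # a behavioral preference, kept outside the model

results = store.recall("seafood dinner ideas for user")
print([item.text for item in results])
```

Both seafood items match the query equally on word overlap, but the emotionally weighted allergy statement ranks first, which is the prioritization the emotional-weighting idea describes. A production system would presumably use embeddings and a real database rather than word overlap and in-memory lists.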

---

Performance Highlights

- Token efficiency: improved by 97%

- Context drift: reduced by 82%

- Complex reasoning accuracy: 75.00 ± 0.20%

- LoCoMo benchmark: high scores across QA, reasoning, generalization, and long-sequence processing

- Latency: p50 search = 0.137 s, p95 overall = 1.232 s
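For readers unfamiliar with the p50 and p95 notation in the latency figures, the sketch below shows one common way to compute such percentiles from raw timings using Python’s standard library. The helper name `latency_percentiles` and the sample values are made up for illustration; they are not Memory Bear’s measurements, and the source does not say how its figures were computed.

```python
import statistics


def latency_percentiles(samples_s):
    """Return the 50th and 95th percentile of latency samples (in seconds)."""
    # n=100 yields 99 cut points; index 49 is the 50th percentile, index 94 the 95th.
    cuts = statistics.quantiles(samples_s, n=100, method="inclusive")
    return {"p50": cuts[49], "p95": cuts[94]}


# Hypothetical timings: mostly fast requests with a slow tail.
samples = [0.12, 0.13, 0.14, 0.13, 0.15, 0.12, 0.14, 0.16, 0.90, 1.30]
print(latency_percentiles(samples))
```

p50 is the median request, while p95 captures the slow tail that one in twenty requests exceeds, which is why the two numbers can differ by an order of magnitude.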

---

Real-world Implementation Scenarios

1. Intelligent AI Customer Service

- Dynamic memory maps for each user

- “Lifetime memory” of customers

- Cross-agent memory sharing

- Results:

  - Human-agent replacement rate: 70%

  - Self-service resolution rate: 98.4%

---

2. Marketing

- Interest-based memory maps

- Tracks user journey from click → repeat purchase

- Moves from “You might like” → “I remember what you like and know what you want now”

---

3. Enterprise Digital Transformation

- Unified organizational memory hub

- Breaks down departmental silos

- New-employee onboarding efficiency: +50%

---

4. AI Education

- Personalized teaching + emotion-weighted recommendations

- Tracks error history over months

- Delivers tailored instruction

---

Future Outlook

From Google’s technical research to Red Bear AI’s applied engineering, one consensus emerges: human-like AI memory is the missing link to AGI.

Memory isn’t just a feature; it’s a strategic necessity in AI evolution.

Platforms like AiToEarn (official site) integrate these capabilities into processes that help creators publish to Douyin, Kwai, WeChat, Bilibili, Xiaohongshu, Facebook, Instagram, LinkedIn, Threads, YouTube, Pinterest, and X (Twitter), while tracking AI model rankings.

---

Bottom line: Memory Bear doesn’t just remember — it remembers fast, accurately, and efficiently.
