Intelligent Agent Design Patterns: Parallel Execution and Performance Optimization [Translated]

![Intelligent Agent Design Patterns: Parallel Execution and Performance Optimization [Translated]](/content/images/size/w1200/2025/10/img_001-29.png)

Launch of the Agentic Design Patterns Chinese Translation Project

Just as Design Patterns served as a cornerstone for software engineering, Agentic Design Patterns—freely shared by a senior engineering director at Google—introduces the first systematic set of design principles for the fast-evolving field of AI agents.

Author: Antonio Gulli

Foreword: Saurabh Tiwary (Google Cloud AI VP)

Recommendation: Marco Argenti (CIO, Goldman Sachs)

The book presents 21 core agent design patterns that cover techniques from prompt chaining and tool usage to multi-agent collaboration and self-reflection.

Translation Plan:

Over the next month:

- AI Initial Translation

- AI Cross-Review

- Human In-Depth Optimization

Translations will be posted at: github.com/ginobefun/agentic-design-patterns-cn


---

Parallelization Pattern Overview

The parallelization pattern boosts efficiency and responsiveness by executing multiple independent tasks simultaneously. This transforms previously serial waits into concurrent execution—a keystone for optimizing complex AI agent workflows.

1. Core Concept: Sequential → Concurrent

Identify workflow segments that are independent and execute them in parallel.

- Sequential Execution Issues:

  - Each task waits for the previous one to finish, so in I/O-heavy workflows the total run time is the sum of all task durations.

- Parallel Execution Value:

  - Independent tasks start together and useful work continues during I/O waits, so the total run time approaches that of the slowest task.

  - Example: Query multiple data sources at once.
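To make the difference concrete, here is a minimal sketch using only the Python standard library. The `fetch` function, the source names, and the delays are hypothetical stand-ins for real data-source calls; `asyncio.sleep` simulates network latency.

```python
import asyncio
import time

# Hypothetical data sources and their simulated latencies in seconds.
SOURCES = {"news": 0.3, "stocks": 0.2, "social": 0.1}

async def fetch(source: str, delay: float) -> str:
    """Stand-in for a network call; asyncio.sleep simulates the I/O wait."""
    await asyncio.sleep(delay)
    return f"{source}: ok"

async def run_sequential() -> list[str]:
    # Each call waits for the previous one: total is roughly 0.3 + 0.2 + 0.1 s.
    return [await fetch(name, delay) for name, delay in SOURCES.items()]

async def run_concurrent() -> list[str]:
    # All calls start together: total is roughly max(0.3, 0.2, 0.1) s.
    return list(await asyncio.gather(*(fetch(n, d) for n, d in SOURCES.items())))

def timed(coro_fn):
    """Run an async function to completion and return (result, elapsed seconds)."""
    start = time.perf_counter()
    result = asyncio.run(coro_fn())
    return result, time.perf_counter() - start

if __name__ == "__main__":
    _, seq_time = timed(run_sequential)
    _, con_time = timed(run_concurrent)
    print(f"sequential: {seq_time:.2f}s, concurrent: {con_time:.2f}s")
```

Both versions return the same results in the same order; only the elapsed time differs.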

2. Typical Scenarios

Parallelization excels in:

- Information Gathering & Research — Pull news, stock data, social media, and corporate DBs at the same time.

- Data Processing & Analysis — Perform sentiment analysis, keyword extraction, and classification simultaneously.

- Multi-API or Tool Interaction — Plan trips by fetching flights, hotels, events, restaurants in parallel.

- Multi-Component Content Creation — Generate email subject, body, images, and CTA text together.

- Verification & Validation — Check formatting, numbers, addresses, and content appropriateness at once.

- Multimodal Processing — Analyze text and images in parallel.

- A/B Testing or Idea Generation — Create multiple content variants in parallel for quick comparison.

---

3. Framework Implementation

- LangChain / LCEL — `RunnableParallel` packages multiple components to run concurrently.

- LangGraph — Graph topology triggers independent nodes in parallel.

- Google ADK — `ParallelAgent` coordinates sub-agents, combined with `SequentialAgent` for "parallel first, then aggregate".

---

4. Concurrency vs. Parallelism

- Python asyncio: Provides concurrency rather than true parallelism. A single-threaded event loop switches between tasks while they wait on I/O, and CPU-bound Python code remains limited by the GIL.

- Best Use Case: I/O-intensive operations (API calls, network requests).

- Trade-Off: Added complexity in design, debugging, and logging.
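The single-threaded nature of asyncio can be observed directly. In the sketch below (standard library only; `io_task` and `blocking_task` are hypothetical names), two coroutines report the thread they run on, while a blocking call is handed to a worker thread with `asyncio.to_thread`, which is available from Python 3.9.

```python
import asyncio
import threading
import time

async def io_task(name: str) -> tuple[str, int]:
    # Awaiting hands control back to the event loop so other tasks can run.
    await asyncio.sleep(0.1)
    return name, threading.get_ident()

def blocking_task(name: str) -> tuple[str, int]:
    # time.sleep stands in for a blocking call that cannot be awaited.
    time.sleep(0.1)
    return name, threading.get_ident()

async def main() -> dict[str, int]:
    results = await asyncio.gather(
        io_task("a"),
        io_task("b"),
        # Offload the blocking call so it does not stall the event loop.
        asyncio.to_thread(blocking_task, "c"),
    )
    return dict(results)

if __name__ == "__main__":
    print(asyncio.run(main()))
```

Tasks `a` and `b` share the event loop's thread, while `c` runs on a separate worker thread. For CPU-bound work, a process pool is usually the better fit, since threads still contend for the GIL.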

---

5. Usage Conditions

Apply parallel patterns when:

- The workflow has subtasks that do not depend on each other's results.

- You must call multiple APIs/services for varied data.

- Various processing types are applied to the same input.

- Waiting for external resource responses dominates run time.

- You need multiple output versions for comparison/selection.
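The last condition, generating several versions for comparison, might look like the sketch below. `generate_variant` is a hypothetical placeholder for an LLM call, and the scoring rule (prefer the shortest text) is an arbitrary assumption chosen only to keep the example self-contained.

```python
import asyncio

async def generate_variant(prompt: str, style: str) -> str:
    """Hypothetical generator; a real agent would call an LLM here."""
    await asyncio.sleep(0.05)  # stands in for model latency
    return f"[{style}] {prompt}"

def score(text: str) -> int:
    # Placeholder selection rule (assumption): shorter text scores higher.
    return -len(text)

async def best_variant(prompt: str, styles: list[str]) -> str:
    # Generate all variants concurrently, then pick the highest-scoring one.
    variants = await asyncio.gather(*(generate_variant(prompt, s) for s in styles))
    return max(variants, key=score)

if __name__ == "__main__":
    styles = ["formal", "casual", "witty"]
    print(asyncio.run(best_variant("Launch announcement", styles)))
```

In practice the scoring step could be a human review, a heuristic, or another model call; the parallel part is only the generation.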

---

Framework Examples

LangChain Example

Using LCEL to run summarization, question generation, and keyword extraction in parallel:

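    from langchain_core.output_parsers import StrOutputParser
    from langchain_core.runnables import RunnableParallel, RunnablePassthrough

    # summarize_chain, questions_chain, terms_chain, synthesis_prompt, and llm
    # are assumed to be defined elsewhere (see the complete example).

    # RunnableParallel groups tasks for concurrent execution
    map_chain = RunnableParallel({
        "summary": summarize_chain,
        "questions": questions_chain,
        "key_terms": terms_chain,
        "topic": RunnablePassthrough(),
    })

    # The parallel results feed the synthesis prompt, then the LLM
    full_parallel_chain = map_chain | synthesis_prompt | llm | StrOutputParser()

Complete example code is available in Colab and GitHub.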
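For readers without LangChain installed, the same fan-out-then-synthesize shape can be approximated in plain Python. This is only an analogy under stated assumptions, not how LangChain works internally: the branch functions are hypothetical stubs for LLM chains, and `synthesize` stands in for the synthesis prompt and model call.

```python
import asyncio

async def summarize(topic: str) -> str:
    await asyncio.sleep(0.05)  # stands in for an LLM call
    return f"summary of {topic}"

async def questions(topic: str) -> str:
    await asyncio.sleep(0.05)
    return f"questions about {topic}"

async def key_terms(topic: str) -> str:
    await asyncio.sleep(0.05)
    return f"terms in {topic}"

async def passthrough(topic: str) -> str:
    return topic  # plays the role of RunnablePassthrough

# Each key maps to one branch; every branch receives the same input.
BRANCHES = {
    "summary": summarize,
    "questions": questions,
    "key_terms": key_terms,
    "topic": passthrough,
}

async def run_parallel_map(topic: str) -> dict[str, str]:
    # Start all branches together and collect the results under their keys.
    values = await asyncio.gather(*(fn(topic) for fn in BRANCHES.values()))
    return dict(zip(BRANCHES.keys(), values))

def synthesize(parts: dict[str, str]) -> str:
    # Stand-in for the synthesis prompt and model call.
    return " | ".join(f"{key}={value}" for key, value in parts.items())

if __name__ == "__main__":
    parts = asyncio.run(run_parallel_map("space exploration"))
    print(synthesize(parts))
```

The dictionary returned by `run_parallel_map` corresponds to the keyed output that the synthesis step consumes.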

---

Google ADK Example

Three sub-agents researching renewable energy, EV technology, and carbon capture run in parallel under a `ParallelAgent`; a `SequentialAgent` then passes their results to a merger agent (`merger_agent`) for synthesis.

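    from google.adk.agents import ParallelAgent, SequentialAgent

    # researcher_agent_1..3 and merger_agent are assumed to be defined elsewhere;
    # ADK agents require a name, and the names used here are illustrative.

    # Run the three researchers concurrently
    parallel_research_agent = ParallelAgent(
        name="ParallelWebResearchAgent",
        sub_agents=[researcher_agent_1, researcher_agent_2, researcher_agent_3],
    )

    # Run the parallel stage first, then the merger
    sequential_pipeline_agent = SequentialAgent(
        name="ResearchAndSynthesisPipeline",
        sub_agents=[parallel_research_agent, merger_agent],
    )

    root_agent = sequential_pipeline_agent

---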

Quick Overview

Problem: Sequential execution slows multi-task workflows, especially with external I/O calls.

Solution: Identify independent tasks and execute concurrently to reduce run time.

Rule: Use parallelization for truly independent tasks (e.g., multiple API calls, batch data processing, multi-output generation).

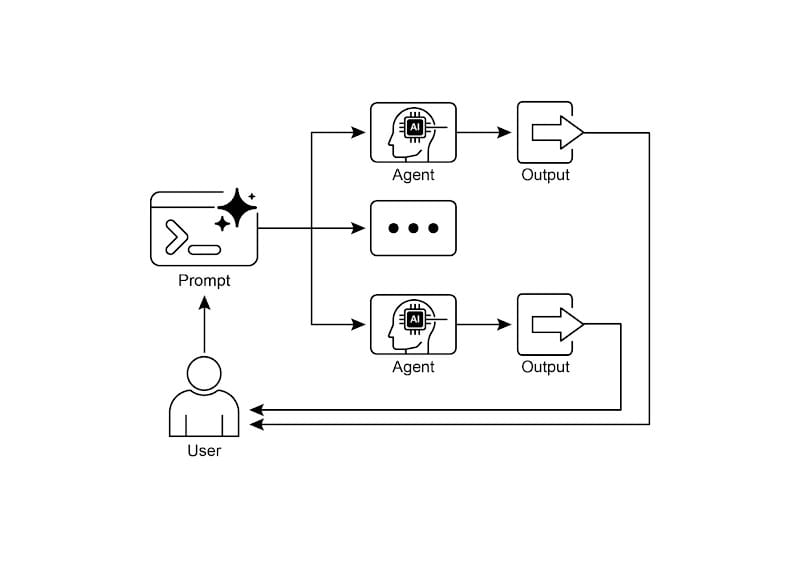

Visual Summary:

Figure 2: Parallelization Pattern

---

Key Takeaways

- Parallelization Pattern → Run independent tasks at the same time to improve efficiency.

- Ideal for workflows with external waits.

- Increases architectural complexity, which makes design, debugging, and logging harder.

- Supported by LangChain (`RunnableParallel`) and Google ADK (`ParallelAgent`).

---

Conclusion

Parallel execution patterns are a cornerstone for efficient AI agent systems.

Framework Mechanisms:

- LangChain: explicit `RunnableParallel` definitions that run components concurrently.

- Google ADK: `ParallelAgent` workflows and LLM-driven sub-agent delegation.

Combining parallel, sequential, and conditional patterns enables robust, high-performance workflows.

---

Bonus: Multi-Platform Publishing with AiToEarn

For creators applying these workflows to content production, AiToEarn offers:

- AI content generation tools

- Parallel multi-platform publishing (Douyin, Kwai, WeChat, Bilibili, Xiaohongshu, Facebook, Instagram, LinkedIn, Threads, YouTube, Pinterest, X/Twitter)

- Integrated analytics and AI model rankings

This mirrors the efficiency of parallel agent workflows—helping creators maximize both reach and monetization.

---