# **New Intelligence Source Report**

---

## **Editor's Note**

Cursor has unveiled its self-developed coding model, **Composer**, and also **overhauled the IDE's interaction logic**, enabling **up to 8 concurrent AI agents**.

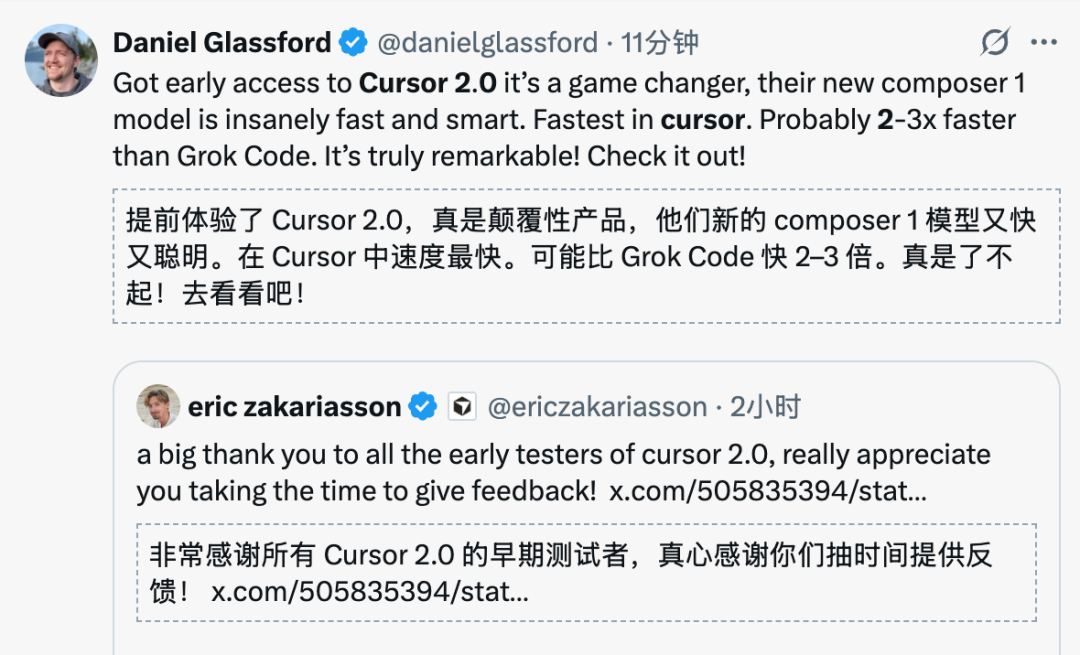

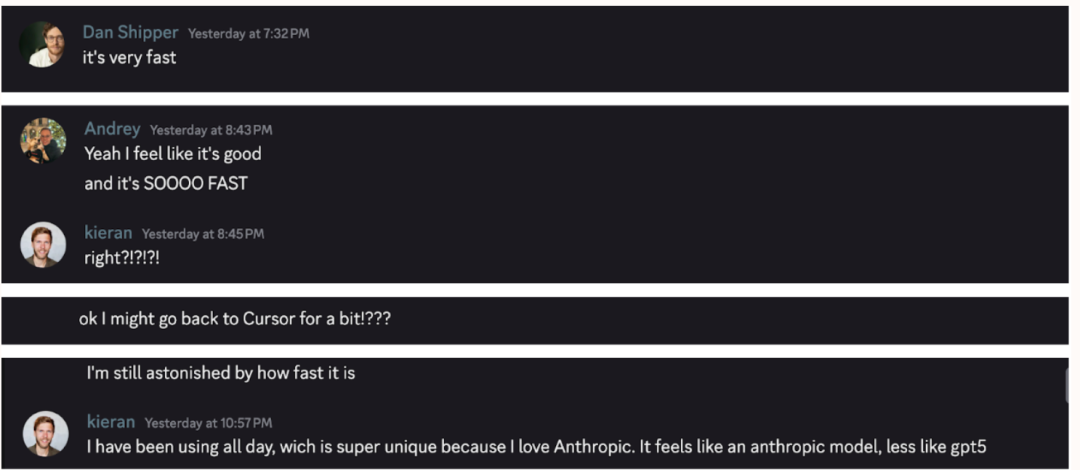

Early testing and developer feedback agree: **Cursor 2.0 is blazingly fast**.

---

## **Cursor 2.0 — A Major Leap Forward**

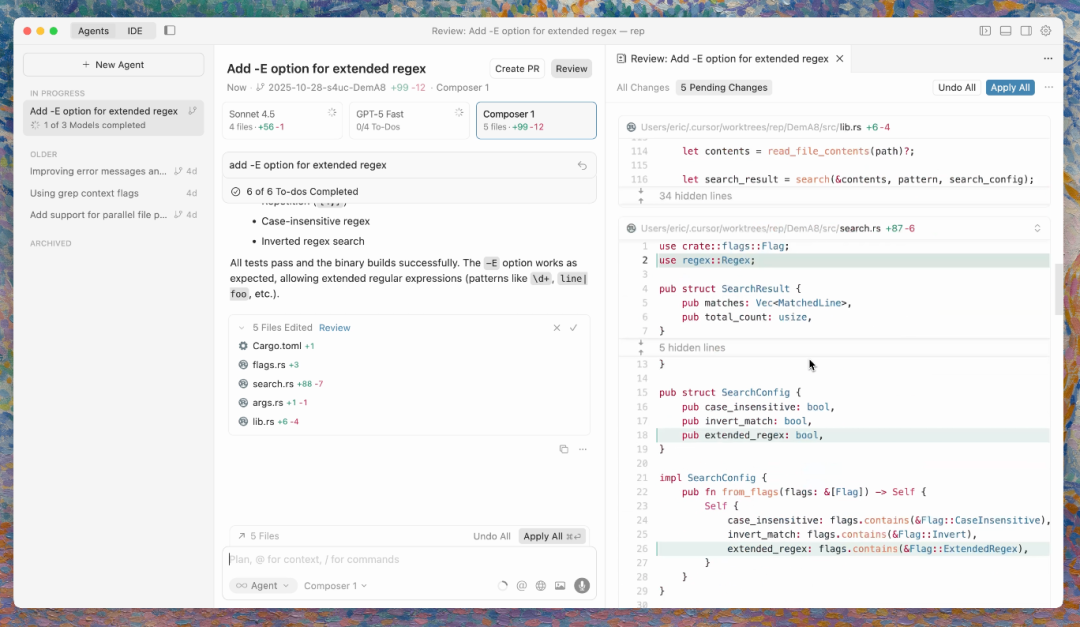

Cursor has released **version 2.0** with:

- **First proprietary coding model:** **Composer**

- **4× faster** than similarly intelligent models

- **Low latency:** most turns finish in under **30 seconds**

- **200 tokens/sec** generation
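As a rough back-of-envelope check, the two speed figures above can be combined. The sketch below assumes generation is the only cost (no tool calls, file reads, or prompt processing), which real agent turns will not satisfy; the function names are made up for illustration.

```python
TOKENS_PER_SECOND = 200    # generation speed quoted in the article
TURN_BUDGET_SECONDS = 30   # the 30-second figure quoted in the article


def max_tokens(seconds: float, rate: float = TOKENS_PER_SECOND) -> float:
    """Upper bound on tokens generated in `seconds` at a constant rate."""
    return seconds * rate


def seconds_for(tokens: float, rate: float = TOKENS_PER_SECOND) -> float:
    """Time needed to generate `tokens` at a constant rate."""
    return tokens / rate


print(max_tokens(TURN_BUDGET_SECONDS))  # 6000 tokens at most in 30 s
print(seconds_for(2000))                # 10.0 s for a 2,000-token answer
```

Under these simplifying assumptions, a 30-second turn leaves room for at most about 6,000 generated tokens.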

### **Multi-Agent Mode**

- Supports **up to 8 agents in parallel**

- Uses **git worktrees** or **remote machines** to avoid file conflicts
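To illustrate why git worktrees avoid file conflicts, here is a minimal sketch that prepares one worktree (a separate checkout on its own branch) per agent. The branch names, directory layout, and helper functions are hypothetical; the article does not describe how Cursor arranges its worktrees internally. Only the standard `git worktree add -b <branch> <path> <commit-ish>` command is assumed.

```python
import subprocess
from pathlib import Path

MAX_AGENTS = 8  # parallel-agent limit reported in the article


def worktree_commands(repo: Path, n_agents: int, base: str = "HEAD") -> list[list[str]]:
    """Build one `git worktree add` command per agent (hypothetical layout)."""
    if not 1 <= n_agents <= MAX_AGENTS:
        raise ValueError(f"n_agents must be between 1 and {MAX_AGENTS}")
    commands = []
    for i in range(1, n_agents + 1):
        branch = f"agent-{i}"                           # hypothetical branch name
        path = repo.parent / f"{repo.name}-agent-{i}"   # sibling directory per agent
        commands.append(
            ["git", "-C", str(repo), "worktree", "add", "-b", branch, str(path), base]
        )
    return commands


def create_worktrees(repo: Path, n_agents: int) -> None:
    """Run the commands; each agent then edits files only in its own checkout."""
    for cmd in worktree_commands(repo, n_agents):
        subprocess.run(cmd, check=True)
```

Because every agent works in its own directory and branch, two agents can modify the same file without overwriting each other; the results are reconciled later through ordinary merges.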

---

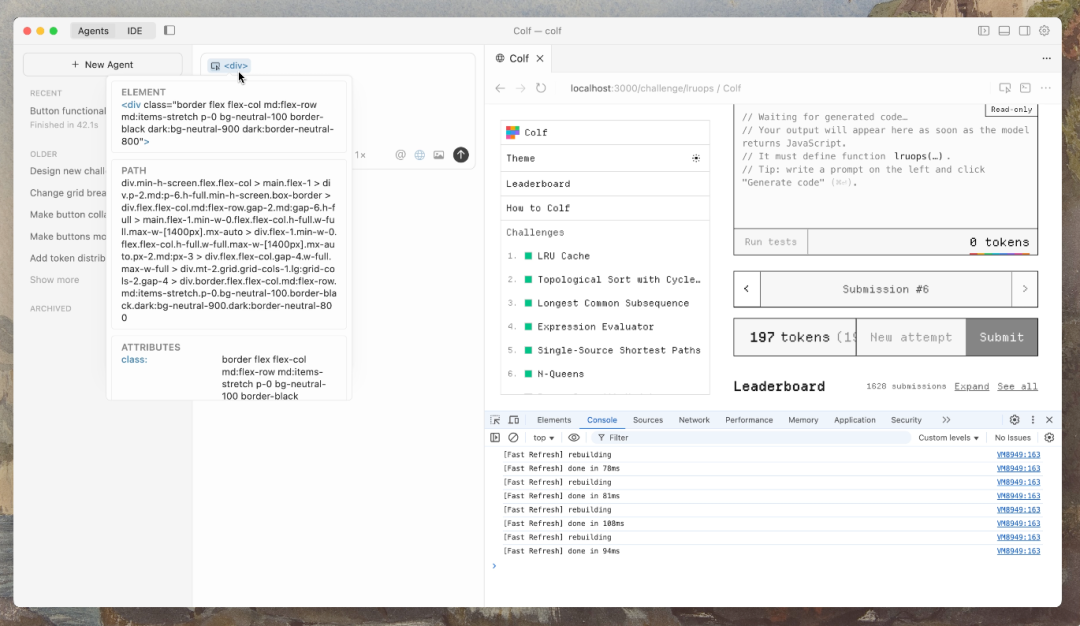

## **Embedded Browser Integration**

This update embeds a **browser directly inside the editor**, especially valuable for frontend developers.

**Benefits:**

1. Select DOM elements visually

2. Instantly pass selections to Cursor

3. Auto-highlight corresponding source code

---

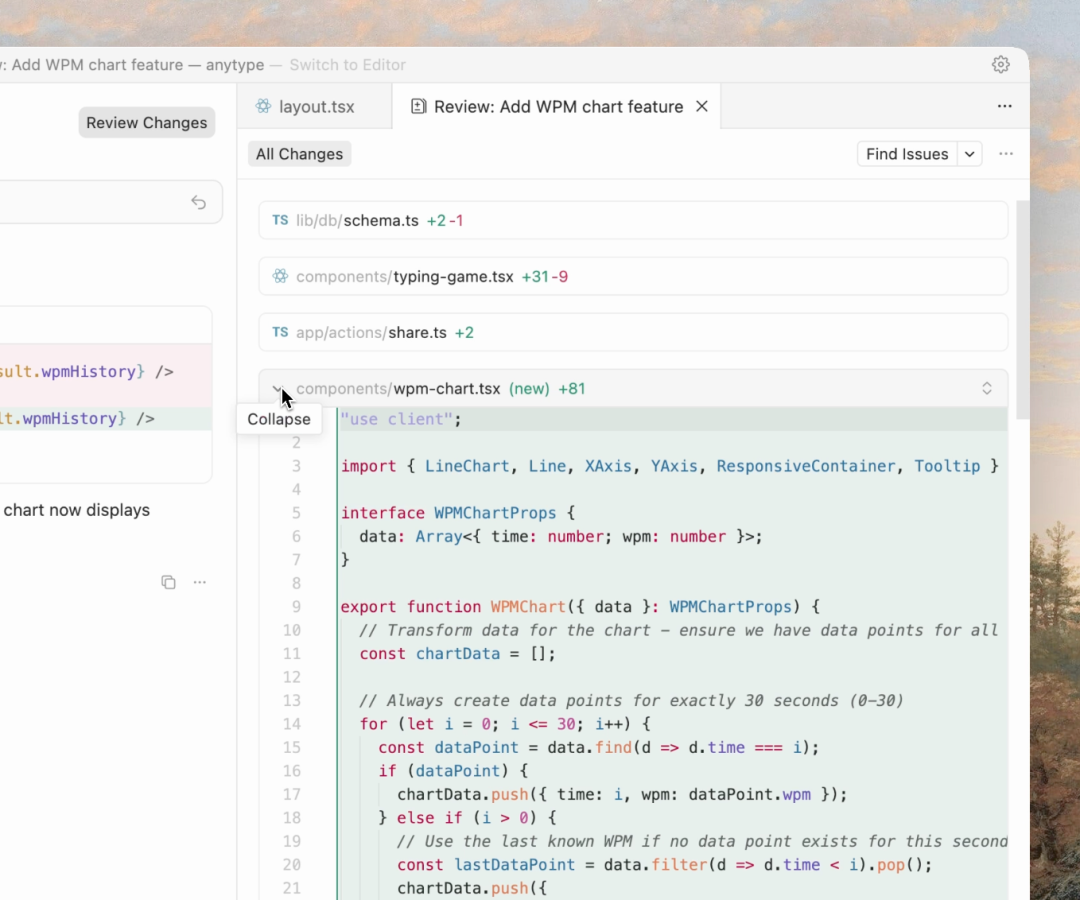

## **Cross-File Code Review**

New **cross-file review** lets you see all AI-generated changes without switching between files repeatedly.

---

## **Voice Mode — "Speech to Code"**

Cursor now supports **Voice Mode**, allowing natural speech-to-code workflows.

**Other improvements:**

- Prompts now auto-incorporate relevant context

- Many explicit context items (`@Definitions`, `@Web`, `@Link`, `@Recent Changes`, `@Linter Errors`) have been removed from the context menu

- Agents auto-gather context without manual intervention

---

## **From Wrapper to Proprietary Model**

Cursor has historically wrapped external models such as Claude, paying substantial fees to the model providers.

**Composer changes this:**

- Proprietary AI model = better margins

- Full control over stack

> “You can’t have a $10 billion valuation and still just be a wrapper app.”

---

## **Recognition at GTC 2025**

NVIDIA CEO Jensen Huang praised Cursor:

> “At NVIDIA, every software engineer uses Cursor.
> It’s like having a programming partner that generates code and massively boosts productivity.”

---


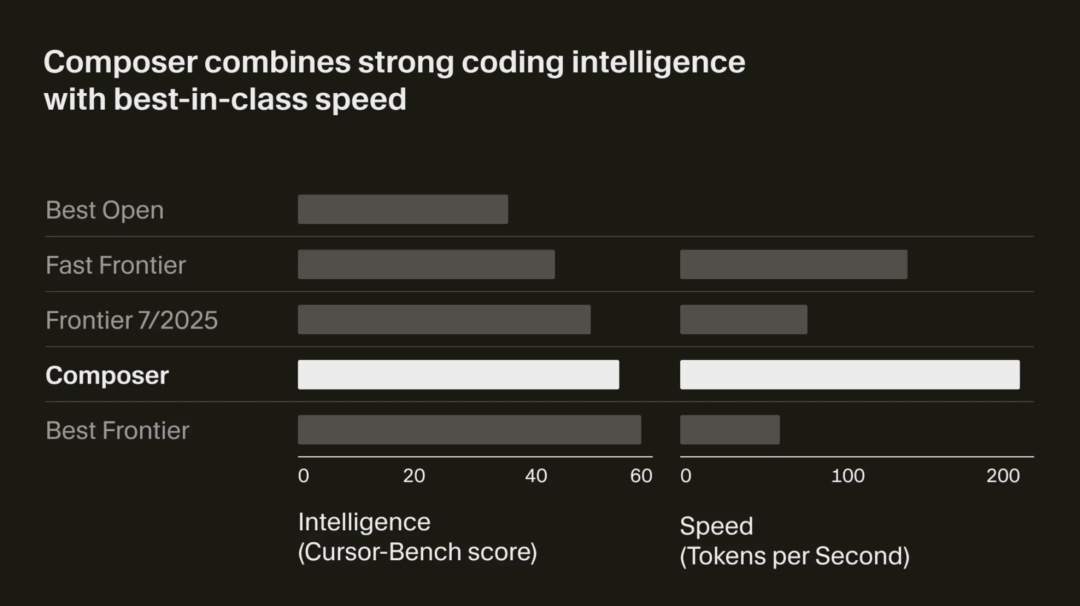

## **Composer — Built for Speed and Intelligence**

### **Origins**

- Grew out of an earlier prototype agent model, codenamed **Cheetah**

- Goal: combine **intelligence with interactivity**

### **Core Design**

- **Mixture of Experts (MoE)** architecture

- Long-context support

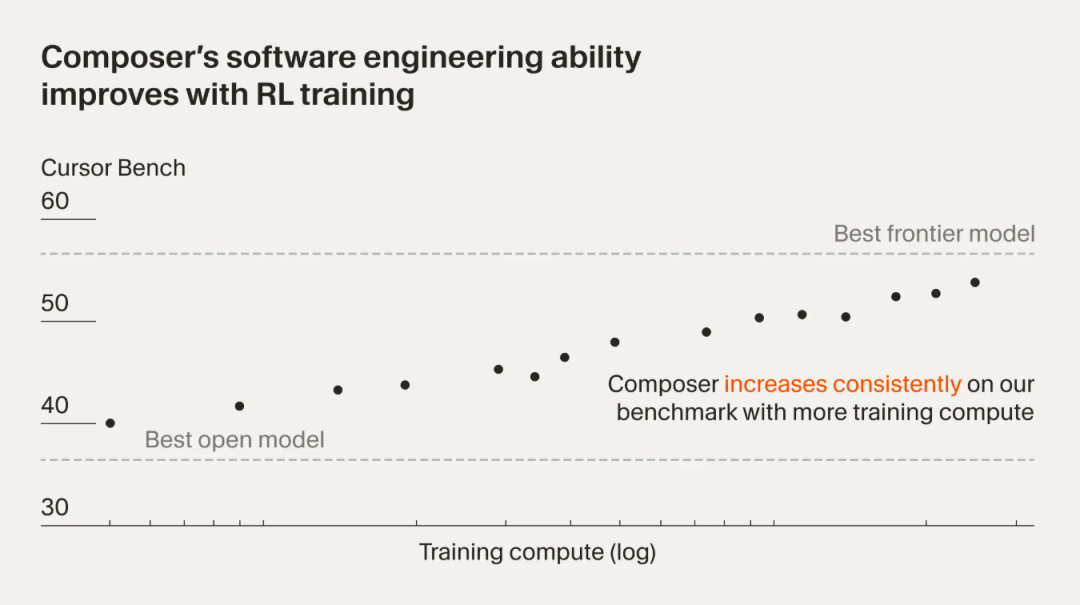

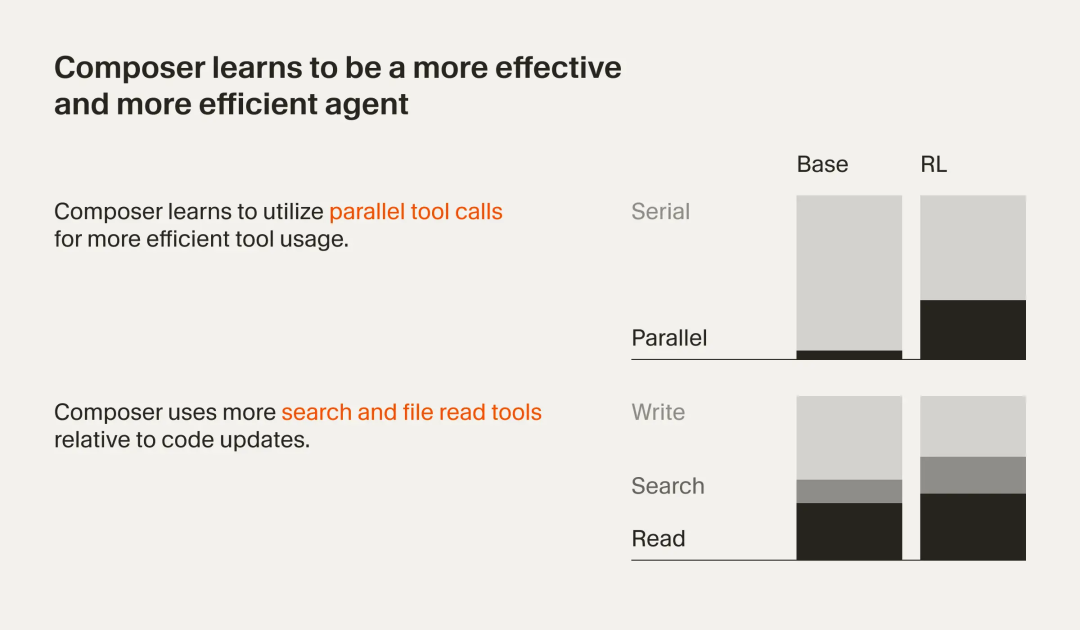

- **Reinforcement Learning (RL)** in real dev environments
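For readers unfamiliar with Mixture of Experts, the following is a generic sketch of top-k expert routing, the idea usually meant by the term. It is not Composer's architecture: the article does not disclose the number of experts, the value of k, or the gating function, so every name and number here is an illustrative assumption.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, gate_weights, experts, top_k=2):
    """Route input vector x to the top_k experts and mix their outputs."""
    # Router: one logit per expert (dot product of x with that expert's gate row).
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    # Keep only the top_k experts; the others are never evaluated (sparse activation).
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:top_k]
    weights = softmax([logits[i] for i in chosen])
    out = [0.0] * len(x)
    for w, i in zip(weights, chosen):
        y = experts[i](x)
        out = [o + w * yi for o, yi in zip(out, y)]
    return out, chosen


# Toy setup: four "experts" that simply scale the input by 1, 2, 3, and 4.
experts = [lambda v, s=s: [s * vi for vi in v] for s in (1.0, 2.0, 3.0, 4.0)]
gate_weights = [[0.0, 0.0], [1.0, 0.0], [2.0, 0.0], [-1.0, 0.0]]

out, chosen = moe_forward([1.0, 0.0], gate_weights, experts)
print(chosen)  # [2, 1]: only two of the four experts run for this input
```

The appeal of this design is that compute per token depends on `top_k`, not on the total number of experts, which is one common way to pair a large model with fast generation.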

---

## **Why RL Matters**

**RL advantages:**

- Specializes the model for software engineering

- Rewards efficient tool use

- Encourages parallelism wherever possible

- Discourages unnecessary responses

- Lets the model pick up practical skills such as fixing **linter errors**, writing and running **unit tests**, and performing **complex semantic searches**

---

## **Training Infrastructure**

- Built on **PyTorch** + **Ray**

- Supports **asynchronous RL** at scale

- **MXFP8 MoE kernels** + expert parallelism + hybrid-sharded data parallelism

- Trains on thousands of NVIDIA GPUs with low communication overhead

- Native low-precision training, which enables faster inference without post-training quantization
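To give a feel for what "MXFP8" refers to, here is a simplified sketch of microscaling-style quantization. It assumes the usual MX convention as I understand it: a block of values (32 in the MX formats) shares one power-of-two scale, and each element is stored as FP8 E4M3. This is a plain-Python illustration of the number format only, not Cursor's kernels, which the article does not describe at this level.

```python
import math

E4M3_MAX = 448.0    # largest finite FP8 E4M3 value
E4M3_EMAX = 8       # exponent of that value (448 = 1.75 * 2**8)
E4M3_MIN_EXP = -6   # smallest normal exponent
BLOCK_SIZE = 32     # elements per shared scale in MX formats (assumed here)


def floor_log2(a: float) -> int:
    """floor(log2(a)) for a > 0, computed exactly via frexp."""
    _, exp = math.frexp(a)  # a = m * 2**exp with 0.5 <= m < 1
    return exp - 1


def round_to_e4m3(v: float) -> float:
    """Round v to the nearest FP8 E4M3 value, saturating at +/-448."""
    if v == 0.0:
        return 0.0
    a = min(abs(v), E4M3_MAX)
    e = max(floor_log2(a), E4M3_MIN_EXP)  # subnormals share the minimum exponent
    step = 2.0 ** (e - 3)                 # 3 mantissa bits: 8 steps per power of two
    q = min(round(a / step) * step, E4M3_MAX)
    return math.copysign(q, v)


def quantize_block(block):
    """Return (shared power-of-two scale, FP8-rounded elements) for one block."""
    amax = max(abs(v) for v in block)
    if amax == 0.0:
        return 1.0, [0.0] * len(block)
    scale = 2.0 ** (floor_log2(amax) - E4M3_EMAX)
    return scale, [round_to_e4m3(v / scale) for v in block]


def dequantize_block(scale, elements):
    """Recover approximate original values from a quantized block."""
    return [scale * q for q in elements]


# A short block for readability; a real MX block would hold BLOCK_SIZE values.
scale, q = quantize_block([1.0, -3.0, 0.5, 100.0])
print(scale, dequantize_block(scale, q))  # 0.25 [1.0, -3.0, 0.5, 96.0]
```

In this toy block, 100.0 comes back as 96.0 because only three mantissa bits are available. Training directly in such a format means the weights have already adapted to that rounding, which is consistent with the article's point about skipping post-training quantization.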

---

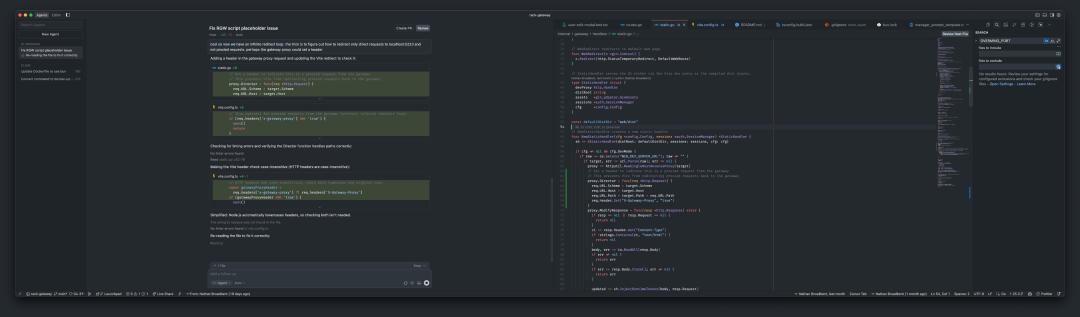

## **Hands-On Testing**

Observed:

- Ultra-fast responses, some in roughly **10 seconds**

- Strength in **frontend UI generation**

- Minor accuracy issues in complex logic

---

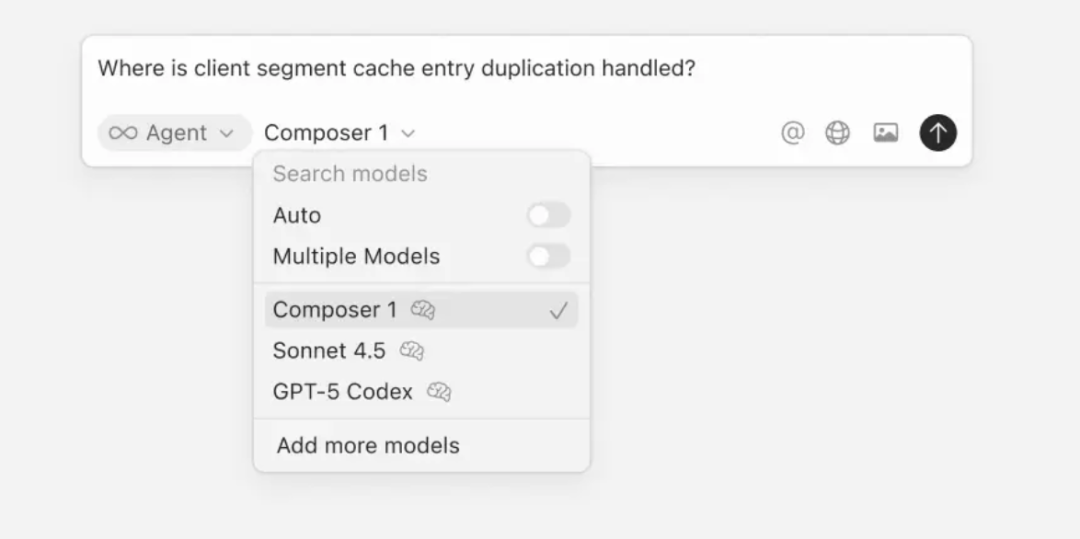

## **Shift Away from External Models**

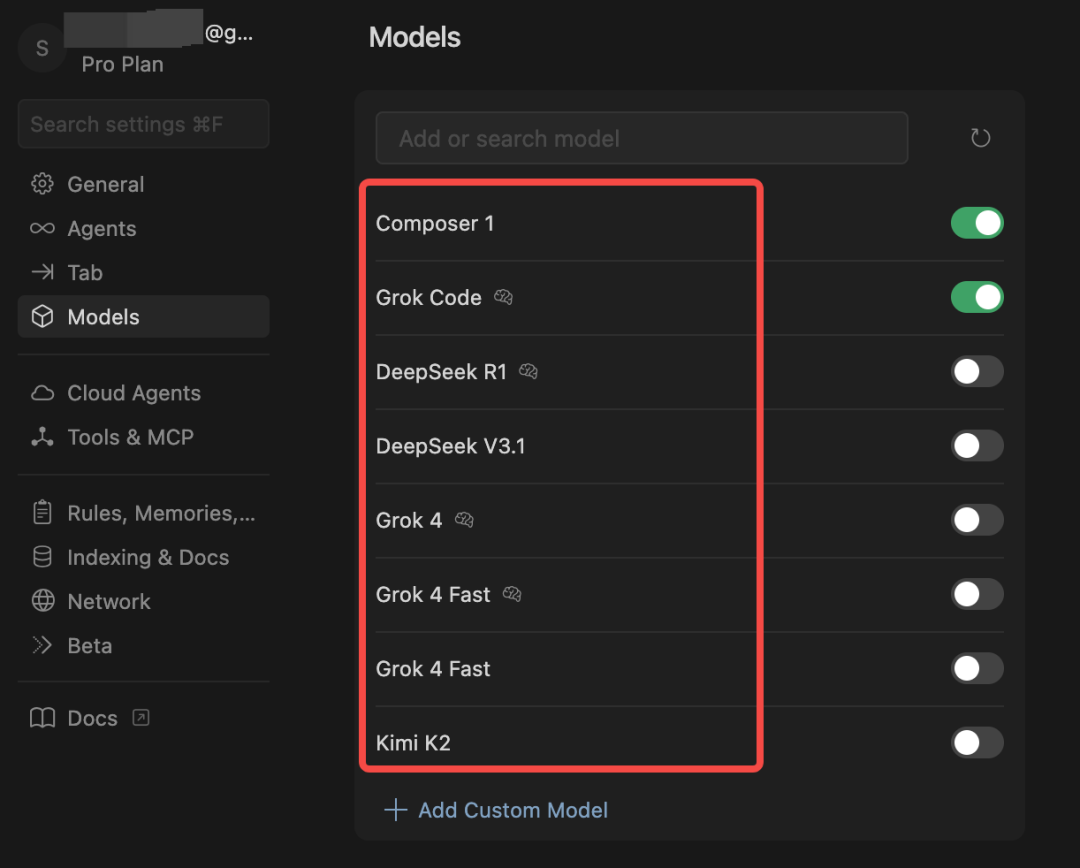

Post-update:

- GPT & Claude removed

- Available: Composer, Grok, DeepSeek, K2

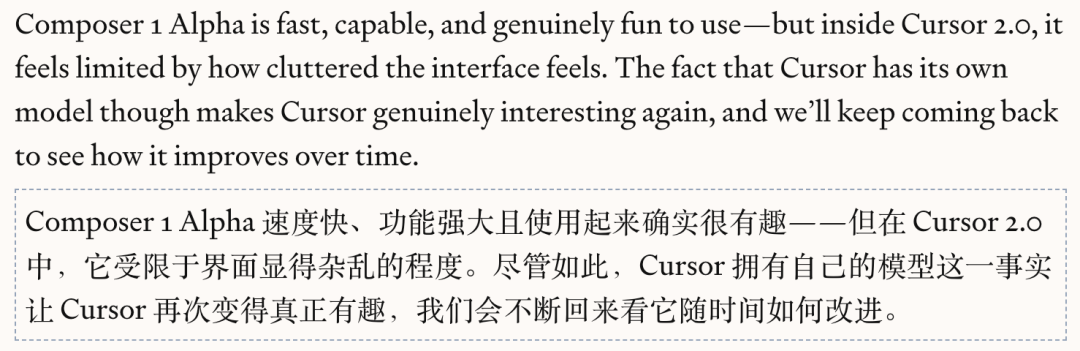

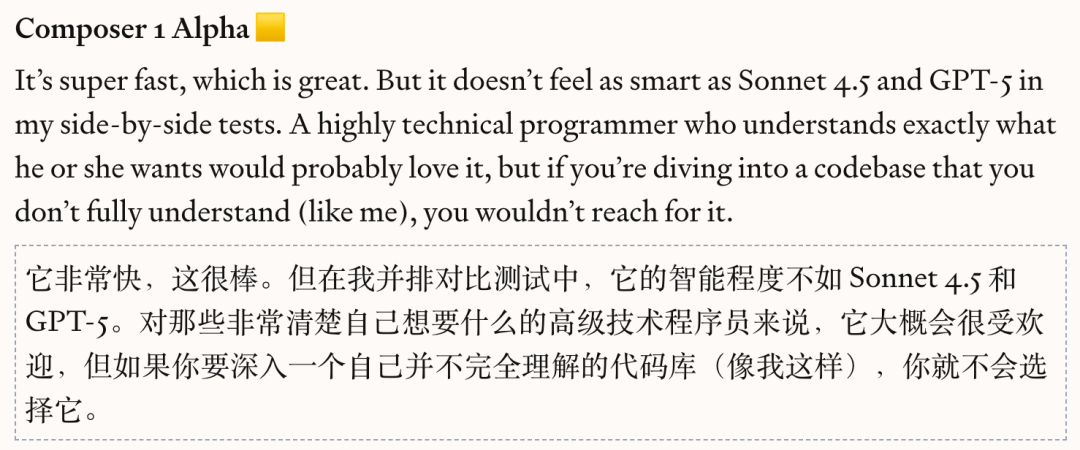

**Feedback:**

- Composer is very fast

- **Still less intelligent** than Sonnet 4.5 or GPT-5 (per some testers)

- CLI-first teams aren't fully sold yet

- Multi-agent shines on wide screens

---

## **Industry Context**

- Highly competitive AI coding space: Claude Code, Codex, and tools from Chinese vendors

- Cursor benefited from early mind-share

- Proprietary model development marks a **new milestone**

- The ultimate winner will likely be chosen by developers themselves

---

## **References**

- [Cursor Blog — 2.0](https://cursor.com/cn/blog/2-0)

- [Cursor Changelog — 2.0](https://cursor.com/cn/changelog/2-0)

