Karpathy: Reinforcement Learning Is Terrible, but Everything Else Is Worse

Karpathy’s Latest In‑Depth Interview

Andrej Karpathy, Tesla’s former Director of AI and a founding member of OpenAI, spent nearly two and a half hours answering thought‑provoking questions, including:

- Why reinforcement learning performs poorly (but alternatives perform even worse)

- Why artificial general intelligence (AGI) will likely continue the existing trend of roughly 2% annual GDP growth rather than break it

- Why autonomous driving has had an unusually long and challenging development cycle

Karpathy has already announced that he now works full‑time on education. His perspective on the future of learning is as insightful as his technical remarks.

Commenters summed it up: “The knowledge density is insane; two hours with Karpathy equals four hours with others.”

Buckle up — the knowledge bombs are coming.

---

The Decade of Agents — Not Just a Year

At the start, the host posed a popular question:

> Why do you say the future will be the decade of agents rather than the year of agents?

Karpathy’s key points:

- Early agents like Claude and Codex already show impressive capabilities.

- However, they will need years of evolution before reaching the ideal form.

- He estimates roughly ten years for this transformation.

Why the delay?

- Agents cannot yet work alongside humans the way a capable intern or employee would.

- Intelligence levels are insufficient.

- Multimodal abilities are limited.

- They lack the ability to control computers for complex workflows.

- They lack continual learning: you cannot teach them something and expect them to retain it permanently.

- Core cognitive architecture gaps remain.

Drawing on roughly 15 years in AI, Karpathy believes that solving these systemic challenges will take about a decade of progress.

---

How Developers Write Code Today

Karpathy outlined three current approaches:

- Full rejection of LLMs: all code is written manually.

- Middle ground: write most code by hand and lean on autocomplete (Karpathy’s approach).

- Prompt‑driven coding: instruct the model to “implement feature X” and let it generate the work.

His own project, nanochat, demands careful thought and precise design intent on nearly every line, which is why he stays with the middle approach.

Problems with Current Models

- Because they are trained on patterns common across the open web, they often impose production‑grade assumptions where none are needed.

- May introduce complexity, bloat, and deprecated APIs.

- Final code can be messy and hard to maintain.

Karpathy warns that industry narratives overstate real performance; substantial improvement is still needed.

---

On Reinforcement Learning (RL)

Karpathy says:

> RL is worse than most people think — but alternatives are even worse.

Example: Solving a math problem with RL

- Generate hundreds of possible solutions in exploration.

- When one is correct, reinforce every single step in that correct path, even if many steps were wasteful detours.

- This spends a large amount of compute to obtain a single binary signal (correct or incorrect), then applies that signal blindly to the whole trajectory.

As Karpathy bluntly put it: “It’s absurd.”
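
The credit assignment problem described above can be illustrated with a toy sketch. This is not Karpathy's code or any RL library's API: the `Rollout` type, the step labels, and the mean-reward baseline are assumptions chosen purely for illustration. The only feedback is whether the final answer was correct, and that one number is spread evenly over every step of the attempt.

```python
from dataclasses import dataclass


@dataclass
class Rollout:
    steps: list[str]  # intermediate steps of one attempt (hypothetical labels)
    correct: bool     # the only feedback: was the final answer right?


def outcome_credit(rollouts: list[Rollout]) -> dict[str, float]:
    """Assign every step of a rollout the same weight.

    The weight is the rollout's reward (1 or 0) minus the mean reward of
    the batch. The mean-reward baseline is an assumption of this sketch.
    """
    rewards = [1.0 if r.correct else 0.0 for r in rollouts]
    baseline = sum(rewards) / len(rewards)
    credit: dict[str, float] = {}
    for rollout, reward in zip(rollouts, rewards):
        advantage = reward - baseline
        for step in rollout.steps:
            # No per-step judgment: detours and key insights are treated alike.
            credit[step] = credit.get(step, 0.0) + advantage
    return credit


rollouts = [
    Rollout(["set up equation", "wasteful detour", "solve for x"], correct=True),
    Rollout(["set up equation", "arithmetic slip"], correct=False),
]
credit = outcome_credit(rollouts)
for step, weight in credit.items():
    print(f"{step}: {weight:+.2f}")
```

In this toy batch, the wasteful detour receives exactly the same positive weight as the step that actually solved the problem, because the binary outcome carries no information about which steps mattered.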

---

Human vs. AI Cognitive Process

Karpathy notes:

- Humans don’t attempt hundreds of random trials — they reason.

- Upon finding an answer, humans conduct a complex review, identifying what worked and why.

Reading as an example:

- LLM “reading” = next‑word prediction and raw pattern intake.

- Human reading = integrating information, internalizing ideas, and restructuring knowledge to fit existing mental models.

His proposal:

Include a “thinking and digestion” phase during pre‑training so models can genuinely integrate new information with prior understanding.

---

Redefining AGI

The host compared AGI progression to education levels — from high school, to university, to PhD — via reinforcement learning.

Karpathy disagrees. His preferred definition, aligned with early OpenAI:

> AGI can perform any economically valuable task at or beyond human level.

Autonomy in Work

Even in highly automatable roles (e.g., call center agents), AGI won’t instantly replace humans outright. Instead, autonomy will shift:

- AI handles 80% of routine tasks

- Humans supervise the remaining 20% — managing exceptions and oversight.

---

Will AGI Trigger an Intelligence Explosion?

Karpathy’s view:

- We are already in a phase of gradual intelligence growth, visible in the exponential curve of historical GDP.

- Industrial revolution = physical automation.

- Early software = digital automation.

- AGI will continue the same growth trend (~2% per year), not cause sudden massive jumps.
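
To make the "same trend, no sudden jump" claim concrete, here is a small sketch of what steady compounding implies. It assumes a constant 2% annual rate, which is a simplification; the function names are illustrative.

```python
import math


def years_to_double(rate: float) -> float:
    """Years for output to double at a constant annual growth rate."""
    return math.log(2) / math.log(1 + rate)


def growth_factor(rate: float, years: int) -> float:
    """Total multiplier after compounding `rate` for `years` years."""
    return (1 + rate) ** years


rate = 0.02  # the ~2% per year figure quoted above
print(f"Doubling time: {years_to_double(rate):.1f} years")
print(f"Growth over a century: {growth_factor(rate, 100):.2f}x")
```

At 2% per year the economy doubles roughly every 35 years and grows about sevenfold in a century. That is still an exponential curve, just one without a visible discontinuity at any single point.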

---

Tesla and Autonomous Driving Challenges

Karpathy clarified: autonomous driving is still far from finished.

Key reasons for the long timelines:

- Demonstration vs. product: Demos can be easy, but robust products are extremely hard — especially where failure costs are high.

- The “nines of reliability” challenge: Each additional “9” in reliability (e.g., 90% → 99% → 99.9%) requires massive effort.

- At Tesla, they may have reached two or three “nines” — but many more remain.

Autonomous driving must handle countless edge cases, just like production‑grade software must avoid catastrophic bugs.
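
The "nines" arithmetic can be sketched as follows. The trial count of one million is a hypothetical figure chosen for illustration, not a number from the interview, and the function names are assumptions.

```python
def failure_rate(nines: int) -> float:
    """Failure probability at a given number of nines (2 nines = 99%)."""
    return 10.0 ** -nines


def expected_failures(nines: int, trials: int) -> float:
    """Expected number of failures across `trials` independent attempts."""
    return trials * failure_rate(nines)


TRIALS = 1_000_000  # hypothetical number of driving situations
for nines in range(1, 6):
    reliability = 100 * (1 - failure_rate(nines))
    failures = expected_failures(nines, TRIALS)
    print(f"{nines} nine(s): {reliability:.3f}% reliable, "
          f"about {failures:,.0f} failures per {TRIALS:,} situations")
```

Each extra nine removes 90% of the failures that remain, so every step leaves a smaller and rarer set of edge cases to find and fix, which is one way to read why the effort per nine stays high.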

---

Karpathy’s Education Vision

Karpathy is building a top‑tier modern technical school offering a true mentor experience.

His personal example:

- Learned Korean: started in small classes, switched to one‑on‑one tutoring.

- A great tutor can instantly gauge your current knowledge and ask the right questions.

- Even strong LLMs can’t yet do this — but human mentors tailor challenges just right.

Flagship course: LLM101n

- Includes nanochat as its capstone project.

- Plans to build intermediate content, recruit TAs, and refine the curriculum for top learning outcomes in AI.

---

Public Reactions

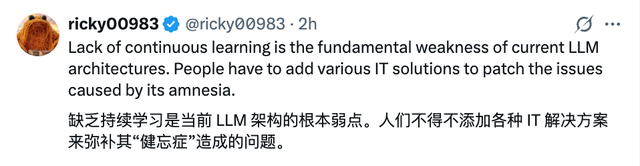

Some commenters emphasize LLM “forgetfulness”:

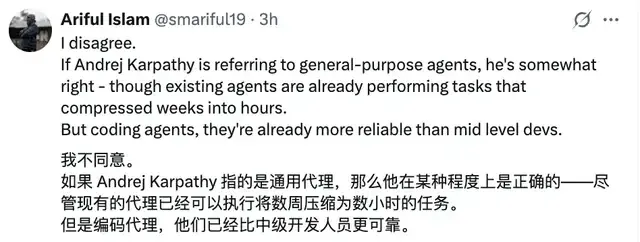

Others completely disagree, claiming coding agents are already reliable.


---

Reference: https://www.dwarkesh.com/p/andrej-karpathy