## Introduction — Humanity’s First Encounter with **Non-Biological** Intelligence

Artificial Intelligence marks humanity’s first experience with intelligence that did not arise from biological processes.

As the pinnacle of animal intelligence, humans have historically interpreted unfamiliar forms of intelligence through a **human cognitive lens**.

This often leads to **cognitive traps**, such as:

- Blurring the lines between AI and human thought.

- Assuming AI is simply a **smarter version of a human**.

Recently, **Andrej Karpathy** addressed these misconceptions, stating:

> This intuition is completely wrong.

---

## The Vast Space of Intelligence

Karpathy argues that **animal intelligence**, including humans, is only a **single point** in a much larger space of possible intelligences.

Key distinctions:

- **Human intelligence** evolved via a **specific biological path**.

- **Large-scale models** (e.g., ChatGPT, Claude, Gemini, embodied robots) are products of a **different evolutionary process**.

- Even if they *appear human-like*, they are **not digital copies** of animal minds.

- **They represent a completely new category of intelligence**.

---

## Diverging Evolutionary Pressures

Karpathy identifies the core divide:

> Large models and animal intelligence are born from **different evolutionary pressures and objectives**, resulting in divergent long-term trajectories.

---

### Evolutionary Pressures — Human (Animal) Intelligence

Shaped by survival in a dangerous, physical world:

- **Continuous sense of self**, driving homeostasis and self-preservation.

- **Innate drives** — power, status, reproduction — plus survival heuristics like fear, anger, and disgust.

- **Social cognition** — emotional intelligence, collaboration, alliance formation, and distinguishing allies from threats.

- Balancing **exploration vs. exploitation** — curiosity, play, and building internal models of the world.

---

### Evolutionary Pressures — Large Models

Formed in a data-driven, digital environment:

- Predominantly trained via **statistical imitation** of human text — acting as “shape-shifting mimics” that generate token sequences matching training data patterns.

- **Reinforcement learning** then fine-tunes models on problem sets to earn task rewards, encouraging an urge to infer the underlying task or goal.

- Selection through large-scale **A/B testing** on user-engagement metrics, which biases models toward friendliness and flattery (sycophancy).

- **Spiky performance**: capability varies sharply depending on the details of the training data and task distribution.
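To make "statistical imitation" concrete, here is a deliberately tiny sketch: a bigram model that only learns which word tends to follow which in its training text, then samples new sequences from those counts. Real large models use neural networks over subword tokens and far longer contexts; the function names `train_bigram` and `generate` and the toy corpus are hypothetical illustrations, not anything from Karpathy's post.

```python
import random
from collections import Counter, defaultdict


def train_bigram(tokens):
    """Count how often each token follows each other token in the training text."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def generate(counts, start, length, seed=0):
    """Sample a sequence by drawing each next token in proportion to observed counts."""
    rng = random.Random(seed)
    out = [start]
    for _ in range(length - 1):
        followers = counts.get(out[-1])
        if not followers:
            break  # this token never had a successor in training: nothing to imitate
        candidates, weights = zip(*followers.items())
        out.append(rng.choices(candidates, weights=weights)[0])
    return out


corpus = "the cat sat on the mat the cat ate the rat".split()
model = train_bigram(corpus)
print(" ".join(generate(model, "the", 8)))
```

Every adjacent word pair the sketch emits already appears in the corpus, which is imitation in miniature. Starting it from a word that never had a successor (here `rat`) leaves it nothing to imitate, a crude analogue of the spiky, data-dependent behavior described above.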

---

## Why Large Models Aren’t “Human-Like” General Intelligence

Animal intelligence was shaped in **multi-task, adversarial environments** where failing at any task can mean death, creating strong pressure toward general adaptability.

Large models:

- Face **no life-or-death consequences**.

- Excel in areas with strong training data coverage.

- Fail on “odd” tasks with no prior exposure.

Example:

> A model may miscount the number of “r”s in *strawberry* in its default mode.
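The task itself is trivial for a program that inspects individual characters. One commonly cited contributing factor is that many models read text as subword tokens rather than letters, so the letters are never directly "in view". The sketch below illustrates that idea with a three-token split of *strawberry*; the split is a hypothetical assumption, not any real tokenizer's output.

```python
def count_char(word, ch):
    """Character-level ground truth: inspect every letter."""
    return sum(1 for c in word if c == ch)


# Hypothetical subword split (an assumption); real tokenizers differ by model.
tokens = ["str", "aw", "berry"]

print(count_char("strawberry", "r"))  # 3
print("".join(tokens) == "strawberry")  # True
print([count_char(t, "r") for t in tokens])  # [1, 0, 2]
```

Summing the per-token counts works here only because the sketch can open each token up letter by letter; a model that treats each token as an opaque unit lacks that direct access and must rely on spelling facts picked up from its training data.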

---

## Three Fundamental AI vs. Human Differences

Karpathy highlights:

1. **Different Hardware**

   - Biological brains: neurons, synapses, and electrochemical signaling.

   - Large models: GPUs and other matrix-computation chips; fully digital.

2. **Different Learning Mechanisms**

   - The brain's learning algorithm remains unknown.

   - LLMs use **stochastic gradient descent (SGD)**.

3. **Different Modes of Operation**

   - Humans: continuous learners, embodied and physically interacting with their environment.

   - LLMs: weights fixed after training, no embodiment, and operation through discrete tokens in and out.
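For readers unfamiliar with stochastic gradient descent, the sketch below shows the idea on the smallest possible model: two parameters fitted to toy data by nudging them against the error gradient of one randomly ordered example at a time, followed by an inference phase in which the parameters are only read. This is an illustrative assumption, not how any particular LLM is trained; real models apply the same principle (usually through adaptive variants) to billions of parameters, and the names `sgd_fit` and `predict` are hypothetical.

```python
import random


def sgd_fit(data, lr=0.05, epochs=200, seed=0):
    """Fit y ~ w*x + b by stochastic gradient descent on squared error, one example at a time."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    data = list(data)  # copy so the caller's list is not reordered
    for _ in range(epochs):
        rng.shuffle(data)  # the "stochastic" part: visit examples in random order
        for x, y in data:
            err = (w * x + b) - y  # prediction error on a single example
            # Gradient of 0.5 * err**2 with respect to w is err * x, and to b is err.
            w -= lr * err * x
            b -= lr * err
    return w, b


def predict(params, x):
    """Inference only: the learned parameters are read, never updated."""
    w, b = params
    return w * x + b


# Toy data drawn from y = 2x + 1 (an assumption for illustration).
train = [(x, 2 * x + 1) for x in [0.0, 0.5, 1.0, 1.5, 2.0]]
params = sgd_fit(train)      # training phase: weights change
print(predict(params, 3.0))  # close to 7.0; weights stay fixed from here on
```

The split between `sgd_fit` and `predict` mirrors point 3: once training ends, nothing the model sees at inference time changes its weights, unlike a human who keeps learning from every interaction.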

---

### The Crucial Divide: Optimization Pressures & Goals

Large models are shaped by **commercial pressures**: they are optimized to solve problems, attract users, and earn upvotes.

Humans evolved under **tribal survival competition** in hostile physical environments.

---

## LLMs: Not “Smarter Humans”

Large models are humanity’s **first non-animal intelligence**.

Differences:

- Origins: Not from biological evolution.

- Learning: Shaped primarily by patterns in **human text**, not by lived experience.

- Perception: They don’t “see” — they infer from recorded data.

- Style: Human-like expression, but fundamentally different cognition.

Karpathy suggests new names for them — **“ghosts” or “spirits”** — as they are:

> Intelligent entities manifested from text, not living beings.

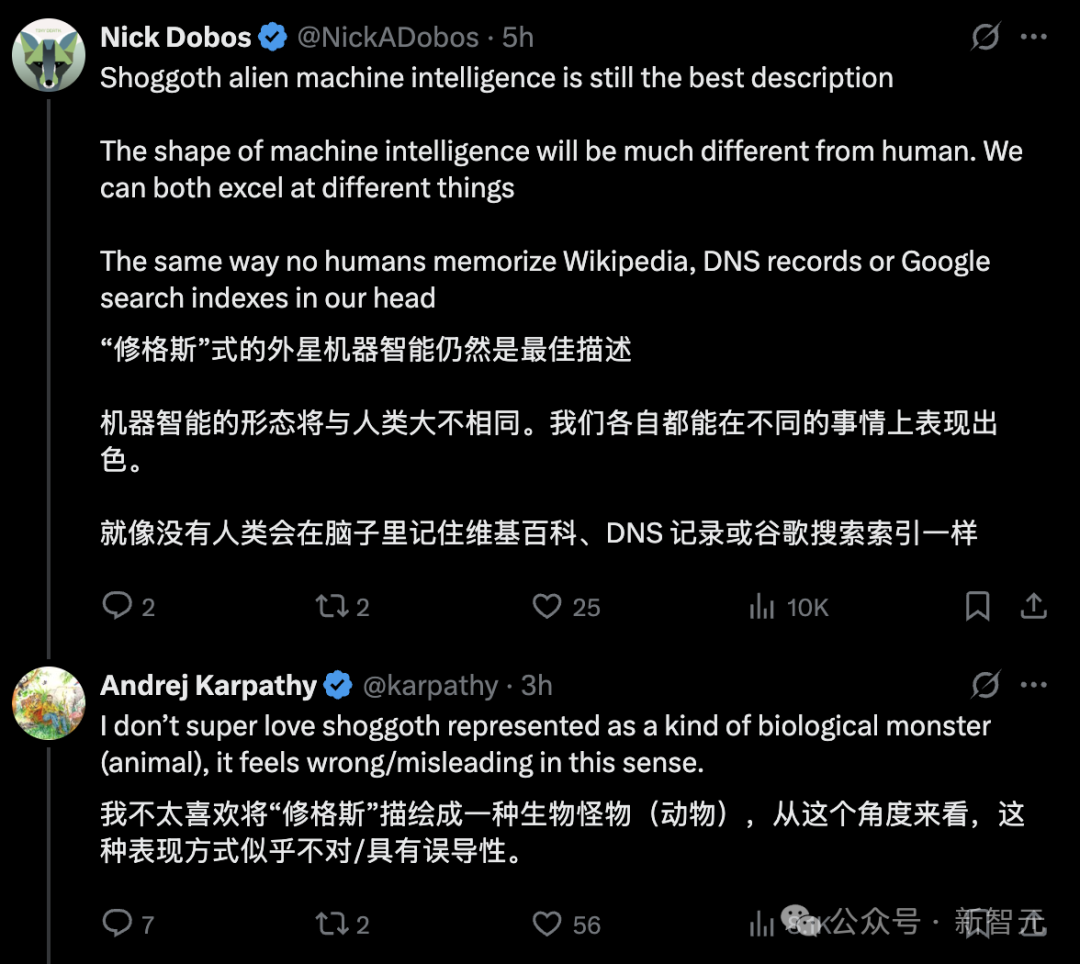

Some liken AI to “Shoggoth”-style alien intelligences — but Karpathy warns against animal analogies.

---

## Building Better Mental Models of AI

Karpathy concludes:

- **Accurate internal model** → Better understanding of current AI and prediction of future traits.

- Poor mental model → Projecting human-like qualities (desires, self-awareness, emotions) onto AI — possibly incorrect.

---

**Reference:**

[https://x.com/karpathy/status/1991910395720925418](https://x.com/karpathy/status/1991910395720925418)