Kimi K2 Insights

Kimi K2 Thinking — Overview

Kimi K2 Thinking is a new release from Moonshot, a Chinese AI lab, building on their earlier Kimi K2 model introduced in July.

This Thinking variant keeps the same scale: 1 trillion total parameters in a Mixture-of-Experts architecture, with 32B active. It is released under a custom modified MIT license, so it is not strictly open source.

---

Key Capabilities (from Moonshot)

> Starting with Kimi K2, we built it as a thinking agent that reasons step-by-step while dynamically invoking tools.

> It sets a new state‑of‑the‑art on Humanity's Last Exam (HLE), BrowseComp, and other benchmarks by increasing multi-step reasoning depth and maintaining stable tool use across 200–300 calls.

> It is a native INT4 quantization model with a 256k context window, delivering lossless reductions in inference latency and GPU memory usage.

---

Technical Details

- Model size on Hugging Face: 594GB vs. Kimi K2’s 1.03TB

- The reduction is likely due to INT4 quantization, which should also make the model faster and cheaper to host

- Hosting: Currently only by Moonshot

- Access:

  - Moonshot API

  - OpenRouter proxy

- Plugins tested:

  - llm-moonshot

  - llm-openrouter
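The size figures above can be sanity-checked with some back-of-envelope arithmetic. The sketch below uses only the numbers quoted in this article (1 trillion parameters, 594GB, 1.03TB) and assumes, as a simplification, that every weight is stored as a 4-bit integer, meaning 0.5 bytes per parameter.

```shell
#!/bin/sh
# Back-of-envelope size check using the figures quoted in this article.
# Assumption: every weight is stored in 4 bits (0.5 bytes), a simplification.

PARAMS=1000000000000      # 1 trillion parameters
K2_THINKING_GB=594        # Hugging Face repo size, Kimi K2 Thinking
K2_GB=1030                # Hugging Face repo size, Kimi K2 (1.03TB)

# Size in GB if all weights were 4-bit.
int4_gb=$(awk -v p="$PARAMS" 'BEGIN { printf "%.0f", p * 0.5 / 1e9 }')

# Ratio of the new repo size to the old one.
ratio=$(awk -v a="$K2_THINKING_GB" -v b="$K2_GB" 'BEGIN { printf "%.2f", a / b }')

echo "Pure INT4 estimate: ${int4_gb} GB"          # prints 500
echo "K2 Thinking / K2 size ratio: ${ratio}"      # prints 0.58
```

The pure INT4 estimate of 500 GB is somewhat below the reported 594GB. One plausible explanation, offered here as an assumption rather than something the source states, is that some tensors are kept at higher precision.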

---

Potential Significance

Community reception is highly positive, prompting speculation:

Could Kimi K2 Thinking be the first open-weight model capable of rivalling the best from OpenAI and Anthropic, especially for long, tool-driven agent workflows?

---

Using Kimi K2 Thinking with the `llm` CLI

Below is an example of running identical prompts via different providers.

Moonshot Provider

llm install llm-moonshot

llm keys set moonshot # paste key

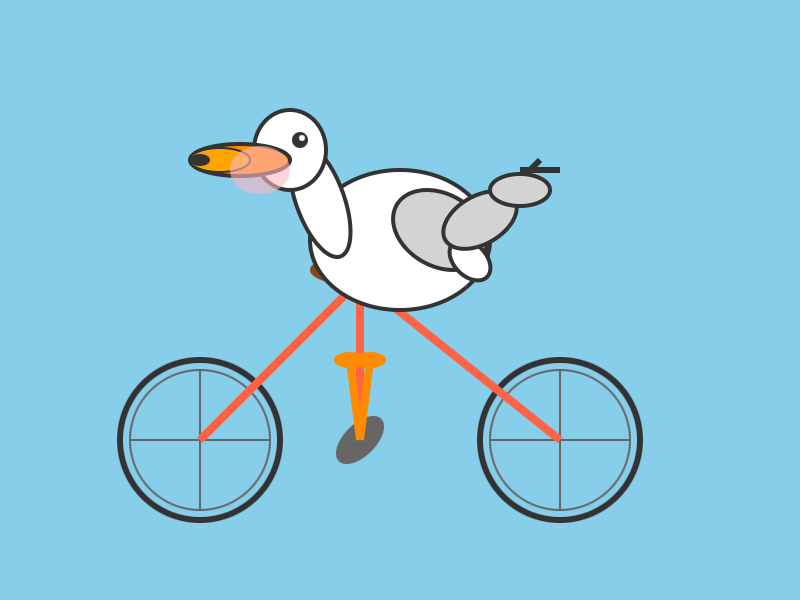

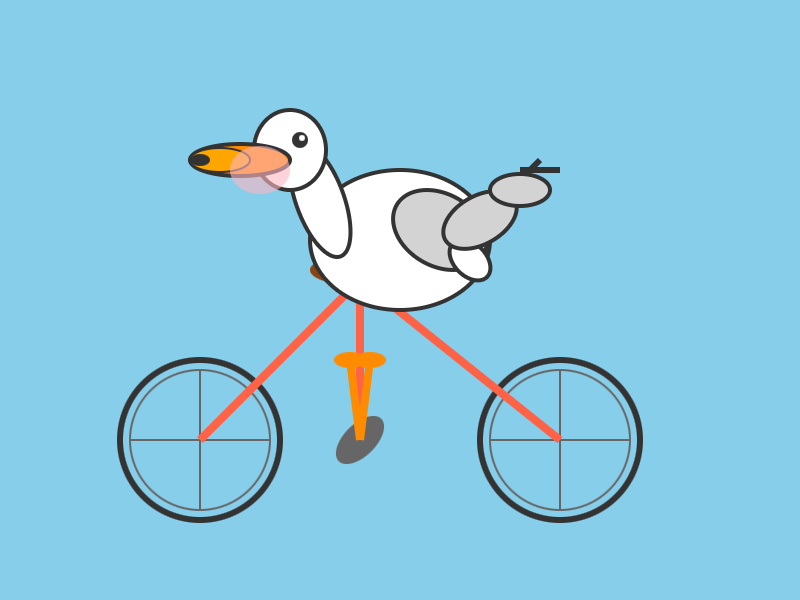

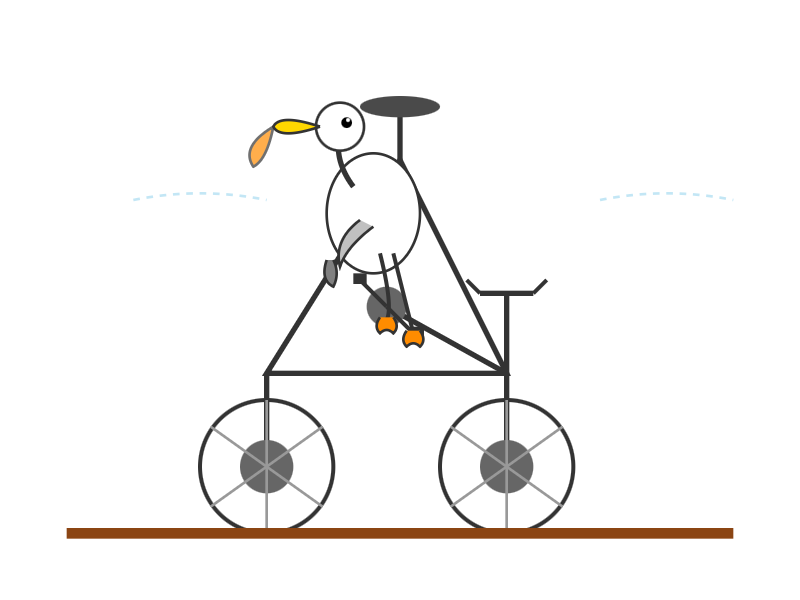

llm -m moonshot/kimi-k2-thinking 'Generate an SVG of a pelican riding a bicycle'

OpenRouter Provider

llm install llm-openrouter

llm keys set openrouter # paste key

llm -m openrouter/moonshotai/kimi-k2-thinking \
  'Generate an SVG of a pelican riding a bicycle'

What this does:

- Installs the model provider plugin

- Sets your API key

- Runs the same prompt through each provider

- Leaves you with one SVG per provider to compare side by side
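The commands above print each response to the terminal, so any comparison has to be done by hand. A minimal sketch of one way to save both responses to files follows. The helper names `model_to_filename` and `run_prompt_on_models` and the output naming scheme are hypothetical. The only `llm` feature assumed is the `llm -m MODEL PROMPT` invocation already shown.

```shell
#!/bin/sh
# Run one prompt against several llm model IDs and save each reply to a file.
# The helpers below are hypothetical and are not part of llm itself.

PROMPT='Generate an SVG of a pelican riding a bicycle'

# Turn a model ID such as "moonshot/kimi-k2-thinking" into a safe file name.
model_to_filename() {
    printf '%s.txt\n' "$(printf '%s' "$1" | tr '/' '_')"
}

# Usage: run_prompt_on_models OUTPUT_DIR MODEL_ID [MODEL_ID ...]
run_prompt_on_models() {
    out_dir=$1
    shift
    mkdir -p "$out_dir" || return 1
    for model in "$@"; do
        out_file="$out_dir/$(model_to_filename "$model")"
        # A failure for one provider should not stop the others.
        if llm -m "$model" "$PROMPT" > "$out_file"; then
            echo "saved $out_file"
        else
            echo "failed: $model" >&2
        fi
    done
}

# Example (needs both plugins installed and both keys set):
# run_prompt_on_models out \
#     moonshot/kimi-k2-thinking \
#     openrouter/moonshotai/kimi-k2-thinking
```

Each saved file holds the raw text response, which may wrap the SVG in other text, so the markup may need to be extracted before it will open in a browser.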

---


Summary

- Kimi K2 Thinking delivers INT4 efficiency and 256k context at trillion-scale parameters

- Real-world access via Moonshot API and OpenRouter

- Easily tested in workflows using the `llm` tool

- Potential to rival state-of-the-art proprietary models

