Large Quantities of NVIDIA GPUs Sitting Idle in Microsoft Data Centers

2025-11-05 00:01 · Jilin

Even Sam Altman Is Worried

---

Source: Quantum Bit (shared via Large Model Intelligence)

---

A Surplus of GPUs… Gathering Dust

Imagine this — Microsoft owns mountains of GPUs, yet many are sitting unused in storage.

In the latest BG2 podcast episode, Microsoft CEO Satya Nadella openly admitted to an unprecedented problem: the company has vast GPU resources, but power shortages and limited physical space mean they cannot put them all to work.

The challenge?

> “The biggest problem is not chip supply, but electricity capacity — and whether we can build data centers near power sources fast enough. Otherwise, you’ll just have piles of chips lying in a warehouse.”

---

01 · Microsoft’s GPUs Sit Idle Due to Energy Bottlenecks

Inside Microsoft, large numbers of NVIDIA AI chips remain unused — not because computing demand is met, but because supporting infrastructure is lacking.

Two main causes:

- Electricity shortages

- Too few “warm shells” — physical data center spaces with power and cooling already installed
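
The constraint can be sketched as a simple calculation: the number of GPUs a site can run is capped by its power, not by how many chips are in stock. Every number here (site capacity, per-GPU draw, PUE, fleet size) is a hypothetical placeholder, not a Microsoft figure, and `deployable_gpus` is an illustrative helper.

```python
def deployable_gpus(gpus_owned, site_power_mw, watts_per_gpu, pue):
    """Return how many owned GPUs a site can actually power."""
    # PUE (power usage effectiveness) accounts for cooling and other overhead:
    # only site_power / pue is left for the IT equipment itself.
    it_power_watts = site_power_mw * 1_000_000 / pue
    power_limited = int(it_power_watts // watts_per_gpu)
    return min(gpus_owned, power_limited)


# Hypothetical inputs chosen only to make the arithmetic easy to follow.
owned = 100_000
usable = deployable_gpus(owned, site_power_mw=100, watts_per_gpu=1_500, pue=1.25)
idle = owned - usable
print(f"powered: {usable}, idle: {idle}")
```

Under these assumed inputs, nearly half of the chips stay in the warehouse even though none of them is surplus to demand.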

Nadella flagged this issue last year, noting:

> “We face electricity constraints, not chip supply constraints.”

The issue is industry-wide:

- Sam Altman echoed Nadella’s point — AI’s real challenge is matching compute with energy and infrastructure.

- Altman has already invested in energy ventures like Oklo (fission), Helion (fusion), and Exowatt (solar), though large-scale deployment is still far off.

- In the short term, AI data centers will continue relying on natural gas and renewables.

---

02 · Hoarding Chips Is Risky in an Energy-Constrained World

In the past five years, U.S. electricity demand has surged due to AI and cloud computing.

Power growth is lagging far behind data center construction speeds.

Key dynamics:

- Years-long timelines for new power plants vs. quarterly pace of AI expansion

- Developers increasingly build behind-the-meter generation — feeding electricity directly into data centers

- Even solar PV, the fastest energy source to deploy, takes from several months to a year to connect

- AI demand curves shift rapidly — a single model launch can drive spikes

Risk: Infrastructure overbuild could leave assets idle if AI demand slows.

Altman’s stance: Demand will not slow. Cheaper, more efficient compute triggers even greater total usage, in line with the Jevons paradox.

> “If compute costs fell 100×, usage would grow far beyond that.”
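
One way to read this claim is through a constant-elasticity demand curve, a textbook simplification rather than anything Altman specified. In the sketch below, `elasticity` is an assumed parameter: usage growing "far beyond" 100× after a 100× cost drop corresponds to an elasticity above 1, which is also the case where total spending on compute rises.

```python
def usage_multiplier(cost_drop, elasticity):
    """Usage growth when unit cost falls by `cost_drop`x (constant elasticity)."""
    return cost_drop ** elasticity


def spend_multiplier(cost_drop, elasticity):
    """Change in total spending: usage grows, but each unit costs less."""
    return usage_multiplier(cost_drop, elasticity) / cost_drop


# Elasticity values are illustrative assumptions, not measured figures.
for elasticity in (0.8, 1.0, 1.2):
    usage = usage_multiplier(100, elasticity)
    spend = spend_multiplier(100, elasticity)
    print(f"elasticity {elasticity}: usage x{usage:.1f}, spend x{spend:.2f}")
```

Only when elasticity exceeds 1 does a 100× cost drop produce more than 100× usage, which is the Jevons-style outcome the quote describes.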

---

Altman’s Proposal: Energy as an AI Strategic Asset

Altman urges the U.S. to expand power generation by 100 GW/year and treat electricity as a strategic AI resource.

Microsoft’s strategy shift:

- Will avoid overbuying any single GPU generation

- Idle chips lose value quickly, since new architectures arrive within 2–3 years

- Data centers depreciate over 6 years — unused equipment ties up capital and wastes value
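
The cost of idle hardware can be approximated with straight-line depreciation over the 6-year life mentioned above. Both the straight-line method and the purchase cost are assumptions made for illustration; the article does not say how Microsoft accounts for these assets.

```python
def idle_depreciation(purchase_cost, idle_months, life_years=6):
    """Book value lost while equipment sits unused (straight-line assumption)."""
    life_months = life_years * 12
    monthly_loss = purchase_cost / life_months
    # Equipment cannot lose more than its full purchase cost.
    return monthly_loss * min(idle_months, life_months)


# Hypothetical purchase cost, picked so the monthly figure is a round number.
cost = 72_000_000
lost = idle_depreciation(cost, idle_months=12)
print(f"value lost after 12 idle months: ${lost:,.0f}")
```

Under this assumption, a year in storage writes off one sixth of the purchase price before the hardware has done any work.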

---

03 · Rethinking Performance: Energy-Efficient Chips

Since the late 1990s, U.S. electricity production has held at ~4 trillion kWh/year.

Yet pressure on the grid has kept growing:

- Population is up 20%

- Grid infrastructure is aging

- Lifestyle changes and technological progress are driving load growth

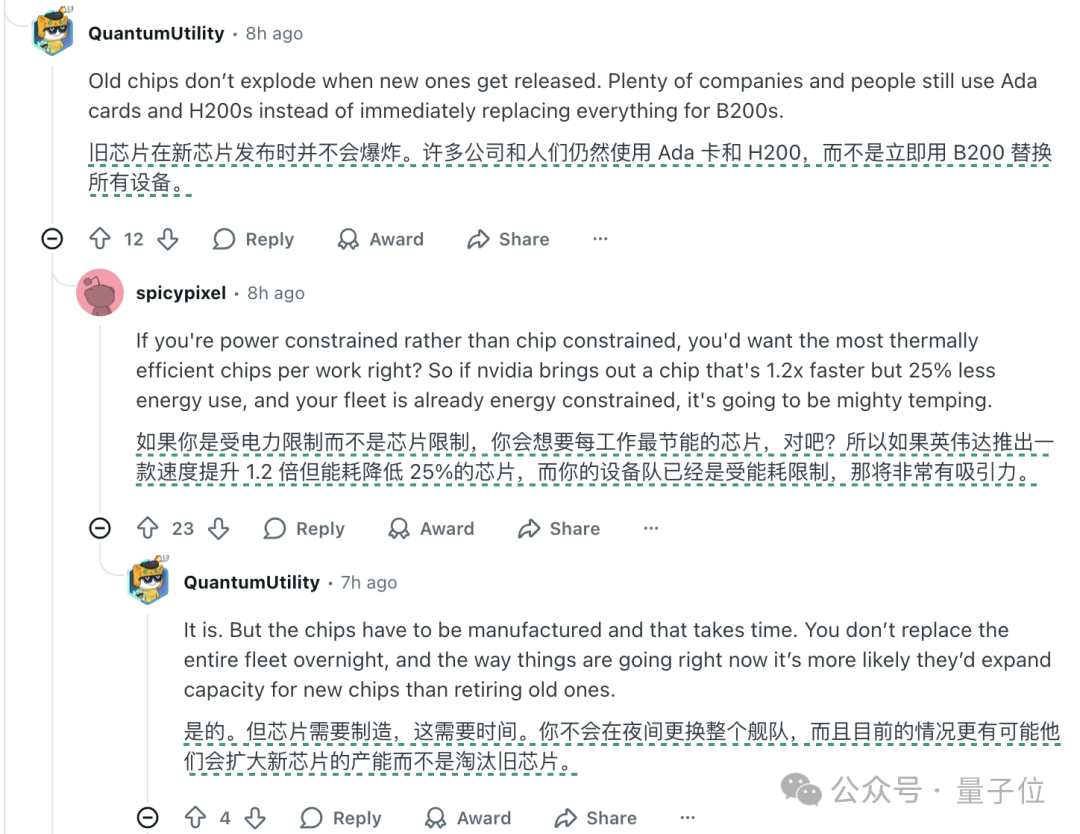

On Reddit, one suggestion stands out:

> “If electricity is the limit, you’d want the most energy-efficient chip possible. If a new GPU is 1.2× faster but uses 25% less power, that’s a win.”

Industry takeaway: Performance metrics may need to shift toward efficiency, not just raw speed.
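
The Reddit example works out as follows. This is a minimal sketch using only the two figures from the quote (1.2× speed, 25% less power); `perf_per_watt_gain` is an illustrative helper.

```python
def perf_per_watt_gain(speedup, power_ratio):
    """Relative performance per watt of a new chip versus the old one."""
    return speedup / power_ratio


# Figures from the quote: 1.2x faster, 25% less power (0.75x the draw).
gain = perf_per_watt_gain(speedup=1.2, power_ratio=0.75)
print(f"performance per watt: x{gain:.2f}")

# With a fixed power budget, the same megawatts feed 1 / 0.75 times as many
# chips, each 1.2x faster, so total throughput rises by the same factor.
chips_ratio = 1 / 0.75
throughput_gain = chips_ratio * 1.2
print(f"throughput at fixed power: x{throughput_gain:.2f}")
```

In a power-limited data center, the 1.6× gain in performance per watt matters more than the 1.2× headline speedup, because it sets how much work each available megawatt can do.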

---

04 · Microsoft Expands AI Infrastructure to Energy-Rich Regions

Microsoft announced on X:

- Received U.S. government approval to ship NVIDIA chips to the UAE for AI data centers

- Will invest $8 billion in Gulf nations over 4 years in data centers, cloud, and AI projects

Why it matters:

Energy-abundant regions may become AI infrastructure hubs, since GPUs deployed there are less likely to sit idle for lack of power.
