Master Local Large Models: Official Ollama Learning Guide Released

Released by the Datawhale Team 🚀

---

Open Source Contribution: Datawhale Handy-Ollama Team

---

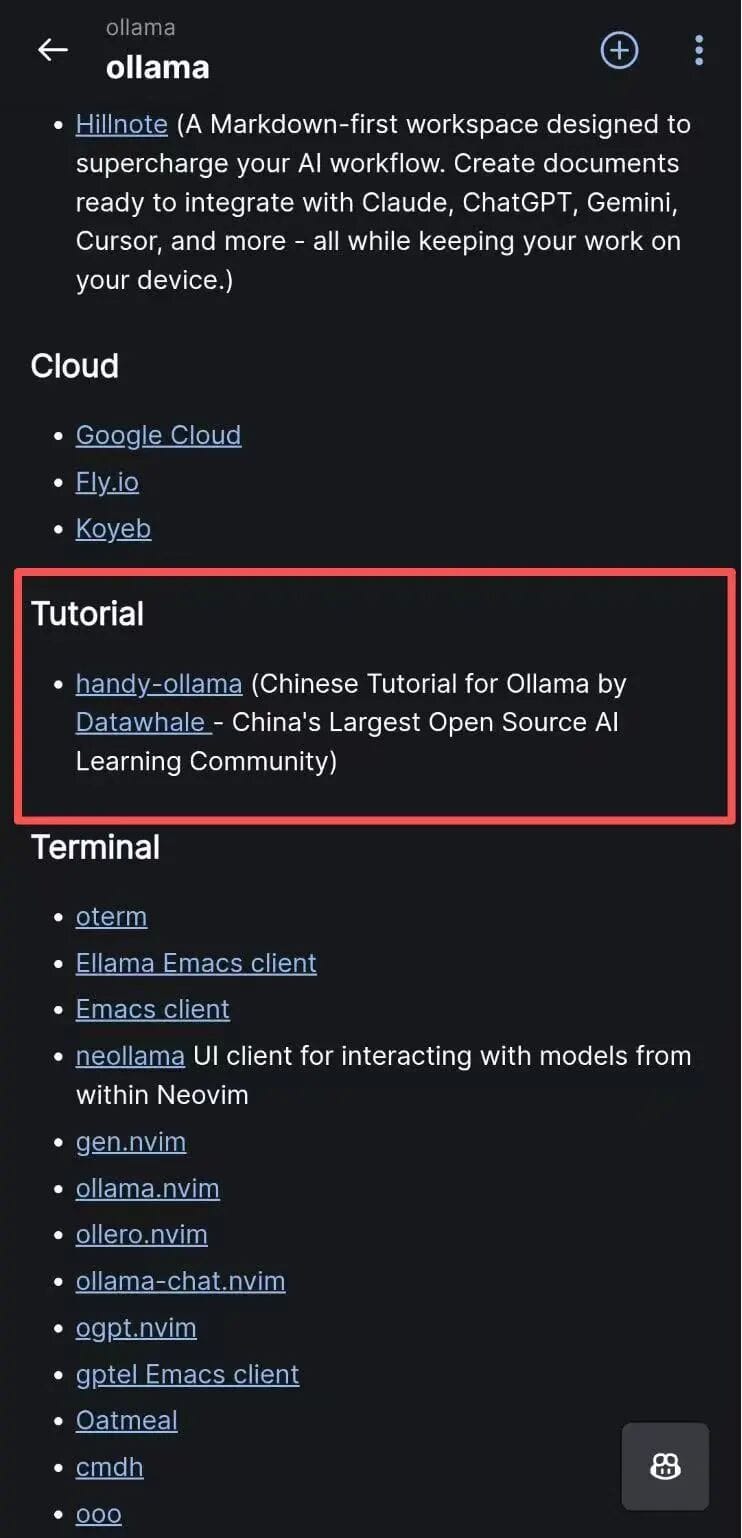

🎉 Ollama’s Only Officially Approved Tutorial

On November 6, Ollama officially listed the Datawhale Handy-Ollama open-source tutorial, making it currently the only designated official learning tutorial.

📌 Official listing:

https://github.com/ollama/ollama#tutorial

> 💬 Readers who wish to join the discussion group can leave a comment at the end of this article.

---

Why We Open-Sourced Handy-Ollama

Mission: Make Large Model Technology Accessible to Everyone

Large models are transforming industries, with countless open-source options now available.

However, many learners and developers face barriers:

- 💸 High hardware costs

- 📦 Dependence on dedicated GPUs

The question becomes: how can we bring large model technology to anyone's computer affordably and easily, so that people can explore AI and build applications without demanding hardware?

Ollama as the Solution

Ollama is a lightweight tool for local deployment that can run mainstream large models directly on CPU hardware, drastically lowering the barrier to entry.

The challenge? Few comprehensive, hands-on tutorials exist.

That’s where our project comes in.

---

Introducing Handy-Ollama

Handy-Ollama is an open-source tutorial offering systematic learning resources and practical guides, enabling anyone to:

- Deploy large models locally

- Explore application development workflows

- Experience AI technology firsthand

- Contribute to making AI accessible across industries and households

---

📚 Project Overview

Handy-Ollama — Your hands-on guide to using Ollama.

In This Tutorial, You'll Learn How To:

- 🔍 Install & configure Ollama step-by-step

- 🏗 Load and customize your own models

- 🛠 Use Ollama’s REST API

- ⚙ Integrate Ollama with LangChain in detail

- 🚀 Deploy visual interfaces & example AI applications
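To give a flavor of the REST API topic above, here is a minimal sketch (not taken from Handy-Ollama itself) that sends a prompt to a locally running Ollama server using only the Python standard library. It assumes Ollama is already running on its default port 11434 and that a model has been pulled; the model name `llama3.2` in the usage note is an illustrative assumption, so substitute whichever model you have installed.

```python
import json
import urllib.request

# Assumption: Ollama's local server is listening on its default port 11434.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a POST request for Ollama's /api/generate endpoint."""
    payload = {
        "model": model,
        "prompt": prompt,
        # Ask for a single JSON object instead of a stream of partial chunks.
        "stream": False,
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_generate_response(body: bytes) -> str:
    """Extract the generated text from a non-streaming response body."""
    return json.loads(body)["response"]


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send a prompt to the local Ollama server and return the model's reply."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return parse_generate_response(resp.read())
```

With the server running and the (hypothetical) model pulled, `print(generate("llama3.2", "Why is the sky blue?"))` should print the model's answer. Setting `"stream": False` keeps the sketch simple; by default Ollama streams the reply as a sequence of JSON lines, which you would read incrementally instead.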

📂 Open-source repo:

https://github.com/datawhalechina/handy-ollama

---

🎯 Target Audience

Perfect for learners who:

- ✅ Want to run large models locally without GPU requirements

- ✅ Want effective inference on consumer-grade hardware

- ✅ Need local deployment for app development

- ✅ Seek secure and reliable model management

---

🙏 Special Thanks

We thank all contributors who made this tutorial possible.

---

💡 How to Support

If you find Handy-Ollama useful:

- ⭐ Give us a star

- 👀 Click "Watch" on GitHub
