MCP Meets Code Execution: Building More Efficient AI Agents


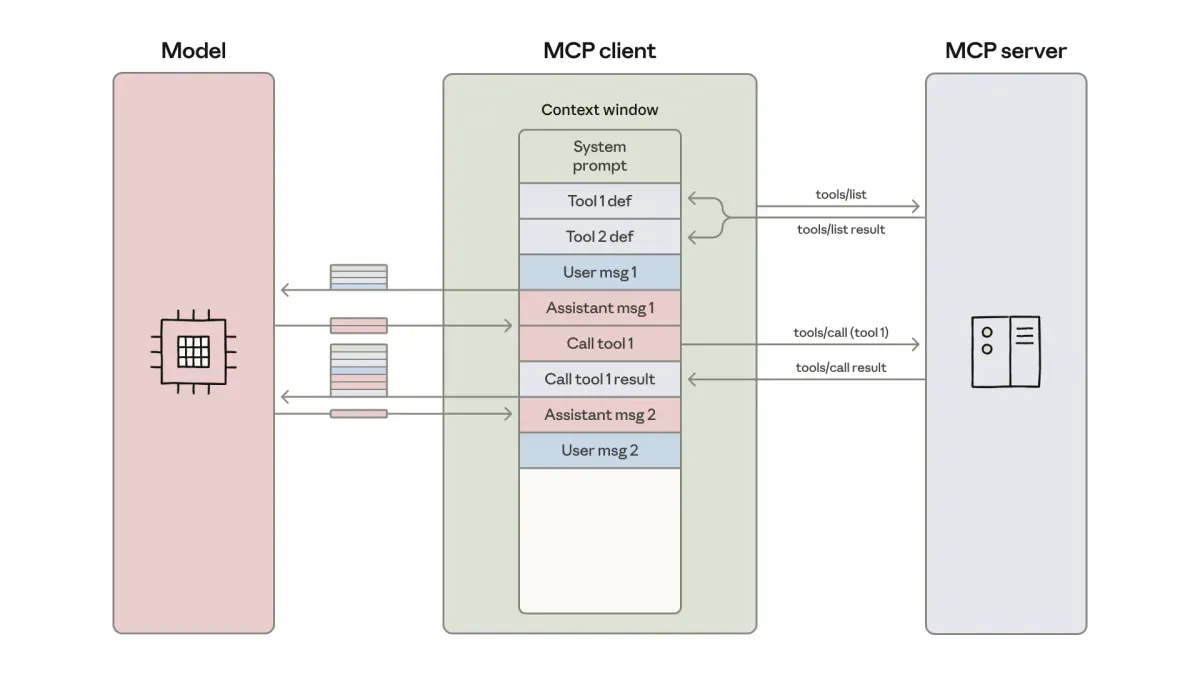

Model Context Protocol (MCP) is an open standard for connecting AI agents to external systems.

---

Why MCP Matters

Traditionally, connecting agents to tools or datasets required custom development for each “agent-tool” pair, leading to:

- Severe fragmentation.

- Duplicated effort.

- Low scalability.

MCP fixes this by offering one universal protocol:

- Implement MCP once in your agent.

- Gain access to the entire integration ecosystem.

Since its November 2024 launch, MCP has seen rapid growth:

- Thousands of MCP servers created.

- SDK support for all major languages.

- Industry adoption as the de facto agent-tool connection standard.

---

The Core Problem: Token Overload

Agents now connect to hundreds or thousands of MCP tools.

But current practices introduce severe performance and cost issues:

Common Inefficient Patterns

- Loading all tool definitions at start-up

- Passing every result through the context window

> Context window = Agent’s working memory for reasoning. It’s powerful but space-limited.

These patterns slow agents down and increase token consumption, where a token is the basic unit of text a model processes.

---

1. Tool Definitions Overwhelm the Context Window

Most MCP clients preload tool definitions before execution:

gdrive.getDocument

Description: Retrieves a document from Google Drive

Parameters:

documentId (required, string): The ID of the document to retrieve

fields (optional, string): Specific fields to return

Returns: Document object with title, body, metadata, permissions

salesforce.updateRecord

Description: Updates a Salesforce record

Parameters:

objectType (required, string)

recordId (required, string)

data (required, object)

Returns: Updated record object with confirmation

Why this is costly:

- Thousands of tools = hundreds of thousands of tokens.

- They occupy valuable context space before the actual task starts.
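To make the cost of preloading concrete, here is a rough back-of-the-envelope sketch. It assumes about 4 characters per token, which is a common rule of thumb rather than an exact tokenizer, and the `ToolDefinition` shape, `estimateTokens`, and `estimatePreloadCost` are hypothetical names introduced only for illustration.

```typescript
// Hypothetical shape for a preloaded tool definition (an assumption for
// this sketch, not a structure defined by the MCP specification).
interface ToolDefinition {
  toolName: string;
  description: string;
  parameters: string[];
}

// Rough heuristic: about 4 characters per token. Real tokenizers differ.
const CHARS_PER_TOKEN = 4;

function estimateTokens(text: string): number {
  return Math.ceil(text.length / CHARS_PER_TOKEN);
}

// Estimate the tokens consumed when every definition is serialized into context.
function estimatePreloadCost(tools: ToolDefinition[]): number {
  return tools.reduce((sum, tool) => sum + estimateTokens(JSON.stringify(tool)), 0);
}

const getDocumentDef: ToolDefinition = {
  toolName: "gdrive.getDocument",
  description: "Retrieves a document from Google Drive",
  parameters: [
    "documentId (required, string): The ID of the document to retrieve",
    "fields (optional, string): Specific fields to return",
  ],
};

// Simulate a large catalog by repeating one definition 1,000 times.
const catalog: ToolDefinition[] = Array.from({ length: 1000 }, () => getDocumentDef);
console.log(`~${estimatePreloadCost(catalog)} tokens before the task starts`);
```

The absolute numbers depend on the tokenizer and on how verbose each definition is, but the cost grows linearly with the number of tools loaded up front.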

---

2. Extra Token Use from Intermediate Results

Direct tool calls often duplicate large data in context:

TOOL: gdrive.getDocument('abc123')

→ returns full transcript (stored in context)

TOOL: salesforce.updateRecord(..., Notes: full transcript)

→ transcript sent to model again

Impact:

- A 2-hour meeting transcript can add roughly 50,000 tokens of overhead, because the full text passes through the context a second time.

- Large datasets can overflow context limits.

---

The Solution: Code Execution with MCP

Instead of direct tool invocation:

- Treat MCP servers as code APIs.

- Let the agent write and run code to call these APIs.

- Load only the tools needed for the task.

- Process and filter results inside the execution environment before sending minimal output to the model.

Example Project Structure

servers/

├── google-drive/

│ ├── getDocument.ts

│ └── index.ts

├── salesforce/

│ ├── updateRecord.ts

│ └── index.ts

---

Sample Tool File

// ./servers/google-drive/getDocument.ts

import { callMCPTool } from "../../../client.js";

interface GetDocumentInput {

documentId: string;

}

interface GetDocumentResponse {

content: string;

}

export async function getDocument(input: GetDocumentInput): Promise<GetDocumentResponse> {

return callMCPTool<GetDocumentResponse>('google_drive__get_document', input);

}

---
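The `getDocument` wrapper in the sample tool file relies on a `callMCPTool` helper that the article does not show. The following is a minimal sketch of what such a helper might look like, assuming an in-memory map of handlers stands in for a real MCP client connection. `ToolHandler`, `toolRegistry`, and `registerTool` are hypothetical names, and a real client module would forward each call to an MCP server instead.

```typescript
// Hypothetical stand-in for the client module. A real implementation would
// forward each call to an MCP server rather than to a local map.
type ToolHandler = (input: unknown) => Promise<unknown>;

const toolRegistry = new Map<string, ToolHandler>();

function registerTool(toolName: string, handler: ToolHandler): void {
  toolRegistry.set(toolName, handler);
}

// Generic helper: look up a tool by name, invoke it, and cast the result to
// the response type the caller expects. No runtime validation is performed.
async function callMCPTool<T>(toolName: string, input: unknown): Promise<T> {
  const handler = toolRegistry.get(toolName);
  if (!handler) {
    throw new Error(`Unknown MCP tool: ${toolName}`);
  }
  return (await handler(input)) as T;
}

// Fake tool so the sketch runs without a server.
registerTool("google_drive__get_document", async (input) => {
  const { documentId } = input as { documentId: string };
  return { content: `contents of ${documentId}` };
});

callMCPTool<{ content: string }>("google_drive__get_document", { documentId: "abc123" })
  .then((doc) => console.log(doc.content));
```

Keeping the helper generic lets each tool file declare its own input and response interfaces while sharing a single call path.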

Multi-Tool Code Execution Example

import * as gdrive from './servers/google-drive';

import * as salesforce from './servers/salesforce';

const transcript = (await gdrive.getDocument({ documentId: 'abc123' })).content;

await salesforce.updateRecord({

objectType: 'SalesMeeting',

recordId: '00Q5f000001abcXYZ',

data: { Notes: transcript }

});

Result: token usage drops from roughly 150,000 tokens to 2,000 (a 98.7% reduction).

---

Benefits of MCP + Code Execution

1. On-Demand Tool Loading

- Model reads only needed tools.

- Optional `search_tools` endpoint with adjustable detail levels.
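One way a `search_tools` lookup with adjustable detail levels could work is sketched here. The catalog entries, the `DetailLevel` values, and the `searchTools` signature are assumptions made for illustration, not an API taken from the MCP specification.

```typescript
// Hypothetical catalog entry; field names are assumptions for illustration.
interface ToolEntry {
  toolName: string;
  description: string;
  inputSchema: Record<string, string>;
}

type DetailLevel = "name" | "summary" | "full";

const toolCatalog: ToolEntry[] = [
  {
    toolName: "gdrive.getDocument",
    description: "Retrieves a document from Google Drive",
    inputSchema: { documentId: "string (required)", fields: "string (optional)" },
  },
  {
    toolName: "salesforce.updateRecord",
    description: "Updates a Salesforce record",
    inputSchema: { objectType: "string", recordId: "string", data: "object" },
  },
];

// Return only tools matching the query, at the requested level of detail,
// so the model reads a few short entries instead of the whole catalog.
function searchTools(query: string, detail: DetailLevel): Array<Partial<ToolEntry>> {
  const needle = query.toLowerCase();
  return toolCatalog
    .filter((t) => `${t.toolName} ${t.description}`.toLowerCase().includes(needle))
    .map((t) => {
      if (detail === "name") return { toolName: t.toolName };
      if (detail === "summary") return { toolName: t.toolName, description: t.description };
      return t;
    });
}

console.log(searchTools("salesforce", "name"));
```

An agent could first search at the `"name"` level to find candidates, then request `"full"` detail only for the one or two tools it intends to call.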

2. Context-Efficient Results

Filter large datasets in code before returning to model:

const allRows = await gdrive.getSheet({ sheetId: 'abc123' });

const pending = allRows.filter(r => r.Status === 'pending');

console.log(pending.slice(0,5)); // show only first 5

---

3. Rich Logic Support

Loops, conditionals, and real error handling without repeated context hops:

let found = false;

while (!found) {

const messages = await slack.getChannelHistory({ channel: 'C123456' });

found = messages.some(m => m.text.includes('deployment complete'));

if (!found) await new Promise(r => setTimeout(r, 5000));

}

console.log('Deployment notification received');

---
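The polling loop in the rich-logic example runs until the message appears, so it would never stop if the deployment failed. A bounded variant with basic error handling might look like the sketch below. `pollUntil`, its options, and `fakeDeploymentCheck` are hypothetical names, and the fake check stands in for a call such as `slack.getChannelHistory`.

```typescript
// Poll an async check until it succeeds or the attempt budget runs out.
// `pollUntil` and its options are hypothetical names for this sketch.
async function pollUntil(
  check: () => Promise<boolean>,
  options: { intervalMs: number; maxAttempts: number },
): Promise<boolean> {
  for (let attempt = 1; attempt <= options.maxAttempts; attempt++) {
    try {
      if (await check()) return true;
    } catch (err) {
      // Treat a failed call as "not yet" and keep polling; log for visibility.
      console.log(`attempt ${attempt} failed: ${String(err)}`);
    }
    if (attempt < options.maxAttempts) {
      await new Promise<void>((resolve) => setTimeout(resolve, options.intervalMs));
    }
  }
  return false;
}

// Stand-in for a real channel-history check: fails once, then succeeds on
// the third call.
let fakeCalls = 0;
async function fakeDeploymentCheck(): Promise<boolean> {
  fakeCalls += 1;
  if (fakeCalls === 1) throw new Error("temporary network error");
  return fakeCalls >= 3;
}

pollUntil(fakeDeploymentCheck, { intervalMs: 10, maxAttempts: 5 }).then((found) =>
  console.log(found ? "Deployment notification received" : "Gave up waiting"),
);
```

Because the whole loop runs inside the execution environment, the model only sees the final one-line outcome rather than every intermediate poll.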

4. Privacy-Preserving Workflows

Sensitive data can stay in the execution environment, so the model sees only what the code explicitly logs or returns:

const masked = contacts.map(c => ({

name: c.name,

phone: tokenize(c.phone),

email: tokenize(c.email)

}));

await salesforce.importContacts(masked);

---
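The privacy example calls a `tokenize` function without defining it. One possible shape for it is sketched below, assuming the goal is to swap each sensitive value for an opaque placeholder and keep the lookup table inside the execution environment. `createTokenizer`, `untokenize`, and the sample contacts are hypothetical.

```typescript
// Hypothetical tokenizer: swaps sensitive values for opaque placeholders and
// keeps the mapping inside the execution environment only.
function createTokenizer(prefix: string) {
  const tokenToValue = new Map<string, string>();
  const valueToToken = new Map<string, string>();

  // Return a stable placeholder for a value, creating one on first sight.
  function tokenize(value: string): string {
    const existing = valueToToken.get(value);
    if (existing !== undefined) return existing;
    const token = `[${prefix}_${tokenToValue.size + 1}]`;
    tokenToValue.set(token, value);
    valueToToken.set(value, token);
    return token;
  }

  // Restore the real value just before data is sent to the destination tool.
  function untokenize(token: string): string {
    const value = tokenToValue.get(token);
    if (value === undefined) throw new Error(`Unknown token: ${token}`);
    return value;
  }

  return { tokenize, untokenize };
}

const emails = createTokenizer("EMAIL");
const sampleContacts = [
  { contactName: "Ada", email: "ada@example.com" },
  { contactName: "Grace", email: "grace@example.com" },
];

// Only the masked rows would ever be logged or returned to the model.
const maskedContacts = sampleContacts.map((c) => ({
  contactName: c.contactName,
  email: emails.tokenize(c.email),
}));
console.log(maskedContacts);
```

Using a stable placeholder per value means the model can still reason about which rows share an email address without seeing the address itself.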

5. State Persistence & Skills

Store intermediate results and build reusable functions:

import * as fs from 'fs/promises';

await fs.writeFile('./workspace/leads.csv', csvData);

const saved = await fs.readFile('./workspace/leads.csv', 'utf-8');

A skill is a folder of reusable scripts plus a `SKILL.md` file that describes them for the model.
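As an illustration of a reusable function an agent might save as a skill, here is a sketch of a CSV helper that could produce the text written to `leads.csv`. The file location `./skills/rows-to-csv.ts`, the `Row` type, and both function names are assumptions for this example.

```typescript
// Hypothetical skill file, e.g. ./skills/rows-to-csv.ts (path is an assumption).
type Row = Record<string, string | number>;

// Quote a field only when it contains a comma, double quote, or newline.
function escapeCsvField(field: string): string {
  return /[",\n]/.test(field) ? `"${field.replace(/"/g, '""')}"` : field;
}

// Convert uniform rows to CSV text, using the first row's keys as the header.
function rowsToCsv(rows: Row[]): string {
  if (rows.length === 0) return "";
  const columns = Object.keys(rows[0]);
  const lines = rows.map((row) =>
    columns.map((col) => escapeCsvField(String(row[col] ?? ""))).join(","),
  );
  return [columns.map(escapeCsvField).join(","), ...lines].join("\n");
}

console.log(rowsToCsv([
  { company: "Acme, Inc.", stage: "pending" },
  { company: "Globex", stage: "won" },
]));
```

Once a helper like this is saved alongside a short description, later tasks can import it instead of regenerating the same logic.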

---

Security Considerations

Running agent-generated code requires:

- Sandboxing

- Resource limits

- Monitoring for safe execution
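Sandboxing is an infrastructure concern that a short snippet cannot cover, but one narrow kind of resource limit can be sketched in code: a cap on how many tool calls a script makes and how much output it sends back. `createExecutionBudget` and its methods are hypothetical, and a guard like this would complement process-level isolation rather than replace it.

```typescript
// Hypothetical guard that caps how many tool calls one script may make and
// how many characters it may log back to the model.
function createExecutionBudget(maxToolCalls: number, maxOutputChars: number) {
  let toolCalls = 0;
  let outputChars = 0;

  return {
    // Call before each tool invocation; throws once the cap is exceeded.
    chargeToolCall(): void {
      toolCalls += 1;
      if (toolCalls > maxToolCalls) {
        throw new Error(`Tool call limit of ${maxToolCalls} exceeded`);
      }
    },
    // Truncate output so a runaway script cannot flood the context window.
    limitOutput(text: string): string {
      const remaining = Math.max(0, maxOutputChars - outputChars);
      const allowed = text.slice(0, remaining);
      outputChars += allowed.length;
      return allowed;
    },
  };
}

const budget = createExecutionBudget(2, 10);
budget.chargeToolCall();
console.log(budget.limitOutput("hello world, this is long"));
```

CPU time, memory, and network access still need to be limited by the sandbox itself, since code running inside the environment could bypass an in-process guard.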

---

Summary

MCP connects agents to massive toolsets efficiently.

Code execution fixes context overflow by:

- On-demand tool loading.

- Pre-processing results locally.

- Supporting complex logic securely.

This approach:

- Reduces token cost.

- Improves speed & scalability.

- Preserves privacy.
