MCP vs API: Understanding the Core Differences


APIs and MCP both enable communication between systems.

At first glance they may seem alike: both allow one piece of software to request data or perform an action from another.

However, their purpose, operation, and intended audience differ significantly.

---

Table of Contents

- What is an API?

- What is MCP?

- Why MCP Exists

- MCP as a Bridge

- How MCP Works

- MCP in AI Content Publishing

- Why Not Just Use an API?

- MCP vs API in Practice

- Key Conceptual Difference

- Discovery and Schema

- Security and Privacy

- The Future of MCP

- Conclusion

---

What is an API?

An API — Application Programming Interface — is a set of defined rules and protocols that allow software systems to interact.

Think of it like a restaurant waiter:

You tell the waiter what you want, the kitchen prepares it, and the waiter brings it back — without you entering the kitchen yourself.

Example: fetching a GitHub user profile:

```http
GET https://api.github.com/users/john
```

Response (abbreviated):

```json
{
  "login": "john",
  "id": 12345,
  "followers": 120,
  "public_repos": 42
}
```

Key traits of APIs:

- Target user: Human developers

- Requirements: Code, request formatting, authentication handling

- Use cases: Payment gateways, weather services, databases
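For comparison, here is roughly what the developer side of that GitHub request involves, using only Python's standard library. It is a minimal sketch: `build_user_request`, `parse_user_profile`, and `fetch_user_profile` are hypothetical helper names, authentication is omitted because this endpoint is public, and parsing is split out so it can be tried on a canned response without a network call.

```python
import json
import urllib.parse
import urllib.request

GITHUB_API = "https://api.github.com"


def build_user_request(username: str) -> urllib.request.Request:
    """Build the HTTP request a developer would send for a GitHub user profile."""
    # The developer handles URL encoding and headers by hand.
    url = f"{GITHUB_API}/users/{urllib.parse.quote(username)}"
    return urllib.request.Request(
        url,
        headers={
            "Accept": "application/vnd.github+json",
            "User-Agent": "api-vs-mcp-example",  # GitHub rejects requests without a User-Agent
        },
    )


def parse_user_profile(body: bytes) -> dict:
    """Keep only the fields this example cares about from the JSON response."""
    data = json.loads(body)
    return {key: data.get(key) for key in ("login", "id", "followers", "public_repos")}


def fetch_user_profile(username: str) -> dict:
    """Send the request and parse the response (performs a real network call)."""
    with urllib.request.urlopen(build_user_request(username), timeout=10) as response:
        return parse_user_profile(response.read())


if __name__ == "__main__":
    # Canned response so the parsing step can be tried offline.
    sample = b'{"login": "john", "id": 12345, "followers": 120, "public_repos": 42}'
    print(parse_user_profile(sample))
```

Every step here (URL, headers, error handling, parsing) is the developer's job, which is the "Requirements" trait listed above.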

---

What is MCP?

MCP — Model Context Protocol — is a standard for enabling AI models to interact with external tools, data sources, and systems safely.

Unlike APIs, MCP is designed for large language models (LLMs) like GPT or Claude — not for direct human developer use.

---

Why MCP Exists

An AI model:

- Cannot directly make network requests

- Cannot set HTTP headers or manage authentication on its own

- Works by predicting text, not executing code

If asked:

> “Get the weather for Delhi”

The model might write code that would fetch the weather, but it cannot run that code.

MCP solves this by providing a controlled execution layer between the model and the real world.
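The division of labor can be sketched in a few lines: the model only ever produces text, and a host program decides whether that text names an allowed tool and, if so, runs it. This is a simplified illustration of the idea, not how any particular MCP client is built; the JSON shape of the model's output, the `ALLOWED_TOOLS` table, and the stubbed `get_weather` are all hypothetical.

```python
import json
from typing import Any, Callable


def get_weather(city: str) -> dict:
    """Hypothetical stub standing in for a real weather lookup."""
    return {"city": city, "temp_c": 31}


# The only actions the host is willing to perform on the model's behalf.
ALLOWED_TOOLS: dict[str, Callable[..., Any]] = {"get_weather": get_weather}


def execute_model_output(model_text: str) -> Any:
    """Parse a tool request emitted by the model and run it only if it is allowed."""
    try:
        request = json.loads(model_text)
    except json.JSONDecodeError:
        return model_text  # plain prose: nothing to execute
    if not isinstance(request, dict):
        return model_text
    name = request.get("tool")
    arguments = request.get("arguments", {})
    if name not in ALLOWED_TOOLS:
        raise PermissionError(f"model requested unknown tool: {name!r}")
    if not isinstance(arguments, dict):
        raise ValueError("arguments must be a JSON object")
    return ALLOWED_TOOLS[name](**arguments)


if __name__ == "__main__":
    # What a model might emit after being asked "Get the weather for Delhi".
    print(execute_model_output('{"tool": "get_weather", "arguments": {"city": "Delhi"}}'))
```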

---

MCP as a Bridge

MCP acts as middleware, exposing tools the AI can call.

Each tool schema defines:

- What the tool does

- Required inputs

- Returned outputs

This lets the model request actions in a well-defined format, while the server, not the model, performs any network or file access.
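A tool schema is plain data. MCP describes a tool's inputs with a JSON Schema object in the `inputSchema` field, which lets a server check incoming arguments before running anything. The sketch below hand-rolls a tiny subset of that check (required fields and basic types) to show the idea; a real server would typically use a full JSON Schema validator, and `check_arguments` is a hypothetical helper.

```python
from typing import Any

# A tool description in the shape MCP uses: name, description, and a
# JSON Schema ("inputSchema") describing the expected arguments.
GET_WEATHER_TOOL = {
    "name": "get_weather",
    "description": "Get weather for a city",
    "inputSchema": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}

# JSON Schema primitive types mapped to Python types (simplified: bool also counts as int).
JSON_TYPES = {
    "string": str,
    "number": (int, float),
    "integer": int,
    "boolean": bool,
    "object": dict,
    "array": list,
}


def check_arguments(tool: dict, arguments: dict[str, Any]) -> list[str]:
    """Return a list of problems with `arguments`; an empty list means they passed."""
    schema = tool["inputSchema"]
    properties = schema.get("properties", {})
    problems = []
    # Every required argument must be present.
    for name in schema.get("required", []):
        if name not in arguments:
            problems.append(f"missing required argument: {name}")
    # Declared arguments must have the declared type; undeclared ones are not checked here.
    for name, value in arguments.items():
        declared = properties.get(name)
        if declared is None:
            continue
        expected = JSON_TYPES.get(declared.get("type"))
        if expected is not None and not isinstance(value, expected):
            problems.append(f"{name} should be of type {declared['type']}")
    return problems


if __name__ == "__main__":
    print(check_arguments(GET_WEATHER_TOOL, {"city": "Delhi"}))
    print(check_arguments(GET_WEATHER_TOOL, {"city": 42}))
```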

---

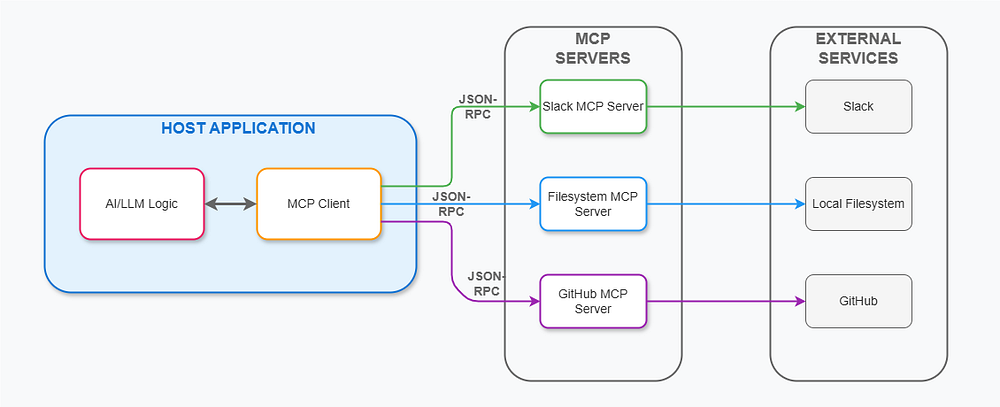

How MCP Works

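Think of an MCP server as a process that hosts callable tools.

Example (Python MCP server, using the official MCP Python SDK):

```python
# Requires: pip install mcp requests
from mcp.server.fastmcp import FastMCP
import requests

mcp = FastMCP(name="github-tools")


@mcp.tool()
def get_repos(username: str) -> list[dict]:
    """Fetch public repositories for a GitHub user."""
    url = f"https://api.github.com/users/{username}/repos"
    response = requests.get(url, timeout=10)
    response.raise_for_status()  # surface HTTP errors instead of returning an error body
    return response.json()


if __name__ == "__main__":
    mcp.run()  # uses the stdio transport by default
```

The model never builds the URL or sends the HTTP request itself: it asks for `get_repos` to be called with `{"username": "john"}`, and the server returns the repository data as structured output.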
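In the server example, `@mcp.tool()` registers `get_repos` as a tool, and the SDK builds the tool's input schema from the function's signature. To make that less magical, here is a toy, standard-library-only registry that does something similar. It is an illustrative sketch, not the MCP SDK's implementation; `ToolRegistry` and its methods are hypothetical, and the `get_repos` below returns placeholder names instead of calling GitHub.

```python
import inspect
from typing import Any, Callable, get_type_hints

# Python annotations mapped to JSON Schema type names (only simple types handled).
PY_TO_JSON = {str: "string", int: "integer", float: "number", bool: "boolean", list: "array", dict: "object"}


class ToolRegistry:
    """Toy stand-in for the bookkeeping an MCP server framework does."""

    def __init__(self) -> None:
        self._tools: dict[str, Callable[..., Any]] = {}

    def tool(self) -> Callable[[Callable[..., Any]], Callable[..., Any]]:
        """Decorator that registers a function as a callable tool."""
        def register(func: Callable[..., Any]) -> Callable[..., Any]:
            self._tools[func.__name__] = func
            return func
        return register

    def describe(self) -> list[dict]:
        """Build name/description/inputSchema entries from signatures and docstrings."""
        entries = []
        for name, func in self._tools.items():
            hints = get_type_hints(func)
            properties, required = {}, []
            for param in inspect.signature(func).parameters.values():
                # Unknown annotations fall back to "string" in this toy.
                properties[param.name] = {"type": PY_TO_JSON.get(hints.get(param.name), "string")}
                if param.default is inspect.Parameter.empty:
                    required.append(param.name)
            entries.append({
                "name": name,
                "description": inspect.getdoc(func) or "",
                "inputSchema": {"type": "object", "properties": properties, "required": required},
            })
        return entries

    def call(self, name: str, arguments: dict[str, Any]) -> Any:
        """Invoke a registered tool by name with keyword arguments."""
        return self._tools[name](**arguments)


registry = ToolRegistry()


@registry.tool()
def get_repos(username: str, limit: int = 5) -> list[str]:
    """Return placeholder repository names for a user (no network access)."""
    return [f"{username}/repo-{i}" for i in range(limit)]
```

Calling `registry.describe()` yields an entry whose `inputSchema` marks `username` as a required string and `limit` as an optional integer, which is the kind of description a model sees during discovery.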

---

MCP in AI Content Publishing

Platforms like AiToEarn (see its official website) demonstrate MCP's potential in real-world workflows:

- Cross-platform publishing

- Analytics tracking

- AI content monetization

AiToEarn, which is open source, provides tools for Douyin, Kwai, WeChat, Bilibili, Rednote (Xiaohongshu), Facebook, Instagram, LinkedIn, Threads, YouTube, Pinterest, and X (Twitter). It bridges AI-generated content with multiple networks while keeping execution safe and scalable.

---

Why Not Just Use an API?

Models cannot safely call APIs directly because they lack:

- Secure execution environments

- Safe secret storage

- Built-in rate limiting and safeguards

Allowing unrestricted API access risks:

- Secret leakage

- Harmful operations

- Data breaches
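One way a server-side layer can reduce the secret-leakage risk is to scrub known secret values from anything a tool returns before it reaches the model. The sketch below assumes secrets live in environment variables; `redact_secrets` and the `WEATHER_API_KEY` variable name are hypothetical, and this naive string replacement complements, rather than replaces, keeping secrets out of tool output in the first place.

```python
import os


def redact_secrets(text: str, secret_env_vars: list[str]) -> str:
    """Replace the values of the given environment variables with a placeholder."""
    for var in secret_env_vars:
        secret = os.environ.get(var)
        if secret:  # skip unset or empty values, which would match everywhere
            text = text.replace(secret, f"[REDACTED:{var}]")
    return text


if __name__ == "__main__":
    os.environ["WEATHER_API_KEY"] = "abc123"  # demo value only
    # e.g. an error message that echoes the request URL, key included
    tool_output = "GET https://api.weatherapi.com/v1/current.json?key=abc123&q=Delhi failed"
    print(redact_secrets(tool_output, ["WEATHER_API_KEY"]))
```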

---

MCP vs API in Practice

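API example (the key can leak):

```python
import requests


def get_weather(city):
    # The key is hard-coded: any model or person that sees this code also sees the secret.
    api_key = "SECRET_KEY"
    url = f"https://weatherapi.example.com/data?city={city}&key={api_key}"
    return requests.get(url).json()
```

MCP tool example (the key stays on the server):

```python
import os

import requests

# Assumes the `mcp` FastMCP instance from the server example above.
# WEATHER_API_KEY is a hypothetical environment variable set on the server.
@mcp.tool()
def get_weather(city: str) -> dict:
    """Get current weather for a city."""
    api_key = os.environ["WEATHER_API_KEY"]  # read at call time, never sent to the model
    response = requests.get(
        "https://api.weatherapi.com/v1/current.json",
        params={"key": api_key, "q": city},
        timeout=10,
    )
    response.raise_for_status()
    return response.json()
```

The AI only says:

> Call `get_weather` with `city=Delhi`

The key stays hidden from the AI.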
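On the wire, MCP messages are JSON-RPC 2.0. When the model decides to call `get_weather` with `city=Delhi`, the MCP client sends the server a `tools/call` request that carries only the tool name and arguments, so no API key appears in it. A minimal sketch of building that message; `make_tool_call` and the id counter are illustrative, not part of any SDK.

```python
import json
from itertools import count

_request_ids = count(1)  # JSON-RPC requests carry an id so responses can be matched


def make_tool_call(name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }
    return json.dumps(message)


if __name__ == "__main__":
    print(make_tool_call("get_weather", {"city": "Delhi"}))
```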

---

Key Conceptual Difference

| Aspect | API | MCP |
|--------------------|----------------------------------|-----------------------------------|
| Audience | Human developers | AI models |
| Caller Trust | Trusted | Untrusted |
| Execution Model | Direct endpoint calls | Controlled tool invocation |
| Knowledge Required | HTTP, tokens, request formatting | Tool name + structured parameters |

---

Discovery and Schema

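An MCP client can ask a server which tools it offers (the `tools/list` request) and pass the results on to the model.

Example MCP tool catalog (a `tools/list` result):

```json
{
  "tools": [
    {
      "name": "get_weather",
      "description": "Get weather for a city",
      "inputSchema": {
        "type": "object",
        "properties": {
          "city": {"type": "string"}
        },
        "required": ["city"]
      }
    }
  ]
}
```

From these names, descriptions, and input schemas, the model can work out how to call each tool, with no separate human-oriented documentation required.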
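On the server side, answering discovery and invocation requests comes down to a small dispatch step. The sketch below handles already-parsed JSON-RPC 2.0 messages for `tools/list` and `tools/call`, using result shapes from the MCP specification: a `tools` array for discovery, `content` plus `isError` for a call, and a JSON-RPC error for an unknown tool. It omits the initialization handshake, transports, and capability negotiation that a real server or SDK handles; `handle_request` and the stubbed weather tool are hypothetical.

```python
import json
from typing import Any

# Catalog entry for the get_weather tool, using MCP's `inputSchema` field.
TOOLS = [
    {
        "name": "get_weather",
        "description": "Get weather for a city",
        "inputSchema": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    }
]


def get_weather(city: str) -> dict:
    """Hypothetical stub; a real tool would call a weather API here."""
    return {"city": city, "condition": "sunny"}


IMPLEMENTATIONS = {"get_weather": get_weather}


def handle_request(request: dict[str, Any]) -> dict[str, Any]:
    """Answer a parsed JSON-RPC 2.0 request for tools/list or tools/call."""
    request_id = request.get("id")
    method = request.get("method")
    if method == "tools/list":
        return {"jsonrpc": "2.0", "id": request_id, "result": {"tools": TOOLS}}
    if method == "tools/call":
        params = request.get("params", {})
        tool = IMPLEMENTATIONS.get(params.get("name"))
        if tool is None:
            # An unknown tool name is a protocol error (-32602, "Invalid params").
            return {"jsonrpc": "2.0", "id": request_id,
                    "error": {"code": -32602, "message": f"Unknown tool: {params.get('name')}"}}
        try:
            output = tool(**params.get("arguments", {}))
        except Exception as exc:
            # Failures while running the tool go back in the result with isError set,
            # so the model can see what went wrong.
            return {"jsonrpc": "2.0", "id": request_id,
                    "result": {"content": [{"type": "text", "text": str(exc)}], "isError": True}}
        return {"jsonrpc": "2.0", "id": request_id,
                "result": {"content": [{"type": "text", "text": json.dumps(output)}], "isError": False}}
    # -32601 is the standard JSON-RPC "Method not found" error code.
    return {"jsonrpc": "2.0", "id": request_id, "error": {"code": -32601, "message": "Method not found"}}
```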

---

Security and Privacy

With MCP:

- Rules and limits can control behavior

- Dangerous input can be blocked before a tool runs

- Requests can be logged for auditing

- Execution can stay within a private network

APIs exposed publicly are more susceptible to:

- Secret leakage

- Endpoint misuse
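The safeguards in the first list can be layered around individual tools. The decorator below is a minimal sketch of three of them: it writes an audit log entry for every call, rejects inputs that fail a simple rule, and enforces a sliding-window rate limit. The `guarded` decorator, its parameters, and the `safe_city` rule are hypothetical; production servers would usually also rely on their framework's or infrastructure's own controls.

```python
import functools
import logging
import time
from collections import deque
from typing import Any, Callable

logging.basicConfig(level=logging.INFO)
audit_log = logging.getLogger("mcp.audit")


def guarded(max_calls: int, per_seconds: float, validate: Callable[..., bool]) -> Callable:
    """Wrap a tool with an audit log, an input check, and a sliding-window rate limit."""
    def decorator(func: Callable[..., Any]) -> Callable[..., Any]:
        recent_calls: deque = deque()  # timestamps of accepted calls

        @functools.wraps(func)
        def wrapper(**kwargs: Any) -> Any:  # keyword-only, since tool arguments arrive by name
            now = time.monotonic()
            # Forget calls that fell outside the time window.
            while recent_calls and now - recent_calls[0] > per_seconds:
                recent_calls.popleft()
            if len(recent_calls) >= max_calls:
                audit_log.warning("rate limit hit: %s %r", func.__name__, kwargs)
                raise RuntimeError("rate limit exceeded")
            if not validate(**kwargs):
                audit_log.warning("blocked input: %s %r", func.__name__, kwargs)
                raise ValueError("input rejected by policy")
            recent_calls.append(now)
            audit_log.info("call: %s %r", func.__name__, kwargs)
            return func(**kwargs)
        return wrapper
    return decorator


def safe_city(city: str) -> bool:
    """Example rule: short names made only of letters, spaces, and hyphens."""
    return 0 < len(city) <= 64 and all(ch.isalpha() or ch in " -" for ch in city)


@guarded(max_calls=2, per_seconds=60, validate=safe_city)
def get_weather(city: str) -> dict:
    """Hypothetical stub standing in for a real weather tool."""
    return {"city": city, "condition": "sunny"}
```

For example, `get_weather(city="Delhi")` succeeds and is logged, while `get_weather(city="Delhi; rm -rf /")` raises `ValueError` before the tool body runs.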

---

The Future of MCP

MCP was introduced by Anthropic, and other major AI companies, including OpenAI, have since adopted it.

Benefits:

- Any MCP-compatible client, and the model behind it, can use your tools

- Standardized across different LLM vendors

- Future-proof integration as AI tooling evolves

---

Conclusion

APIs vs MCP in summary:

- APIs connect machines (trusted developers → systems)

- MCP connects intelligence to machines (AI models → systems, safely)

MCP is not a replacement for APIs — it builds on top of them, adding:

- Structure

- Control

- Safety

By connecting MCP to multi-platform content ecosystems like AiToEarn, creators can generate, publish, and monetize AI-driven content across numerous networks in a single workflow, without exposing secrets to the model and while maintaining compliance.

---

Final Thought:

As AI workflows expand, MCP gives models a controlled way to act in the real world, while APIs continue powering the underlying data connections.

The combination unlocks secure AI automation and broad content distribution opportunities.