Meituan’s New Standalone App — You Can’t Order Food, Only AI

Meituan’s “Food Delivery Speed” Approach to AI Models

Meituan appears to have adopted a “fast and stable” mantra for AI development: ship new models rapidly while keeping performance high. It has launched several models over the past two months, and the pace shows no sign of slowing.

---

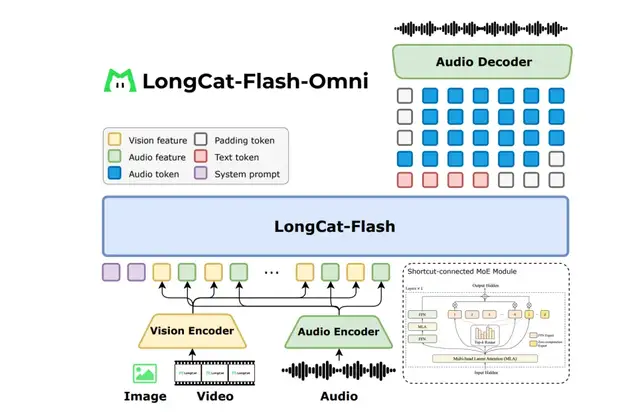

Introducing LongCat‑Flash‑Omni

The newest release, LongCat‑Flash‑Omni, is an omni‑modal model (“Omni” meaning it covers all modalities) that accepts text, image, audio, and video input.

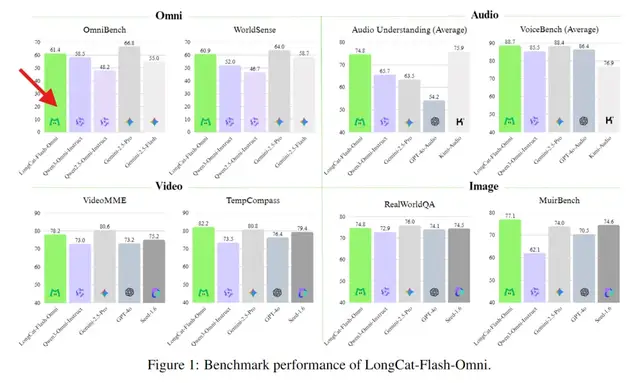

Benchmark Achievements

- On comprehensive multimodal benchmarks (Omni‑Bench, WorldSense): outperforms Qwen3‑Omni and Gemini‑2.5‑Flash.

- Performs comparably to the closed‑source Gemini‑2.5‑Pro.

- Ranks among the top models on each individual modality as well.

---

Speed: The Flash Advantage

Inherited from the LongCat‑Flash series:

- 560B total parameters, only 27B active (MoE architecture).

- Large knowledge base + efficient inference.

Per Meituan, this combination makes it the first open‑source model capable of real‑time, all‑modality interaction at the scale of today's mainstream flagship models.
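The gap between 560B total and about 27B active parameters comes from mixture‑of‑experts routing: a small router picks a few experts for each token, and only those experts run. The Python sketch below shows generic top‑k routing with a softmax router and toy experts. It is an illustration under those assumptions, not Meituan's ScMoE implementation, and names such as `moe_forward` and `gate_weights` are hypothetical.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: Vector,
    gate_weights: Sequence[Vector],
    experts: Sequence[Expert],
    k: int = 2,
) -> Tuple[Vector, List[int]]:
    """Route token vector `x` to its top-k experts and mix their outputs."""
    # Router: one logit per expert (dot product with that expert's gate vector).
    logits = [sum(w * xi for w, xi in zip(gate, x)) for gate in gate_weights]
    probs = softmax(logits)
    # Keep only the k highest-probability experts; the rest are never evaluated.
    chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in chosen)
    out = [0.0] * len(x)
    for i in chosen:
        weight = probs[i] / norm  # renormalise over the selected experts
        y = experts[i](x)
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out, chosen


if __name__ == "__main__":
    # Four toy experts that just scale the input by 1, 2, 3 and 4.
    experts = [lambda v, s=s: [s * vi for vi in v] for s in (1.0, 2.0, 3.0, 4.0)]
    gates = [[0.0, 0.0], [5.0, 0.0], [1.0, 0.0], [3.0, 0.0]]
    y, used = moe_forward([1.0, 0.0], gates, experts, k=2)
    print("experts used:", used)  # [1, 3]
    print(f"active share of LongCat-Flash weights: {27 / 560:.1%}")  # 4.8%
```

Here only two of the four toy experts run for the token. Scaled up, the same idea is why a 560B‑parameter model can serve each token with roughly 27B parameters (about 4.8% of the total) doing the work.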

---

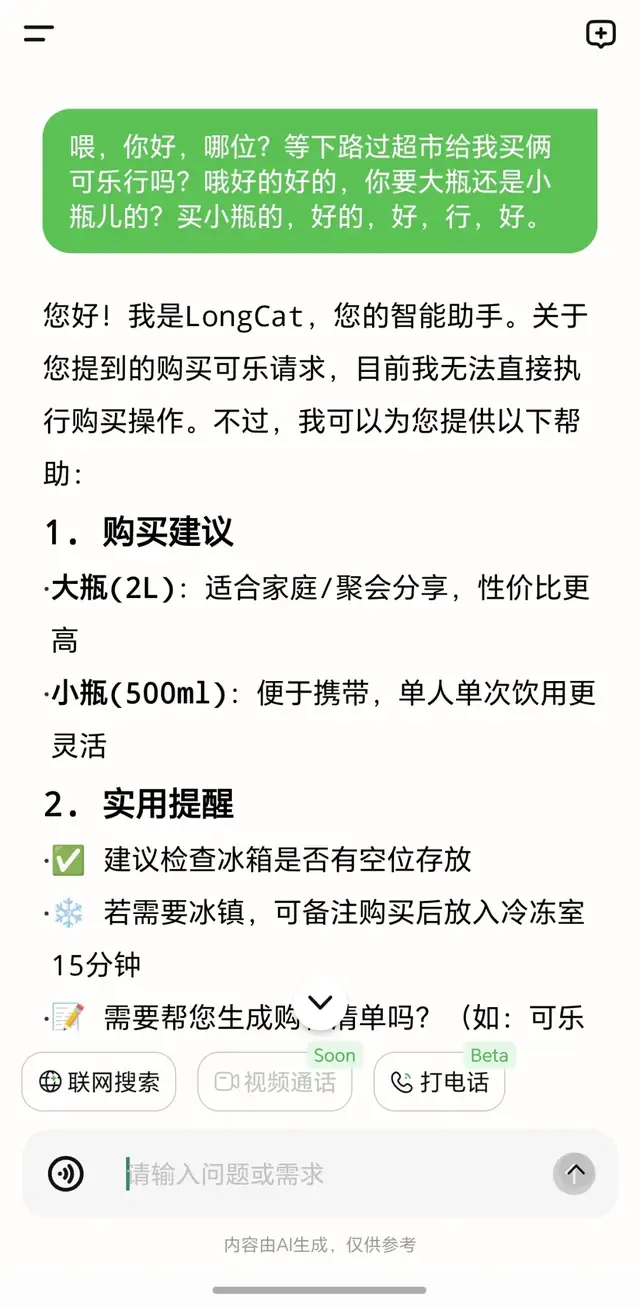

Public Access

- Available now in the LongCat app and on the web, free to try.

- Supports text and voice input as well as voice calls; the web version also accepts image and file uploads.

---

Hands‑On Tests

“AI Counting Sheep”

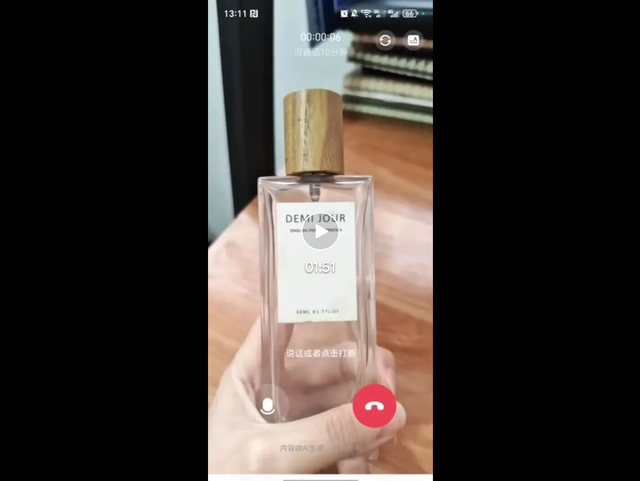

Video Call Beta

Tested with a perfume bottle: the model correctly recognized it on camera and answered questions about it.

Physics Simulation Prompt

> Show a ball bouncing inside a rotating hexagon, affected by gravity and friction, with realistic wall collisions.

Result: the model produced both the code and a visualization of the simulation.
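The model's code and animation are not reproduced here. To show what the prompt actually demands, below is a minimal headless Python sketch of the physics, written as an illustration and not taken from the model's output. It assumes semi‑implicit Euler integration, a hexagon of circumradius 1 spinning about its centre, a coefficient of restitution for bounces, and a simple tangential damping factor standing in for friction; `Ball`, `step`, and `simulate` are hypothetical names.

```python
import math
from dataclasses import dataclass


@dataclass
class Ball:
    x: float
    y: float
    vx: float
    vy: float
    radius: float = 0.1


def step(ball: Ball, angle: float, omega: float, *, circumradius: float = 1.0,
         dt: float = 1 / 240, g: float = 9.81,
         restitution: float = 0.8, friction: float = 0.1) -> None:
    """Advance `ball` by one time step inside a hexagon rotated by `angle` (rad)."""
    # Gravity, integrated with semi-implicit Euler.
    ball.vy -= g * dt
    ball.x += ball.vx * dt
    ball.y += ball.vy * dt

    apothem = circumradius * math.cos(math.pi / 6)  # centre-to-edge distance
    for k in range(6):
        # Outward unit normal of edge k (vertices sit at angle + k*60 degrees).
        phi = angle + k * math.pi / 3 + math.pi / 6
        nx, ny = math.cos(phi), math.sin(phi)
        gap = apothem - (ball.x * nx + ball.y * ny)  # ball centre to edge line
        if gap >= ball.radius:
            continue  # not touching this edge
        # Resolve penetration by pushing the ball back along the inward normal.
        depth = ball.radius - gap
        ball.x -= depth * nx
        ball.y -= depth * ny
        # Velocity of the rotating wall at the contact point (omega x r).
        cx, cy = ball.x + ball.radius * nx, ball.y + ball.radius * ny
        wx, wy = -omega * cy, omega * cx
        # Ball velocity relative to the wall, split into normal and tangential parts.
        rvx, rvy = ball.vx - wx, ball.vy - wy
        vn = rvx * nx + rvy * ny
        if vn <= 0:
            continue  # already moving away from the wall
        tx, ty = rvx - vn * nx, rvy - vn * ny
        # Bounce: reverse and damp the normal part; friction damps the tangential part.
        ball.vx = wx - restitution * vn * nx + (1 - friction) * tx
        ball.vy = wy - restitution * vn * ny + (1 - friction) * ty


def simulate(seconds: float = 5.0, omega: float = 1.0, dt: float = 1 / 240) -> Ball:
    """Run the simulation headless (no rendering) and return the final ball state."""
    ball = Ball(x=0.0, y=0.0, vx=1.5, vy=0.0)
    angle = 0.0
    for _ in range(int(seconds / dt)):
        step(ball, angle, omega, dt=dt)
        angle += omega * dt
    return ball


if __name__ == "__main__":
    final = simulate()
    print(f"final position: ({final.x:.3f}, {final.y:.3f})")
```

The detail that makes the rotation matter is resolving each bounce in the wall's moving frame: the wall's velocity at the contact point is subtracted before reflecting and added back afterwards, so a spinning hexagon can fling the ball. A renderer would simply draw the hexagon and ball after each `step`.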

Meme Recognition

Answer: Duck + New Year’s Money (homophone pun)

Audio Noise Test

Passed: the model accurately picked out the speech despite the background noise.

---

Performance Summary

Fast + Stable:

- Responds quickly, even on complex multimodal tasks.

- Excels at deep reasoning, conversation, and recognition.

Lineage:

- Descendant of LongCat‑Flash‑Chat (speed‑optimized)

- Descendant of LongCat‑Flash‑Thinking (professional depth)

---

Meituan’s Iteration Logic

- Speed First: responsive, smooth interaction with the model.

- Specialization: deep reasoning, physics simulation, robust speech recognition.

- Full‑Modal Expansion: integrated text, audio, and vision, with image and video generation to come.

---

Related Model: LongCat‑Video

Generates long videos (around 5 minutes) with consistently stable output.

---

Technical Challenges in Multimodal AI

- Fusion Difficulty: each modality must be integrated carefully with the others.

- Offline vs. Streaming: offline and streaming inputs need different processing logic.

- Real‑Time Performance: latency and lag must be kept low.

- Training Efficiency: large volumes of multimodal data slow down training.

---

Architectural Innovations: ScMoE (Shortcut‑connected MoE)

Features:

- End‑to‑end unified architecture.

- Accepts text, audio, images, and video simultaneously.

---

Real‑Time Interaction Layer

- Segmented audio‑video feature interleaving: audio and video features are aligned and interleaved by time segment, keeping latency low for both speech and visuals.
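Segment‑wise interleaving can be illustrated with a small Python sketch. It assumes each audio and video feature carries a timestamp, groups both streams into fixed‑length time segments (1 second here, an arbitrary choice), and emits each segment's video features followed by its audio features. The segment length, the ordering within a segment, and the names `Feature` and `interleave_by_segment` are illustrative assumptions, not LongCat‑Flash‑Omni's actual settings.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Feature:
    modality: str  # "audio" or "video"
    t: float       # timestamp in seconds
    name: str      # stand-in for the actual feature vector


def interleave_by_segment(features: List[Feature], segment_s: float = 1.0) -> List[Feature]:
    """Group features into time segments and interleave the modalities per segment."""
    buckets: Dict[int, Dict[str, List[Feature]]] = defaultdict(
        lambda: {"video": [], "audio": []}
    )
    for f in features:
        buckets[int(f.t // segment_s)][f.modality].append(f)
    sequence: List[Feature] = []
    for seg in sorted(buckets):
        # Within a segment: visual context first, then the matching audio, each in time order.
        for modality in ("video", "audio"):
            sequence.extend(sorted(buckets[seg][modality], key=lambda f: f.t))
    return sequence


if __name__ == "__main__":
    stream = [
        Feature("audio", 0.2, "a0"), Feature("audio", 0.7, "a1"),
        Feature("video", 0.0, "v0"), Feature("audio", 1.3, "a2"),
        Feature("video", 1.0, "v1"),
    ]
    print([f.name for f in interleave_by_segment(stream)])  # ['v0', 'a0', 'a1', 'v1', 'a2']
```

Because each segment is self‑contained, a streaming system can encode and feed segment n while segment n+1 is still being captured, which is one way to keep end‑to‑end latency bounded.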

Training Strategy

- Progressive multimodal fusion: training starts with text, then adds audio, then visual input.

- Context window extended to 128K tokens, enough for more than 8 minutes of multimodal interaction.

- Modality‑Decoupled Parallel (MDP) training: the LLM and the modality encoders are optimized independently.
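The 128K‑token and 8‑minute figures together imply a rough per‑second token budget for multimodal input. The quick Python calculation below assumes “128K” means 128 × 1024 tokens and that the whole window is spent on the live audio‑video stream; both are simplifying assumptions, and the source does not give the split between audio and video tokens.

```python
CONTEXT_TOKENS = 128 * 1024  # assumption: "128K" = 131,072 tokens
SESSION_SECONDS = 8 * 60     # "more than 8 minutes" of interaction


def tokens_per_second(context_tokens: int, seconds: float) -> float:
    """Average token budget per second if the whole window is filled in `seconds`."""
    return context_tokens / seconds


def minutes_supported(context_tokens: int, rate_per_second: float) -> float:
    """How many minutes of input fit in the window at a given token rate."""
    return context_tokens / rate_per_second / 60


if __name__ == "__main__":
    budget = tokens_per_second(CONTEXT_TOKENS, SESSION_SECONDS)
    print(f"upper bound: {budget:.0f} tokens/s")  # 273
```

In other words, to sustain more than 8 minutes, the audio and video encoders together must average fewer than about 273 tokens per second (about 267 if 128K means 128,000), which is one reason compact per‑segment features matter for real‑time use.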

---

Achievements

LongCat‑Flash‑Omni combines:

- Full‑modality coverage

- Efficient inference

- A real‑time experience comparable to closed‑source models, while being open source.

---

Meituan’s Broader Strategy

Two‑Pronged Approach:

- Software — building a “world model”.

- Hardware — accelerating embodied intelligence deployment.

Timeline Highlights

- 2018–2020 — investments in local life services & consumer brands.

- 2021 — shift to “Retail + Technology”, growth in robotics/autonomous driving.

- 2022 onward — autonomous driving, AI chips, embodied robotics.

---

Embodied Intelligence Vision

Emphasis on Autonomy:

- Drones, autonomous vehicles, indoor delivery robots.

- Nationwide approval for drone operations, including nighttime flights.

Principle: retail is the scenario; technology is the enabler.

---

Future Direction

Connecting bits to atoms: pairing AI “brains” with robotic bodies to merge the digital and physical worlds.

---

Try LongCat‑Flash‑Omni

- LongCat Chat: https://longcat.ai

- Hugging Face: https://huggingface.co/meituan-longcat/LongCat-Flash-Omni

- GitHub: https://github.com/meituan-longcat/LongCat-Flash-Omni

---

Bottom Line: LongCat‑Flash‑Omni is a fast, stable, fully multimodal open‑source model. It showcases Meituan’s distinctive AI strategy of speed first, then specialization, then full‑modality coverage, and hints at a larger ambition: seamlessly connecting the virtual and physical worlds.