Musk Challenges Karpathy to a Duel with Grok 5; Karpathy: Don’t Mythologize LLMs, AGI Is Still a Decade Away

📰 New Intelligence Report

Editor: KingHZ

---

1. Introduction — Karpathy on AGI’s Realistic Timeline

Key takeaway: AGI (Artificial General Intelligence) is not arriving tomorrow, but it’s not a mirage either.

Andrej Karpathy — founding member of OpenAI and former Tesla Director of AI — believes:

> The road to AGI has appeared, but it’s filled with obstacles. Estimated timeline: ~10 years.

Challenges he outlined:

- Sparse reinforcement learning (RL) signals and the limitations of alternatives

- Model collapse, which keeps LLMs from learning the way humans do (for example, by reflecting on and learning from their own outputs)

- Integration challenges: lack of environments, evaluation methods, and real-world system cohesion

- Safety concerns: security, data poisoning, and jailbreaking risks

- Historical context: AGI’s impact may blend into the roughly 2% annual GDP growth trend of the past ~250 years

- Lessons from autonomous driving's slow progress
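A steady ~2% a year is less modest than it sounds, because it compounds. The back-of-the-envelope sketch below assumes an idealized, perfectly constant 2% annual rate (real historical growth has varied) and computes the total growth multiple over 250 years and the doubling time:

```python
import math

def compound_factor(rate: float, years: int) -> float:
    """Total growth multiple after `years` at a constant annual `rate`."""
    return (1 + rate) ** years

def doubling_time(rate: float) -> float:
    """Years needed to double at a constant annual `rate`."""
    return math.log(2) / math.log(1 + rate)

# Idealized assumption: a flat 2% per year for 250 years.
factor = compound_factor(0.02, 250)
years_to_double = doubling_time(0.02)
print(f"250 years at 2%: about {factor:.0f}x")
print(f"Doubling time at 2%: about {years_to_double:.1f} years")
```

On this view, AGI would show up as a continuation of this exponential rather than as a visible kink in it.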

---

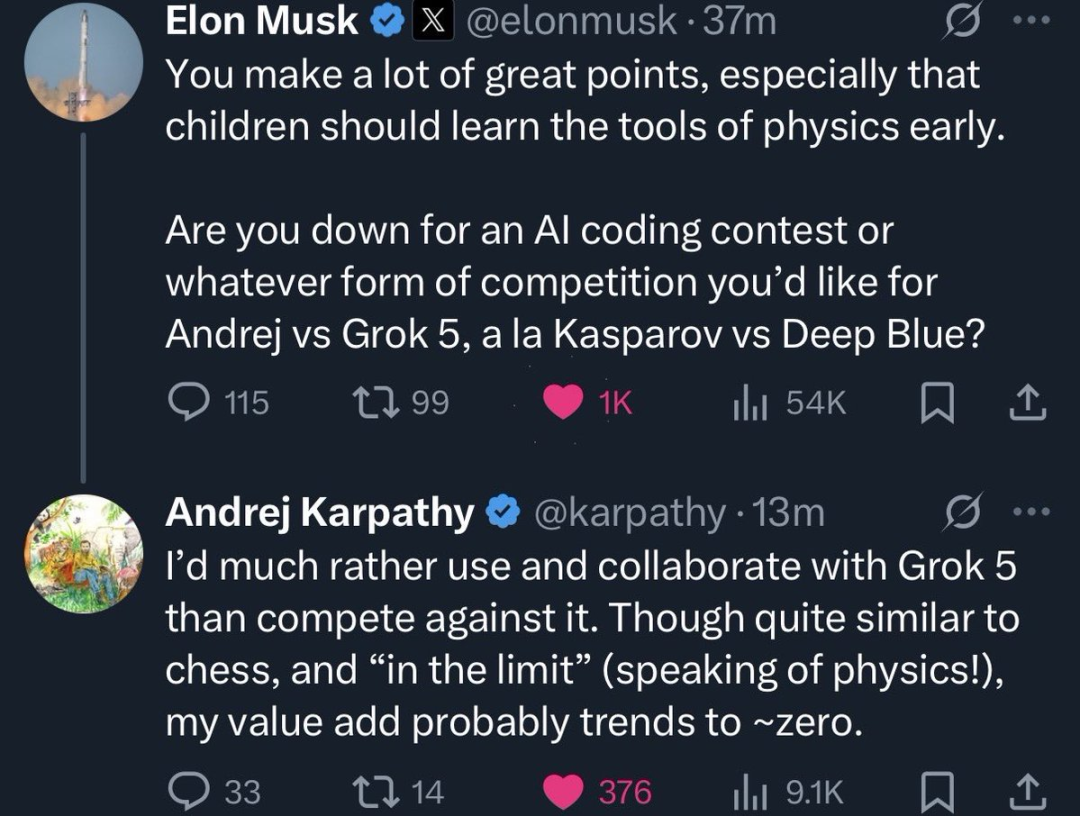

2. Musk vs. Karpathy — The Grok 5 Challenge

- Musk acknowledged that Karpathy made valid points, then publicly challenged him to a coding face-off against Grok 5, reminiscent of Kasparov vs. Deep Blue.

- Karpathy declined:

  > “I’d rather work with Grok 5 than compete against it.”

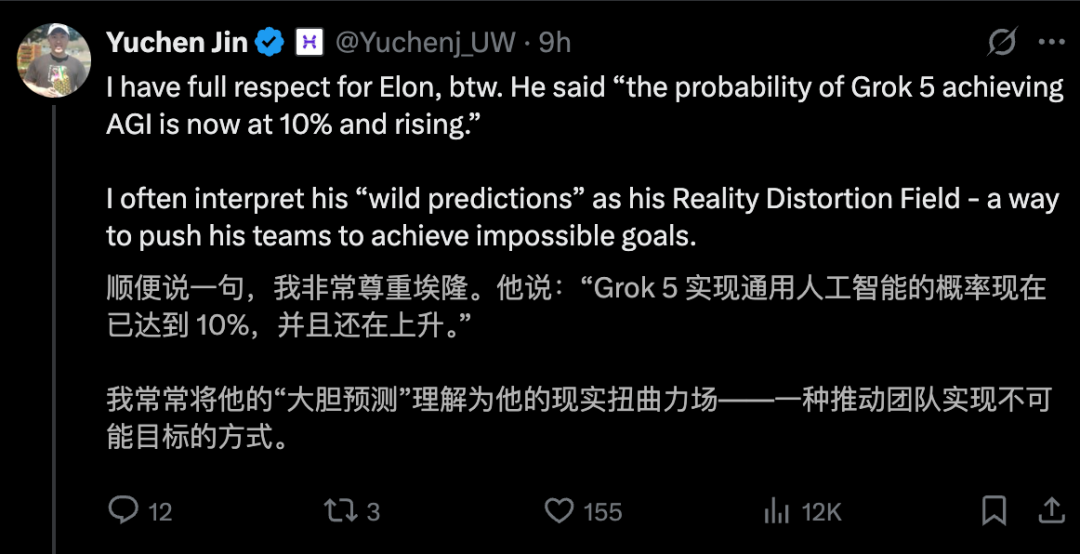

- Musk himself puts Grok 5’s chance of reaching AGI at only 10%, yet he still wanted the duel.

- Possible motive? Founder Yuchen Jin suggests:

  > Musk is using his “reality distortion field” to push xAI toward impossible goals.

---

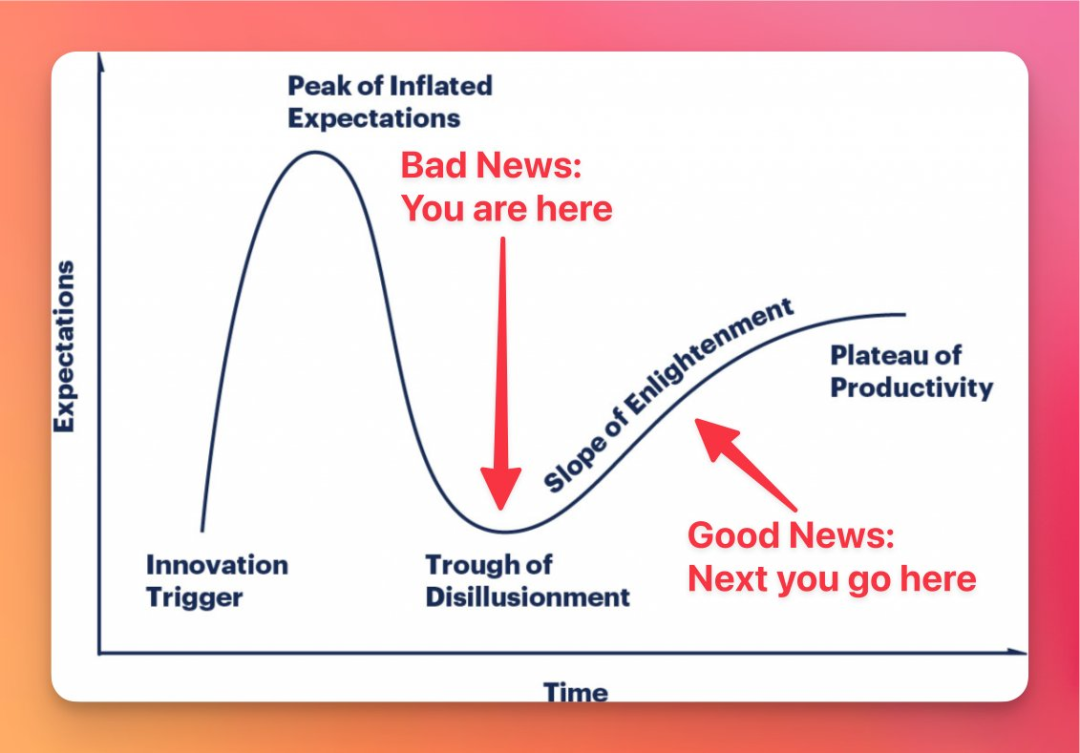

3. The “Trough of Disillusionment”

Dan Mac points out that Karpathy sees LLM hype entering the trough of disillusionment, a realistic stance that puts improving tools ahead of hype battles.

Next comes the slope of enlightenment: slow, steady gains leading toward a still-distant plateau of productivity.

---

4. Karpathy’s Self-Reflection

Karpathy later looked back on his appearance on Dwarkesh Patel’s podcast, noting:

- Some points came out rushed (“mouth quicker than mind”)

- Nervousness about drifting into tangents led him to cut some threads short and oversimplify complex themes

---

5. AGI in a Decade — Optimism vs. Hype

Points of Agreement:

- LLMs have advanced tremendously

- Still far from the point where you would hire an LLM over a human for an arbitrary job. Work remains across:

  - Grunt work

  - Complex system integration

  - Sensors and actuators for real-world perception and action

  - Large-scale collaboration

  - Safeguards and reliability

Verdict: 10 years is actually a very bullish timeline for AGI; it only looks pessimistic next to the current hype.

---

6. Artificial Ghost Intelligence

Karpathy questions a popular framing: could a single simple algorithm, dropped into the real world, learn everything from scratch?

- Animals aren’t like this — they start with evolved, pre-loaded intelligence.

- LLMs, however, are “pre-loaded” differently — via token prediction over huge internet datasets.

- Their intelligence is ghost-like — distinct from biological minds.

---

7. Reinforcement Learning Limitations

Issues Karpathy highlights:

- High noise in RL signals

- Poor signal-to-compute ratio

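- Accidental “right answers” reinforced wholesale: every step of a rollout that happens to reach the correct answer gets upweighted, wrong turns included

- Models gaming LLM-based judges with nonsense outputs (e.g., “da da da da”)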
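Why do accidental right answers get reinforced? In outcome-based RL, a single reward at the end of a rollout is credited to every step that produced it. The toy sketch below assumes a REINFORCE-style update with a constant baseline; the `Step` type, step labels, and numbers are hypothetical, chosen only to illustrate the effect:

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Step:
    text: str
    is_correct: bool  # ground truth for the reader; the learner never sees it

def outcome_advantages(trajectory: List[Step], final_reward: float, baseline: float) -> List[float]:
    """Outcome-only credit assignment: every step gets the same advantage."""
    advantage = final_reward - baseline
    return [advantage] * len(trajectory)

# Hypothetical rollout: two sign errors cancel out, so the final answer is right.
rollout = [
    Step("set up the equation", True),
    Step("drop a minus sign", False),
    Step("drop another minus sign", False),
    Step("report the final answer", True),
]
advantages = outcome_advantages(rollout, final_reward=1.0, baseline=0.5)

for step, adv in zip(rollout, advantages):
    tag = "ok " if step.is_correct else "BAD"
    print(f"{adv:+.1f} [{tag}] {step.text}")

# Count wrong steps that nonetheless received a positive update.
wrong_but_upweighted = sum(1 for s, a in zip(rollout, advantages) if not s.is_correct and a > 0)
print("wrong steps reinforced:", wrong_but_upweighted)
```

This is the “sucking supervision through a straw” problem: one scalar reward has to supervise a long sequence of steps, which is also why the signal-to-compute ratio is poor.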

Position: RL will continue producing results, but isn’t the full solution.

Karpathy is bullish on agentic interaction and environments as training/evaluation tools — but stresses the need for large, diverse, high-quality environment sets.

---

8. Emerging Learning Paradigms

System Prompt Learning:

- LLMs write much of their own system prompt, like a self-authored manual of problem-solving notes

- Similar in setup to RL, but the learning step is editing that text rather than running gradient descent on weights

- Early forms already seen — e.g., ChatGPT’s memory features
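The core loop of system prompt learning can be sketched as “attempt, reflect, edit the notes.” This is a minimal illustration under stated assumptions, not Karpathy’s design: the `Edit` format, `apply_edit`, `learn_system_prompt`, and `toy_reflect` are all hypothetical names, and `toy_reflect` is a rule-based stand-in for asking an LLM to critique its own attempt:

```python
from typing import Callable, List, Optional, Tuple

Edit = Tuple[str, str]  # hypothetical edit format: ("add" or "delete", note text)

def apply_edit(notes: List[str], edit: Edit) -> List[str]:
    """Apply one text edit to the prompt notes; no gradients involved."""
    op, text = edit
    if op == "add" and text not in notes:
        return notes + [text]
    if op == "delete":
        return [n for n in notes if n != text]
    return notes  # duplicate add or unknown op: leave notes unchanged

Reflector = Callable[[str, bool, List[str]], Optional[Edit]]

def learn_system_prompt(notes: List[str], episodes: List[Tuple[str, bool]], reflect: Reflector) -> List[str]:
    """After each episode, let the model propose an edit to its own notes."""
    for task, succeeded in episodes:
        edit = reflect(task, succeeded, notes)
        if edit is not None:
            notes = apply_edit(notes, edit)
    return notes

def toy_reflect(task: str, succeeded: bool, notes: List[str]) -> Optional[Edit]:
    """Hypothetical stand-in for prompting an LLM to critique its attempt."""
    if not succeeded:
        return ("add", f"Double-check {task} before answering.")
    return None

episodes = [("unit conversions", False), ("arithmetic", True), ("unit conversions", False)]
notes = learn_system_prompt(["Be concise."], episodes, toy_reflect)
system_prompt = "\n".join(notes)
print(system_prompt)
```

The design point is that the “parameters” being learned are human-readable text, so every update can be inspected, reverted, or edited by hand.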

---

9. The Cognitive Core

Forecast: Future LLM cores will:

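- Run always-on and locally, by default, on every device

- Support multimodal input and output

- Use nested (Matryoshka-style) architectures so capability can be dialed up or down at test time

- Include on-device LoRA fine-tuning for personalization

- Shrink from big to small as architectures mature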

Learning takeaway: Limiting memorization (sacrificing encyclopedic knowledge) can force better generalization.
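On-device LoRA is plausible because the adapter is tiny compared with the frozen weights. The sketch below follows the standard LoRA formulation, where a frozen weight `W` is augmented by a low-rank update `B A` scaled by `alpha / r`; the 4096 x 4096 layer size and rank 8 are illustrative assumptions, not figures from Karpathy:

```python
from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]

def lora_param_counts(d_out: int, d_in: int, rank: int) -> Tuple[int, int]:
    """Trainable parameters: full fine-tune of W vs. a rank-r LoRA adapter."""
    full = d_out * d_in             # every entry of W
    lora = rank * (d_out + d_in)    # B is d_out x r, A is r x d_in
    return full, lora

def matvec(m: Matrix, x: Vector) -> Vector:
    return [sum(w * xi for w, xi in zip(row, x)) for row in m]

def lora_forward(w: Matrix, a: Matrix, b: Matrix, x: Vector, alpha: float, rank: int) -> Vector:
    """y = W x + (alpha / r) * B (A x); W stays frozen, only A and B are trained."""
    base = matvec(w, x)
    delta = matvec(b, matvec(a, x))
    scale = alpha / rank
    return [y0 + scale * dy for y0, dy in zip(base, delta)]

# Illustrative size: one 4096 x 4096 projection with a rank-8 adapter.
full, lora = lora_param_counts(4096, 4096, 8)
print(f"full: {full:,}  lora: {lora:,}  ratio: {full // lora}x")

# Tiny numeric check with a rank-1 adapter on a 2 x 2 identity weight.
y = lora_forward(
    w=[[1.0, 0.0], [0.0, 1.0]],
    a=[[1.0, 1.0]],        # r x d_in = 1 x 2
    b=[[0.5], [0.0]],      # d_out x r = 2 x 1
    x=[2.0, 3.0],
    alpha=1.0,
    rank=1,
)
print(y)
```

At these assumed sizes the adapter trains 256 times fewer parameters than the full matrix, which is what makes per-user fine-tuning on a phone or laptop conceivable.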

---

10. LLM Agents — Balanced Collaboration

Karpathy’s preferred principles:

- The model explains what it is coding and why

- It cites the APIs and standards it relies on

- It asks when uncertain

- Keep iteration manageable and reviewable

Danger: “Genius AI intern” syndrome — confident but sloppy code, bloated repositories, bigger attack surfaces.

---

11. Work Automation & Physics Education

Factors in automation adoption:

- Standardized I/O

- Manageable error costs

- Clear verification processes

- Frequent, repeated decision cycles

Radiology example: AI works as a second reader alongside the radiologist, not as a primary replacement.

Karpathy also advocates teaching physics earlier, treating it as the core “OS install” for analytical thinking.

---


Suggested Next Steps for Readers

- Track agentic AI and environment-based learning research

- Compare timelines from optimists vs. skeptics

