Nano Banana Is Finally Literate, but I Might Become “Stupid”

Nano Banana 2: The AI Image Model That Thinks

Over the past weekend, users stress-tested Gemini 3 Pro Image in increasingly creative ways. You may know it by its other name: Nano Banana 2.

A name that sounds like a joke, yet somehow stuck.

Nano Banana 2 has shown exceptional capability across multiple domains, enough to win a nod from “friendly rival” Sam Altman.

▲ Image source: The Information

---

Why Nano Banana 2 Matters

The second generation of Nano Banana marks a turning point in AI image generation:

- From probability-based “analogy” to understanding-based “logical construction”.

- AI now engages not just your eyes, but also your intellect.

---

AI Image Models Are No Longer “Illiterate”

AI-generated images have faced a notorious, long-standing flaw: artistic brilliance coupled with chaotic text. This issue dates back to the Midjourney era — improved over time, but never fully solved.

Why Text Was Always Wrong

One of the clearest giveaways that an image was AI-generated? Look at its text.

Diffusion models treat text as a texture rather than a symbol — resulting in broken letters, misspellings, and nonsense.

---

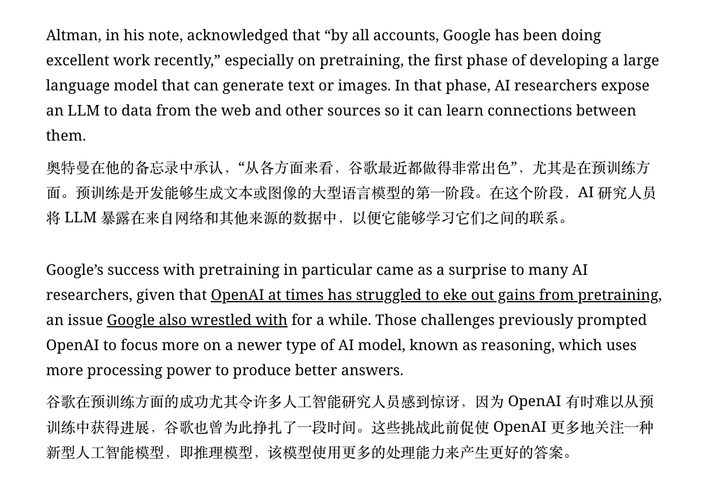

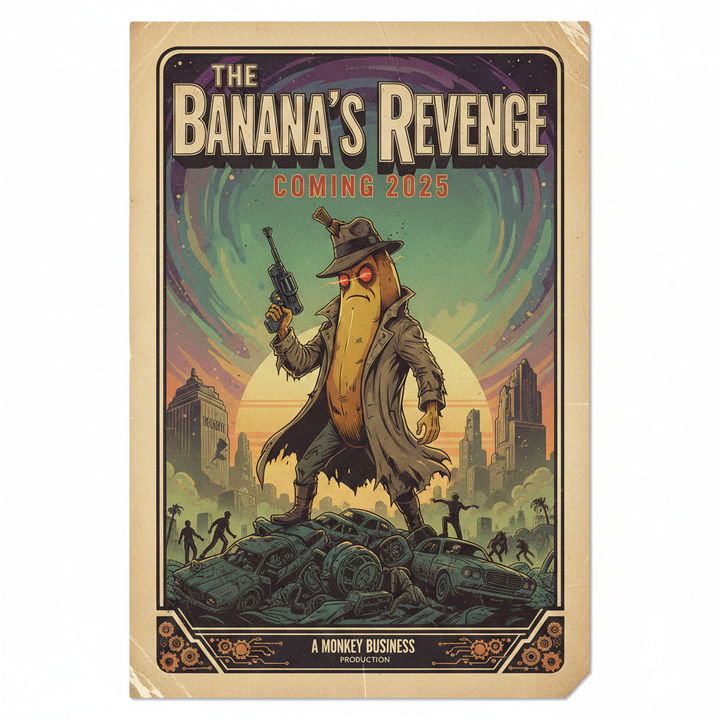

Breakthrough: Accurate Text Rendering

Nano Banana 2 changes the game with Text Rendering — the ability to recognize and accurately produce text in visual compositions.

Example Prompt:

> Generate a retro movie poster titled “Banana’s Revenge,” with the subtitle “Releasing in 2025” in red serif font.
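A prompt like this can be assembled from structured fields, which makes it easier to keep the exact strings to render separate from the styling instructions. The following is a minimal sketch only: `PosterSpec` and `build_prompt` are hypothetical names introduced for illustration and are not part of any Gemini API.

```python
from dataclasses import dataclass


@dataclass
class PosterSpec:
    """Hypothetical container for the parts of a poster prompt."""
    style: str           # overall look, e.g. "retro"
    title: str           # text that must appear verbatim as the title
    subtitle: str        # text that must appear verbatim as the subtitle
    subtitle_style: str  # typography hint for the subtitle


def build_prompt(spec: PosterSpec) -> str:
    # Quote the literal strings so it is unambiguous which characters
    # should be rendered exactly as written.
    return (
        f'Generate a {spec.style} movie poster titled "{spec.title}," '
        f'with the subtitle "{spec.subtitle}" in {spec.subtitle_style}.'
    )


spec = PosterSpec(
    style="retro",
    title="Banana's Revenge",
    subtitle="Releasing in 2025",
    subtitle_style="red serif font",
)
print(build_prompt(spec))
```

The resulting string can then be passed to whichever image model or client you use.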

Before vs After

- Before: Main title often passable; smaller captions fell apart; misspellings like “BANNANA” were common.

- Now: Text appears accurate, clear, and beautifully typeset within the image.
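One way to check this kind of improvement yourself is to compare the text you asked for with the text that actually appears in the image. The sketch below assumes you already have the rendered text as a string, for example from an OCR pass, which is not shown here. The helper names `text_accuracy` and `misspelled` are hypothetical, and the similarity score comes from Python's standard `difflib`, not from anything the model reports.

```python
from difflib import SequenceMatcher


def _normalize(text: str) -> str:
    # Ignore case and extra whitespace so only spelling differences count.
    return " ".join(text.upper().split())


def text_accuracy(expected: str, rendered: str) -> float:
    """Similarity between requested and rendered text, from 0.0 to 1.0."""
    return SequenceMatcher(None, _normalize(expected), _normalize(rendered)).ratio()


def misspelled(expected: str, rendered: str) -> bool:
    """True when the rendered text does not match the requested text."""
    return _normalize(expected) != _normalize(rendered)


# `rendered` stands in for text read back from a generated image (assumed input).
print(misspelled("Banana", "BANNANA"))  # True: the extra "N" is caught
print(misspelled("Banana", " banana "))  # False: only case and spacing differ
print(round(text_accuracy("Banana", "BANNANA"), 2))
```

A score below 1.0 flags captions worth a manual look, which matters most for the small text that older models tended to garble.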

---

Why This Matters

For everyday users:

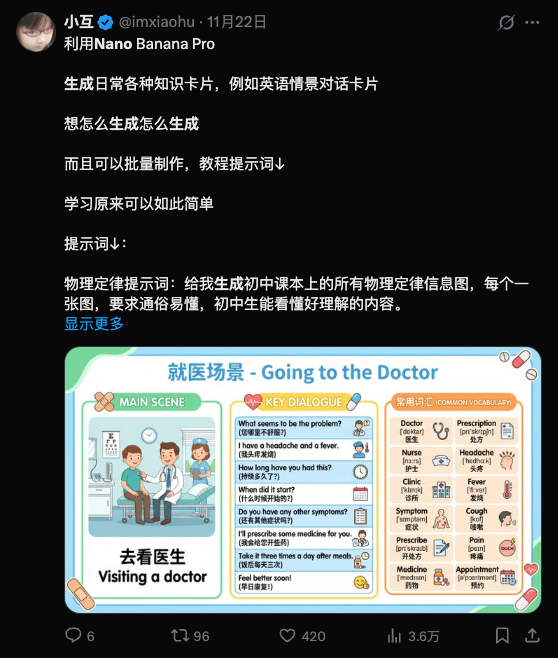

- “Meme freedom” — generate perfectly captioned, sarcastic images without manually adding text boxes.

For businesses:

- AI image generation has crossed from the “material stage” into the “deliverable stage.”

- Accurate text enables:

  - E-commerce product posters

  - Presentation illustrations

  - Data charts and infographics

  - Designs where text matches perspective and layout

▲ Image source: X user @chumsdock

This is the “last mile” in commercial-ready AI visuals — enabling fully packaged deliverables.

---

From Guesswork to Understanding

Text rendering is just the surface-level proof of Nano Banana 2’s deeper technical leap:

It’s moved from statistical guesswork to reasoning-driven image generation.

Traditional Models:

> “A cat sitting on a glass table” → Output based on statistical pixel patterns learned from millions of cat images.

Nano Banana 2:

- Leverages reasoning from the Gemini 3 language model.

- Builds an implicit physical model before generating.

- Understands shadows, light refraction through glass, and object relationships.

---

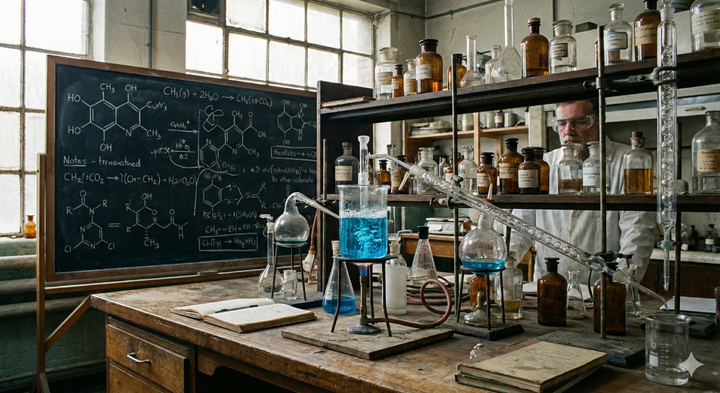

Example — Chemistry Lab Prompt

> “Generate a complex chemistry lab, with beakers containing blue liquid on the table, and molecular formulas on the blackboard in the background.”

Key improvements:

- Correct meniscus in the beaker

- Realistic glass refraction

- Structured — not random — chemical formulas (minor flaws remain)

---

The Thinking Canvas

Text Rendering is the visible result. Reasoning is the hidden engine. Together, they make Nano Banana 2 a Thinking Canvas.

Google is positioning the model for more than “pretty pictures.” It now targets:

- Infographics

- Teaching materials

- Explanatory graphics

- Complex, information-rich visuals

---

Probabilistic Guesswork → Causal Inference

Previously:

- Users supplied about 20% of the information, and the AI filled in the remaining 80% more or less at random.

Now:

- The AI applies causal logic, encoding the process behind a scene as well as its outcome.

- Enhances narrative and emotional depth in visuals.

Example: Even mechanical diagrams now show rivets and bolts in plausible places — a step toward logical visual correctness.

---

The Creative Double-Edged Sword

While perfection improves utility, it can also homogenize creativity: everything looks flawless, but risks losing the human imperfections that give design its character.

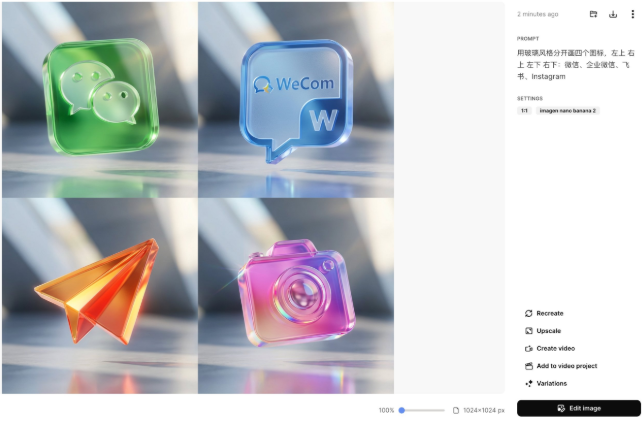

▲ Image source: X user @dotey

Risks Ahead

- Truth distortion: Mass-producible, logical visuals make “pleasing the intellect” dangerously easy — and potentially hollow.

- Deepfake challenges: Google uses SynthID watermarking, yet visual impact often outweighs such countermeasures.

From now on, every pixel you see could be machine-generated — thrilling and, at times, chilling.

---

Platforms for Adaptation: AiToEarn

In a rapidly evolving content world, platforms like AiToEarn help creators adapt by:

- Generating AI content

- Publishing across multiple platforms simultaneously (Douyin, Kwai, WeChat, Bilibili, Rednote/Xiaohongshu, Facebook, Instagram, LinkedIn, Threads, YouTube, Pinterest, X/Twitter)

- Providing analytics and model rankings

- Monetizing creative output

AiToEarn allows creators to maintain individuality and value amid automation — turning AI-generated deliverables into sustainable income streams.

---

In summary:

Nano Banana 2 isn’t just a new image model. It’s the start of reasoning-driven visual creation, where design perfection meets intellectual understanding — bringing both opportunity and challenge to creators, brands, and society alike.