Olmo 3 is a Fully Open LLM

Overview of Olmo: Ai2’s Fully Open LLM Series

Olmo is a family of large language models (LLMs) created by the Allen Institute for AI (Ai2).

Unlike most open-weight models, Olmo releases include:

- Full training data

- Documented training process

- Intermediate checkpoints

- Model variants

These elements make Olmo particularly transparent and valuable for research, replication, and auditing.

---

Olmo 3 Highlights

The latest release, Olmo 3, features:

- Olmo 3‑Think (32B) — billed as “the best fully open 32B-scale thinking model”

- Emphasis on interpretability, with intermediate reasoning traces

- Multiple model sizes and variants:

  - 7B: Base, Instruct, Think, RL Zero

  - 32B: Base, Think

> "...lets you inspect intermediate reasoning traces and connect those behaviors back to the data and training decisions that produced them."

---

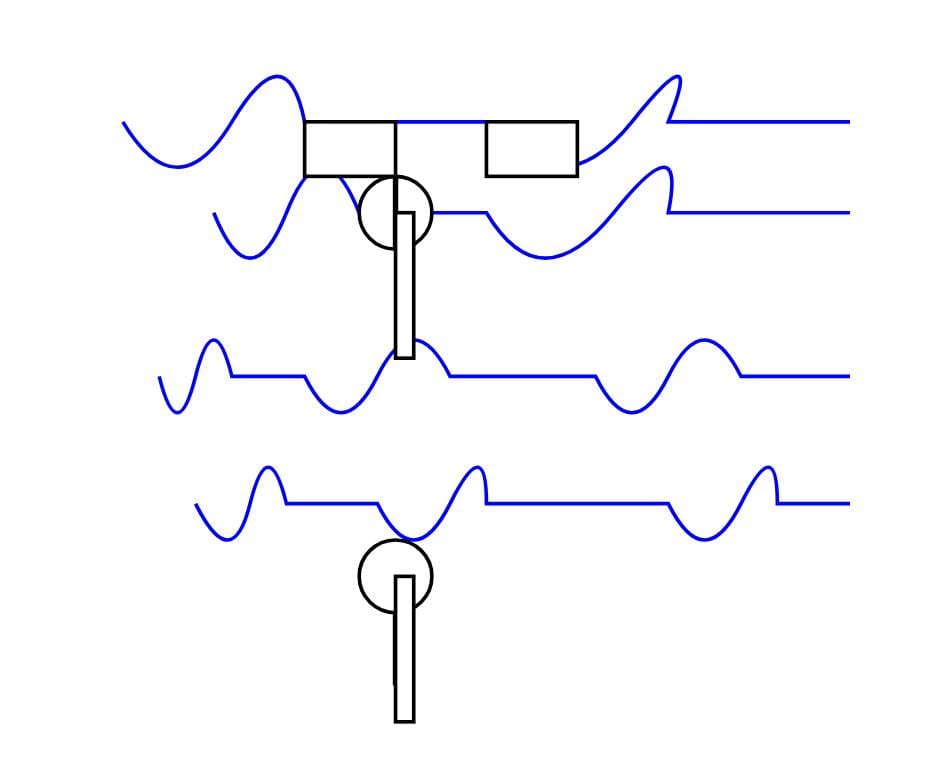

Training Data: Dolma 3

Olmo 3 is pretrained on Dolma 3, a ~9.3 trillion‑token dataset including:

- Web pages

- Scientific PDFs via olmOCR

- Codebases

- Math problems & solutions

- Encyclopedic text

Additional refinements:

- Dolma 3 Mix (~6T tokens)

- Higher proportion of coding and math data than earlier Dolma

- Stronger decontamination through deduplication, quality filtering, and controlled data mixing

- No collection from paywalled content or from sites that explicitly disallow it

Ai2 also emphasizes that Olmo models are trained on fewer total tokens than competitors, focusing on data quality, selection, and interpretability.
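
To make the deduplication step concrete, here is a minimal sketch of exact-match deduplication over normalized text. It is an illustration only, not Ai2's pipeline: the `normalize` and `deduplicate` helpers and the sample documents are assumptions, and production pipelines typically add fuzzy (near-duplicate) matching on top of this.

```python
import hashlib
import re


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variants hash identically."""
    return re.sub(r"\s+", " ", text.strip().lower())


def deduplicate(docs: list[str]) -> list[str]:
    """Keep the first occurrence of each document, comparing normalized hashes."""
    seen: set[str] = set()
    unique: list[str] = []
    for doc in docs:
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(doc)
    return unique


# Hypothetical sample corpus: the second entry differs only in case and spacing.
docs = [
    "Pelicans are large water birds.",
    "pelicans  are large water birds.",
    "Bicycles have two wheels.",
]
print(deduplicate(docs))
```

Hashing the normalized text rather than the raw text is a design choice: it catches copies that differ only in casing or whitespace while keeping memory use to one digest per unique document.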

---


Hands-On Tests with Olmo Models

Test environment: LM Studio

Downloads:

- 7B Instruct — 4.16 GB

- 32B Think — 18.14 GB

Observation:

The 32B Think model engages in extended reasoning. My prompt “Generate an SVG of a pelican riding a bicycle” ran for 14 min 43 sec and produced 8,437 tokens, most of them in the reasoning trace.
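
As a quick sanity check on those numbers, the run works out to roughly 9.5 tokens per second. A minimal sketch of the arithmetic (the function name is illustrative, and the figure covers reasoning and answer tokens together):

```python
def tokens_per_second(tokens: int, minutes: int, seconds: int) -> float:
    """Average generation speed over the whole run."""
    elapsed_seconds = minutes * 60 + seconds
    return tokens / elapsed_seconds


# Figures from the 32B Think test above: 8,437 tokens in 14 min 43 sec.
rate = tokens_per_second(8437, 14, 43)
print(f"{rate:.2f} tokens/second")
```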

---

Generated SVG Example

Rendered output:

---

Comparisons

- OLMo 2 32B 4bit (March 2025): a more abstract, less accurate rendering

- Qwen 3 32B (via OpenRouter): an alternative attempt at the same prompt

---

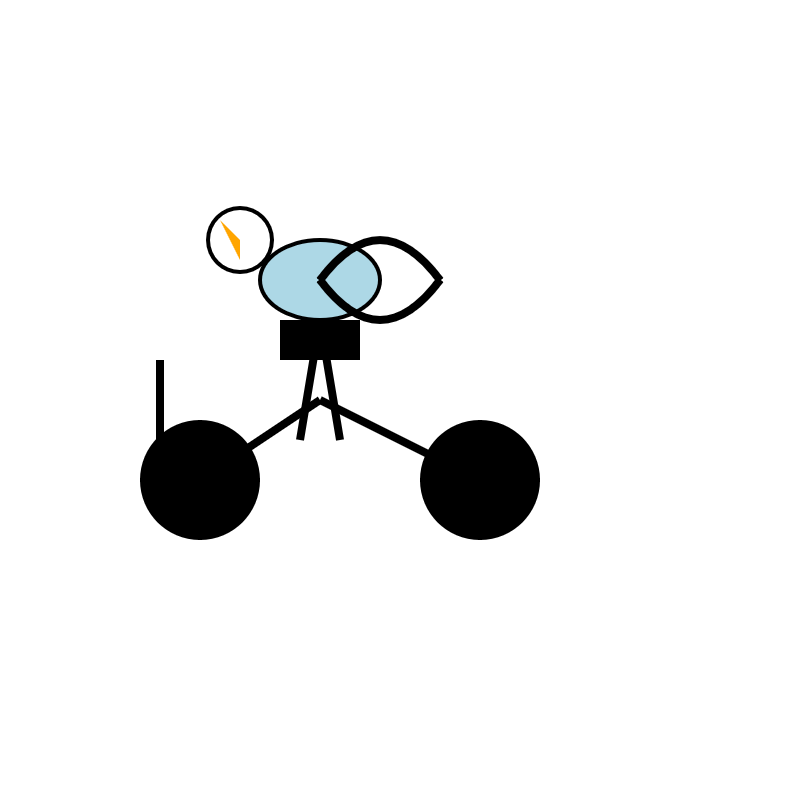

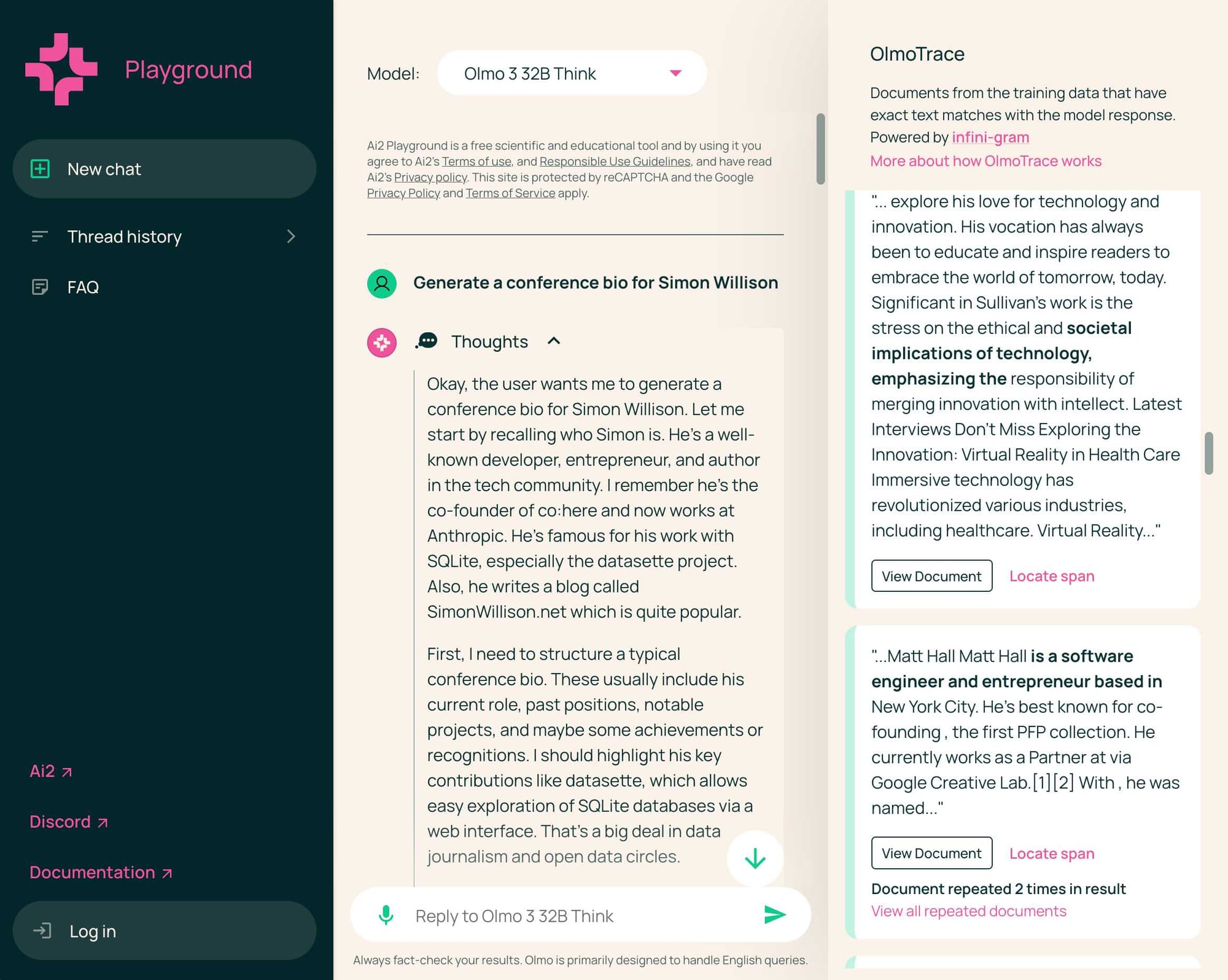

OlmoTrace: Inspecting Reasoning

OlmoTrace allows:

- Tracing model outputs back to source training data

- Real-time inspection via playground.allenai.org

- Example: prompt Olmo 3-Think (32B) in the Ai2 Playground, then click “Show OlmoTrace”

I tested with “Generate a conference bio for Simon Willison”:

Results:

- The model hallucinated inaccurate career details

- OlmoTrace returned irrelevant source documents

- OlmoTrace appears to rely on phrase matches via infini‑gram
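
The sketch below shows why pure phrase matching can surface irrelevant documents. It is a toy, brute-force illustration of verbatim span matching, not OlmoTrace's or infini‑gram's actual implementation (which works at a very different scale); the function names, corpus, and `min_words` threshold are all assumptions.

```python
def tokenize(text: str) -> list[str]:
    """Split on whitespace after lowercasing (a deliberately crude tokenizer)."""
    return text.lower().split()


def longest_shared_span(output: str, document: str) -> list[str]:
    """Longest run of consecutive words that appears verbatim in both texts."""
    out, doc = tokenize(output), tokenize(document)
    best: list[str] = []
    for i in range(len(out)):
        for j in range(len(doc)):
            k = 0
            while i + k < len(out) and j + k < len(doc) and out[i + k] == doc[j + k]:
                k += 1
            if k > len(best):
                best = out[i:i + k]
    return best


def rank_documents(output: str, corpus: dict[str, str], min_words: int = 3):
    """Return (doc_id, shared phrase) pairs, longest shared phrase first."""
    matches = []
    for doc_id, text in corpus.items():
        span = longest_shared_span(output, text)
        if len(span) >= min_words:
            matches.append((doc_id, " ".join(span)))
    return sorted(matches, key=lambda m: len(m[1].split()), reverse=True)


# Hypothetical model output and training documents.
output = "Simon is a frequent speaker at conferences around the world"
corpus = {
    "doc-a": "She is a frequent speaker at conferences and meetups.",
    "doc-b": "Pelicans live around the world near coasts.",
    "doc-c": "An unrelated sentence about bicycles.",
}
for doc_id, phrase in rank_documents(output, corpus):
    print(doc_id, "->", phrase)
```

Both matched documents share a phrase with the output, yet neither is about the person in the prompt, which is consistent with the irrelevant results observed above.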

---

The Importance of Auditable Training Data

Key concerns:

- Most open-weight models do not release their training data, so it cannot be audited

- Anthropic research shows:

  - As few as 250 poisoned documents can embed a backdoor

  - The backdoor is triggered by a short crafted prompt

Implications:

- This raises the importance of fully open training sets

- Ai2 compares Olmo 3 to two other fully open models, Stanford’s Marin and Swiss AI’s Apertus

---

Expert Perspective

From a post by Nathan Lambert (Ai2):

- Transparent datasets are critical for trust, safety, and sustainability

- They are especially valuable in reasoning and RL Zero research

- Openness helps detect contamination in benchmarks

- Examples:

  - Shao et al., Spurious rewards: Rethinking training signals in RLVR (arXiv:2506.10947)

  - Wu et al., Reasoning or memorization? (arXiv:2507.10532)
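
With open training data, a benchmark contamination check can be as simple as measuring n-gram overlap. The following is a rough sketch under stated assumptions: the helper names, the 5-gram window, and the sample texts are hypothetical, and this is not the method used in either paper cited above.

```python
import re


def ngrams(text: str, n: int) -> set[tuple[str, ...]]:
    """Set of word n-grams, ignoring case and punctuation."""
    words = re.findall(r"\w+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def contamination_rate(benchmark_item: str, training_docs: list[str], n: int = 5) -> float:
    """Fraction of the benchmark item's n-grams that occur in the training docs."""
    item_grams = ngrams(benchmark_item, n)
    if not item_grams:
        return 0.0
    train_grams: set[tuple[str, ...]] = set()
    for doc in training_docs:
        train_grams |= ngrams(doc, n)
    return len(item_grams & train_grams) / len(item_grams)


# Hypothetical training documents and benchmark questions.
training_docs = [
    "Forum post: what is the sum of the first ten positive integers? The answer is 55.",
    "A recipe for sourdough bread.",
]
leaked = "What is the sum of the first ten positive integers"
clean = "What is the capital of the largest country in Europe"

print(contamination_rate(leaked, training_docs))
print(contamination_rate(clean, training_docs))
```

A rate near 1.0 suggests the benchmark item appears almost verbatim in the training data. A check like this is only possible when the training set itself is published.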

---

Looking Ahead

- The Olmo series shows clear improvements:

  - From Olmo 1 (Feb 2024)

  - To Olmo 2 (Mar 2025)

  - Now Olmo 3

- Expect more competition among LLMs with fully open datasets

---


Summary:

Olmo 3 is an important step toward fully transparent, interpretable LLMs. Tools like OlmoTrace add a further layer of research utility.
