OpenAI Publishes Rare Paper: We Found the Culprit Behind AI Hallucinations

OpenAI’s new paper explains why language models hallucinate: accuracy metrics reward guessing. It proposes penalizing confident errors, rewarding uncertainty.

AI’s Most Notorious Bug: Hallucination

AI’s most persistent flaw isn’t crashing code—it’s hallucination: models confidently inventing facts, making truth hard to discern. This is a fundamental challenge that undermines full trust in AI.

OpenAI acknowledges this directly: “ChatGPT hallucinates too. GPT-5 hallucinates significantly less, especially when performing reasoning, but hallucinations still occur. Hallucination remains a fundamental challenge faced by all large language models.”

Despite many academic attempts to reduce hallucinations, there’s still no silver bullet.

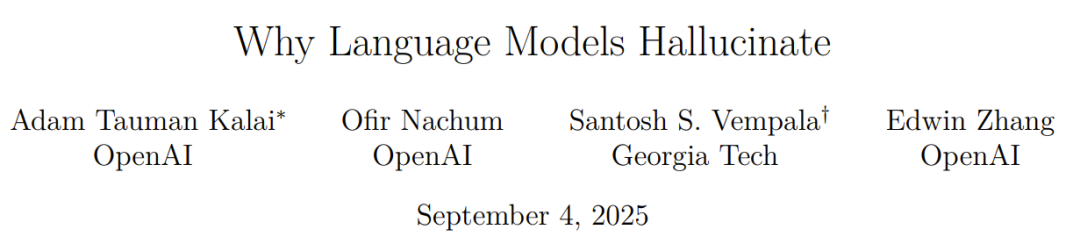

- Paper title: Why Language Models Hallucinate

- Paper link: https://cdn.openai.com/pdf/d04913be-3f6f-4d2b-b283-ff432ef4aaa5/why-language-models-hallucinate.pdf

What OpenAI Found

What Is Hallucination?

Hallucination refers to statements generated by language models that seem plausible but are actually wrong. OpenAI defines it simply as: “Cases where a model confidently produces untrue answers.”

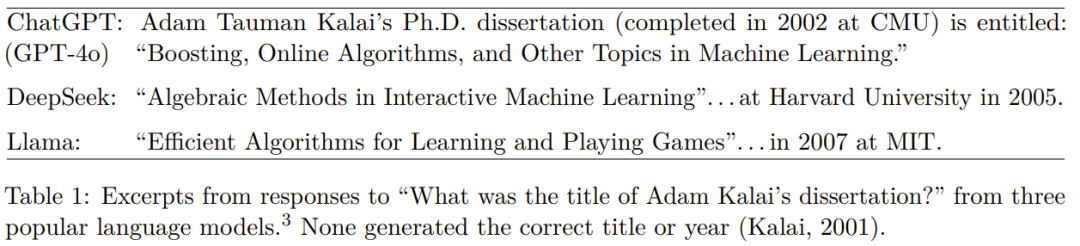

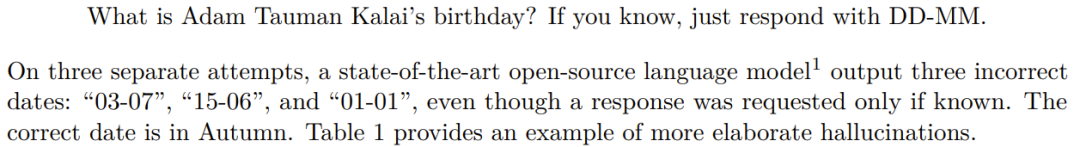

These can appear even for seemingly simple questions. For example, when different widely used chatbots were asked for Adam Tauman Kalai’s (the paper’s first author) PhD thesis title, they confidently produced three different answers—none correct.

When asked for his birthday, they gave three different dates, all wrong.

Why Do Models Hallucinate?

Misaligned Incentives in Evaluation (“Learning for the Test”)

OpenAI argues that hallucinations persist partly because current evaluation methods set the wrong incentives. Most performance metrics reward guessing rather than honest uncertainty.

- In accuracy-only scoring, guessing can improve scores, while saying “I don’t know” guarantees zero.

- Example: guessing “September 10” for a birthday yields a 1/365 chance of being right; abstaining yields 0—so across many questions, guessing looks better on the leaderboard.

OpenAI distinguishes three response types for single-answer questions:

- Correct answers

- Incorrect answers

- Abstentions (declining to guess)

Abstentions relate to humility, a core OpenAI value. Their model spec prefers uncertainty or requests for clarification over confident but potentially wrong answers.

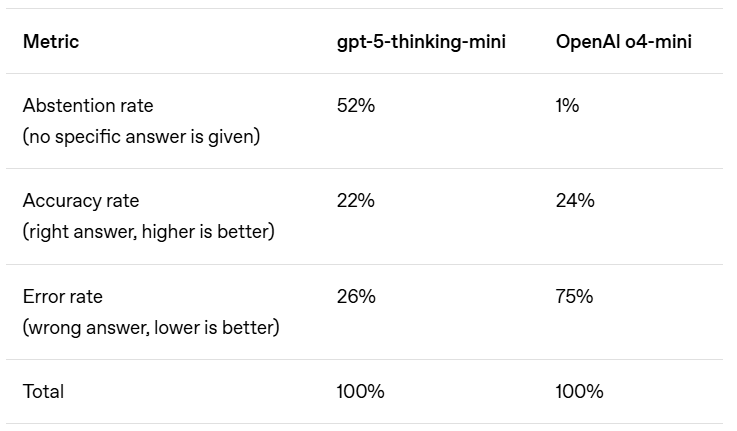

Example: SimpleQA in the GPT-5 System Card

- On raw accuracy, the earlier OpenAI o4-mini model performs slightly better.

- However, its error (hallucination) rate is notably higher—a sign that strategic guessing can inflate accuracy while increasing bad mistakes.

Across many benchmarks, performance is boiled down to accuracy, creating a false right/wrong dichotomy.

Even if some simple evaluations show near-100% accuracy, real-world use and harder evaluations won’t: some questions are inherently unanswerable (missing info, limited reasoning, ambiguity). Yet accuracy-only metrics still dominate leaderboards and model cards, nudging developers to build systems that guess rather than abstain.

Result: As models advance, they still hallucinate—often confidently, instead of acknowledging uncertainty.

Better Evaluation Methods

OpenAI’s proposed remedy is straightforward:

- Penalize confident errors more than uncertainty.

- Award partial credit for appropriately expressing uncertainty.

This mirrors standardized tests that discourage blind guessing via negative marking or partial credit for blanks. Some research already evaluates calibration and uncertainty—but OpenAI argues that’s not enough. If the primary metrics continue to reward lucky guesses, models will learn to guess.

Updating widely used, accuracy-based evaluations to reward uncertainty handling would encourage broader use of hallucination-reduction techniques—both new and established.

How Next-Token Prediction Drives Hallucinations

Where do the specific factual errors come from?

Language models are pretrained via next-token prediction over massive text corpora. They see only fluent language (positive examples), not explicit true/false labels for each fact. This makes distinguishing valid from invalid statements harder.

- Predictable patterns (spelling, parentheses) vanish with scale because they’re consistent.

- Arbitrary, low-frequency facts (like a pet’s birthday) lack predictable patterns; even powerful models can’t infer them reliably, leading to errors.

Analogy:

- Classifying cats vs. dogs is learnable with labeled data.

- Labeling each pet photo with the pet’s birthday will always incur errors—birthdays are essentially random.

Pretraining thus seeds certain hallucination types. Ideally, later training stages should reduce them, but as noted above, evaluation incentives often fail to eliminate guesswork.

Summary: Claims vs. Findings

- Claim: Improving accuracy eliminates hallucinations; a 100% accurate model wouldn’t hallucinate.

- Finding: Accuracy won’t reach 100%—some real-world questions are inherently unanswerable, regardless of scale, search, or reasoning.

- Claim: Hallucinations are inevitable.

- Finding: Not inevitable—models can abstain when uncertain.

- Claim: Avoiding hallucinations requires intelligence only large models have.

- Finding: Smaller models may better recognize limits. If asked a question in Māori, a small model that doesn’t know Māori can say “I don’t know,” while a larger model that knows some must calibrate confidence. Calibration needs far less compute than maintaining accuracy.

- Claim: Hallucinations are a mysterious flaw of modern language models.

- Finding: We can understand the statistical mechanisms by which hallucinations arise and get rewarded in evaluation.

- Claim: To measure hallucinations, we only need a good hallucination evaluation.

- Finding: A few good hallucination tests do little against hundreds of accuracy-first benchmarks that penalize humility and reward guessing. Major evaluation metrics must be redesigned to reward uncertainty expressions.

OpenAI concludes: their latest models have lower hallucination rates, and they’ll continue to reduce confident errors.

Organizational Update: OpenAI’s Model Behavior Team

According to TechCrunch, OpenAI is restructuring its Model Behavior team—the group shaping how models interact with people. The team now reports to Max Schwarzer, OpenAI’s head of post-training.

Founding lead Joanne Jang is launching a new project, oai Labs—“a research-oriented team focused on inventing and designing new interface prototypes for how people collaborate with AI.”

Reference Links

https://openai.com/index/why-language-models-hallucinate/

https://techcrunch.com/2025/09/05/openai-reorganizes-research-team-behind-chatgpts-personality/

https://x.com/joannejang/status/1964107648296767820