OpenAI’s In-House Chip Secrets Revealed: AI-Optimized Design Began 18 Months Ago

OpenAI & Broadcom Partnership: Building 10 GW AI Infrastructure

“Using models to optimize chip design — faster than human engineers.”

After 18 months of quiet collaboration, OpenAI and Broadcom have officially announced a massive partnership. Top leaders from both companies met in person to share details of the deal:

- Sam Altman — CEO of OpenAI (2nd from right)

- Greg Brockman — President of OpenAI (far right)

- Hock Tan — Broadcom CEO & President (center)

- Charlie Kawwas — President, Semiconductor Solutions Group at Broadcom (2nd from left)

---

Partnership Overview

Deployment Scale:

- 10 GW of custom AI accelerators

- Rollout begins: 2nd half of 2026

- Full deployment target: end of 2029

Roles:

- OpenAI: Design accelerators & systems, embedding model development experience into hardware.

- Broadcom: Co-develop and deploy, leveraging Ethernet and interconnect tech for global AI demand.

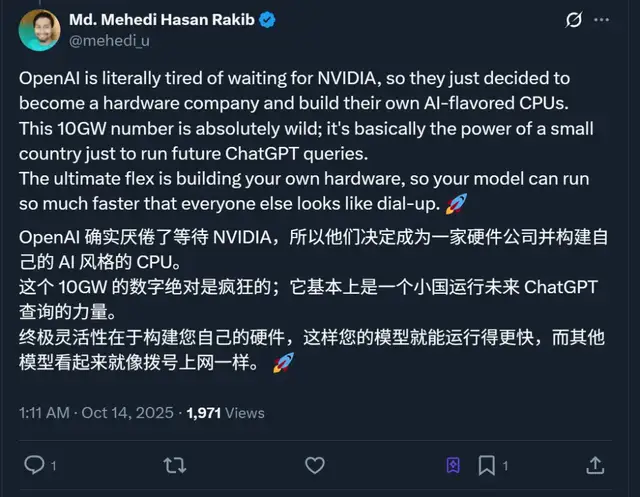

What Does 10 GW Mean?

- Traditional supercomputing centers: a few hundred MW.

- 10 GW = 10,000 MW — enough to power 100 million 100‑watt light bulbs simultaneously.
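The conversions behind these figures are easy to check. The short Python sketch below reproduces the 10,000 MW and 100-million-bulb numbers; the 300 MW value used for a "traditional" site is only an illustrative assumption standing in for "a few hundred MW", not a figure from the announcement.

```python
# Back-of-the-envelope check of the 10 GW comparison.
WATTS_PER_MW = 1_000_000
WATTS_PER_GW = 1_000_000_000


def gigawatts_to_megawatts(gw: float) -> float:
    """Convert gigawatts to megawatts."""
    return gw * WATTS_PER_GW / WATTS_PER_MW


def bulbs_powered(gw: float, bulb_watts: float = 100.0) -> float:
    """Number of bulbs of the given wattage that `gw` gigawatts could light at once."""
    return gw * WATTS_PER_GW / bulb_watts


def ratio_to_site(gw: float, site_mw: float) -> float:
    """How many sites of `site_mw` megawatts add up to `gw` gigawatts."""
    return gigawatts_to_megawatts(gw) / site_mw


DEPLOYMENT_GW = 10        # figure from the announcement
ASSUMED_SITE_MW = 300     # assumption: stand-in for "a few hundred MW"

print(gigawatts_to_megawatts(DEPLOYMENT_GW))          # megawatts
print(bulbs_powered(DEPLOYMENT_GW))                   # 100-watt bulbs
print(ratio_to_site(DEPLOYMENT_GW, ASSUMED_SITE_MW))  # equivalent sites
```

Under that assumed 300 MW baseline, the planned deployment is on the order of thirty traditional supercomputing sites.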

---

Official Statements

> Sam Altman — “Partnering with Broadcom is a key step in building infrastructure required to unlock AI’s potential.”

> Hock Tan — “Marks a critical moment in pursuing AGI… jointly developing 10 GW of next-gen accelerators.”

> Greg Brockman — “By making our own chips, we unlock new capabilities and intelligence levels.”

> Charlie Kawwas — “Custom accelerators + Ethernet solutions optimize next-gen AI infrastructure for cost and performance.”

---

Strategic Significance

For Broadcom:

- Custom accelerators + Ethernet as core scaling tech in AI data centers.

For OpenAI:

- Alleviating compute shortages (ChatGPT has ~800M weekly active users).

- Reduced dependence on GPU supply from Nvidia.

---

Inside the On-Stage Discussion

Two key questions posed:

- Why is OpenAI developing its own chips now?

- What will happen once they move to self-developed chips?

---

1. Why Develop Chips Now

Greg Brockman cited three main reasons:

- Deep understanding of workloads — identify gaps current chips don’t serve.

- Scaling discoveries — past breakthroughs often came from scaling.

- Frustrations with external partnerships — inability to align chip design with their vision.

Key takeaway: Vertical integration to deliver solutions optimized for specific workloads.

Brockman admitted he once opposed vertical integration, but changed stance, citing the iPhone as a model for tightly integrated, high-performance products.

---

2. Scaling Impact on AI Development

- In 2017, OpenAI’s reinforcement learning experiments on Dota 2 showed how critical scaling was.

- OpenAI now sees chip development as essential to continually increase computing power.

Without chips, you have no voice: Brockman noted that chip makers often ignored OpenAI’s design input, and that developing chips in-house gives OpenAI control over the direction.

---

3. Expected Outcomes of Self-Developed Chips

Efficiency gains: Optimizing the full stack to deliver more intelligence per watt — faster, better, cheaper models.

Same mindset as Jensen Huang: people always want more, so the job is to deliver it.

---

4. AI in Chip Design

Brockman revealed that AI already plays a key role in the design process: models find chip optimizations faster than human engineers.

> Human designs → AI suggestions → expert review. For certain optimizations, this saves up to a month.

---

Related Partnerships

- Nvidia: 10 GW of AI clusters built on millions of GPUs, with a planned $100B investment from Nvidia.

- AMD: 6 GW AI infrastructure.

Now Broadcom joins the circle.
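As a rough tally, the announced capacities can simply be summed. The sketch below assumes the three deals are additive and do not overlap, which the announcements summarized here do not confirm; the dictionary name is illustrative.

```python
# Announced capacity per partner in gigawatts, taken from the figures above.
# Assumption: the three commitments are independent and can be added together.
announced_gw = {"Nvidia": 10, "AMD": 6, "Broadcom": 10}

total_gw = sum(announced_gw.values())

# Each partner's share of the combined total.
shares = {name: gw / total_gw for name, gw in announced_gw.items()}

print(f"Total announced: {total_gw} GW")
for name, share in shares.items():
    print(f"{name}: {share:.0%}")
```

If the assumption holds, the three partnerships together amount to 26 GW of planned capacity.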

---

The Bigger Picture

OpenAI’s approach combines self-developed chips with partnerships, a strategy planned quietly for about 18 months to break through compute bottlenecks.

This mirrors trends in AI creator platforms like AiToEarn (official website), an open-source global AI content monetization platform enabling:

- AI content generation

- Cross-platform publishing

- Analytics & ranking across Douyin, Kwai, WeChat, Bilibili, Xiaohongshu, Facebook, Instagram, LinkedIn, Threads, YouTube, Pinterest, and X (Twitter)

Parallel: Just as OpenAI vertically integrates hardware for maximum output per watt, creators use AiToEarn’s integrated workflow to maximize reach and monetization efficiently.

---

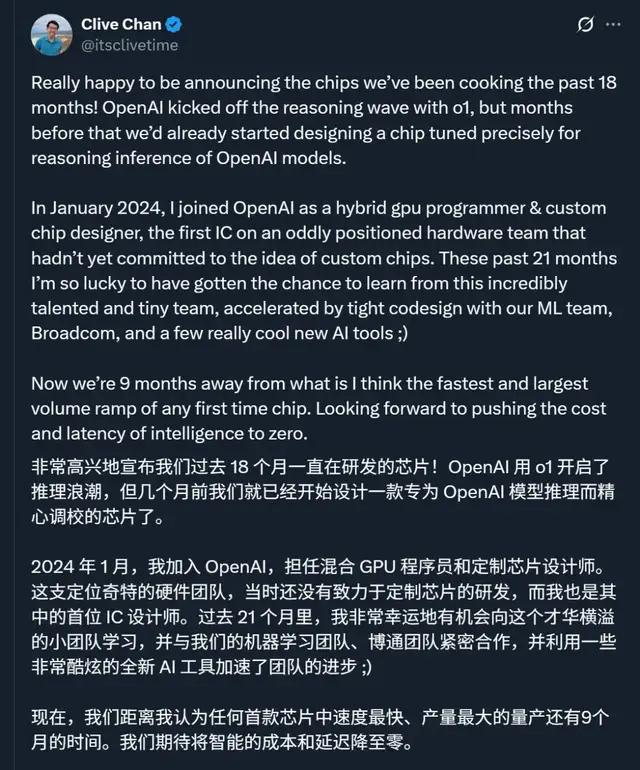

Current Status of OpenAI’s Chip Development

- Timeline: 18 months of work so far.

- Since the wave of inference demand that began with the o1 model, the focus has shifted to designing inference chips.

- Mass production is estimated to be about 9 months away, which would be the fastest ramp to large-scale production for any first-release chip.

Unknowns: Performance of the first mass-produced chip — something to watch closely.

---

Final Thought

From hardware infrastructure to creative monetization, the trend is clear: vertical integration + scaling = competitive advantage. Whether it’s OpenAI’s chips or AiToEarn’s creative pipeline, the winners will be those who own and optimize the entire stack.