OpenAI’s Major Setback: GPT‑5 Is Just a Reskinned GPT‑4o, No Breakthrough After 2.5 Years of Pretraining

After Ilya’s Departure: No Breakthrough in Sight

Report by New Intelligence Source

Editors: KingHZ, Taozi

---

📢 Lead Summary

OpenAI is in urgent need of a comeback.

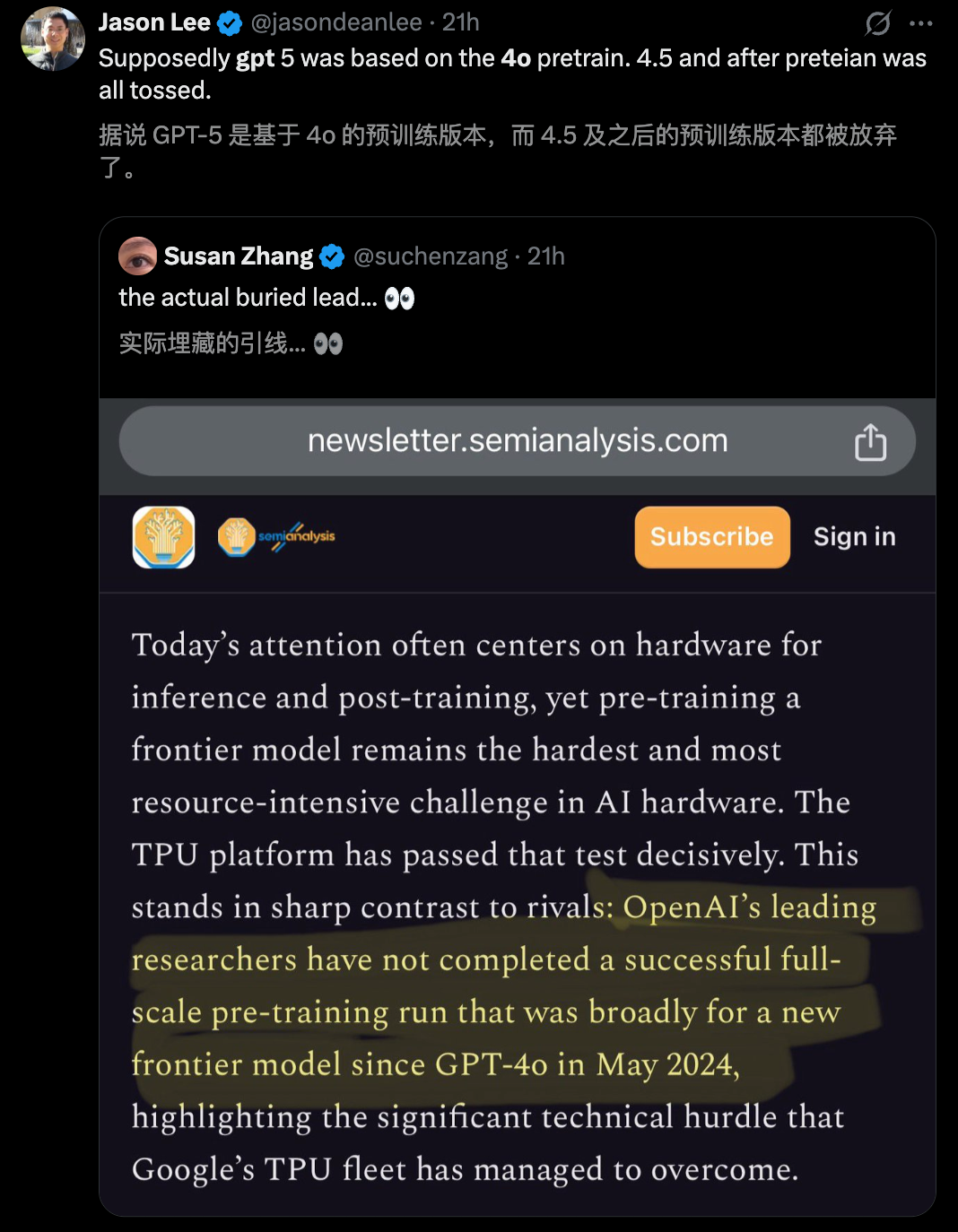

Today’s biggest leak online: The cornerstone of GPT‑5 is actually GPT‑4o — and since the release of 4o, subsequent pre-training has repeatedly encountered obstacles, to the point of near abandonment.

---

OpenAI Core Pre-Training: Consecutive Setbacks?

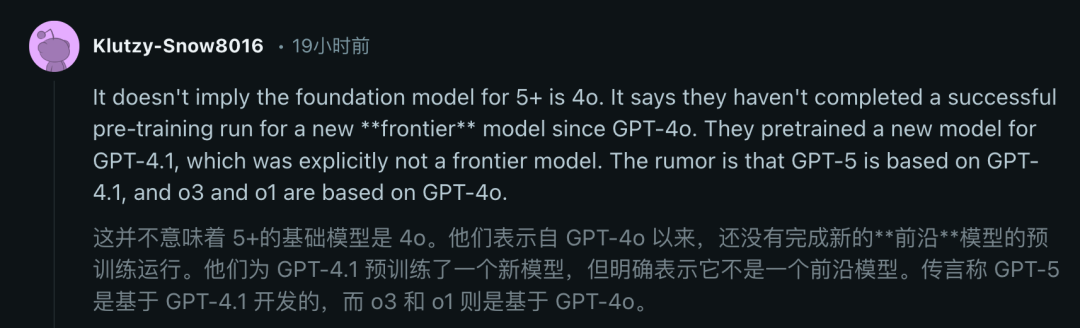

Rumors suggest GPT‑5’s foundation is still GPT‑4o, with all pre-training versions after GPT‑4.5 abandoned.

This isn’t idle speculation — the claim comes from a recent SemiAnalysis report revealing:

- Since GPT‑4o’s release, OpenAI’s top-tier team has yet to complete a single large-scale pre-training run for a true next‑gen frontier model.

---

Hardware & Industry Context

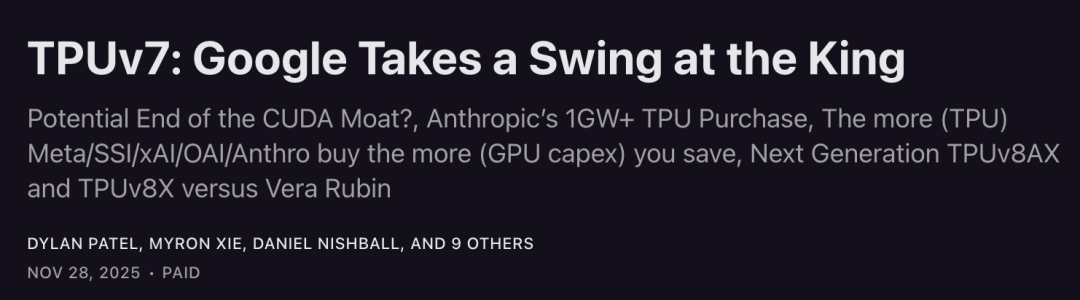

- Google is leveraging TPUv7 to potentially challenge NVIDIA’s CUDA moat.

- OpenAI trains its models entirely on an NVIDIA GPU stack.

Yet without large-scale model pre-training, inference and post-training hardware debates mean little — pre-training is the most resource-intensive stage in the entire AI chain.

Insider facts:

- ✔ Google’s TPU successfully met pre‑training challenges.

- ✘ OpenAI has not completed a successful large-scale pre-training run since GPT‑4o’s May 2024 launch.

---

Timeline of Stagnation

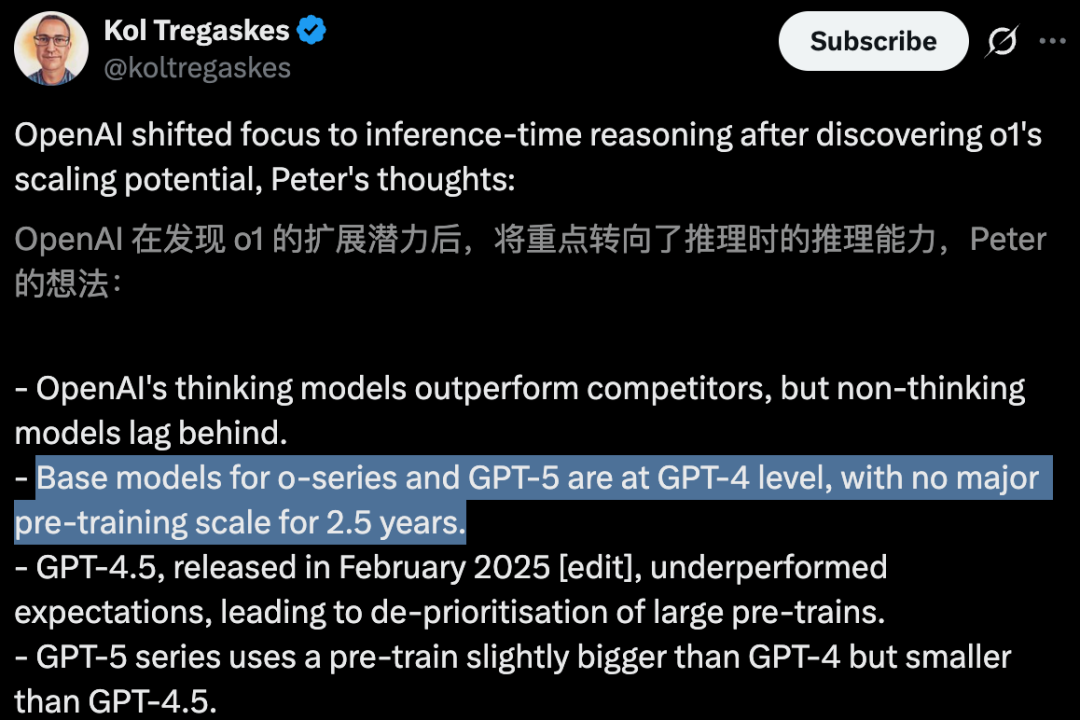

- Last 2.5 years: No real scale-up in OpenAI’s pre-training.

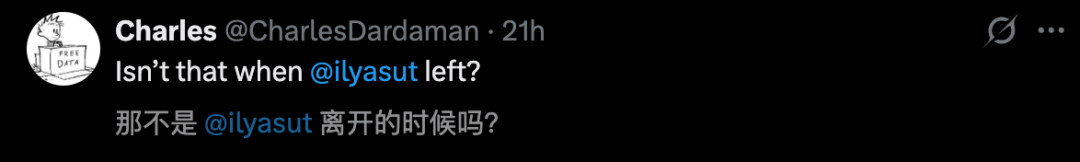

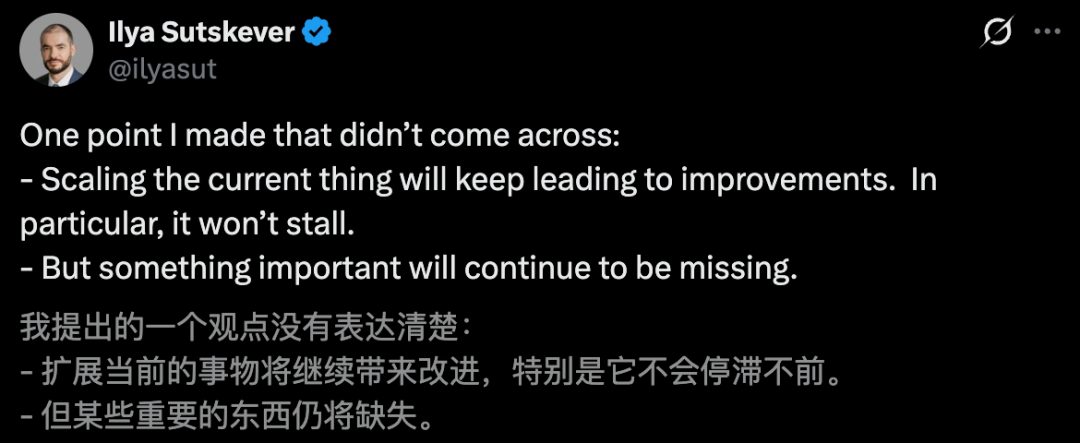

- A possible “ceiling” was hit after GPT‑4o, coinciding with Ilya’s departure.

Even Ilya commented recently: Scaling won’t stop, but “something important” will still be missing.

---

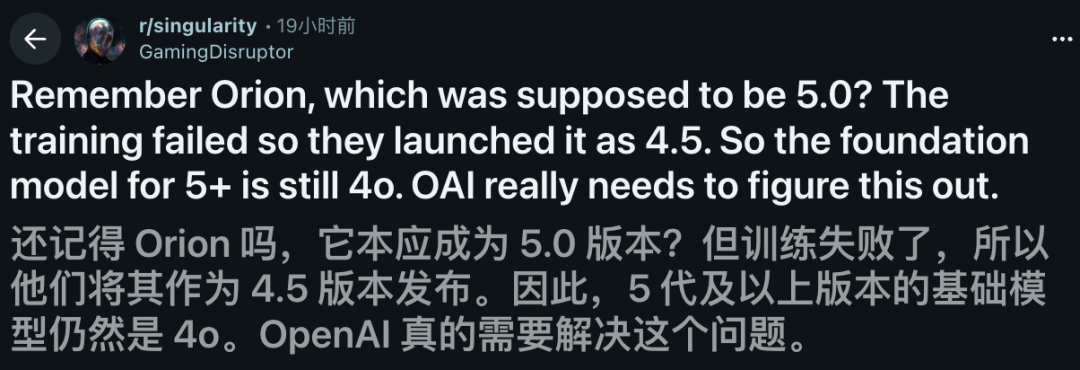

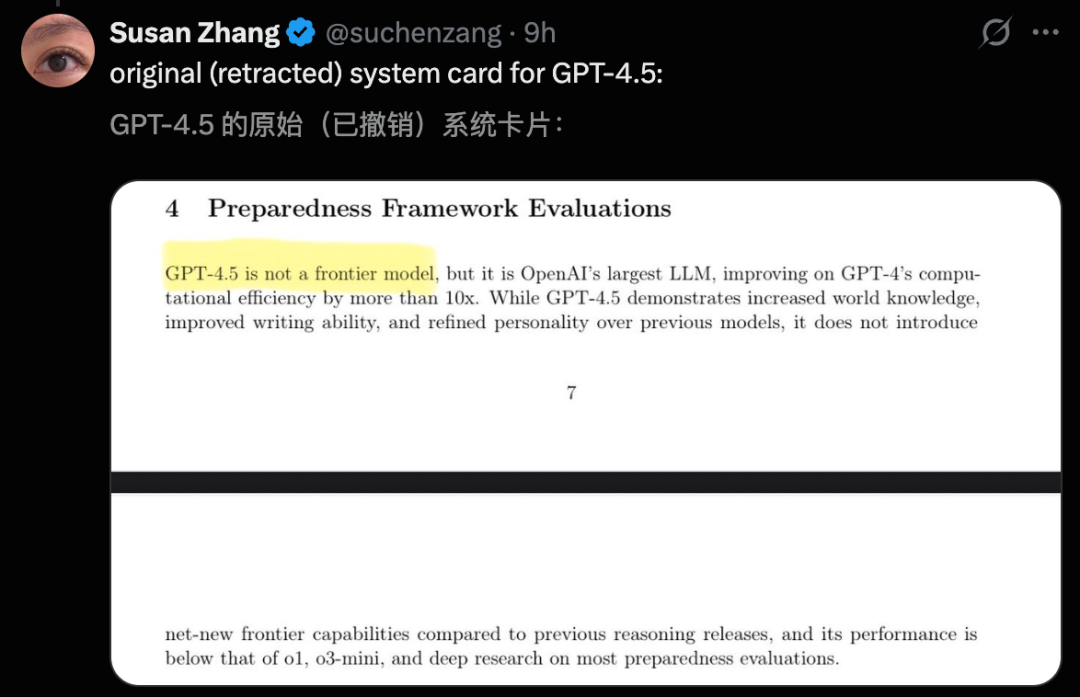

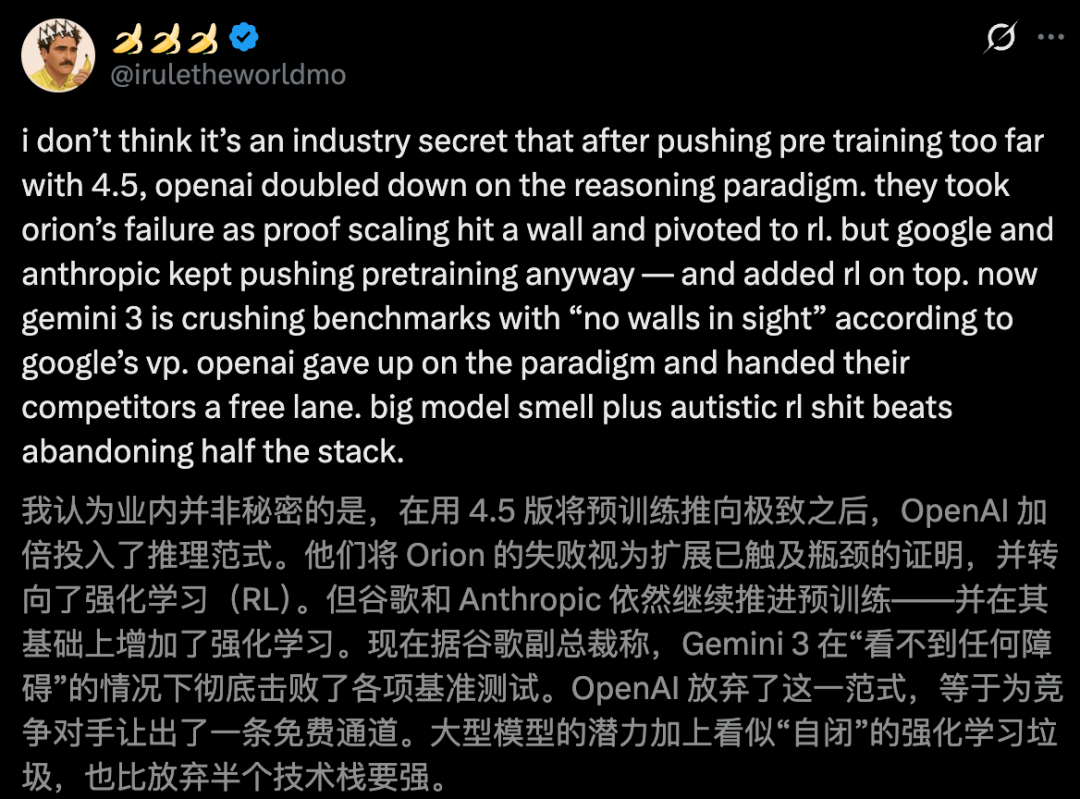

Major Pre-Training Collapse: The “Orion” Case

- Late last year, “Orion” was intended to be GPT‑5.

- After underperforming, it was downgraded to GPT‑4.5.

Issues with Orion:

- Training took over 3 months — breaking the industry norm of 1–2 months.

- Performance gains were mostly in language, with coding quality worse than older models.

- Costs were higher without proportionate capability boosts.

---

Innovation Pressure & Ecosystem Needs

Frontier model setbacks point to compute limits and ecosystem gaps.

Sustainable AI R&D demands integrated platforms connecting development, deployment, and monetization.

Example: AiToEarn — open-source AI content monetization platform.

It enables creation, publishing, and monetization across:

- Douyin

- Kwai

- Bilibili

- Xiaohongshu

- Threads

- YouTube

- X (Twitter)

With analytics and AI model rankings, AiToEarn bridges AI generation with real-world economic impact.

---

GPT‑4.5 (“Orion”): Released in February

Focus areas:

- Advanced language capabilities

- Stable conversational experience

- Expanded knowledge base

Keyword for GPT‑4.5: Emotional Intelligence.

Coding ability improved, but less emphasized — supporting rumors that Orion’s leap was modest.

---

GPT‑4o as Scaling Path?

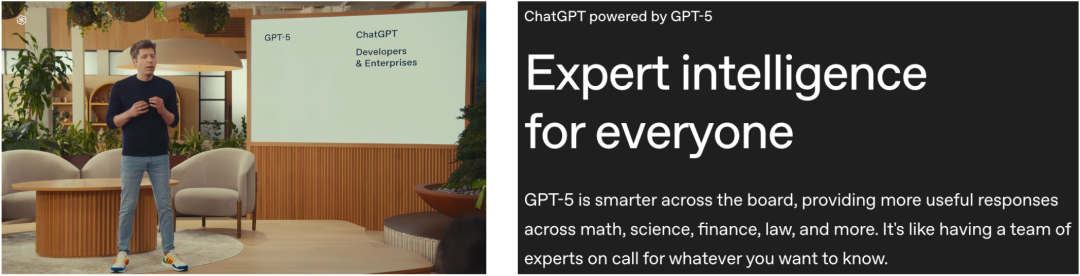

- August release of GPT‑5 framed by Sam Altman as “Ph.D.-level AI towards AGI” — met with mixed reactions.

- Many saw GPT‑5 as a refined GPT‑4.5, not a disruptive overhaul.

The evidence suggests GPT‑5 did not involve large-scale pretraining of an entirely new model and may still be based on GPT‑4o.

OpenAI now focuses on reasoning paradigms + RL rather than scaling pretraining — leaving rivals a speed advantage.

---

Altman Acknowledges Google’s Lead

Leaked memos show Altman conceding Google’s excellence in LLM pretraining.

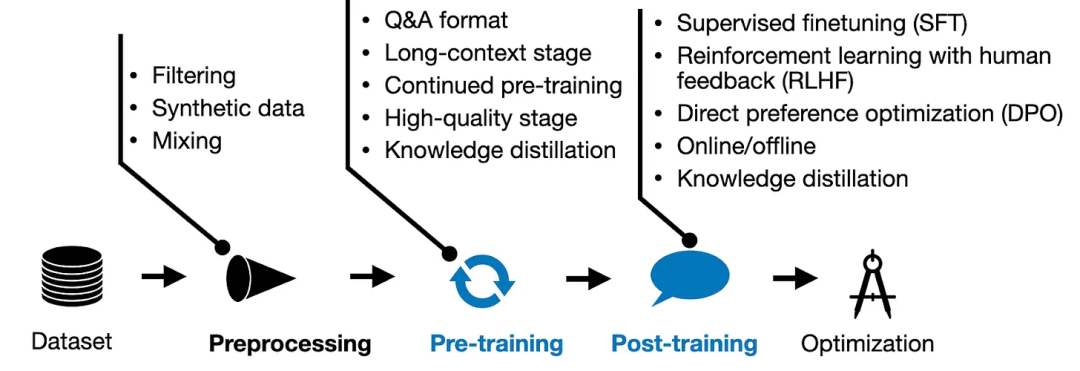

Pretraining Stage Essentials:

- The model is fed massive amounts of data (e.g., web pages).

- From that data it learns relationships and structure, which post-training and deployment then build on.
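
To make "learning relationships and structure" from raw text a little more concrete, here is a toy sketch in Python. It is only an illustration under loud assumptions: bigram counts stand in for a neural network, the three-sentence corpus is made up, and the function names `pretrain_bigram` and `predict_next` are hypothetical. It does not describe how OpenAI or Google actually pretrain their models.

```python
from collections import Counter, defaultdict


def pretrain_bigram(corpus):
    """Count which token follows which across raw text.

    A stand-in for pretraining: no labels, just statistics of the data.
    """
    counts = defaultdict(Counter)
    for document in corpus:
        tokens = document.lower().split()
        # Pair every token with the token that comes right after it.
        for current, following in zip(tokens, tokens[1:]):
            counts[current][following] += 1
    return counts


def predict_next(counts, token):
    """Return the most frequent follower of `token`, or None if unseen."""
    followers = counts.get(token.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical miniature "web corpus"; real pretraining uses vastly more text.
corpus = [
    "the model reads web pages",
    "the model learns structure",
    "the model reads books",
]

model = pretrain_bigram(corpus)
print(predict_next(model, "model"))  # prints "reads" (seen twice vs. "learns" once)
```

Even at this scale, the "model" picks up structure purely from exposure to text, which is the basic idea that large-scale pretraining pushes to trillions of tokens and billions of parameters.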

---

Why This Matters

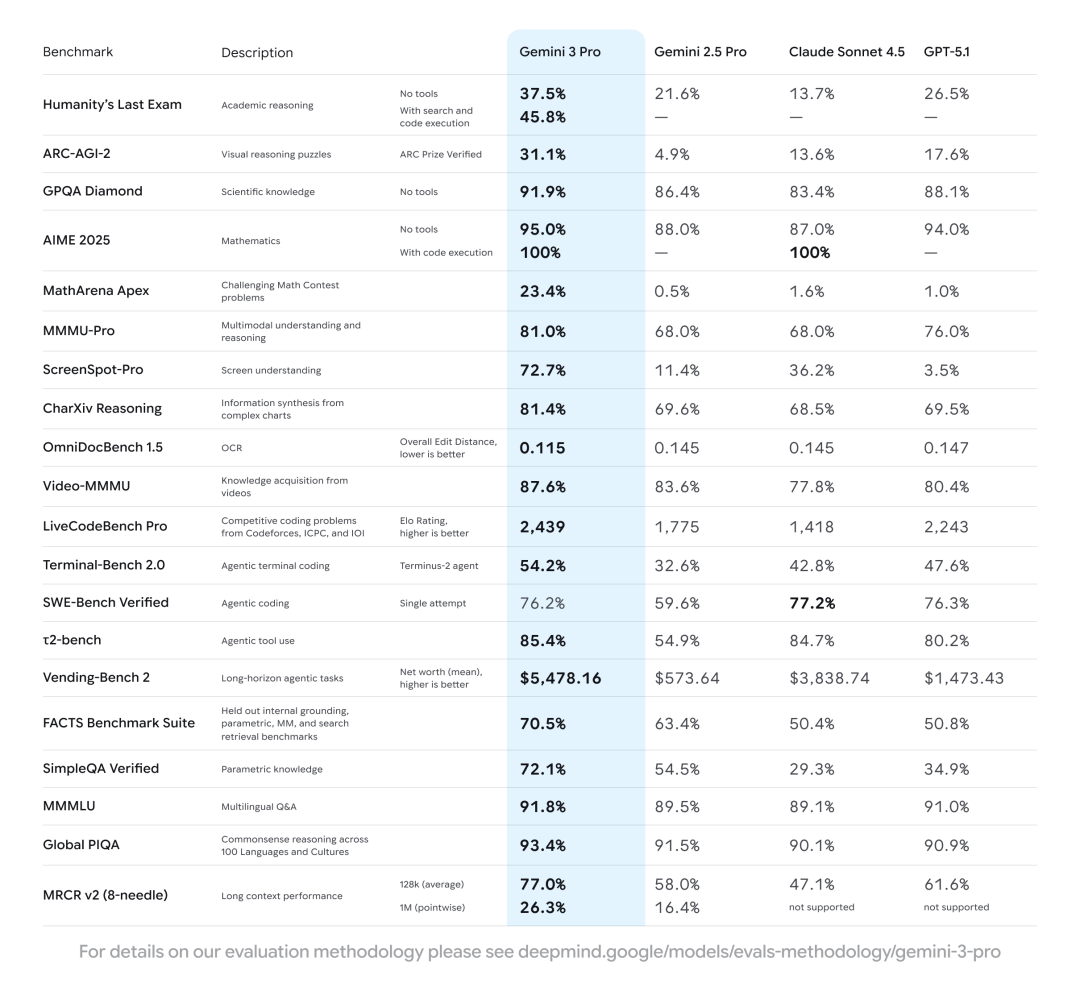

- Google’s pretraining breakthroughs gave Gemini 3 greater reasoning depth.

- Given OpenAI’s 2023 setbacks in pretraining, Google’s progress came as a surprise.

- OpenAI pivoted toward heavy-compute “reasoning models” but these failed to scale successfully.

Javier Alba de Alba on GPT‑5:

> Excellent performance and cost-effectiveness — but far from the generational leap expected.

---

Strategic Shift: Scaling → Reasoning

For creators and developers, this shift reinforces the need for adaptability.

Platforms like AiToEarn (official site) connect AI outputs to monetization and analytics, allowing reach across multiple channels.

---

GPT‑5: Improvements but Not a Leap

- Boost in coding

- Advanced reasoning

- Hallucination reduction

- Enhanced medical applications

- Unified naming (no more turbo/mini/o-series labels)

Javier Alba de Alba warns: GPT‑5 is closer to “GPT‑4.2” than a new-gen product.

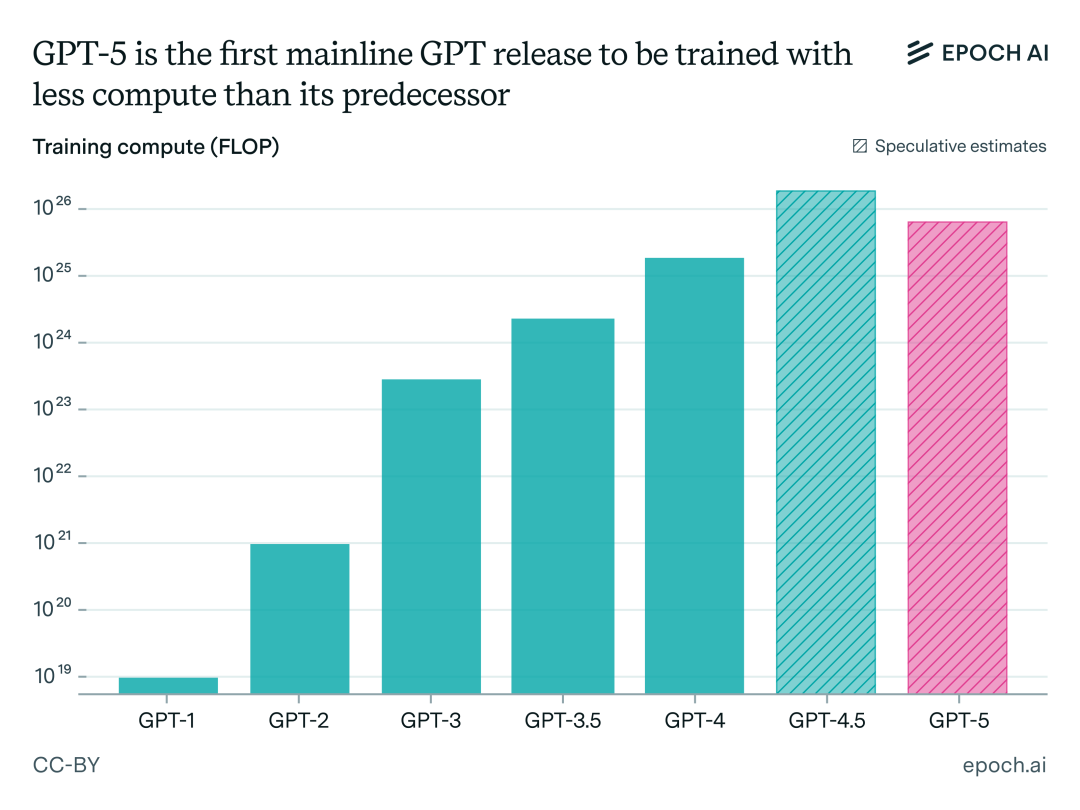

Epoch AI suspects GPT‑5 used less training compute than GPT‑4.5.

---

Future Plans: “Shallotpeat”

Goal: Fix persistent pre-training issues.

For users: GPT‑5 is good but evolutionary, not revolutionary.

---

Platform Strategy Insights (Sherwin Wu Interview)

Shift in consensus:

- From “one supreme model” → Specialized diversified models (e.g., Codex, Sora).

- Separate tech stacks for text, image, video.

Fine-Tuning Evolution:

- Early: adjust tone/instructions

- Now: Reinforcement Fine-Tuning (RFT) using proprietary enterprise data.

---

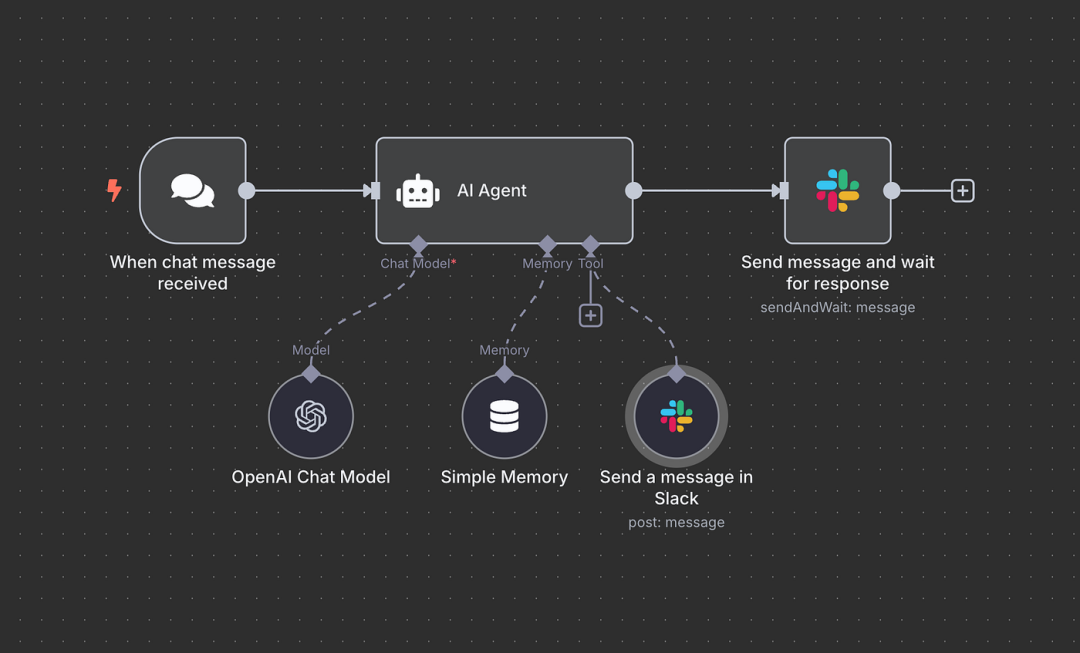

Agent Development Model

Dual-track strategy: App + API to reach both consumers and developers.

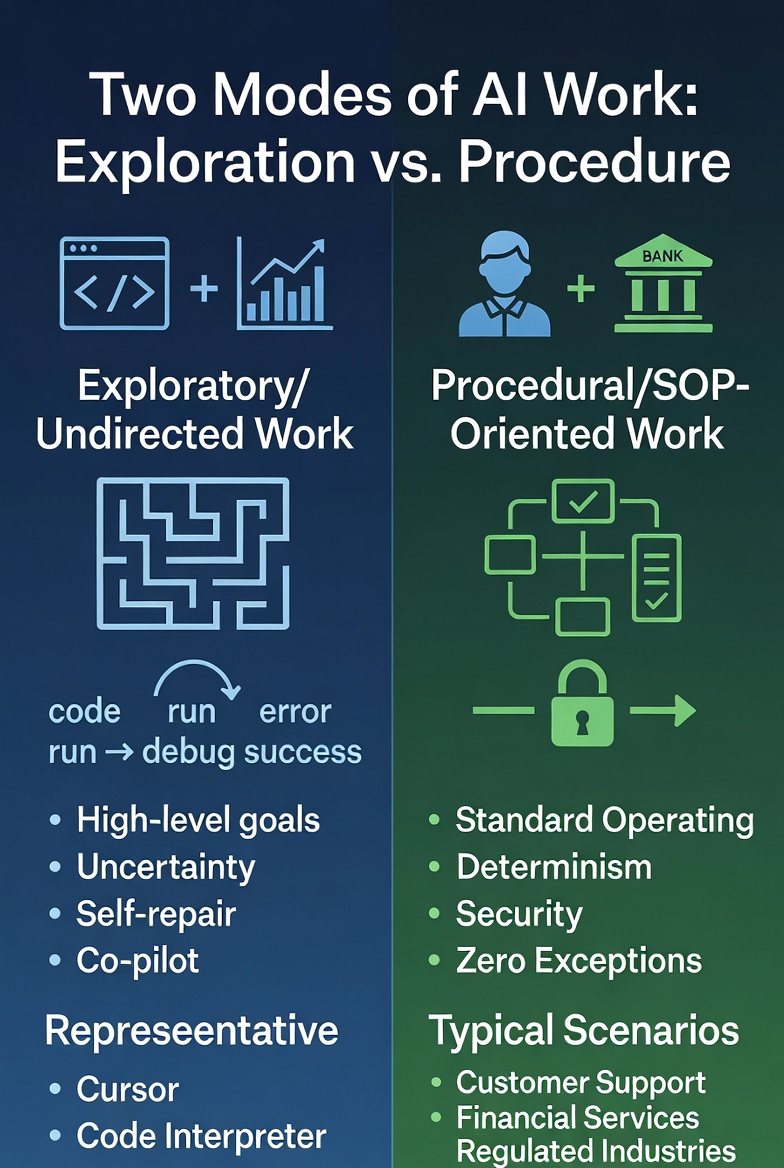

Two agent types:

- Undirected / Exploratory

- Procedural / SOP-oriented — where hard-coded logic is essential.

Agent Builder provides controlled, verifiable agent workflows — critical for regulated industries.

---

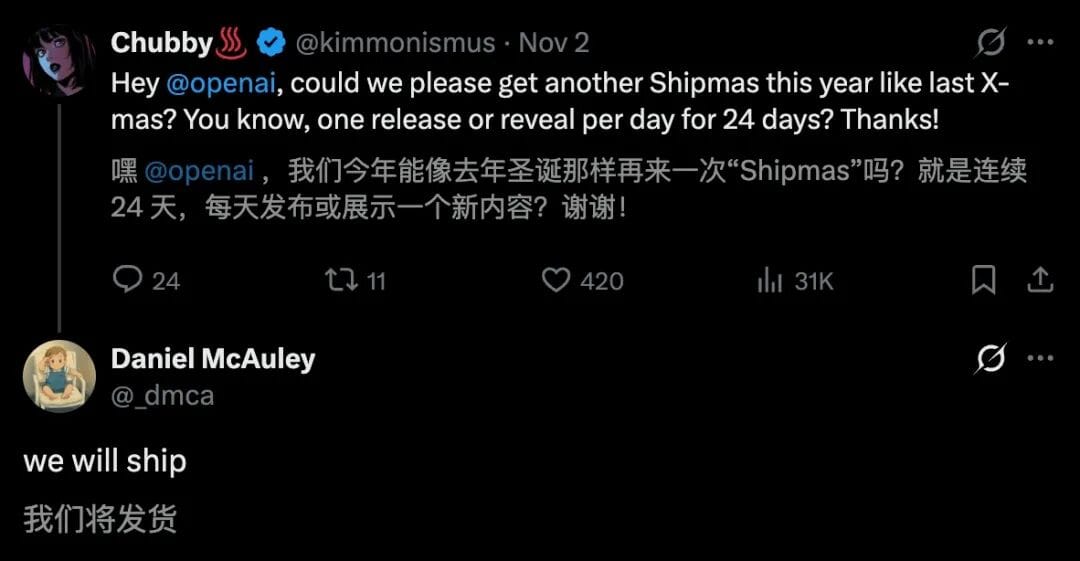

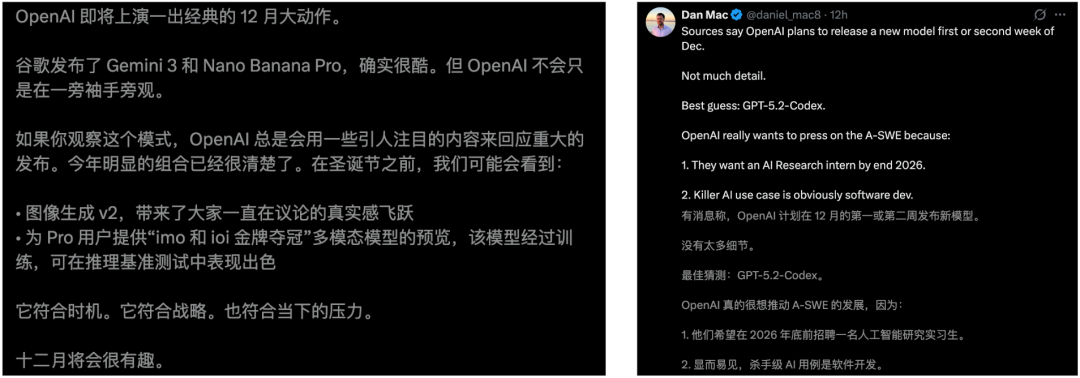

One More Thing: December Launch Wave

Rumored releases:

- Image Gen v2

- IMO & IOI multimodal models

- GPT‑5.2 Codex

---

Reference

Leaked memo: Sam Altman sees ‘rough vibes’ and economic headwinds at OpenAI

---

Shallotpeat could be OpenAI’s consolidation before a major leap — aligning talent, compute, and API innovation to match Gemini’s pace.

Meanwhile, creator economy tools like AiToEarn let AI-generated content go global in one click. For developers tracking these shifts, integration of model performance, ecosystems, and monetization will be key in the months ahead.
