# **New Intelligence Report**

**Editor:** LRST

---

## **Summary Overview**

Powered by **big data** and **large models**, fine-tuning has emerged as a low-cost, efficient way to tackle **complex remote sensing scenarios**, especially those involving **small samples** and **long-tail targets**.

### **Key Evolution Path**

1. **Full-Parameter Fine-Tuning** – The early approach: update all model parameters to transfer to a new task.

2. **Parameter-Efficient Fine-Tuning (PEFT)** – Update only a small set of parameters through adapters, prompts, or re-parameterization.

3. **Hybrid Fine-Tuning** – Unified frameworks integrating multiple PEFT methods for better scalability.
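
To make the "low-cost" claim concrete, the short Python sketch that follows counts trainable parameters under each strategy for a hypothetical ViT-B-like backbone (12 blocks, hidden size 768). The backbone size, the adapter bottleneck of 64, the LoRA rank of 8 on two projections per block, and the 10 prompt tokens are illustrative assumptions, not settings taken from the survey; biases, norms, and embeddings are ignored.

```python
# Hypothetical backbone: a ViT-B-like encoder (12 blocks, hidden size 768).
# Only attention (q, k, v, out) and MLP (d -> 4d -> d) weight matrices are counted;
# biases, layer norms, and embeddings are ignored to keep the arithmetic simple.
HIDDEN = 768
BLOCKS = 12


def full_finetune_params(d: int = HIDDEN, blocks: int = BLOCKS) -> int:
    """Every counted weight is trainable: 4*d*d (attention) + 2*d*4d (MLP) per block."""
    return blocks * (4 * d * d + 2 * d * 4 * d)


def adapter_params(d: int = HIDDEN, bottleneck: int = 64, blocks: int = BLOCKS) -> int:
    """One bottleneck adapter per block: down-projection d->r and up-projection r->d, with biases."""
    return blocks * (d * bottleneck + bottleneck + bottleneck * d + d)


def lora_params(d: int = HIDDEN, rank: int = 8, blocks: int = BLOCKS, targets: int = 2) -> int:
    """LoRA on `targets` d x d matrices per block (assumed q and v): A is r x d, B is d x r."""
    return blocks * targets * (rank * d + d * rank)


def prompt_params(d: int = HIDDEN, num_prompts: int = 10) -> int:
    """Shallow prompt tuning: `num_prompts` learnable tokens prepended to the input only."""
    return num_prompts * d


if __name__ == "__main__":
    total = full_finetune_params()
    for name, count in [
        ("full", total),
        ("adapter", adapter_params()),
        ("lora", lora_params()),
        ("prompt", prompt_params()),
    ]:
        print(f"{name:8s} {count:>12,d} trainable ({100 * count / total:.3f}% of full)")
```

Under these assumptions, full fine-tuning updates 84,934,656 counted weights, an adapter per block trains 1,189,632 (about 1.40%), and rank-8 LoRA trains 294,912 (about 0.35%), while the frozen backbone can be shared across tasks.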

A joint team led by **Tsinghua University** published a review in **CVMJ** that traces this evolution and outlines nine research directions for strengthening remote sensing applications in **agricultural monitoring**, **weather forecasting**, and beyond.

---

## **Foundation Models + Fine-Tuning: The New Paradigm**

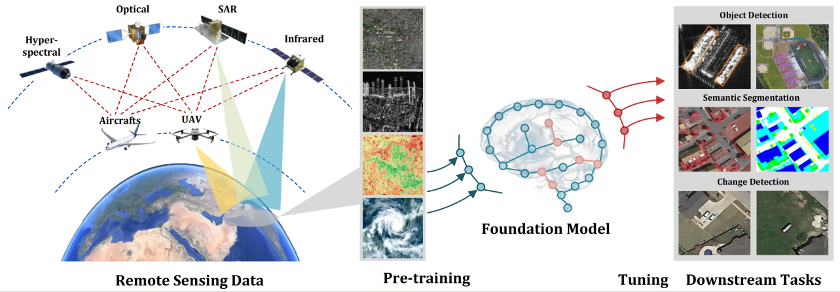

In recent years, the focus in remote sensing image interpretation has shifted from designing model architectures to the paradigm of **"foundation models + fine-tuning"**, which enables:

- **Better transferability**

- **Improved application performance**

- **Lower resource costs**

> **Challenges addressed:** small datasets, long-tail targets, limited computing resources.

**Figure 1** – Role of foundation models and fine-tuning in downstream remote sensing task adaptation.

---

## **Technology Evolution Timeline**

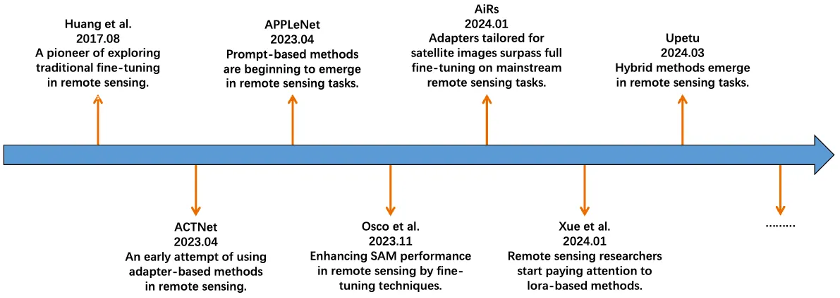

- **Early Stage:** Full-parameter fine-tuning for cross-task transfer.

- **PEFT Stage:** Low-cost adaptation methods like Adapters, Prompt Tuning, and LoRA.

- **Hybrid Stage:** Combining multiple PEFT techniques for **multi-modality** and **multi-task** adaptability.

**Figure 2** – Timeline of representative remote sensing fine-tuning technologies.

---

## **Research Review by Leading Institutions**

Researchers from **Tsinghua**, **Nankai**, **Hunan**, and **Wuhan** universities and the **Chinese Academy of Sciences (CAS)** trace the progression from traditional fine-tuning to modern hybrid PEFT paradigms.

**Paper:** [https://ieeexplore.ieee.org/document/11119145](https://ieeexplore.ieee.org/document/11119145)

**Code:** [https://github.com/DongshuoYin/Remote-Sensing-Tuning-A-Survey](https://github.com/DongshuoYin/Remote-Sensing-Tuning-A-Survey)

---

## **Six Core Fine-Tuning Paradigms**

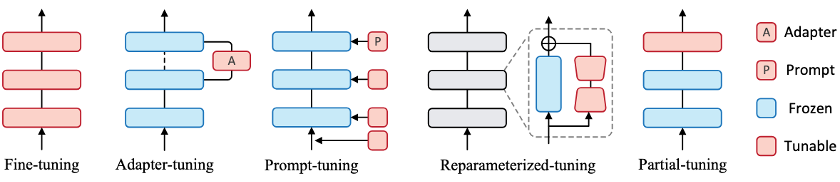

**Figure 3** – Representative paradigms of parameter-efficient fine-tuning.

1. **Adapter Tuning** – Insert lightweight trainable modules into a frozen model.

2. **Prompt Tuning** – Learn prompt vectors that steer a frozen model.

3. **Reparameterized Tuning (e.g., LoRA)** – Learn a low-rank decomposition of the weight update to minimize trainable parameters; the update can be merged into the original weights after training.

4. **Hybrid Tuning** – Combine two or more approaches for greater flexibility.

5. **Partial Tuning** – Fine-tune only selected layers of the model.

6. **Improved Tuning** – Enhance full-parameter fine-tuning with new training strategies and loss functions.
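
The three core PEFT mechanisms, and a hybrid that stacks two of them, can be sketched on a single frozen linear layer in NumPy. This is a toy illustration under assumed sizes: all names (`frozen_linear`, `adapter_forward`, and so on) are hypothetical, the ReLU bottleneck and the `ALPHA / R` scaling are common conventions rather than details from the survey, and training by backpropagation is omitted.

```python
import numpy as np

rng = np.random.default_rng(0)
D, R = 16, 4  # toy hidden size and adapter bottleneck / LoRA rank (assumed)

# Frozen pretrained weight: no PEFT variant below ever modifies W.
W = rng.standard_normal((D, D))


def frozen_linear(x: np.ndarray) -> np.ndarray:
    return x @ W.T


# 1) Adapter tuning: a trainable bottleneck added residually after the frozen layer.
W_down = rng.standard_normal((R, D)) * 0.01
W_up = np.zeros((D, R))  # zero init: the adapter adds nothing before training


def adapter_forward(x: np.ndarray) -> np.ndarray:
    h = frozen_linear(x)
    return h + np.maximum(h @ W_down.T, 0.0) @ W_up.T  # ReLU bottleneck


# 2) Prompt tuning: learnable prompt vectors prepended to the input tokens.
N_PROMPTS = 3
P = rng.standard_normal((N_PROMPTS, D)) * 0.01


def prompt_forward(tokens: np.ndarray) -> np.ndarray:
    return frozen_linear(np.concatenate([P, tokens], axis=0))


# 3) Reparameterized tuning (LoRA): the weight update is the low-rank product B @ A.
ALPHA = 8.0
A = rng.standard_normal((R, D)) * 0.01
B = rng.standard_normal((D, R)) * 0.01  # LoRA normally zero-inits B; random here so the merge is non-trivial


def lora_forward(x: np.ndarray) -> np.ndarray:
    return frozen_linear(x) + (ALPHA / R) * (x @ A.T) @ B.T


def merged_weight() -> np.ndarray:
    """Fold the low-rank update into W after training, so inference costs nothing extra."""
    return W + (ALPHA / R) * (B @ A)


# 4) Hybrid tuning: apply an adapter on top of a LoRA-tuned layer.
def hybrid_forward(x: np.ndarray) -> np.ndarray:
    h = lora_forward(x)
    return h + np.maximum(h @ W_down.T, 0.0) @ W_up.T
```

For example, with `x = np.random.default_rng(1).standard_normal((5, D))`, `lora_forward(x)` matches `x @ merged_weight().T`, which is why reparameterized methods add no inference latency once merged. Only `W_down`, `W_up`, `P`, `A`, and `B` would be trained; `W` stays frozen throughout.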

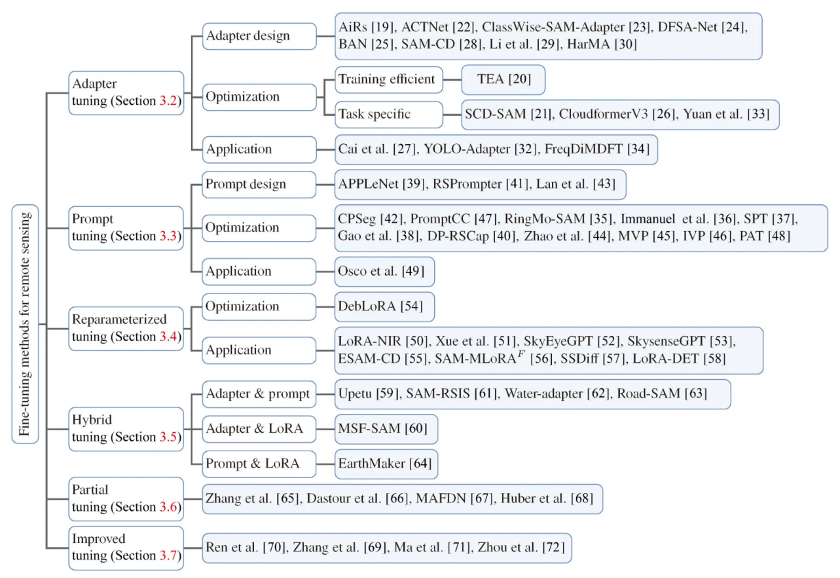

**Figure 4** – Overview of remote sensing fine-tuning techniques.

---

## **Representative Applications in Remote Sensing**

- **Adapter Tuning**

  - **AiRs**: Spatial Context Adapters (SCA) and Semantic Response Adapters (SRA).

  - **SCD-SAM**: Improved handling of overlapping patches and multi-scale semantic integration.

- **Prompt Tuning**

  - **RSPrompter**: Chain-of-Thought prompting for multi-step reasoning in context-rich images.

- **Reparameterized Tuning**

  - **LoRA-NIR**: Optimized for near-infrared imagery.

  - **LoRA-SAM**: Applied to segmenting roads and water bodies.

- **Hybrid Tuning**

  - **Upetu**: Integrates multiple tuning techniques.

  - **MSF-SAM**: Combines Adapter and LoRA.

- **Improved Tuning**

  - Metric discriminative loss combined with knowledge distillation to reduce catastrophic forgetting.
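
The article does not spell out the loss used to curb catastrophic forgetting. As a hedged illustration only, a common generic recipe adds Hinton-style knowledge distillation from the frozen pretrained model (the teacher) to the task cross-entropy; the metric discriminative term is not reproduced, and the function names, `lam`, and `T` are assumptions.

```python
import numpy as np


def log_softmax(logits, T: float = 1.0) -> np.ndarray:
    """Numerically stable log-softmax over the last axis, with temperature T."""
    z = np.asarray(logits, dtype=float) / T
    z = z - z.max(axis=-1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))


def cross_entropy(logits, labels) -> float:
    """Mean task loss on the new (e.g. remote sensing) labels."""
    lp = log_softmax(logits)
    return float(-lp[np.arange(lp.shape[0]), np.asarray(labels)].mean())


def distillation_kl(student_logits, teacher_logits, T: float = 2.0) -> float:
    """KL(teacher || student) on temperature-softened outputs, scaled by T^2."""
    log_p_s = log_softmax(student_logits, T)
    log_p_t = log_softmax(teacher_logits, T)
    kl = (np.exp(log_p_t) * (log_p_t - log_p_s)).sum(axis=-1)
    return float(T * T * kl.mean())


def fine_tune_loss(student_logits, teacher_logits, labels, lam: float = 0.5, T: float = 2.0) -> float:
    """Task loss plus a penalty for drifting away from the pretrained model's predictions."""
    return cross_entropy(student_logits, labels) + lam * distillation_kl(student_logits, teacher_logits, T)
```

Raising `lam` keeps the fine-tuned model closer to the teacher (less forgetting) at the cost of fitting the new task less aggressively; with `lam=0.0` the loss reduces to plain cross-entropy.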

---

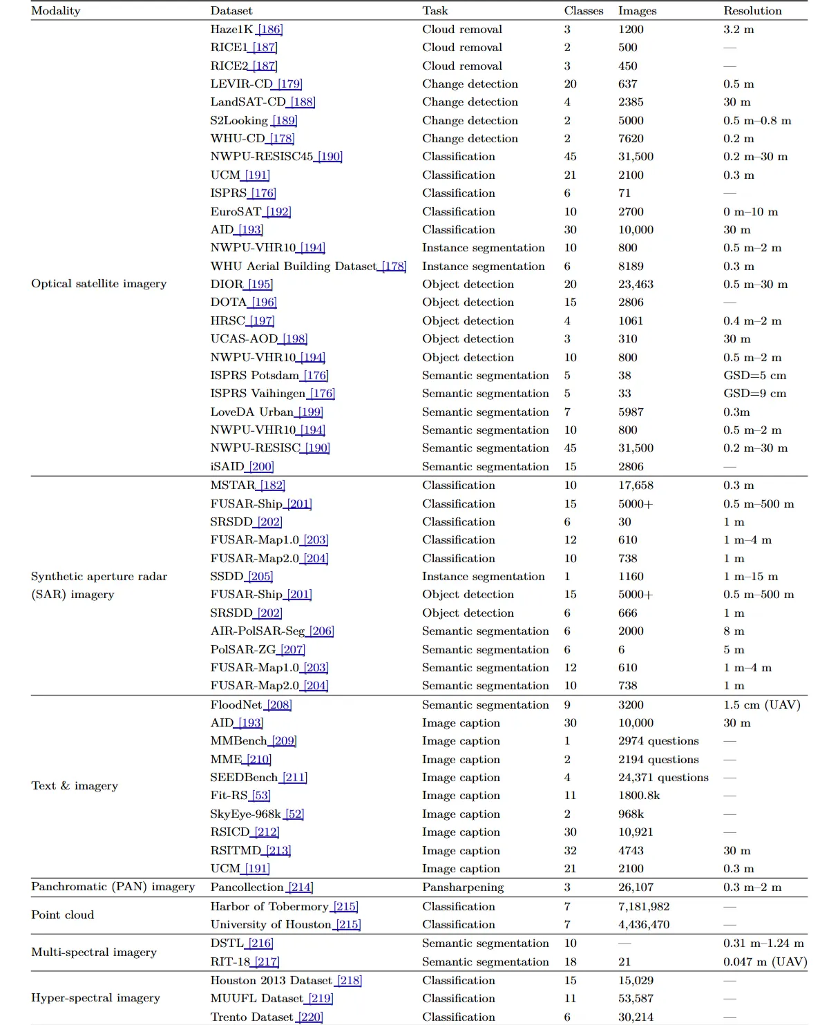

## **Datasets for Fine-Tuning**

**Table 1** – Summary of datasets for remote sensing fine-tuning.

The datasets span:

- **Modalities:** Optical, SAR, Hyperspectral, Point Cloud, Text-Image multimodal.

- **Tasks:** Dehazing, Change Detection, Segmentation, Detection, Captioning.

---

## **Challenges & Future Research Directions**

**Primary Focus Areas**

- **Efficient few-shot fine-tuning** for rare targets.

- **New application domains:** super-resolution, dehazing, object tracking.

- **Optimizing remote sensing foundation models (RSFMs)** to improve downstream performance.

- **Exploiting characteristics specific to remote sensing data** in custom fine-tuning designs.

**Forward-Looking Strategies**

- Introduce **new PEFT paradigms** (structured sparsity, quantization-aware tuning).

- Explore **multi-approach hybrid tuning** combinations (Adapter + LoRA + Prompt).

- Develop a **theory of fine-tuning** for remote sensing.

- **Optimize training configurations** (learning rate, layer count, optimizer choice).

- Research **scaling laws** linking model size, data volume, and performance.
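
For orientation, one parametric form widely used in language-model scaling studies (not proposed by this survey) models loss as a function of parameter count $N$ and training-data size $D$:

$$
L(N, D) \approx E + \frac{A}{N^{\alpha}} + \frac{B}{D^{\beta}}
$$

Here $E$ is the irreducible loss and $A$, $B$, $\alpha$, $\beta$ are constants fitted to experiments. Whether a law of this shape holds for remote sensing fine-tuning, with $D$ counting labeled images or samples, is the kind of open question this research direction targets.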

---

## **Conclusion**

**Key Takeaway:** The combination of **foundation models + fine-tuning** is setting new standards for efficiency in remote sensing. Understanding the evolution from full-parameter to hybrid fine-tuning helps researchers navigate future innovations.


**Reference:**

[https://ieeexplore.ieee.org/document/11119145](https://ieeexplore.ieee.org/document/11119145)
