# AI-Powered Group Chat Understanding for Community Operations

## 1. Why We Need AI for Group Chat Analysis

At **Bilibili**, our operations team manages numerous creator group chats, including category support groups, growth bootcamps, specialized forums, and Q&A channels. Together, these groups generate a **massive volume of messages every day**.

Manual tracking is:

- Inefficient

- Prone to missing critical issues

- Limited by keyword-only analysis

- Unable to detect context, implicit meaning, or emerging topics

- Hard to analyze in real time, because the resulting feedback is unstructured

**Our Goal:**

Build an **AI-driven system** that:

- Automatically reads community conversation content

- Understands creator intentions and sentiment

- Produces **structured insights, alerts, and daily and weekly reports**

---

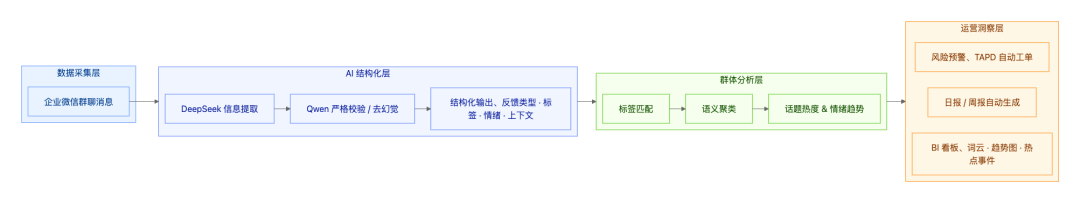

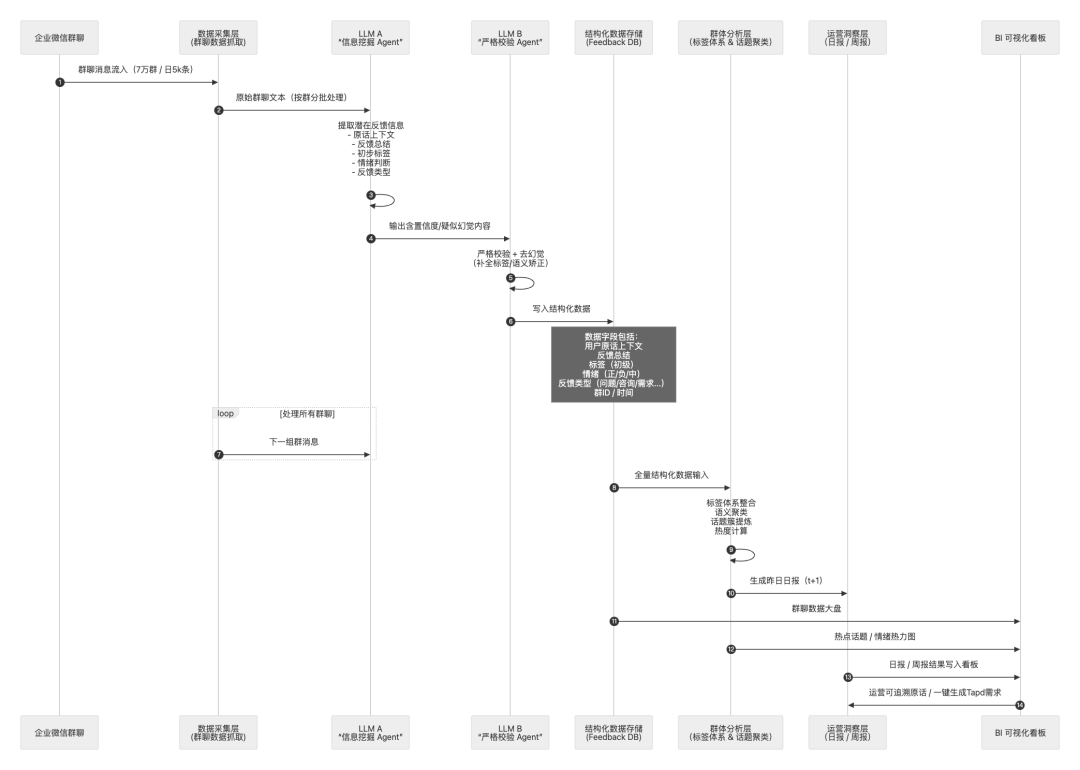

## 2. System Architecture: LLM-Driven Community “AI Middleware”

We designed a four-layer pipeline:

**Data Collection → AI Structuring → Group Analysis → Operational Insight**

### Key Innovations

- Multi-LLM Agent Pipeline

- Governable, reusable, evolvable **Prompt Engineering** system

- Semantic analysis architecture with controllable outputs
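To make the four-layer flow (Data Collection → AI Structuring → Group Analysis → Operational Insight) concrete, here is a minimal Python sketch of data moving through it. Everything in it is an illustrative assumption: the `Message`/`Feedback` types, the stage function names, and the `Extractor` callable that stands in for the real LLM call are hypothetical, not the production system.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass
class Message:
    group_id: str
    sender: str
    text: str


@dataclass
class Feedback:
    group_id: str
    quote: str  # verbatim span taken from the source message
    topic: str


# Hypothetical contract for the LLM-backed structuring step:
# one raw message in, zero or more structured feedback items out.
Extractor = Callable[[Message], list[Feedback]]


def collect(raw: Iterable[tuple[str, str, str]]) -> list[Message]:
    """Layer 1, data collection: normalize raw chat records and drop empty ones."""
    return [Message(g, s, t.strip()) for g, s, t in raw if t.strip()]


def structure(messages: list[Message], extract: Extractor) -> list[Feedback]:
    """Layer 2, AI structuring: run the extractor over every message."""
    return [fb for m in messages for fb in extract(m)]


def analyze(items: list[Feedback]) -> Counter:
    """Layer 3, group analysis: feedback volume per topic."""
    return Counter(fb.topic for fb in items)


def report(counts: Counter, top_n: int = 3) -> str:
    """Layer 4, operational insight: a one-line hotspot digest."""
    return "; ".join(f"{topic}: {n}" for topic, n in counts.most_common(top_n))


# Usage: a toy keyword extractor stands in for the LLM purely for illustration.
def toy_extract(m: Message) -> list[Feedback]:
    return [Feedback(m.group_id, m.text, "upload")] if "upload" in m.text else []


raw = [("g1", "alice", "upload keeps failing"), ("g2", "bob", "   "), ("g2", "carol", "upload is slow")]
print(report(analyze(structure(collect(raw), toy_extract))))  # upload: 2
```

Keeping each layer a plain function with typed inputs and outputs is what lets a layer (for example, the extractor model) be swapped without touching the others.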

---

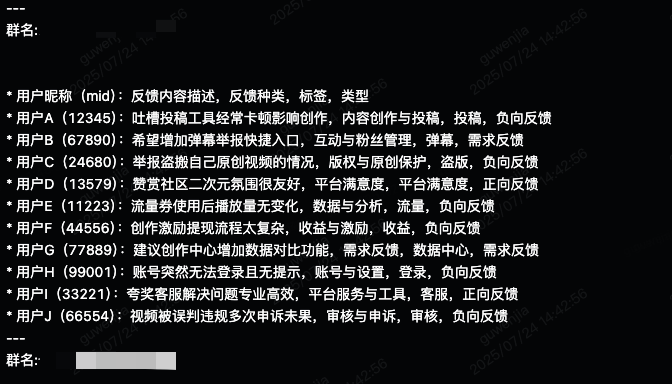

## 3. Layered Prompt Engineering — Balancing Recall and Precision

We split model processing into **four prompt layers**:

1. **Information Extraction Layer**

   - High-recall extraction of all possible user feedback

   - Fixed-schema outputs for **structural stability**

   - Embedded business taxonomy (feedback types, tags, emotion classes)

2. **Content Governance Layer**

   - Hallucination removal, noise reduction, and high-precision validation

   - Fuzzy-sentence filtering plus **Emotion × Intent** dual validation

   - Merging duplicates, removing weak feedback, and excluding test/admin messages

3. **Semantic Clustering Layer**

   - Automatic topic grouping based on LLM semantic understanding

   - Unified tag naming so that similar topics are not split

   - Tags merged or created dynamically based on meaning

4. **Insight Generation Layer**

   - Hotspot summaries of up to 100 characters

   - Automated daily/weekly reports with trend comparison and risk detection

**Outcome:** A controllable, explainable structured output pipeline.
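The fixed schema of the extraction layer and the filters of the governance layer can be sketched together. This is a minimal illustration assuming the extraction model returns a JSON array; the field names, taxonomy values, excluded-account list, and the length threshold used for "weak" feedback are hypothetical placeholders, not the real business taxonomy. Deduplication here is exact-match after normalization, a simplification of "merge duplicates".

```python
import json

# Hypothetical taxonomy; the real feedback types and emotion classes are business-specific.
FEEDBACK_TYPES = {"bug", "feature_request", "complaint", "question"}
EMOTIONS = {"positive", "neutral", "negative"}
REQUIRED_FIELDS = {"msg_id", "sender", "quote", "type", "emotion"}


def parse_extraction(raw_json: str) -> list[dict]:
    """Extraction layer: keep only items that fit the fixed schema and taxonomy."""
    try:
        items = json.loads(raw_json)
    except json.JSONDecodeError:
        return []  # malformed output yields no feedback rather than a guess
    if not isinstance(items, list):
        return []
    return [
        it for it in items
        if isinstance(it, dict)
        and REQUIRED_FIELDS.issubset(it)
        and it["type"] in FEEDBACK_TYPES
        and it["emotion"] in EMOTIONS
    ]


def govern(items: list[dict], excluded_senders: set[str], min_len: int = 6) -> list[dict]:
    """Governance layer: drop test/admin senders, weak feedback, and duplicates."""
    seen: set[str] = set()
    kept = []
    for it in items:
        if it["sender"] in excluded_senders:
            continue  # test or admin account
        quote = it["quote"].strip()
        if len(quote) < min_len:
            continue  # too short to be actionable (assumed heuristic for "weak")
        key = " ".join(quote.lower().split())  # normalize case and whitespace
        if key in seen:
            continue  # exact duplicate of an earlier item after normalization
        seen.add(key)
        kept.append(it)
    return kept


raw = json.dumps([
    {"msg_id": 1, "sender": "u1", "quote": "Upload fails on 4K video", "type": "bug", "emotion": "negative"},
    {"msg_id": 2, "sender": "u2", "quote": "upload fails on 4K  video", "type": "bug", "emotion": "negative"},
    {"msg_id": 3, "sender": "admin", "quote": "Please fill in the survey", "type": "question", "emotion": "neutral"},
    {"msg_id": 4, "sender": "u3", "quote": "ok", "type": "complaint", "emotion": "neutral"},
    {"msg_id": 5, "sender": "u4", "quote": "Love the new editor", "type": "praise", "emotion": "positive"},
])
kept = govern(parse_extraction(raw), excluded_senders={"admin"})
print([it["msg_id"] for it in kept])  # [1]
```

Item 5 fails the schema step (its type is outside the taxonomy); items 2, 3, and 4 are removed by governance as a duplicate, an admin message, and weak feedback respectively.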

---

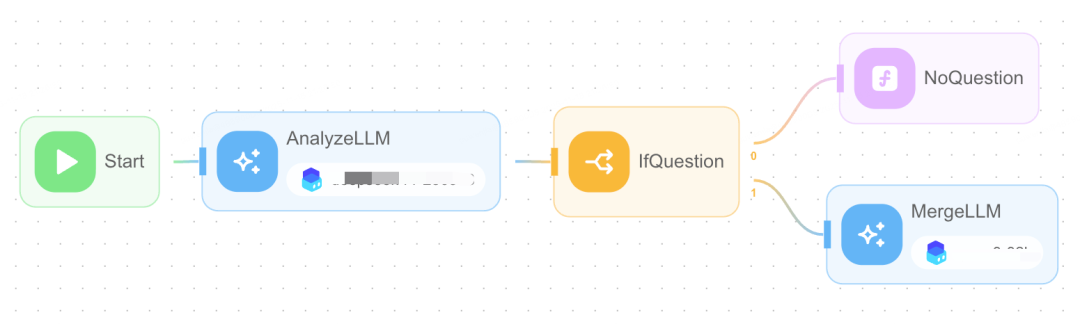

## 4. Dual-Model Collaboration — Precision vs Cost

Community messages are **informal and context-heavy**, so a single model struggles to deliver both high recall and high precision.

Solution:

- **LLM A:** High-recall extraction

- **LLM B:** High-precision review that reduces hallucinations

> Models are anonymized and described only by capability.
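A minimal sketch of the A → B hand-off, with both models abstracted as plain "prompt in, text out" callables because the actual models are anonymized. The `Model` type, the prompt wording, the JSON contract, and the assumption that LLM B is the costlier model are all illustrative, not taken from the production system.

```python
import json
from typing import Callable

# A model is abstracted as "prompt in, text out"; the real models are anonymized.
Model = Callable[[str], str]

# Hypothetical prompt templates; the production prompts are not published.
RECALL_PROMPT = (
    "Extract every possible piece of user feedback from the messages below. "
    'Return a JSON list of objects like {{"msg_id": ..., "quote": ...}}.\n'
    "Messages:\n{chat}"
)
REVIEW_PROMPT = (
    "Check each candidate against the messages. Keep only candidates whose quote "
    "appears verbatim in the cited message. Return a JSON list.\n"
    "Messages:\n{chat}\nCandidates:\n{candidates}"
)


def two_stage(chat: str, model_a: Model, model_b: Model) -> list[dict]:
    """LLM A maximizes recall; LLM B reviews only A's candidates for precision."""
    candidates = json.loads(model_a(RECALL_PROMPT.format(chat=chat)))
    if not candidates:
        # Nothing to review: skip the (assumed costlier) precision model entirely.
        return []
    reviewed = model_b(REVIEW_PROMPT.format(chat=chat, candidates=json.dumps(candidates)))
    return json.loads(reviewed)


# Usage with stub models that record which stages were called.
calls: list[str] = []


def stub_a(prompt: str) -> str:
    calls.append("A")
    return '[{"msg_id": "m1", "quote": "upload fails"}, {"msg_id": "m9", "quote": "invented"}]'


def stub_b(prompt: str) -> str:
    calls.append("B")
    return '[{"msg_id": "m1", "quote": "upload fails"}]'


print(two_stage("m1: upload fails again", stub_a, stub_b), calls)
# [{'msg_id': 'm1', 'quote': 'upload fails'}] ['A', 'B']
```

Because LLM B only ever sees A's candidates, its cost scales with the number of candidates rather than with raw message volume, and an empty extraction never reaches it.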

---

## 5. Real-World Challenge — Model Hallucination

Early in development, with a single model, we saw cases like this:

- **Reality:** No valid feedback was submitted

- **Output:** Dozens of fabricated feedback items

**Impact:** Fabricated feedback can lead to **wrong business decisions**.

---

### Anti-Hallucination Strategy: Two-Stage Review

Pipeline: **LLM A → LLM B**, with strict rules:

- No invented user quotes

- Output “No feedback” when there is no source text

- Every output item must map **1-to-1** to raw text

- Ambiguous tone is flagged for review

- Correct tags and fields are mandatory

**Results:**

- Hallucination rate: **8–12% → < 1%**

- All feedback traceable to origin
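LLM B applies these rules through its prompt, but several of them can also be double-checked deterministically afterwards. A minimal sketch, assuming each item carries a `msg_id`, a verbatim `quote`, and a `tag`, and using a hypothetical list of hedging phrases to approximate "ambiguous tone" (the source does not say how ambiguity is detected):

```python
from dataclasses import dataclass, field
from typing import Union

# Hypothetical cues for "ambiguous tone"; a real system would tune or learn these.
HEDGES = ("maybe", "not sure", "i guess", "kind of")
REQUIRED = ("msg_id", "quote", "tag")


@dataclass
class ReviewResult:
    accepted: list[dict] = field(default_factory=list)
    needs_review: list[dict] = field(default_factory=list)  # grounded, but tone is ambiguous
    rejected: list[dict] = field(default_factory=list)      # missing fields or ungrounded quote


def enforce_rules(items: list[dict], source: dict[str, str]) -> ReviewResult:
    """Accept an item only if it cites an existing source message and quotes it verbatim."""
    result = ReviewResult()
    for it in items:
        if any(not it.get(k) for k in REQUIRED):
            result.rejected.append(it)  # tags/fields are mandatory
            continue
        text = source.get(it["msg_id"])
        if text is None or it["quote"] not in text:
            result.rejected.append(it)  # no source text, or an invented quote
            continue
        if any(h in it["quote"].lower() for h in HEDGES):
            result.needs_review.append(it)  # route to a human reviewer
        else:
            result.accepted.append(it)
    return result


def render(result: ReviewResult) -> Union[list[dict], str]:
    """Emit "No feedback" instead of an empty (or invented) list."""
    return result.accepted if result.accepted else "No feedback"


source = {"m1": "Upload fails on 4K video again", "m2": "Maybe the new editor is slower?"}
items = [
    {"msg_id": "m1", "quote": "Upload fails on 4K video", "tag": "upload"},
    {"msg_id": "m2", "quote": "Maybe the new editor is slower", "tag": "editor"},
    {"msg_id": "m3", "quote": "Please add dark mode", "tag": "feature"},  # m3 does not exist
]
r = enforce_rules(items, source)
print(len(r.accepted), len(r.needs_review), len(r.rejected))  # 1 1 1
print(render(enforce_rules([], source)))  # No feedback
```

The verbatim substring check is deliberately strict: it trades a few false rejections (for example, when the model lightly paraphrases a quote) for the guarantee that every accepted item is traceable to its source message.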

---

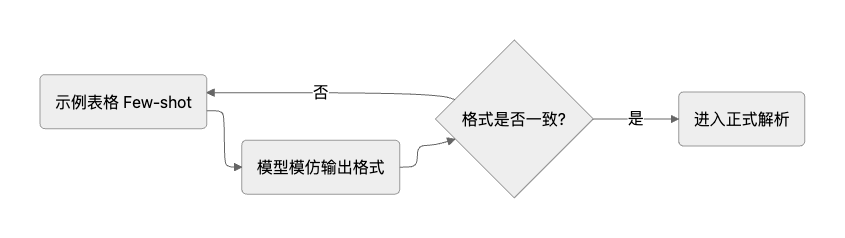

## 6. Structured Stability via Few-Shot Prompting

**Problem:** The format of LLM table outputs drifts.

**Solution:**

- Provide 2–3 standard table examples in the prompt

- Require exact format match

- Minor correction hints fix occasional drift

**Benefits:**

- No extra validator

- Low cost

- High stability
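A minimal sketch of the few-shot setup, assuming a four-column Markdown table; the column names, example rows, and hint wording are hypothetical. Because the model is held to the example format, downstream parsing can stay a few lines of string splitting.

```python
# Hypothetical column layout and reference tables for illustration only.
HEADER = "| Topic | Summary | Hotness | Feedback IDs |"
SEPARATOR = "|---|---|---|---|"
EXAMPLES = [
    f"{HEADER}\n{SEPARATOR}\n| Upload failures | 4K uploads time out | 87 | 12,15,19 |",
    f"{HEADER}\n{SEPARATOR}\n| Revenue share | Creators ask about the new split | 42 | 3,8 |",
]


def build_prompt(feedback: str, drift_hint: str = "") -> str:
    """Assemble a few-shot prompt that demands an exact format match."""
    shots = "\n\n".join(f"Example {i}:\n{table}" for i, table in enumerate(EXAMPLES, 1))
    parts = [
        "Summarize the feedback below as a Markdown table.",
        "Match the examples exactly: same columns, same order, no text outside the table.",
        shots,
    ]
    if drift_hint:
        parts.append(f"Note: {drift_hint}")  # short correction hint for a known drift pattern
    parts.append(f"Feedback:\n{feedback}")
    return "\n\n".join(parts)


def parse_table(text: str) -> list[list[str]]:
    """Split a stable-format table into rows; returns [] if the header does not match."""
    lines = [ln.strip() for ln in text.strip().splitlines()]
    if len(lines) < 2 or lines[0] != HEADER:
        return []
    return [[cell.strip() for cell in ln.strip("|").split("|")] for ln in lines[2:] if ln.startswith("|")]


prompt = build_prompt("id=12: 4K upload timed out", drift_hint="Do not add a Notes column.")
print(parse_table(EXAMPLES[0]))  # [['Upload failures', '4K uploads time out', '87', '12,15,19']]
```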

---

## 7. Semantic Clustering That Adapts in Real-Time

Language changes fast in creator groups:

- Multiple phrasings for the same issue

- New slang appearing constantly

- Aliases & abbreviations common

Our approach:

- LLM-based semantic similarity, **not keyword matching**

- Unified topic labels

- Automatic creation of new topics when needed

**Example Prompt Tasks:**

- Topic grouping

- Concise “Weibo-style” label naming

- Event summaries

- Hotness scoring

- Feedback ID mapping

- Table output sorted by hotness
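The assign-or-create behavior can be illustrated with embedding similarity. Note that this sketch swaps in a different technique: the pipeline described above relies on LLM-based semantic judgment, whereas this sketch assumes each feedback item already has an embedding vector and uses greedy single-pass clustering with a cosine-similarity threshold (0.8, an arbitrary assumption). Hotness is simplified to the member count.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def cluster(items: list[tuple[str, list[float]]], threshold: float = 0.8) -> list[dict]:
    """Greedy single-pass clustering: join the most similar topic or open a new one."""
    topics: list[dict] = []
    for fid, vec in items:
        best, best_sim = None, threshold
        for t in topics:
            sim = cosine(vec, t["centroid"])
            if sim >= best_sim:
                best, best_sim = t, sim
        if best is None:
            topics.append({"centroid": list(vec), "ids": [fid]})  # auto-create a new topic
        else:
            best["ids"].append(fid)
            n = len(best["ids"])
            # Running mean keeps the centroid representative of all members.
            best["centroid"] = [(c * (n - 1) + v) / n for c, v in zip(best["centroid"], vec)]
    for t in topics:
        t["hotness"] = len(t["ids"])  # simplified hotness: number of feedback items
    return sorted(topics, key=lambda t: t["hotness"], reverse=True)


items = [("f1", [1.0, 0.0]), ("f2", [0.0, 1.0]), ("f3", [0.9, 0.1]), ("f4", [0.95, 0.05])]
print([(t["ids"], t["hotness"]) for t in cluster(items)])
# [(['f1', 'f3', 'f4'], 3), (['f2'], 1)]
```

A single pass keeps the cost linear in the number of topics per item, which suits a stream of daily messages; the trade-off is that results depend on arrival order, one reason an LLM-based review of topic merges remains useful.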

---

## 8. Risk Control Early Warning

We combine clustering data with metrics to detect risks:

- Volume changes

- Growth rate spikes

- Negative sentiment ratio

- Sudden emotional spikes

- Cross-group signal consistency

When a risk is detected, we:

- Classify it as an **emergency**

- Assign a risk level
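A minimal rule-based sketch of how the five signals might be combined into an emergency flag and a risk level. All thresholds, the signal-counting rule, and the level names are hypothetical; the source does not specify them.

```python
from dataclasses import dataclass


@dataclass
class TopicStats:
    today: int                 # feedback volume for the topic today
    yesterday: int             # feedback volume yesterday
    negative_today: int        # items classified as negative today
    baseline_neg_ratio: float  # the topic's historical negative ratio
    groups: int                # distinct groups raising the topic today


def assess(s: TopicStats) -> tuple[bool, str]:
    """Count triggered signals; return (is_emergency, risk_level). Thresholds are illustrative."""
    growth = (s.today - s.yesterday) / max(s.yesterday, 1)
    neg_ratio = s.negative_today / s.today if s.today else 0.0
    signals = [
        s.today - s.yesterday >= 20,              # volume change
        growth >= 1.0,                            # growth-rate spike (volume at least doubled)
        neg_ratio >= 0.6,                         # negative sentiment ratio
        neg_ratio - s.baseline_neg_ratio >= 0.3,  # sudden emotional spike vs. baseline
        s.groups >= 3,                            # cross-group signal consistency
    ]
    hits = sum(signals)
    level = "high" if hits >= 4 else "medium" if hits == 3 else "low" if hits >= 1 else "none"
    return hits >= 3, level


print(assess(TopicStats(60, 10, 45, 0.2, 5)))  # (True, 'high')
print(assess(TopicStats(12, 10, 2, 0.2, 1)))   # (False, 'none')
```

Requiring several independent signals before raising an emergency keeps a single noisy metric, such as one heated thread in one group, from triggering an alert on its own.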

---

## 9. End-to-End Process Flow

---

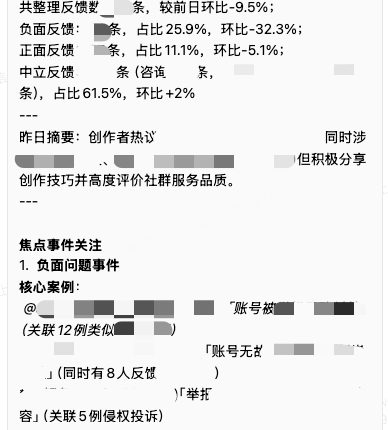

## 10. Business Impact

**Before:** ~50 valid feedback items/day (manual)

**After:** ~600 valid feedback items/day (AI) → roughly **12× coverage increase**

Additional benefits:

- Daily/weekly briefs for ops/product teams

- Requirements automatically created in TAPD

- Reduced communication overhead

- Sentiment, topic, and trend data visualized in BI dashboards

---

## 11. Summary of Advantages

- **Efficiency Boost:** Auto-analysis frees time for deep issue review

- **Full Coverage:** Capture implicit/weak/new signals with LLM semantics

- **Emotion Quantification:** Measurable sentiment → actionable alerts

- **Topic Aggregation:** Merge repetitive opinions, expose long-tail issues

- **Closed-Loop Workflow:** Seamless from discovery to resolution

---

## 12. Future Outlook

We’ll continue enhancing:

- Risk detection

- Creator support

- Community ecosystem optimization

---


**From manual monitoring → proactive AI insights.**

Our system captures **nuances and early signals**, transforming scattered voices into strategic drivers for product and community growth.