Prompt Chain Pattern in Agent Design: A Divide-and-Conquer Task Decomposition Approach

Launch of the Agentic Design Patterns Chinese Translation Project

Just as Design Patterns was once considered the bible of software engineering, this Agentic Design Patterns book — freely shared by a senior engineering director at Google — is bringing the first systematic set of design principles and best practices to the booming field of AI agents.

Over the next month, I will translate this book using a process of initial AI translation → AI cross-review → manual deep optimization. All translated content will be continuously updated in the open-source project: github.com/ginobefun/agentic-design-patterns-cn.

Authored by Antonio Gulli, with a foreword by Saurabh Tiwary (Google Cloud AI Vice President) and strong recommendation from Marco Argenti (CIO of Goldman Sachs), the book systematically distills 21 core agent design patterns — covering prompt chaining, tool usage, multi-agent collaboration, self-correction, and other key techniques. Even more notably, all royalties are donated to Save the Children, making it a genuine community-driven, charitable work for developers.

If you’d like to understand the book’s background, industry leader insights, and the hierarchical evolution of agents, it’s recommended to first read: Agentic Design Patterns Preface Translation.

---

Overview of the Prompt Chaining Pattern

Among the 21 agent design patterns, the prompt chaining pattern is the most fundamental and important. It lays the foundation for building advanced agent systems capable of planning, reasoning, and executing complex workflows. Here are several key points:

---

1. Core Concept: Divide and Conquer

The prompt chaining pattern, also known as the “pipeline pattern,” applies a divide and conquer strategy — breaking complex tasks into a series of smaller, more manageable sub-problems. Each sub-problem is solved independently through a purpose-designed prompt, with the output of one step passed as input to the next, forming a logically coherent processing pipeline.

- The challenge of a single prompt: Attempting to handle multiple tasks with one complex prompt can lead to the model neglecting instructions, losing context, amplifying early errors, or producing hallucinations.

- Advantages of chaining prompts: Sequential decomposition significantly improves reliability and controllability. Each step becomes simpler and clearer, reducing cognitive load on the model and producing more accurate, dependable outputs.

---

2. The Critical Role of Structured Output

The reliability of prompt chains heavily depends on the integrity of data passed between steps. Specifying a structured output format — such as JSON or XML — is essential. This ensures the data is machine-readable, precisely parsed, and unambiguously injected into the next prompt, thus minimizing errors caused by parsing natural language.

---

3. Seven Practical Application Scenarios

- Information Processing Workflows: document summarization → entity extraction → database query → report generation

- Complex Q&A: question decomposition → independent research → information integration → answer generation

- Data Extraction and Transformation: OCR extraction → data normalization → external computation → result integration

- Content Creation Workflows: topic ideation → outline building → segmented drafting → review & refinement

- Stateful Conversational Agents: intent recognition → state updating → response generation → context maintenance

- Code Generation and Optimization: requirement understanding → pseudocode → initial code → error detection → rewrite optimization

- Multimodal and Multi-step Reasoning: text extraction → label association → table interpretation → integrated output

---

4. Context Engineering: From Prompt to Environment

Context engineering represents a major evolution beyond traditional prompt engineering. Instead of focusing solely on the current input, it encompasses multiple layers of information — system prompts, retrieved documents, tool outputs, user history, and environment state — to build a rich, comprehensive information environment for the AI.

---


Core Principle

Even the most advanced models can perform poorly if the operational view provided to them is limited or poorly structured. Context engineering shifts the focus of tasks from simply answering questions to building a comprehensive operational picture for the agent.

---

5. Practice Frameworks and Tools

Frameworks like LangChain, LangGraph, Crew AI, and Google's Agent Development Kit (ADK) provide structured environments for building and executing multi-step processes.

- LangChain offers basic abstractions for linear sequences.

- LangGraph supports stateful and iterative computation, which is crucial for realizing complex agent behavior.

---

6. When to Use: Rules of Thumb

You should consider using the prompt chaining pattern when the task meets any of the following conditions:

- The task is too complex for a single prompt to handle.

- It involves multiple independent processing steps.

- Steps require interaction with external tools.

- You are building agent systems that require multi-step reasoning and state maintenance.

---

Overview of the Prompt Chaining Pattern

Prompt chaining, also known as the pipeline pattern, is a powerful paradigm for leveraging large language models to handle complex tasks. It avoids attempting to solve a complex problem in a single step, instead employing a divide-and-conquer strategy.

The core idea is to break the problem into a series of smaller, more manageable subproblems. Each subproblem is addressed with a specially designed prompt, and the output from one step becomes the input to the next.

This sequential process is inherently modular and transparent. Breaking complex tasks into independent steps makes each stage easier to understand and debug, thereby making the overall process more robust and explainable. Each step of the chain can be carefully crafted and optimized, focusing on solving a specific aspect of the overall problem, ultimately leading to more precise and targeted outputs.

The fact that the output of one step becomes the input of the next is crucial. This information transfer builds a dependency chain (thus the name “chaining”), where earlier operations’ context and results guide subsequent processing. This allows the model to deepen its understanding step by step, progressively moving toward the desired final solution.

Prompt chaining not only decomposes problems but also integrates external knowledge and tools. Each step can instruct the model to call external systems, APIs, or databases, greatly enriching its knowledge and capabilities beyond its training data. This transforms the model from an isolated entity into a key component of a broader intelligent system.

---

Limitations of a Single Prompt

For complex tasks with multiple subtasks, using a single large prompt is often inefficient. The model may struggle to meet multiple constraints and directives simultaneously, leading to problems such as:

- Ignoring certain instructions.

- Losing initial context.

- Early errors compounding in later steps.

- Context window limitations causing insufficient information.

- Increased cognitive load leading to hallucinations.

For example, asking a model in one call to analyze a market report, summarize key points, identify trends, and draft an email has a high probability of failure. While it might produce a decent summary, it is more likely to make errors when extracting precise data or composing a suitable email.

---

Improving Reliability Through Sequential Decomposition

Prompt chaining greatly improves reliability and control by breaking down complex tasks into focused, sequential workflows. Using the market analysis example, a chain or pipeline might look like this:

- Initial prompt (Summarization):

  - "Please summarize the core findings of the following market research report: [report text]."

  - Here, the model’s sole focus is summarization, increasing accuracy in step one.

- Second prompt (Trend Identification):

  - "Based on the above summary, please identify three major emerging trends and extract specific data supporting each trend: [output from step one]."

  - This prompt is more constrained and builds directly on verified output.

- Third prompt (Email Drafting):

  - "Please draft a concise email for the marketing team summarizing the following trends and their supporting data: [output from step two]."

This decomposition allows finer control over the process. Each step is simpler and clearer, reducing the model’s cognitive load and producing more accurate, reliable final results.
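The three-step chain above can be sketched in plain Python. This is a minimal illustration rather than a definitive implementation: `fake_llm` is a hypothetical stand-in for a real model call, and it records each prompt so the hand-off between steps is visible.

```python
from typing import Callable

# Every prompt sent to the model, in order, so the data flow can be inspected.
calls: list[str] = []

def fake_llm(prompt: str) -> str:
    """Hypothetical stub for a model call; replace with your provider's client."""
    calls.append(prompt)
    return f"[output of call {len(calls)}]"

def run_chain(report_text: str, llm: Callable[[str], str]) -> dict:
    """Summarize, then identify trends, then draft an email, feeding each output forward."""
    summary = llm(
        "Please summarize the core findings of the following market research report:\n"
        + report_text
    )
    trends = llm(
        "Based on the summary below, identify three major emerging trends "
        "and extract specific data supporting each trend:\n" + summary
    )
    email = llm(
        "Please draft a concise email for the marketing team summarizing the "
        "following trends and their supporting data:\n" + trends
    )
    return {"summary": summary, "trends": trends, "email": email}

result = run_chain("(report text goes here)", fake_llm)
print(result["email"])
```

Because each step is an ordinary function call, intermediate results such as `result["summary"]` can be logged or inspected before the next step runs.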

---


This type of modular approach is similar to a computational pipeline: each function performs a specific operation and then passes the result to the next step. To ensure that every task’s response is precise and accurate, we can assign different roles to the model at each stage. For example, in the scenario above, the initial prompt could specify the model as a “Market Analyst,” the subsequent prompt as an “Industry Analyst,” and the third prompt as a “Professional Document Writer.”
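One way to express per-stage roles is to store each stage as data and build a system message from its role. The sketch below assumes a chat-style message format (`role`/`content` dictionaries); `Stage`, `build_messages`, and `fake_chat` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass

@dataclass
class Stage:
    role: str      # persona placed in the system message
    template: str  # user prompt; {previous} is filled with the prior step's output

STAGES = [
    Stage("Market Analyst", "Summarize the core findings of this report:\n{previous}"),
    Stage("Industry Analyst", "Identify three emerging trends with supporting data:\n{previous}"),
    Stage("Professional Document Writer", "Draft a concise email to the marketing team about:\n{previous}"),
]

def build_messages(stage: Stage, previous: str) -> list[dict]:
    """Pair the stage's role with its prompt, injecting the previous output."""
    return [
        {"role": "system", "content": f"Your role: {stage.role}."},
        {"role": "user", "content": stage.template.format(previous=previous)},
    ]

def fake_chat(messages: list[dict]) -> str:
    """Hypothetical stub for a chat model; echoes the persona it was given."""
    persona = messages[0]["content"]
    return f"({persona}) processed {len(messages[1]['content'])} characters"

def run_pipeline(initial_input: str) -> str:
    """Run every stage in order; each output becomes the next stage's input."""
    output = initial_input
    for stage in STAGES:
        output = fake_chat(build_messages(stage, output))
    return output

print(run_pipeline("(report text goes here)"))
```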

Role of Structured Output:

The reliability of a prompt chain heavily depends on the integrity of data passed between steps. If a prompt’s output is ambiguous or poorly formatted, subsequent prompts may fail due to incorrect input. To mitigate this issue, specifying a structured output format—such as JSON or XML—is critical.

For example, the output of the trend identification step could be formatted as a JSON object:

    {
      "trends": [
        {
          "trend_name": "AI-Powered Personalization",
          "supporting_data": "73% of consumers prefer to do business with brands that use personal information to make their shopping experiences more relevant."
        },
        {
          "trend_name": "Sustainable and Ethical Brands",
          "supporting_data": "Sales of products with ESG-related claims grew 28% over the last five years, compared to 20% for products without."
        }
      ]
    }

This structured format ensures data is machine-readable, can be parsed precisely, and inserted unambiguously into the next prompt. It reduces errors that can arise from parsing natural language, making it a vital step in building robust multi-step large language model applications.
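A short sketch of how a chain might consume such output: parse the JSON, check that it has the expected shape, and only then build the next prompt. The function names and the exact validation rules here are assumptions for illustration; the key names come from the example above.

```python
import json

REQUIRED_KEYS = {"trend_name", "supporting_data"}

def parse_trends(raw: str) -> list[dict]:
    """Parse the model's JSON and fail fast if the expected shape is missing."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"Step output is not valid JSON: {exc}") from exc
    trends = data.get("trends") if isinstance(data, dict) else None
    if not isinstance(trends, list) or not trends:
        raise ValueError("Expected a non-empty 'trends' list")
    for item in trends:
        if not isinstance(item, dict):
            raise ValueError("Each trend must be a JSON object")
        missing = REQUIRED_KEYS - set(item)
        if missing:
            raise ValueError(f"Trend is missing keys: {sorted(missing)}")
    return trends

def build_email_prompt(trends: list[dict]) -> str:
    """Inject the validated fields into the next prompt in the chain."""
    bullets = "\n".join(f"- {t['trend_name']}: {t['supporting_data']}" for t in trends)
    return "Draft a concise email for the marketing team covering these trends:\n" + bullets

raw_output = json.dumps({
    "trends": [
        {"trend_name": "AI-Powered Personalization",
         "supporting_data": "73% of consumers prefer brands that personalize."},
        {"trend_name": "Sustainable and Ethical Brands",
         "supporting_data": "ESG-claim products grew 28% vs. 20% for others."},
    ]
})
print(build_email_prompt(parse_trends(raw_output)))
```

Raising an error at the boundary keeps a malformed step output from silently corrupting every later step.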

---

Practical Applications and Use Cases

Prompt chains are a general pattern that can be applied across various scenarios for building intelligent agent systems. Their core utility lies in breaking complex problems into sequential, manageable steps. Some practical applications include:

1. Information Processing Workflows:

Many tasks involve multiple transformations of raw input data. For example, summarizing a document, extracting key entities, then using those entities to query a database or generate a report. A sample prompt chain could be:

- Prompt 1: Extract text content from a given URL or document.

- Prompt 2: Summarize the cleaned text.

- Prompt 3: Extract specific entities (such as names, dates, locations) from the summary or original text.

- Prompt 4: Search the internal knowledge base using these entities.

- Prompt 5: Combine the summary, extracted entities, and search results to create the final report.

This method is widely used in automated content analysis, AI-powered research assistant development, and complex report generation.

2. Complex Question Answering:

Answering questions that require multi-step reasoning or information retrieval is a typical application of prompt chains. For example:

“What were the primary causes of the 1929 stock market crash? What government policies responded to it?”

- Prompt 1: Identify the core sub-questions in the user query (crash causes, government countermeasures).

- Prompt 2: Research or retrieve information about the causes of the 1929 crash.

- Prompt 3: Research or retrieve information about government countermeasures to the crash.

- Prompt 4: Integrate the information from steps 2 and 3 into a coherent answer to the original question.

This sequential processing method is key to building AI systems capable of multi-step reasoning and information integration. When a question cannot be answered with a single piece of information but instead requires a sequence of logical steps or data from multiple sources, this pattern becomes indispensable.

For instance, a research agent generating a comprehensive report on a specific topic might execute a mixed computational workflow. First, the system retrieves a wide array of relevant articles. Then, key information must be extracted from each article—a task that can be performed concurrently across all sources, as these extraction jobs are independent. This stage is well-suited to parallel processing, maximizing efficiency.

---


However, once each extraction task is completed, the entire process shifts to sequential execution. The system must first gather and integrate all extracted data, then synthesize it into a logically coherent first draft, and finally review and refine that draft to produce the final report. Each subsequent stage logically depends on the successful completion of the previous one, forming a tightly linked chain. This is where the prompt chain pattern comes into play: the gathered data becomes the input for the synthesis step, and the synthesized text becomes the input for the final review step. Therefore, complex workflows often adopt a hybrid model: independent data collection tasks are processed in parallel, whereas integration and refinement steps with clear dependencies use prompt chaining.
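The hybrid model described here can be sketched with the standard library: independent extraction jobs run concurrently, then synthesis and review run in sequence. The three step functions are hypothetical stubs standing in for model calls.

```python
from concurrent.futures import ThreadPoolExecutor

def extract_key_points(article: str) -> str:
    """Stub for a per-article extraction prompt: keep the first sentence."""
    return article.split(".")[0].strip()

def synthesize(points: list[str]) -> str:
    """Stub for the synthesis prompt: join the extracted points into a draft."""
    return " ".join(f"{p}." for p in points)

def review(draft: str) -> str:
    """Stub for the final review prompt."""
    return draft.strip()

def build_report(articles: list[str]) -> str:
    # Parallel stage: extraction jobs do not depend on each other.
    # pool.map returns results in the same order as the inputs.
    with ThreadPoolExecutor(max_workers=4) as pool:
        points = list(pool.map(extract_key_points, articles))
    # Sequential stage: each step needs the previous step's output.
    draft = synthesize(points)
    return review(draft)

print(build_report(["Prices rose in Q3. Details follow.", "Demand fell in Q4. More text."]))
# Prices rose in Q3. Demand fell in Q4.
```

Threads suit this sketch because real model calls are I/O-bound; an async client would be an equally reasonable choice.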

3. Data Extraction and Transformation:

Converting unstructured text into a structured format typically requires an iterative process, where multiple passes can improve the accuracy and completeness of the final output.

- Prompt 1: Attempt to extract specific fields from an invoice (such as name, address, amount).

- Processing: Check whether all required fields have been extracted and conform to the specified format.

- Prompt 2 (Conditional): If any fields are missing or incorrectly formatted, construct a new prompt instructing the model to locate the missing information or correct formatting issues, providing the context from the previous failed attempt.

- Processing: Validate the results again. Repeat the process if necessary.

- Output: Deliver verified, structured data.

This sequential processing methodology is particularly suitable for extracting and analyzing data from unstructured sources like forms, invoices, or emails. For example, when running OCR on PDFs, a decomposed, multi-step approach is often more effective than a single request.
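The extract, validate, and retry loop from the list above might look like the following. It is a sketch under stated assumptions: the field names come from the invoice example, while the amount format rule, the retry limit, and the scripted `fake_llm` responses are invented for illustration.

```python
import json
import re

REQUIRED_FIELDS = ("name", "address", "amount")

def validate(fields: dict) -> list[str]:
    """Return a list of problems; an empty list means the extraction is valid."""
    problems = [f"missing field: {key}" for key in REQUIRED_FIELDS if not fields.get(key)]
    amount = fields.get("amount")
    if amount and not re.fullmatch(r"\d+(\.\d{2})?", str(amount)):
        problems.append("amount must look like 1050 or 1050.00")
    return problems

def extract_with_retries(invoice_text: str, llm, max_attempts: int = 3) -> dict:
    """Extract fields, then re-prompt with the failure context until validation passes."""
    prompt = "Extract name, address, and amount from this invoice as JSON:\n" + invoice_text
    problems: list[str] = []
    for _ in range(max_attempts):
        fields = json.loads(llm(prompt))
        problems = validate(fields)
        if not problems:
            return fields
        # Conditional prompt: describe what went wrong in the previous attempt.
        prompt = (
            "Your previous extraction had problems: " + "; ".join(problems)
            + "\nPrevious output: " + json.dumps(fields)
            + "\nFix them and return the full JSON again.\nInvoice:\n" + invoice_text
        )
    raise ValueError(f"Extraction still invalid after {max_attempts} attempts: {problems}")

# Hypothetical scripted model: the first answer is flawed, the second is correct.
scripted = iter([
    '{"name": "Acme Corp", "address": "", "amount": "1,050"}',
    '{"name": "Acme Corp", "address": "1 Main St", "amount": "1050.00"}',
])

def fake_llm(prompt: str) -> str:
    return next(scripted)

result = extract_with_retries("(invoice text goes here)", fake_llm)
print(result)
```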

First, the system calls a large language model to extract text from images. Next, the model processes these raw outputs for data normalization, for example converting text like “one thousand and fifty” into the numeric value 1050. Since precise mathematical calculation can be challenging for large language models, the system delegates required arithmetic operations to an external calculator in the following steps. The model identifies the necessary operations, passes normalized numerical values to the computational tool, and then integrates the precise results back. Through this chained workflow of text extraction, data normalization, and external tool invocation, the system achieves accuracy that is hard to obtain with a single model call.
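The normalization and calculator steps can be illustrated as follows. In a real chain the model would perform the normalization; here a small deterministic parser stands in for it so the example runs offline, and `calculate` plays the role of the external calculator. All names are hypothetical, and the parser only handles simple English number phrases.

```python
import operator
from functools import reduce

UNITS = {word: i for i, word in enumerate(
    "zero one two three four five six seven eight nine ten eleven twelve "
    "thirteen fourteen fifteen sixteen seventeen eighteen nineteen".split())}
TENS = {word: 10 * i for i, word in enumerate(
    "twenty thirty forty fifty sixty seventy eighty ninety".split(), start=2)}
SCALES = {"thousand": 1_000, "million": 1_000_000}

def words_to_number(text: str) -> int:
    """Normalize a phrase such as 'one thousand and fifty' to an integer."""
    total = current = 0
    for word in text.lower().replace("-", " ").split():
        if word == "and":
            continue
        if word in UNITS:
            current += UNITS[word]
        elif word in TENS:
            current += TENS[word]
        elif word == "hundred":
            current *= 100
        elif word in SCALES:
            total += current * SCALES[word]
            current = 0
        else:
            raise ValueError(f"Unrecognized number word: {word!r}")
    return total + current

OPERATIONS = {"add": operator.add, "subtract": operator.sub, "multiply": operator.mul}

def calculate(operation: str, values: list[int]) -> int:
    """External calculator: exact arithmetic is done in code, not by the model."""
    return reduce(OPERATIONS[operation], values)

raw_amounts = ["one thousand and fifty", "two hundred and five"]
normalized = [words_to_number(amount) for amount in raw_amounts]
total = calculate("add", normalized)
print(normalized, total)  # [1050, 205] 1255
```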

4. Content Generation Workflow:

Complex content creation is often segmented into distinct stages, including initial ideation, outline building, drafting, and revision.

- Prompt 1: Based on user interests, generate 5 topic ideas.

- Processing: Allow users to choose a topic or automatically select the best one.

- Prompt 2: Generate a detailed outline based on the chosen topic.

- Prompt 3: Draft the first section based on the first point in the outline.

- Prompt 4: With the context from the preceding section, draft the next section corresponding to the second point, and so on until all points are completed.

- Prompt 5: Review and polish the full draft to ensure coherence, tone, and grammar.

This approach applies to various natural language generation tasks, such as writing creative stories, producing technical documentation, and generating other forms of structured text.
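The section-by-section drafting in Prompts 3 and 4 amounts to a loop that carries the preceding section forward as context. A minimal sketch, with a hypothetical recording stub in place of the model:

```python
prompts_sent: list[str] = []

def fake_llm(prompt: str) -> str:
    """Hypothetical stub for a model call; records prompts and returns a placeholder."""
    prompts_sent.append(prompt)
    return f"[section {len(prompts_sent)}]"

def draft_sections(outline: list[str], llm) -> list[str]:
    """Draft one section per outline point, passing the previous section as context."""
    sections: list[str] = []
    previous = ""
    for point in outline:
        prompt = (
            f"Outline point: {point}\n"
            f"Preceding section: {previous or '(none)'}\n"
            "Draft this section so it follows naturally from the preceding one."
        )
        previous = llm(prompt)
        sections.append(previous)
    return sections

sections = draft_sections(["Introduction", "Method", "Results"], fake_llm)
print("\n".join(sections))
```

Passing only the immediately preceding section keeps each prompt short; passing a running summary of all earlier sections is an alternative when long-range consistency matters more.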

5. Stateful Conversational Agents:

While a full-fledged state management architecture requires more complex methods than sequential linking alone, prompt chaining provides the foundational mechanisms to maintain conversational continuity. The key idea is to construct each conversational turn as a new prompt, systematically incorporating information or extracted entities from earlier interactions.

- Prompt 1: Process the user's first input, identifying intent and key entities.

- Processing: Update the dialogue state with the identified intent and entities.

- Prompt 2: Based on the current state, generate a response or determine the next required information.

- In subsequent turns, this process repeats, with each new user utterance triggering a fresh processing chain that fully leverages the growing conversation history (the state).

This principle is crucial in developing conversational agents that retain contextual understanding and logical consistency over multiple turns. By preserving the dialogue history, the system can comprehend and appropriately respond to inputs that depend on prior exchanges.
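A small sketch of the turn-by-turn loop described above. `understand` and `respond` are hypothetical keyword-based stubs for Prompt 1 and Prompt 2, and the flight-booking scenario is an assumption chosen only to make the state updates concrete.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class DialogueState:
    intent: Optional[str] = None
    entities: dict = field(default_factory=dict)
    history: list = field(default_factory=list)

def understand(utterance: str) -> tuple:
    """Stub for Prompt 1: identify intent and key entities in the utterance."""
    text = utterance.lower()
    intent = "book_flight" if "flight" in text else None
    entities = {}
    for city in ("paris", "tokyo"):
        if city in text:
            entities["destination"] = city.title()
    for day in ("monday", "friday"):
        if day in text:
            entities["date"] = day.title()
    return intent, entities

def respond(state: DialogueState) -> str:
    """Stub for Prompt 2: answer from the current state or ask for what is missing."""
    if state.intent != "book_flight":
        return "How can I help?"
    missing = [slot for slot in ("destination", "date") if slot not in state.entities]
    if missing:
        return f"What {missing[0]} would you like?"
    return f"Booking a flight to {state.entities['destination']} on {state.entities['date']}."

def handle_turn(state: DialogueState, utterance: str) -> str:
    """One conversational turn: understand, update state, respond, record history."""
    intent, entities = understand(utterance)
    if intent:
        state.intent = intent
    state.entities.update(entities)
    reply = respond(state)
    state.history.append((utterance, reply))
    return reply

state = DialogueState()
print(handle_turn(state, "I need a flight to Paris"))  # What date would you like?
print(handle_turn(state, "Friday please"))  # Booking a flight to Paris on Friday.
```

The second utterance is only interpretable because the state carries the intent and destination from the first turn.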

6. Code Generation and Optimization:

Producing functional code is often a multi-phase process, requiring decomposition of the problem into a series of logical operations to be executed in order.

---


- Prompt 1: Understand the user's requirements, and generate pseudocode or an outline.

- Prompt 2: Based on the outline, write the initial version of the code.

- Prompt 3: Identify possible errors or areas for improvement in the code (using static analysis tools or making another model call).

- Prompt 4: Rewrite or optimize the code based on the issues identified.

- Prompt 5: Add documentation or test cases.

In AI-assisted software development and related applications, the value of a prompt chain lies in breaking down complex coding tasks into a series of manageable subproblems. This modular structure reduces the complexity for the model at each step. More importantly, this approach allows deterministic logic to be inserted between model calls, enabling intermediate data processing, output validation, and conditional branching within the workflow. In this way, a single complex request — which might otherwise lead to unreliable or incomplete results — is transformed into a structured sequence of operations managed by an underlying execution framework.
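As an illustration of deterministic logic between model calls, the sketch below parses generated code with Python's `ast` module and only issues a repair prompt when parsing fails. This checks syntax only, which is a much weaker check than full static analysis; the scripted `fake_llm` and the repair limit are assumptions.

```python
import ast
from typing import Optional

def check_syntax(code: str) -> Optional[str]:
    """Deterministic check between model calls: return an error message or None."""
    try:
        ast.parse(code)
    except SyntaxError as exc:
        return f"line {exc.lineno}: {exc.msg}"
    return None

def generate_code(requirement: str, llm, max_repairs: int = 2) -> str:
    """Generate code, then branch: accept it if it parses, otherwise ask for a fix."""
    code = llm(f"Write a Python function for: {requirement}")
    for _ in range(max_repairs):
        error = check_syntax(code)
        if error is None:
            return code
        code = llm(f"This code fails to parse ({error}). Return a corrected version:\n{code}")
    if check_syntax(code) is not None:
        raise ValueError("Generated code still does not parse")
    return code

# Hypothetical scripted model: the first draft is missing a colon.
scripted = iter([
    "def add(a, b)\n    return a + b",
    "def add(a, b):\n    return a + b",
])

def fake_llm(prompt: str) -> str:
    return next(scripted)

final_code = generate_code("add two numbers", fake_llm)
print(final_code)
```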

7. Multimodal and Multi-step Reasoning:

When analyzing data that spans multiple modalities (such as images, text, and tables), decompose the problem into smaller, prompt-based tasks. This is necessary, for example, when interpreting a complex image that combines visual content and embedded text with labels highlighting specific text segments and a table explaining each label.

- Prompt 1: Extract and understand the text content in the image supplied with the user’s request.

- Prompt 2: Associate the extracted image text with its corresponding labels.

- Prompt 3: Use the table to interpret the collected information and determine the final output.

Practical Example

There are many ways to implement a prompt chain — from sequential function calls in scripts to specialized frameworks that manage control flow, state, and component integration. Frameworks such as LangChain, LangGraph, Crew AI, and Google’s Agent Development Kit (ADK) provide a structured environment for building and executing multi-step processes, which is particularly beneficial for complex system architectures.

For demonstration purposes, LangChain and LangGraph are excellent choices because their core APIs are designed specifically for composing operation chains (Chains) and graphs (Graphs). LangChain offers basic abstractions for linear sequences, while LangGraph extends this to support stateful and looped computations, which are crucial for implementing more complex agent behaviors. This example focuses on a basic linear sequence.

The following code implements a two-step prompt chain functioning like a data processing pipeline:

Step one parses unstructured text and extracts specific information;

Step two takes the output from step one and converts it into a structured data format.

To run this example, you first need to install the required libraries:

    pip install langchain langchain-community langchain-openai langgraph

Note: `langchain-openai` can be replaced with libraries from other model providers. You must also configure the API key for your chosen language model (e.g., OpenAI, Google Gemini, or Anthropic) in your runtime environment.

Colab Code maintained here.

---


    import os

    from langchain_openai import ChatOpenAI
    from langchain_core.prompts import ChatPromptTemplate
    from langchain_core.output_parsers import StrOutputParser

    # For better security, load environment variables from a .env file
    # from dotenv import load_dotenv
    # load_dotenv()
    # Make sure your OPENAI_API_KEY is set in the .env file

    # Initialize the language model (using ChatOpenAI is recommended)
    llm = ChatOpenAI(temperature=0)

    # --- Prompt 1: Extract Information ---
    prompt_extract = ChatPromptTemplate.from_template(
        "Extract the technical specifications from the following text:\n\n{text_input}"
    )

    # --- Prompt 2: Transform to JSON ---
    prompt_transform = ChatPromptTemplate.from_template(
        "Transform the following specifications into a JSON object with 'cpu', 'memory', and 'storage' as keys:\n\n{specifications}"
    )

    # --- Build the Chain using LCEL ---
    # StrOutputParser() converts the LLM's message output into a simple string.
    extraction_chain = prompt_extract | llm | StrOutputParser()

    # The full chain passes the output of the extraction chain into the 'specifications'
    # variable for the transformation prompt.
    full_chain = (
        {"specifications": extraction_chain}
        | prompt_transform
        | llm
        | StrOutputParser()
    )

    # --- Run the Chain ---
    input_text = "The new laptop model features a 3.5 GHz octa-core processor, 16GB of RAM, and a 1TB NVMe SSD."

    # Execute the chain with the input text dictionary.
    final_result = full_chain.invoke({"text_input": input_text})

    print("\n--- Final JSON Output ---")
    print(final_result)

Run Output (added by translator):

    {
      "cpu": "3.5 GHz octa-core processor",
      "memory": "16GB RAM",
      "storage": "1TB NVMe SSD"
    }

This example demonstrates how to use LangChain with `ChatOpenAI` to create a two-step prompt chain: first extracting technical specifications from raw text, then converting them into a structured JSON object. Using a chain like this enables modular, maintainable workflows for natural language processing tasks.


    --- Final JSON Output ---
    {
      "cpu": "3.5 GHz octa-core",
      "memory": "16GB",
      "storage": "1TB NVMe SSD"
    }

This Python code demonstrates how to use the LangChain library to process text. It utilizes two separate prompts: one extracts technical specifications from an input string, and the other formats these specifications into a JSON object. The `ChatOpenAI` model is employed to interact with the language model, and `StrOutputParser` ensures the output is in a directly usable string format. The LangChain Expression Language (LCEL), represented by the `|` symbol in the code, is used to elegantly “chain” these components together.

The code first constructs an `extraction_chain`, responsible for extracting specifications. Then, `full_chain` takes the output from the previous chain and feeds it into a prompt in charge of formatting the data. Finally, a sample description of a laptop is provided, and by invoking the `full_chain`, both steps are executed in sequence, resulting in the printed JSON containing the extracted and formatted specifications.


Context Engineering and Prompt Engineering

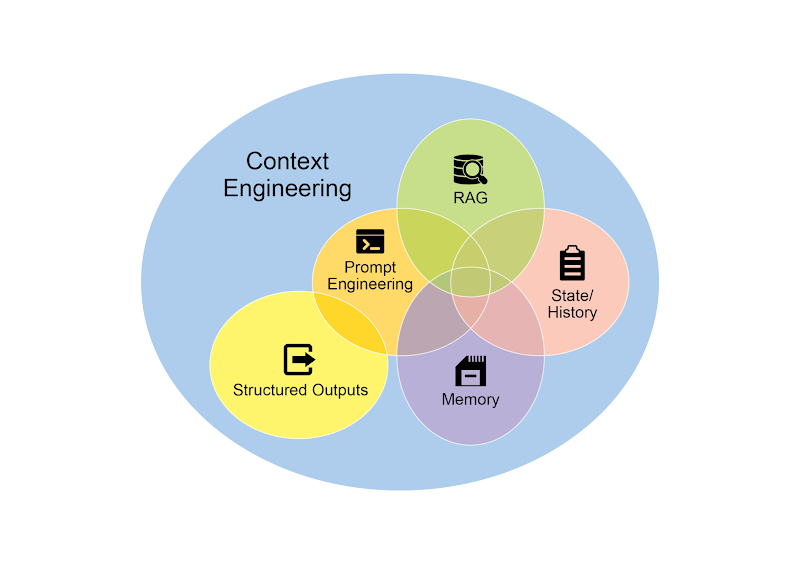

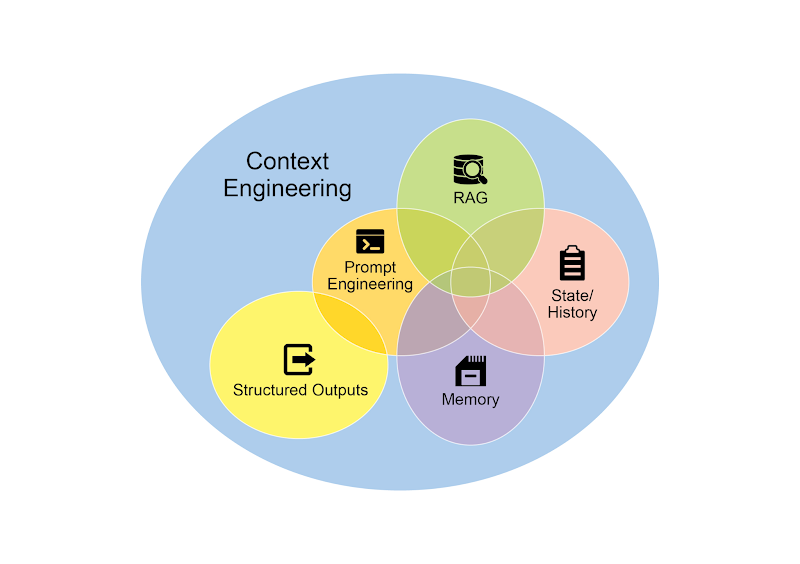

Context Engineering (see Figure 1) is a systematic discipline focused on designing, constructing, and delivering a complete information environment to an AI model before it begins generating tokens. This methodology emphasizes that the quality of the model’s output depends less on the model’s architecture than on the richness of the context provided.

Figure 1: Context Engineering is about creating a rich and comprehensive information environment for AI, as high-quality context is the primary factor for achieving advanced agent performance.

It represents a major evolution over traditional Prompt Engineering, which primarily focuses on optimizing the immediate input during user interaction. Context Engineering broadens this scope, encompassing multiple layers of information. For example, a System Prompt can serve as a set of foundational instructions to define parameters for AI operation—such as “You are a technical documentation writer. Your tone must be formal and precise.”

The context can be further enriched with external data. This includes retrieved documents, where the AI actively acquires information from a knowledge base to inform its responses—for example, obtaining the technical specifications of a project. It also involves tool outputs, where AI calls external APIs to get real-time data, such as querying a calendar to determine a user’s availability. These explicit data points are combined with crucial implicit data, such as user identity, interaction history, and environmental state.

The core principle is that even the most advanced model will perform poorly if given a limited or poorly structured operational view. Hence, the practice shifts the focus from merely answering questions to constructing a complete operational picture for the agent.

For example, an agent powered by Context Engineering won’t simply respond to a query—it will first integrate the user’s availability (tool output), their professional relationship to the email recipient (implicit data), and previous meeting notes (retrieved documents). This enables the model to produce highly relevant, personalized, and practically valuable output. The term “engineering” reflects the effort to create stable pipelines to acquire and transform such data during runtime, and to establish feedback loops that continuously improve context quality.
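The layers named above (system prompt, retrieved documents, tool outputs, implicit data) can be assembled into a single model input. The following is only a sketch: `build_context`, the section labels, and the sample values are hypothetical, and a chat-style message format is assumed.

```python
def build_context(
    system_prompt: str,
    retrieved_docs: list[str],
    tool_outputs: dict[str, str],
    implicit_data: dict[str, str],
    user_query: str,
) -> list[dict]:
    """Assemble the layers of context into chat-style messages for one model call."""
    sections = []
    if retrieved_docs:
        sections.append("Retrieved documents:\n" + "\n".join(f"- {doc}" for doc in retrieved_docs))
    if tool_outputs:
        sections.append("Tool outputs:\n" + "\n".join(
            f"- {name}: {value}" for name, value in tool_outputs.items()))
    if implicit_data:
        sections.append("Known about the user:\n" + "\n".join(
            f"- {key}: {value}" for key, value in implicit_data.items()))
    sections.append("Request:\n" + user_query)
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": "\n\n".join(sections)},
    ]

messages = build_context(
    system_prompt="You are a technical documentation writer. Your tone must be formal and precise.",
    retrieved_docs=["Notes from the previous meeting (placeholder text)"],
    tool_outputs={"calendar": "User is free Tuesday 10:00-11:00"},
    implicit_data={"relationship_to_recipient": "direct manager"},
    user_query="Draft an email proposing a follow-up meeting.",
)
print(messages[1]["content"])
```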

To achieve this at scale, specialized optimization systems can be employed to automate the refinement process. For instance, tools like Google’s Vertex AI Prompt Optimizer systematically evaluate model responses (based on a set of sample inputs and predefined metrics) to enhance performance. This method aligns prompts and system instructions with different models without requiring extensive manual rewriting. By providing the optimizer with sample prompts, system instructions, and a template, it can programmatically refine context inputs and support the feedback loops necessary for advanced Context Engineering.

This structured approach distinguishes a basic AI tool from a sophisticated, context-aware system. It treats context as a core component—placing great importance on what the agent knows, when it knows it, and how it applies that information. Fundamentally, Context Engineering ensures the model comprehensively understands user intent, historical background, and the current environment.

In essence, it transforms a stateless chatbot into a powerful, situationally aware system.

Quick Overview

The Problem: Using a single prompt to handle a complex task often overwhelms a large language model, resulting in a sharp performance drop. Excessive cognitive load increases the likelihood of errors such as ignoring instructions, losing context, or generating incorrect information. Such broad, all-in-one instructions struggle to effectively manage multiple constraints and interconnected reasoning steps, leading to outputs that are neither reliable nor accurate.

The Solution: Prompt chaining provides a standardized solution by decomposing a complex problem into a sequence of smaller, interrelated subtasks. Each step in the chain uses a highly focused prompt to perform a specific operation, which greatly improves reliability and controllability. The output of one step becomes the input for the next, creating a logically clear workflow that gradually builds toward the final solution. This modular strategy makes the entire process easier to manage and debug, and it allows external tools or structured data to be integrated between steps. The pattern forms the foundation for developing advanced agent systems capable of planning, reasoning, and executing complex workflows.
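The chain described above can be sketched without any framework. In this minimal example the three steps (summarize, extract trends as JSON, draft an email) and all names (`run_chain`, `parse_trends`, `fake_llm`) are hypothetical, and the LLM is replaced by a canned stand-in so the code runs offline. The point is the hand-off: each step's output feeds the next prompt, and structured data is validated between steps.

```python
import json
from typing import Callable

LLM = Callable[[str], str]  # hypothetical interface: prompt in, completion out


def parse_trends(raw: str) -> list[str]:
    """Validate the structured hand-off between steps; raise ValueError if malformed."""
    data = json.loads(raw)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, list) or not all(isinstance(item, str) for item in data):
        raise ValueError("expected a JSON list of strings")
    return data


def run_chain(llm: LLM, report: str) -> dict:
    """Run three focused prompts in sequence, feeding each output into the next."""
    # Step 1: one narrow task, summarization only.
    summary = llm(f"Summarize the following report in one sentence:\n{report}")
    # Step 2: extract structured data from the previous step's output.
    raw_trends = llm(
        f"Extract the key trends from this summary as a JSON list of strings:\n{summary}"
    )
    trends = parse_trends(raw_trends)  # fail early instead of passing bad data on
    # Step 3: draft the final artifact from the validated structure.
    bullet_list = "\n".join(f"- {trend}" for trend in trends)
    email = llm(f"Write a short email to the team covering these trends:\n{bullet_list}")
    return {"summary": summary, "trends": trends, "email": email}


def fake_llm(prompt: str) -> str:
    """Canned stand-in for a real model call (an assumption made for this sketch)."""
    if prompt.startswith("Summarize"):
        return "Sales grew while churn fell."
    if prompt.startswith("Extract"):
        return '["sales growth", "lower churn"]'
    return "Hi team, two trends stood out this quarter."


if __name__ == "__main__":
    print(run_chain(fake_llm, "Q3 report text"))
```

Because every intermediate value is returned, a failure can be traced to the step that produced it, which is the debugging benefit the pattern promises.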

Rule of Thumb: Use this pattern when a task is too complex for a single prompt, involves multiple distinct processing steps, requires interaction with external tools between steps, or is part of an agent system that demands multi-step reasoning and state maintenance.

Visual Summary

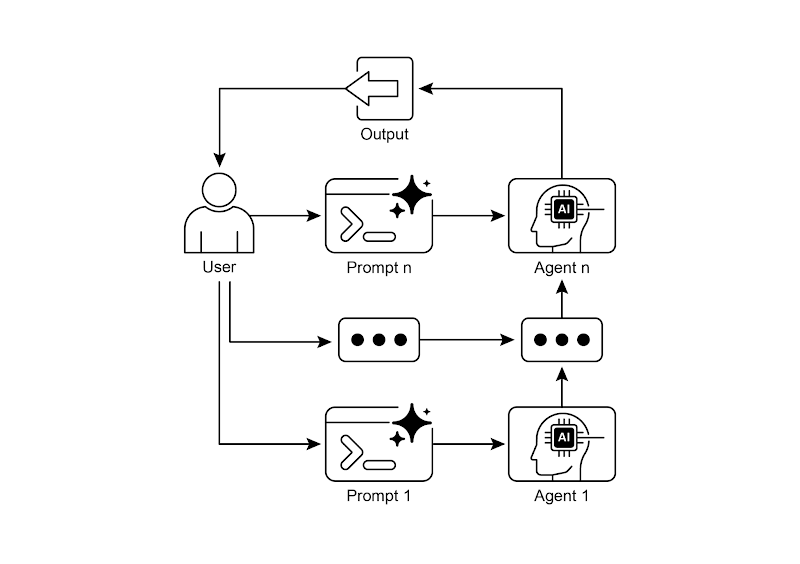

Figure 2: Prompt chaining pattern. Agents receive a sequence of prompts from the user, and the output of each agent becomes the input for the next.

Key Points

Below are the key takeaways from this chapter:

- Prompt chaining, also known as the Pipeline pattern, breaks down complex tasks into a sequence of smaller, more focused steps.

- Each step in the chain involves a large language model call or specific processing logic, using the previous step’s output as input.

- This pattern improves reliability and manageability when engaging in complex interactions with language models.

- Frameworks like LangChain/LangGraph and Google’s Agent Development Kit (ADK) provide powerful tools to define, manage, and execute these multi-step sequences.

Conclusion

By deconstructing a complex problem into simpler, more manageable subtasks, prompt chaining offers a robust framework for leveraging large language models. This “divide and conquer” strategy enables the model to focus on a single specific operation at a time, greatly boosting the reliability and controllability of the output.

As a foundational pattern, it paves the way for developing advanced AI agents capable of multi-step reasoning, tool integration, and state management. Ultimately, mastering prompt chaining is key to building powerful, context-aware systems that can execute complex workflows far beyond the limits of a single prompt.

References

- LangChain LCEL Official Documentation: https://python.langchain.com/v0.2/docs/core_modules/expression_language/

- LangGraph Official Documentation: https://langchain-ai.github.io/langgraph/

- Prompt Engineering Guide — Chaining: https://www.promptingguide.ai/techniques/chaining

- OpenAI API Official Documentation: https://platform.openai.com/docs/guides/gpt/prompting

- Crew AI Official Documentation: https://docs.crewai.com/

- Google AI Developer Center: https://cloud.google.com/discover/what-is-prompt-engineering?hl=en

- Vertex AI Prompt Optimizer: https://cloud.google.com/vertex-ai/generative-ai/docs/learn/prompts/prompt-optimizer
