# Prompt Optimization Guide for AI Systems

---

## Article Overview

- **Introduction**

- **Intent Recognition Optimization** — strategies to capture user needs accurately

- **Context Information Optimization** — delivering precise background knowledge to resolve ambiguous intent

- **Instruction Execution Optimization**

- **Model Capability Activation Optimization** — unleashing the full potential of large models

- **Prompt Structure Optimization** — building clear, efficient, and stable frameworks

---

## Data Assurance

Ensuring **data integrity**, **consistency**, and **relevance** is the foundation of AI model optimization.

- **High-quality, domain-specific datasets** → support intent recognition

- **Robust data cleaning & preprocessing** → prevent noise and ambiguity

---

In **AI-driven customer service**, improvements in intent recognition and context handling lead to higher **accuracy** and **user satisfaction**. Activating latent capabilities using structured prompts ensures **stability** and **responsiveness**.

---

## 1. Introduction

In practice, **prompt adjustments** may fail or cause "runaway" outputs.

This guide shares real-world experience from the **Qunar flight pre-sales customer service** project.

**Use case**:

The flight pre-sales service answers questions before booking: schedules, ticket rules, services.

---

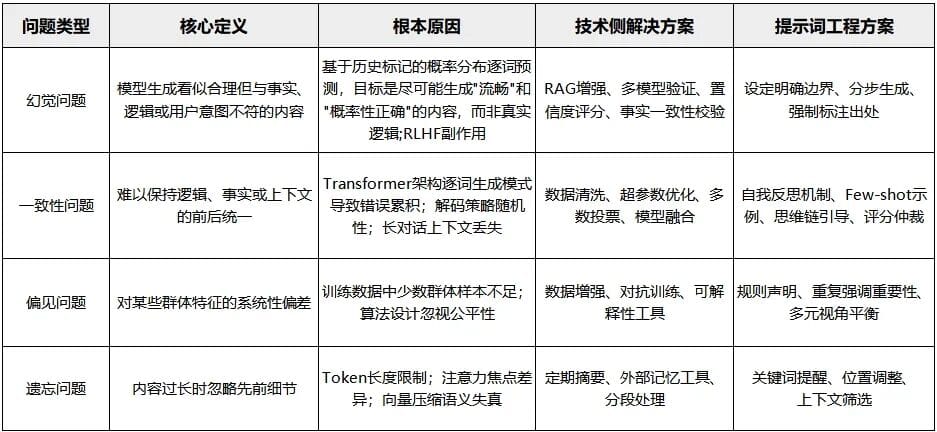

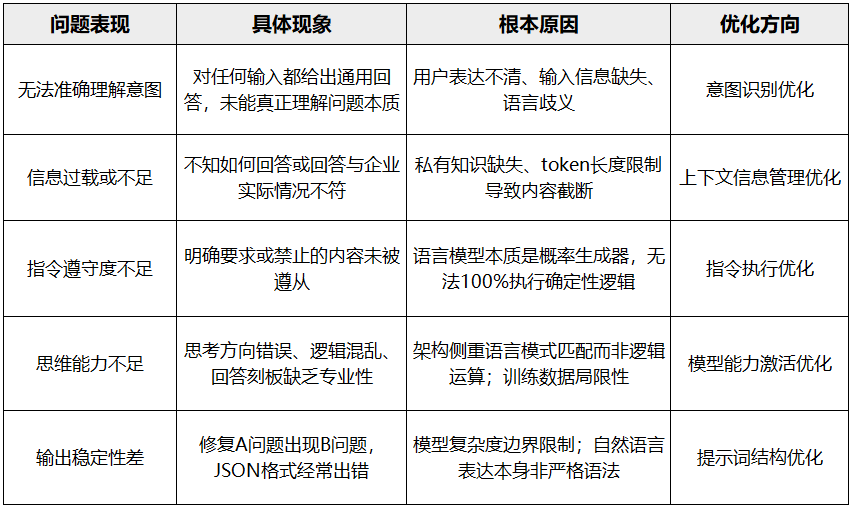

### 1.1 Common Issues & Countermeasures

#### **A. Model-level issues & strategies**

#### **B. Prompt-level issues & solutions**

---

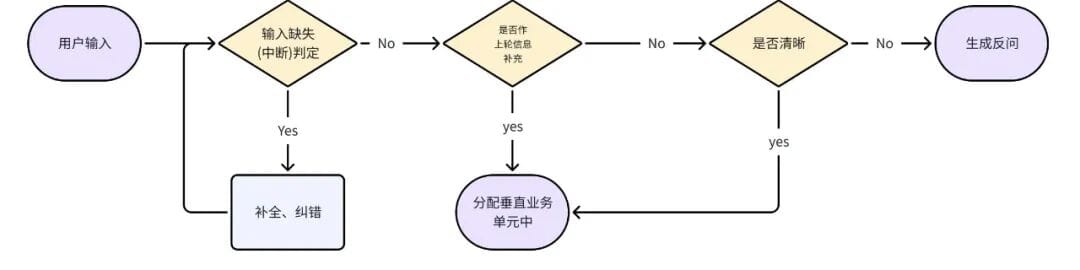

## 2. Intent Recognition Optimization

Accurately understanding the user's **request** and **motivation** is key, especially when the input is incomplete or ambiguous.

### 2.1 Missing Input Information

Common causes:

- Incomplete expression

- Typos / oversights

**Action**:

- Add auto-completion & correction logic in prompts

- Use **orthogonal examples** to cover multiple error types

**Example**:

- "Check next week’s price" → infer full intent: "Check ticket price for this flight next week."

- "New Yark" → correct to "New York"
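
The typo correction above can also be handled before the prompt is built. The following is a minimal sketch, assuming a small fixed vocabulary of city names; `KNOWN_CITIES`, `correct_city`, and the `0.8` cutoff are illustrative choices, not part of the original project.

```python
import difflib

# Hypothetical vocabulary; a real system would load city names from its own data.
KNOWN_CITIES = ["New York", "Beijing", "Shanghai", "London"]


def correct_city(raw: str, cutoff: float = 0.8) -> str:
    """Return the closest known city, or the input unchanged if nothing is close."""
    matches = difflib.get_close_matches(raw, KNOWN_CITIES, n=1, cutoff=cutoff)
    return matches[0] if matches else raw


print(correct_city("New Yark"))  # New York
```

Returning the input unchanged when no candidate clears the cutoff keeps the correction conservative, so unfamiliar but valid names are not silently rewritten.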

---

### 2.2 Ambiguity in Language

Example: "Can you give me a discount?" could mean:

- Request for a special offer

- Asking about discount rules

- Dissatisfaction with price

**Solution**:

- Add a **multi-turn clarification mechanism**

- Use **counter-questions** based on:

  - Unclear intent boundaries

  - Missing tool parameters

  - Unknown domain knowledge
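
For the "missing tool parameters" case, the counter-questions can be generated mechanically. This is a sketch under assumptions: the parameter names and question wording in `REQUIRED_PARAMS` are hypothetical and stand in for whatever a real flight-lookup tool would require.

```python
# Hypothetical required parameters for a flight-price lookup tool,
# each paired with the counter-question to ask when it is missing.
REQUIRED_PARAMS = {
    "origin": "Which city are you departing from?",
    "destination": "Which city are you flying to?",
    "date": "What date would you like to travel?",
}


def counter_questions(params: dict) -> list:
    """Return one counter-question per missing or empty parameter."""
    return [
        question
        for name, question in REQUIRED_PARAMS.items()
        if not params.get(name)
    ]


# Only the destination and date are asked for, since the origin is known.
print(counter_questions({"origin": "Beijing", "date": ""}))
```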

**Confidence Scoring Framework**:

Rate the clarity of each request on a 0–5 scale; the score guides whether the agent asks a clarifying question or proceeds.
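
One way to act on the score is a simple threshold. The sketch below assumes the model has already returned an integer clarity score; the cut-off `CLARIFY_THRESHOLD = 3` is an assumption, since the source does not state where the boundary lies.

```python
CLARIFY_THRESHOLD = 3  # assumed cut-off; tune it against real bad cases


def next_action(clarity_score: int) -> str:
    """Map a 0-5 clarity score to 'proceed' or 'clarify'."""
    if not 0 <= clarity_score <= 5:
        raise ValueError("clarity_score must be between 0 and 5")
    return "proceed" if clarity_score >= CLARIFY_THRESHOLD else "clarify"


print(next_action(1))  # clarify
print(next_action(5))  # proceed
```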

---

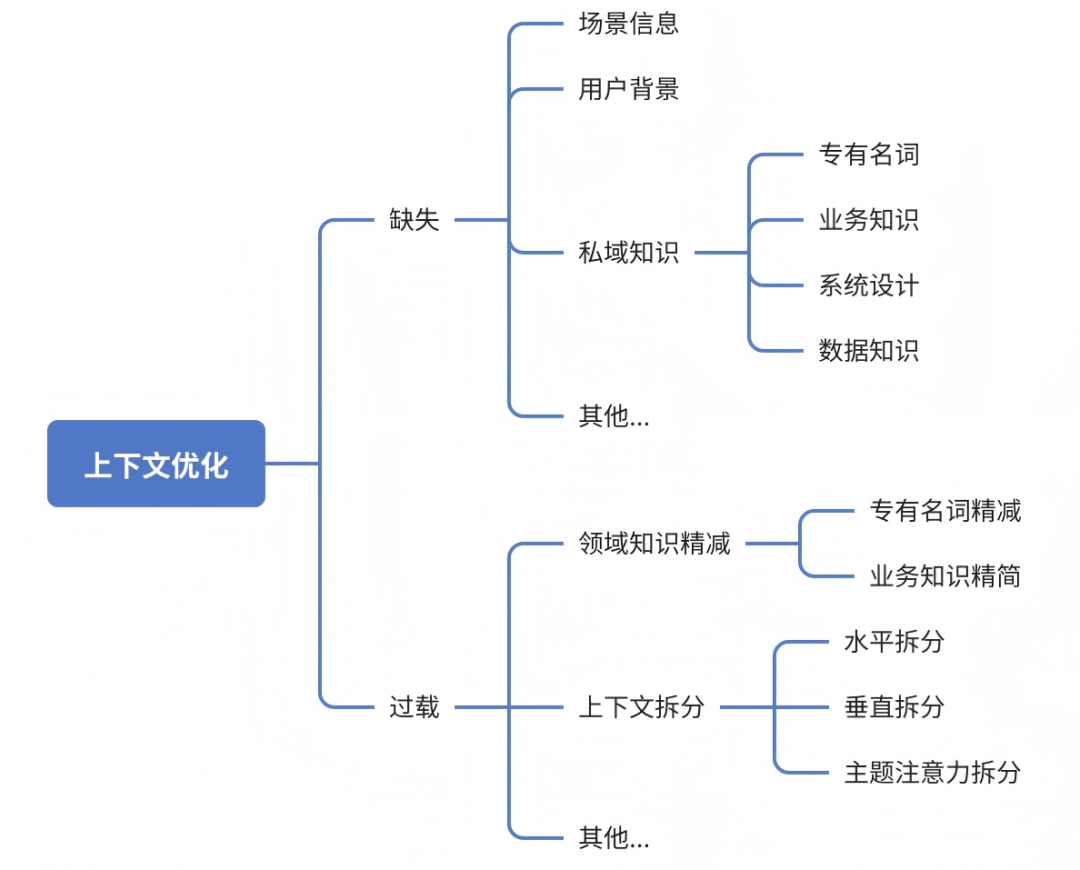

## 3. Context Information Optimization

When intent clarity is low, provide **structured contextual data**:

- User profile

- Business/product knowledge

- Agent role & process background

**Identify missing context**:

1. Test the task description on a newcomer to see which parts they cannot follow

2. Review bad cases with the model to verify which information was missing

---

### 3.1 Domain Knowledge Simplification

**Steps**:

1. Extract key terms from bad cases

2. Test comprehension — remove terms the model already understands

3. Tier remaining terms (macro → meso → micro)

4. Consider keyword matching or dynamic recall
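
Step 4 can be sketched as plain keyword matching: only glossary entries whose term appears in the user message are added to the prompt. The glossary contents and the function name `recall_terms` are hypothetical placeholders.

```python
# Hypothetical glossary of terms the model did not understand in testing.
GLOSSARY = {
    "codeshare": "A flight sold by one airline but operated by another.",
    "layover": "A stop between two flight segments on one itinerary.",
}


def recall_terms(user_message: str) -> list:
    """Return glossary lines for every term mentioned in the message."""
    text = user_message.lower()
    return [
        f"{term}: {definition}"
        for term, definition in GLOSSARY.items()
        if term in text
    ]


print(recall_terms("Is this a codeshare flight?"))
```

Substring matching is the simplest option; dynamic recall with embeddings would follow the same interface but rank entries by similarity instead.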

---

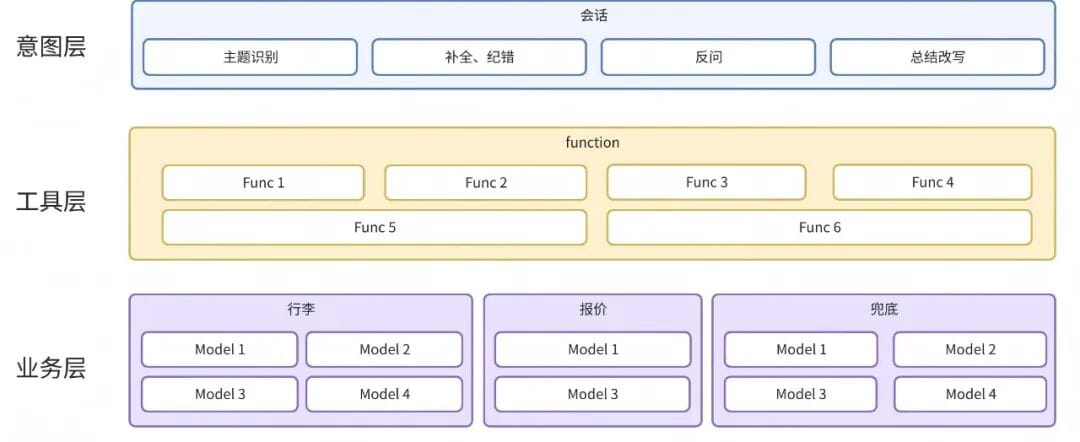

### 3.2 Context Splitting

**Types**:

- **Horizontal splitting** → by process stage

- **Vertical splitting** → separate agents by business type

- **Topic-based splitting** → guide reasoning by topic
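
Vertical splitting can be as small as a lookup from business type to a dedicated system prompt. In this sketch the three business types follow the use case described in the introduction (schedules, ticket rules, services); the prompt texts and the fallback choice are assumptions.

```python
# One short system prompt per business type (illustrative wording).
AGENT_PROMPTS = {
    "schedule": "You answer questions about flight schedules.",
    "ticket_rules": "You answer questions about ticket rules.",
    "services": "You answer questions about flight services.",
}

# Assumed fallback when the business type is not recognized.
FALLBACK_PROMPT = "Ask the user which topic they need help with."


def select_prompt(business_type: str) -> str:
    """Pick the system prompt for the agent that handles this business type."""
    return AGENT_PROMPTS.get(business_type, FALLBACK_PROMPT)


print(select_prompt("ticket_rules"))
```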

---

## 4. Instruction Execution Optimization

When a model **ignores instructions** or **breaks the required format**, identify the root cause first, then apply the matching optimization method.

### 4.1 Root Causes

- **Impossible Triangle**: Truth–Flexibility–Security

- SFT data quality issues

- CoT reasoning → decreased detail sensitivity

- Multiple constraints → lower compliance

---

### 4.2 Optimization Methods

#### 4.2.1 Logic Consistency Check

- Detect contradictions between requirements

- Ensure constraints are logical & feasible

#### 4.2.2 Complexity Simplification

- **Splitting & merging** tasks

- Reorder instructions linearly

- Use structured formats (Markdown)

#### 4.2.3 Special Rewriting

- Replace negative expressions with affirmative ones

- Mitigate false positive bias — activate critical thinking
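
To find candidates for affirmative rewriting, a prompt can be scanned for negative phrasing, for example so that "Do not use jargon" can be rewritten as "Use plain, everyday language". The sketch below is a simple lint under assumptions: the word list in `NEGATIVE_PATTERN` is illustrative and far from exhaustive.

```python
import re

# Illustrative list of negative markers; extend it for your own prompts.
NEGATIVE_PATTERN = re.compile(
    r"\b(?:do not|don['’]t|never|must not|avoid)\b", re.IGNORECASE
)


def flag_negative(instructions: list) -> list:
    """Return the instructions that contain negative phrasing."""
    return [line for line in instructions if NEGATIVE_PATTERN.search(line)]


print(flag_negative(["Do not mention prices.", "Answer in English."]))
```

The function only flags lines; the rewrite itself still needs human judgment.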

#### 4.2.4 Hallucination Reduction

Four-layer constraints: Identity + Data Source + Thinking + Output
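
The four layers can be assembled into one prompt with a small helper. This is a sketch, not the project's actual template: the function name `four_layer_prompt` and the Markdown heading layout are assumptions.

```python
def four_layer_prompt(identity: str, data_source: str, thinking: str, output: str) -> str:
    """Join the four constraint layers into one Markdown-structured prompt."""
    sections = [
        ("Identity", identity),
        ("Data Source", data_source),
        ("Thinking", thinking),
        ("Output", output),
    ]
    return "\n\n".join(f"## {title}\n{body.strip()}" for title, body in sections)


prompt = four_layer_prompt(
    identity="You are a flight pre-sales assistant.",
    data_source="Answer only from the provided ticket rules.",
    thinking="If the rules do not cover the question, say so.",
    output="Reply in two sentences or fewer.",
)
print(prompt)
```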

#### 4.2.5 Missed Instructions

- Use anchoring (numbering, formatting)

- Optimize order

- Set preconditions

- Use nearby examples
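
Numbering is the simplest form of anchoring: each instruction gets a stable index that later parts of the prompt can refer to. A minimal sketch, with `number_instructions` as a hypothetical helper name:

```python
def number_instructions(instructions: list) -> str:
    """Render instructions as a numbered list, one per line."""
    return "\n".join(
        f"{index}. {text.strip()}"
        for index, text in enumerate(instructions, start=1)
    )


print(number_instructions(["Greet the user", "Ask for the travel date"]))
# 1. Greet the user
# 2. Ask for the travel date
```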

---

## 5. Model Capability Activation Optimization

### 5.1 Proactive Activation

Treat the model as a "**collaborative employee**":

- Set clear goals & motivations

- Trigger proactive thinking via self-evaluation, peer review, bug search

### 5.2 Cognitive Activation

**Broad-domain linking**:

- Multi-field synthesis

- Forced analogies

- Concept fusion

- External thinking frameworks

**Deep-domain linking**:

- Clarify professional stance

- Use authoritative references

- Apply meta-thinking

- Continuous principle probing

---

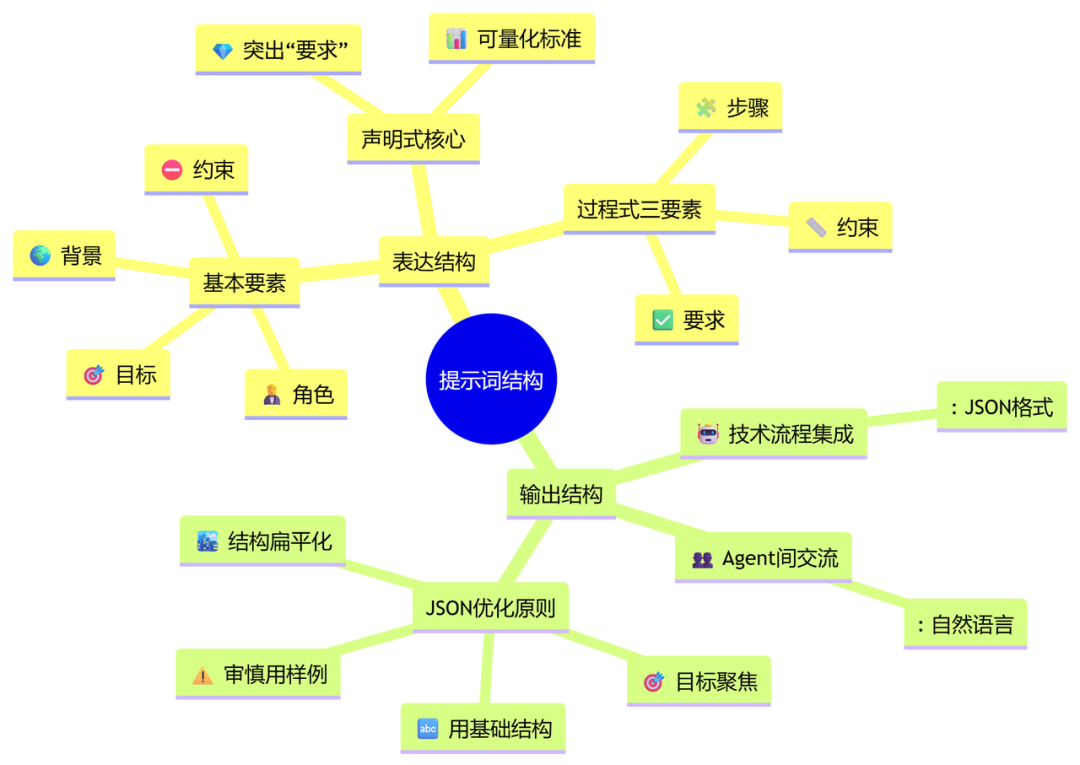

## 6. Prompt Structure Optimization

After detail-level tweaks, adjusting the overall prompt structure can yield major gains.

**Types**:

- **Declarative** → set goals without steps

- **Procedural** → specify detailed execution

**Expression Structure**:

- Role, background, objective, constraints

- Procedural elements: Steps + Constraints + Requirements

**Output Structure**:

- Match integration needs (JSON, natural language)

- Flatten structure

- Keep goals focused

- Mark examples clearly
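
When the output feeds another system as JSON, a flat structure is easy to validate. The sketch below assumes the model is asked for a single JSON object; the required keys in `REQUIRED_KEYS` are hypothetical.

```python
import json

# Hypothetical keys the downstream system expects.
REQUIRED_KEYS = {"intent", "reply"}


def parse_flat_reply(raw: str) -> dict:
    """Parse model output and reject missing keys or nested values."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = REQUIRED_KEYS - data.keys()
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")
    nested = [key for key, value in data.items() if isinstance(value, (dict, list))]
    if nested:
        raise ValueError(f"nested values not allowed: {nested}")
    return data


print(parse_flat_reply('{"intent": "price_query", "reply": "ok"}'))
```

Rejecting nested values at parse time makes format drift visible immediately instead of surfacing later in the integration.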

---

## Summary

Prompt optimization refines how you **express needs** to AI, balancing its limitations and strengths.

Combining the following five practices yields clearer, more stable outputs:

1. **Intent recognition**

2. **Context enrichment**

3. **Instruction compliance**

4. **Capability activation**

5. **Structured design**

---

**References**:

- [Open in WeChat](https://wechat2rss.bestblogs.dev/link-proxy/?k=9bc107c4&r=1&u=https%3A%2F%2Fmp.weixin.qq.com%2Fs%3F__biz%3DMzA3NDcyMTQyNQ%3D%3D%26mid%3D2649281253%26idx%3D1%26sn%3D300d335527f970f69230a8b5e93849be)