Qwen3-VL Technical Report English-Chinese Bilingual Version.pdf

Qwen3-VL: Alibaba’s Flagship Multimodal Foundation Model

Qwen3-VL is Alibaba’s most advanced multimodal model, designed for unified understanding and reasoning across:

- Text

- Images

- PDFs

- Tables

- Graphical User Interfaces (GUI)

- Videos

It features a native 256K context window, enabling:

- Stable handling of documents spanning hundreds of pages.

- Whole-textbook comprehension.

- Processing of long videos with accurate localization and citation.

This results in a full-stack multimodal engine tailored for enterprise-grade scenarios.

---

Model Configurations

- Dense models: 2B / 4B / 8B / 32B

- Mixture of Experts (MoE): 30B-A3B / 235B-A22B

Benefits: Flexible trade-offs between latency, throughput, and accuracy.

> Note: Multimodal training enhances — rather than reduces — language capabilities, outperforming pure‑text LLMs on multiple NLP benchmarks.

---

Key Technical Upgrades

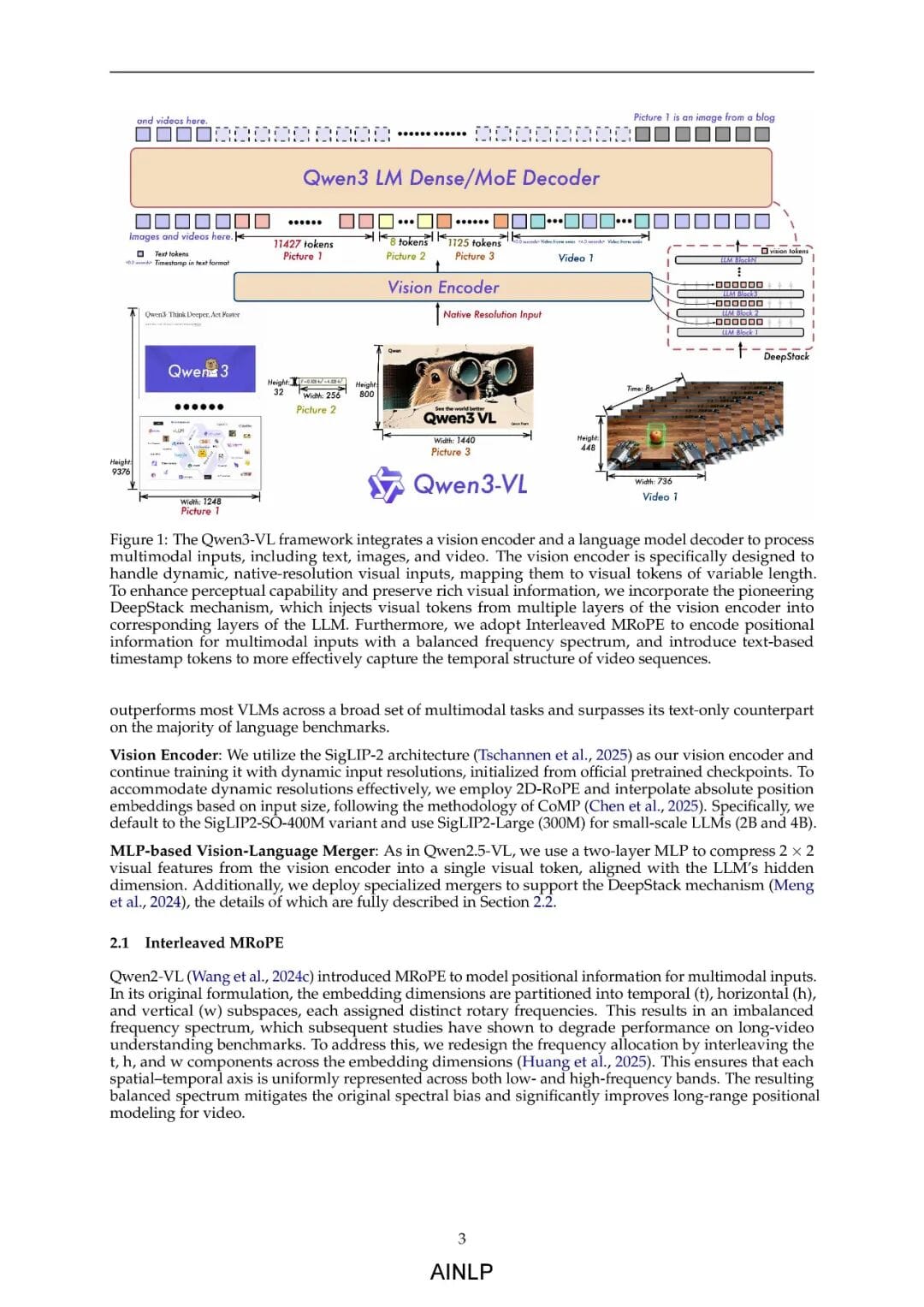

1. Interleaved-MRoPE

- Mixes temporal, horizontal, and vertical frequency signals.

- Resolves legacy MRoPE frequency bias in long video sequences.

- Enables more stable spatiotemporal modeling.

2. DeepStack Visual Cross-Layer Injection

- Extracts multi-level features from the visual encoder.

- Injects features into corresponding LLM layers.

- Fuses low-level details with high‑level semantics for improved visual reasoning accuracy.

3. Text-Based Timestamps

- Uses explicit tokens like `<3.0 seconds>` instead of complex encodings.

- Improves control and generalization for long video temporal understanding.

---

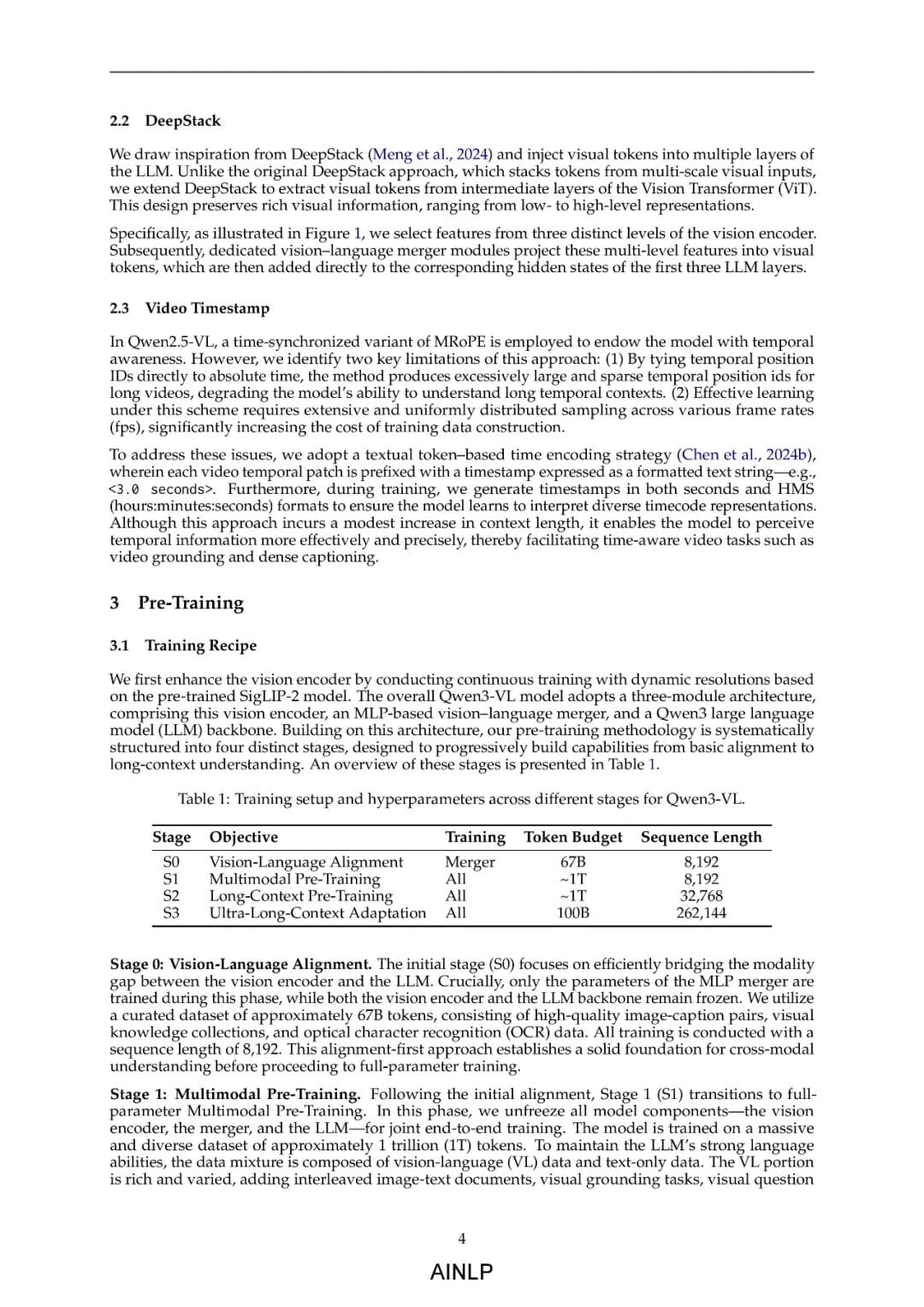

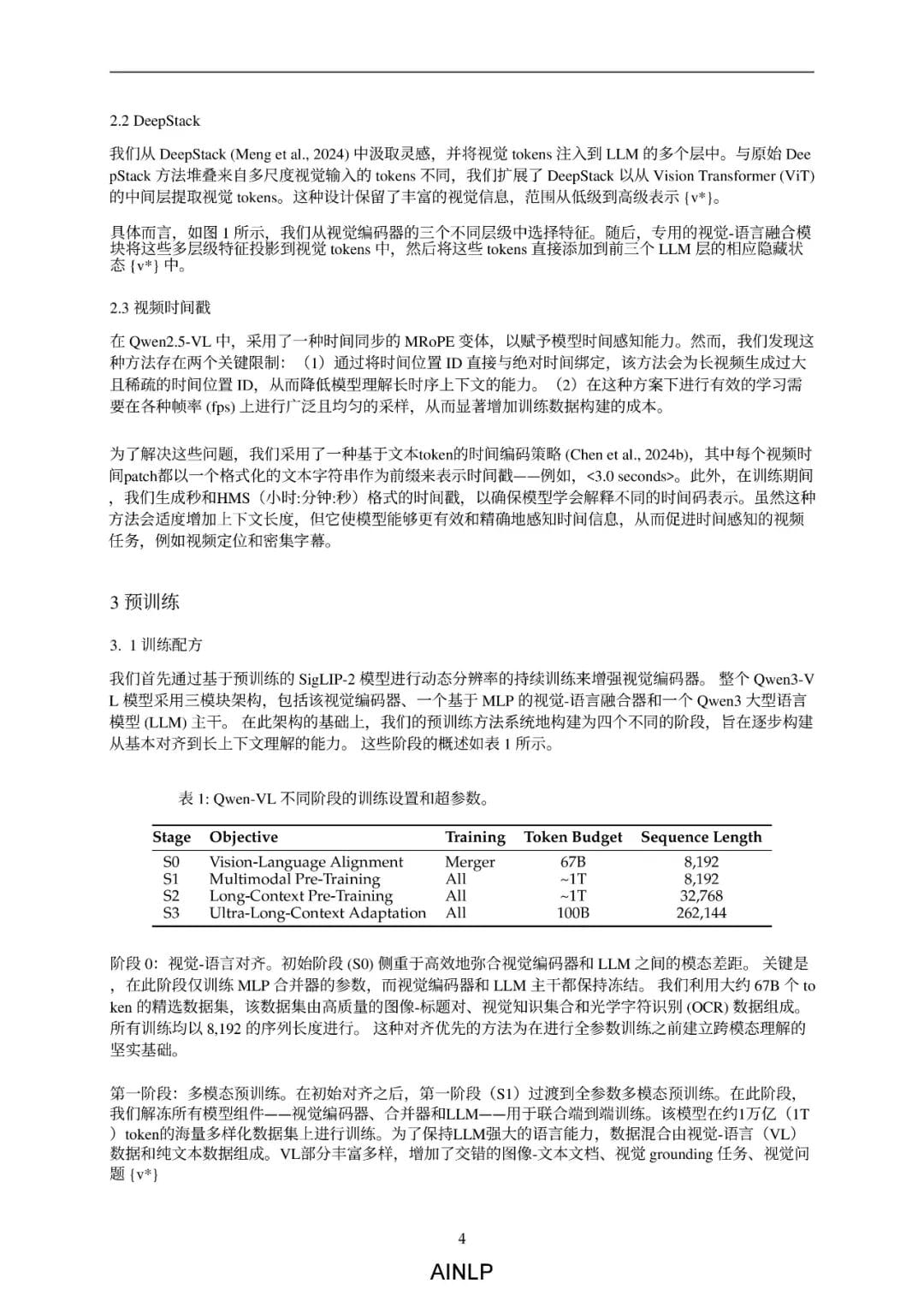

Training Methodology

- Four-stage pretraining: 8K → 32K → 256K

- Supervised Fine-Tuning (SFT)

- Strong-to-weak distillation

- Reinforcement Learning (RL) for both Reasoning and General modes

- Dual modes: Thinking / Non-Thinking to balance speed and reasoning depth

---

Multimodal Data Sources

- High-quality image-text pairs

- Web pages and textbooks

- Structured PDF parsing (HTML/Markdown)

- OCR in 39 languages

- 3D and spatial data understanding

- Action/event-level video semantics

- Cross-layer grounding datasets

- 60M+ STEM problems

- GUI and multi-tool Agent behavioral data

---

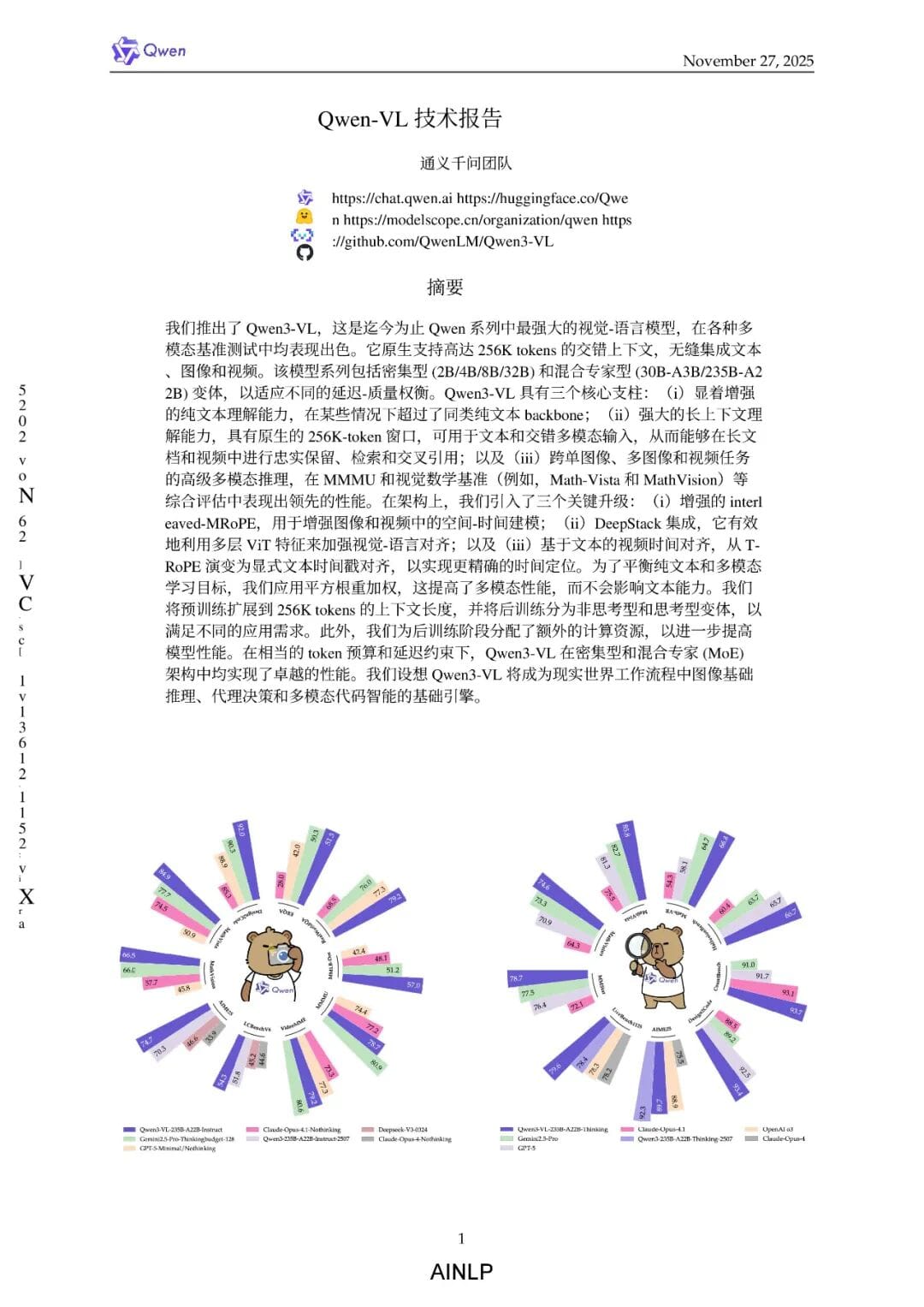

Performance Highlights

- 235B-A22B:

- Leading multimodal reasoning

- Superior long-document comprehension

- Strong video understanding, OCR, and spatial reasoning

- Comparable to or surpassing Gemini 2.5 Pro, GPT‑5, Claude Opus

- 32B: Outperforms GPT‑5-mini and Gemini Flash

- 2B / 4B / 8B: Competitive for lightweight, fast‑response tasks

---

Enterprise Applications

Qwen3-VL serves as a multimodal intelligence backbone for tasks including:

- Long PDF parsing

- Chart comprehension

- GUI automation

- Process-oriented AI agents

- Video surveillance analysis

- Technical document retrieval

- Multimodal code generation

Covers the full chain: Understanding → Reasoning → Decision-making → Automated execution

---

---

AiToEarn: AI-Powered Multi-Platform Workflow Integration

For teams and developers, AiToEarn offers an open-source ecosystem enabling:

- AI content generation

- Simultaneous publishing across global platforms:

- Douyin, Kwai, WeChat, Bilibili, Rednote (Xiaohongshu)

- Facebook, Instagram, LinkedIn, Threads

- YouTube, Pinterest, X (Twitter)

- Built‑in analytics and model rankings

Explore resources:

---

AINLP Community

AINLP is a vibrant AI/NLP community focused on:

- Large language models & pre-trained models

- Automated content generation & summarization

- Intelligent Q&A & chatbots

- Machine translation & knowledge graphs

- Recommendation systems & computational advertising

- Job opportunities & career insights

Join Us:

- Add AINLP Assistant on WeChat (ID: `ainlp2`)

- Include your work/research focus and reasons for joining

---

Useful Links

---

Turning AI Creativity into Value

For AI-powered language applications, AiToEarn官网 can help generate, publish, and monetize content seamlessly, connecting NLP professionals and AI developers with multi-platform analytics and competitive model rankings.

---

Would you like me to also create a concise one-page summary table comparing Qwen3-VL’s variants against competing models? That would make the Markdown even more business-friendly.