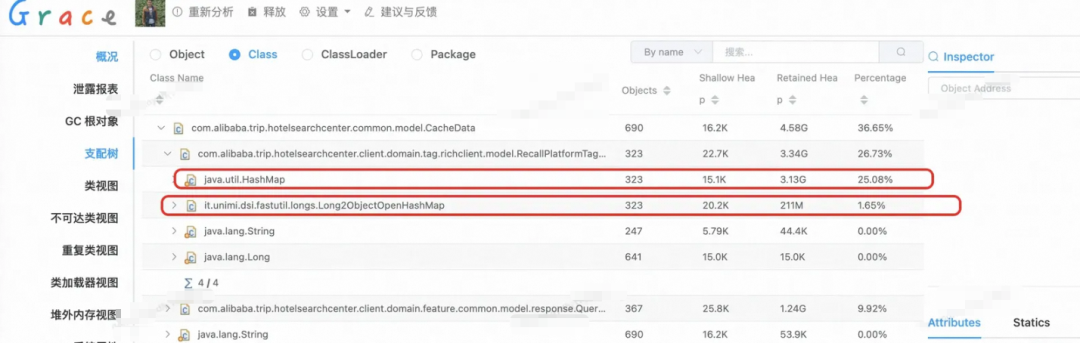

Refactoring a Class Saves 2.9G Memory in the JVM?

Data Structure Refactoring That Saved Nearly 3 GB JVM Heap Memory in Production

This article explains how we refactored a core class’s data structure from

`HashMap>` → FastUtil `Long2ObjectOpenHashMap`.

By leveraging business data characteristics—tags as small integers, sparsely distributed—we replaced `HashSet` with sorted `int[]` arrays plus binary search lookups.

Key Results

- Heap drop: ~3.2 GB → 211 MB (↓ 94% memory usage)

- Validated in production with dual-write checks before rollout

- No performance trade-off in lookups; in many cases, lookups improved

---

Optimization in Numbers

Refactoring one class dropped JVM heap by ~3 GB. This was measured in production, not a test lab:

Verification steps:

- Dual-write the old and new data structure

- Compare memory metrics in real-time

- Switch fully to new model after validation

Takeaway: General-purpose containers (`HashMap`, `HashSet`) and boxing can be surprisingly costly at scale.

---

1. Background

In our business pipeline, an interface filters product tags per city with complex logic:

- Multi-condition `AND`/`OR` nesting

- Multi-level expression trees

- Runtime-only computations for some rules

Because these filters couldn’t be expressed efficiently in our search backend, we cached full product-tag data in memory keyed by city ID for real-time filtering.

⚠️ Without this cache, each request rebuilt the structure → massive temporary memory spikes.

---

2. Legacy Structure

public class RecallPlatformTagsResp extends BasePageResponse {

private static final long serialVersionUID = 8030307250681300454L;

private Map> resultMap;

}Example:

[

{1:["2246","2288","3388","1022"]},

{2:["12246","12288"]}

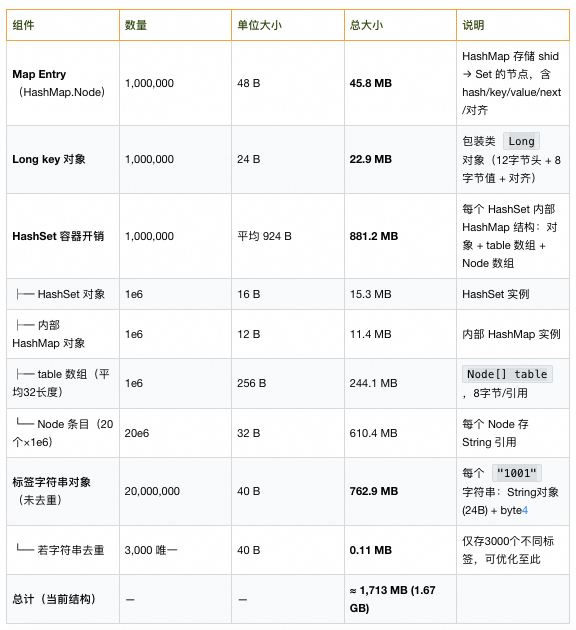

]Problem: At scale, nested `HashMap` + `HashSet` → huge overhead from:

- Object headers

- Boxing/unboxing

- Hash table arrays

- Node pointers

---

3. Tag Distribution Analysis

From 1 million sample items:

- 80% ≤ 16 tags

- 90% ≤ 32 tags

- 99% ≤ 64 tags

- Max tags < 128

Implications:

- Tags are bounded and small → arrays suffice

- Data is immutable after init → binary search works

- Tags are integers → `int[]` avoids `String` + object overhead

---

Visual Memory Impact:

---

4. New Structure Choices

Alternatives tried:

- FastUtil primitive maps (`Long2ObjectOpenHashMap`)

- Sorted `int[]` + binary search

- RoaringBitmap

- Custom compact object pool

Test metrics: memory footprint, lookup speed, init cost, code complexity

---

Best Combo:

- Key: FastUtil `Long2ObjectOpenHashMap` → no boxing, tighter layout

- Value: sorted `int[]` → tiny footprint, fast binary search

Memory Effect:

- Replace value (`HashSet` → `int[]`) in JDK `HashMap` → 1.3 GB → 64 MB

- Then replace JDK map with FastUtil → 25 MB

---

Why `int[]` over `HashSet`:

| Approach | Complexity | Memory | Notes |

|------------------------|------------|--------|-------|

| HashSet.contains() | O(1) avg | High | Hash collisions possible |

| int[] + binary search | O(log k) | Low | Cache-friendly; predictable |

With k ≤ 128:

- ≤ 7 comparisons worst-case

- 90% of lookups ≤ 5 comparisons

- Negligible latency impact; massive memory savings

---

5. Test Harness

JProfiler memory dumps compared:

- `Map>`

- `HashMap`

- FastUtil `Long2ObjectOpenHashMap`

- `BitSet`

- RoaringBitmap

import it.unimi.dsi.fastutil.longs.Long2ObjectOpenHashMap;

...(full Java test code above)

---

Result: Long2ObjectOpenHashMap is optimal

public class RecallPlatformTagsResp extends BasePageResponse {

...

private Map> resultMap; // legacy dual-write

private Long2ObjectOpenHashMap itemTags = new Long2ObjectOpenHashMap<>(1_600_000);

}---

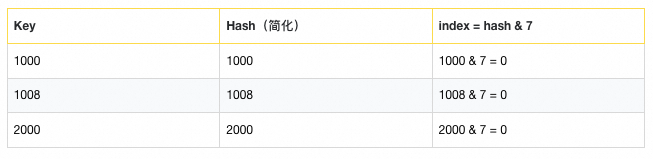

6. Why FastUtil `Long2ObjectOpenHashMap`

Advantages

- Primitive keys — no boxing (`long` direct)

- Open addressing — faster, cache-friendly

- Compact arrays — no linked lists or tree nodes

Internals:

long[] key; // primitive keys

Object[] value;

mask = capacity - 1; // power of twoHash: `(int)(key ^ (key >>> 32)) & mask`

---

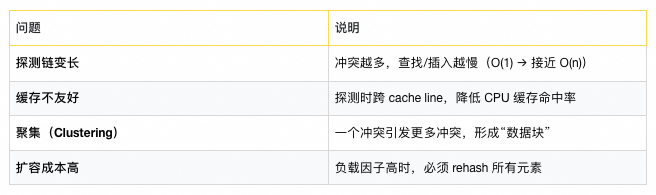

Collision Handling

Linear probing: place colliding keys in next free slot

get(): probe until match or empty slot

remove(): use Backward Shift algorithm — slide items back to fill gaps, avoid breaking probe chains

---

Performance Notes

- Load factor ≤ 0.7 recommended

- Avoid sequential keys causing clustering

- Initialize with estimated capacity

new Long2ObjectOpenHashMap<>(16384);---

Suitable Use Cases

✅ High-frequency `long → Object` lookups, GC-sensitive systems

❌ Concurrent writes without external sync; highly skewed keys

---

Final Summary

Before:

HashMap>After:

FastUtil Long2ObjectOpenHashMapOutcome:

- Memory ↓ 94%

- Speed maintained

- GC pressure reduced

---

Key Takeaways:

- Generic containers can hide massive overhead at scale

- Let data characteristics drive structure choice

- Simple primitives + targeted algorithms beat general-purpose designs for bounded, read-mostly datasets