Researchers Introduce ACE Framework for Self-Improvement in LLM Contexts

Agentic Context Engineering (ACE): A New Path for Self-Improving LLMs

Researchers from Stanford University, SambaNova Systems, and UC Berkeley have introduced Agentic Context Engineering (ACE), a framework that improves Large Language Models (LLMs) by evolving a structured context rather than retraining model weights.

The goal is simple yet powerful: enable self-improvement without continual full retraining.

---

Why ACE Matters

LLM-based systems often rely on prompt or context optimization to improve reasoning and performance. Existing methods such as GEPA and Dynamic Cheatsheet have shown notable gains, but they tend to favor brevity. That creates a risk of context collapse, where essential details are lost as the context is rewritten again and again.

ACE avoids this trap by treating contexts as evolving playbooks that grow modularly over time through:

- Generation

- Reflection

- Curation

---

The Three Core Components

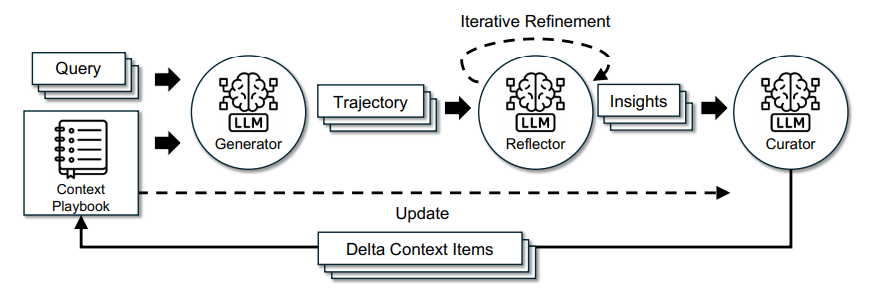

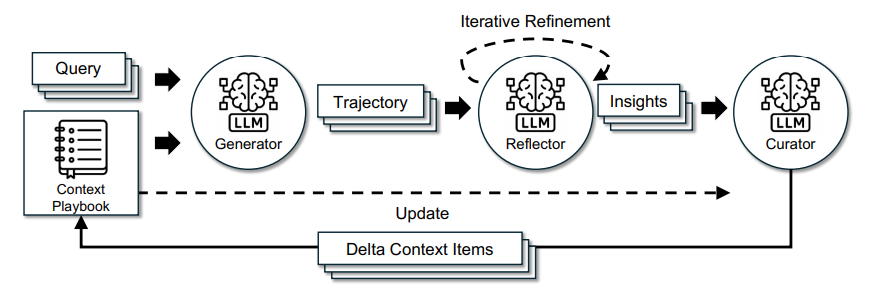

ACE’s architecture is organized into three specialized agents:

- Generator – Produces reasoning traces and outputs

- Reflector – Analyzes successes and failures to extract actionable lessons

- Curator – Integrates lessons as incremental updates to context

Source: https://www.arxiv.org/pdf/2510.04618
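
The division of labor among the three roles can be pictured as a simple loop. The following is a minimal illustrative sketch in Python, not the authors' implementation. The `Playbook` class, the `ace_step` function, and the three toy functions are hypothetical names, and the toy functions stand in for what would be LLM calls in a real system.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Playbook:
    """Evolving context: a list of short, independent lesson entries."""
    entries: List[str] = field(default_factory=list)

    def render(self) -> str:
        # Turn the entries into text that would be placed in the prompt.
        return "\n".join(f"- {entry}" for entry in self.entries)


def ace_step(
    task: str,
    playbook: Playbook,
    generate: Callable[[str, str], str],
    reflect: Callable[[str, str], List[str]],
    curate: Callable[[Playbook, List[str]], None],
) -> str:
    """One adaptation step: generate, then reflect, then curate."""
    trace = generate(task, playbook.render())  # Generator: attempt the task
    lessons = reflect(task, trace)             # Reflector: extract lessons
    curate(playbook, lessons)                  # Curator: update the context
    return trace


# Toy stand-ins (assumptions for illustration only, no model is called).
def toy_generate(task: str, context: str) -> str:
    # Succeeds only if the context already contains the needed hint.
    return "ok" if "check units" in context else "error: unit mismatch"


def toy_reflect(task: str, trace: str) -> List[str]:
    # Derive a lesson from a failed trace; nothing to learn from a success.
    return ["check units before computing"] if trace.startswith("error") else []


def toy_curate(playbook: Playbook, lessons: List[str]) -> None:
    # Append only lessons that are not already present.
    for lesson in lessons:
        if lesson not in playbook.entries:
            playbook.entries.append(lesson)


playbook = Playbook()
for attempt in range(2):
    result = ace_step("convert 5 km to miles", playbook,
                      toy_generate, toy_reflect, toy_curate)
    print(attempt, result)
# prints "0 error: unit mismatch" then "1 ok"
```

The first attempt fails, the lesson is recorded, and the second attempt succeeds using the updated context. No weights change at any point, which is the behavior the framework is aiming for.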

---

Key Innovations

- Delta Updates: Instead of rewriting entire prompts, ACE applies targeted context edits, preserving useful knowledge while embedding new insights.

- Grow-and-Refine Mechanism: Controls context size and redundancy by merging or pruning entries using semantic similarity.

- Natural Feedback Utilization: Improvements are driven by task outcomes, code execution results, and other natural signals — no fine-tuning or labeled data required.
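
To make the first two ideas concrete, here is a small hedged sketch of delta updates followed by a refine pass. It assumes the context is a dictionary of keyed entries and uses word-overlap (Jaccard) similarity as a simple stand-in for the semantic similarity the article describes. The `Delta` type, the function names, and the 0.8 threshold are illustrative assumptions rather than details from the paper.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Delta:
    """A targeted edit to one context entry (hypothetical structure)."""
    op: str    # "add" or "update"
    key: str   # identifier of the entry being touched
    text: str  # new content for that entry


def apply_deltas(context: Dict[str, str], deltas: List[Delta]) -> Dict[str, str]:
    """Apply edits entry by entry; untouched entries are carried over as-is."""
    updated = dict(context)  # copy so the original context is not mutated
    for delta in deltas:
        if delta.op not in ("add", "update"):
            raise ValueError(f"unknown op: {delta.op}")
        updated[delta.key] = delta.text
    return updated


def jaccard(a: str, b: str) -> float:
    """Word-overlap similarity in [0, 1]; a stand-in for embedding similarity."""
    tokens_a, tokens_b = set(a.lower().split()), set(b.lower().split())
    if not tokens_a and not tokens_b:
        return 1.0
    return len(tokens_a & tokens_b) / len(tokens_a | tokens_b)


def refine(context: Dict[str, str], threshold: float = 0.8) -> Dict[str, str]:
    """Drop entries that are near-duplicates of an earlier kept entry."""
    kept: Dict[str, str] = {}
    for key, text in context.items():
        if any(jaccard(text, other) >= threshold for other in kept.values()):
            continue  # redundant with something already kept
        kept[key] = text
    return kept


context = {"b1": "Validate API tokens before each call"}
deltas = [
    Delta("add", "b2", "Retry failed requests with backoff"),
    Delta("add", "b3", "validate api tokens before each call"),  # duplicate of b1
]
grown = apply_deltas(context, deltas)
refined = refine(grown)
print(list(refined))  # prints ['b1', 'b2']
```

The existing entry `b1` is never rewritten, which is the point of a delta update, and the duplicate `b3` is pruned during refinement so the context does not grow without bound.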

---

Performance Highlights

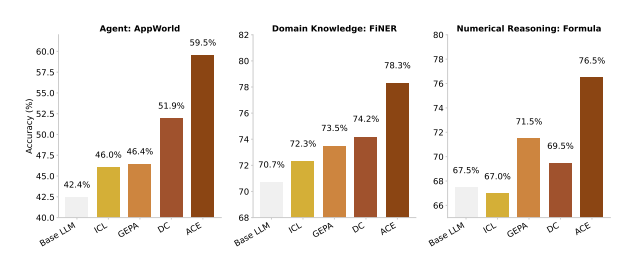

In evaluations, ACE achieved significant gains:

- AppWorld Benchmark (AppWorld.dev): 59.5% average accuracy — 10.6 points higher than prior methods.

- Financial Reasoning (FiNER and Formula datasets): 8.6% average improvement with ground-truth feedback.

Source: https://www.arxiv.org/pdf/2510.04618

---

Efficiency Wins

- Adaptation latency reduced by up to 86.9%

- Computational rollouts cut by over 75% compared to baselines like GEPA

---

Applications & Ecosystem Integration

ACE’s continuous context evolution and interpretability make it highly relevant for domains requiring transparency and selective unlearning (e.g., finance, healthcare).

It also aligns with multi-platform content workflows. For example:

- AiToEarn (official website): an open-source global AI content monetization platform

- Features include AI content generation, cross-platform publishing, advanced analytics, and performance ranking

- Distributes content to Douyin, Kwai, WeChat, Bilibili, Rednote, Facebook, Instagram, LinkedIn, Threads, YouTube, Pinterest, X (Twitter)

By combining ACE’s adaptive reasoning with AiToEarn’s distribution and monetization capabilities, content creators and developers can:

- Continuously improve AI output quality

- Expand reach across global platforms

- Maximize monetization opportunities

---

Community Feedback

Positive reception is already visible. One Reddit user commented:

> “That is certainly encouraging. This looks like a smarter way to context engineer. If you combine it with post-processing and the other ‘low-hanging fruit’ of model development, I am sure we will see far more affordable gains.”

---

Conclusion

- ACE shows that scalable LLM self-improvement is achievable through structured, evolving contexts.

- It offers a cost-effective alternative to continual retraining, maintaining efficiency and interpretability.

- Integration with cross-platform monetization tools like AiToEarn could accelerate both technical innovation and creator economy growth.

By merging adaptive AI learning with global content distribution ecosystems, creators and developers stand to gain a powerful, sustainable advantage in the evolving AI landscape.
