Rock & Roll! Alibaba Built a Real-World Training Ground for AI Agents | Open Source

Kreisy Reporting from AF Temple

---

Introduction: A Live-Fire Training Ground for Intelligent Agents

Alibaba has officially open-sourced its new project, ROCK, which targets one of the biggest challenges in AI agent training: scaling complex task environments to real-world size.

With ROCK, developers can deploy standardized environments for large-scale agent training in one click, instead of building and configuring each environment by hand.

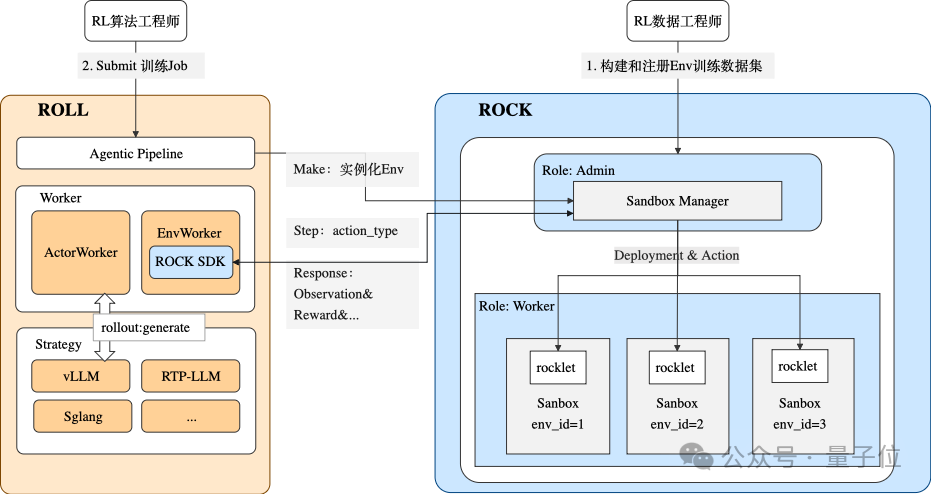

The Perfect Pair: ROLL + ROCK

ROCK is designed to work hand in hand with ROLL, the reinforcement learning (RL) training framework Alibaba released earlier:

- ROLL → Teaches AI how to think (training algorithms)

- ROCK → Gives AI a practice arena (environment sandbox)

Together, they create a complete agent training loop — enabling research teams to move from small, single-machine experiments to massive, multi-node cluster training.

---

Environment Services — The Overlooked Link in Agent Evolution

State-of-the-art LLMs are shifting toward Agentic AI — models that interact deeply with the outside world.

Why Models Need More Than Just Talk

Modern agents not only converse, but also:

- Call tools

- Execute code

- Invoke APIs

- Perform real-world actions

Commercially, this means automation pipelines can carry out real actions directly instead of only producing recommendations.

The Four Puzzle Pieces of Agent Training

Training capable agents takes more than a strong LLM “brain”: the agent must learn to sequence, plan, and execute actions in realistic environments. Four pieces are involved:

- The Brain — LLM

- The Test Paper — Task descriptions

- The Coach — RL framework

- The Training Ground — Environment service (often forgotten)

Why Environment Stability Matters

For agentic models:

- The stability and efficiency of the environment service directly determine how far training can scale.

- Training requires:

  - Massive concurrency: thousands to tens of thousands of parallel runs

  - Low-latency feedback: millisecond-level responses

  - Robust state control: reset, rollback, recovery

  - Flexibility: handling tasks of varying complexity

Without this infrastructure, the environment becomes the bottleneck of the entire training pipeline.
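Of these requirements, state control is the one most easily shown in code. The sketch below is a hypothetical toy environment (the `CounterEnv` class and its `snapshot`/`rollback` methods are illustrative names, not part of ROCK) whose state can be saved and restored, so an exploratory branch can be undone without restarting the environment from scratch.

```python
import copy
from dataclasses import dataclass, field


@dataclass
class CounterEnv:
    """Hypothetical toy environment whose entire state is a dict."""
    state: dict = field(default_factory=lambda: {"position": 0, "steps": 0})

    def reset(self) -> dict:
        # Return to a clean initial state.
        self.state = {"position": 0, "steps": 0}
        return dict(self.state)

    def step(self, delta: int) -> dict:
        # Apply an action and count the step.
        self.state["position"] += delta
        self.state["steps"] += 1
        return dict(self.state)

    def snapshot(self) -> dict:
        # Deep copy so later steps cannot mutate the saved state.
        return copy.deepcopy(self.state)

    def rollback(self, saved: dict) -> None:
        # Restore a previously saved state.
        self.state = copy.deepcopy(saved)


env = CounterEnv()
env.reset()
env.step(2)
checkpoint = env.snapshot()
env.step(5)              # exploratory step we want to undo
env.rollback(checkpoint)
print(env.state)         # {'position': 2, 'steps': 1}
```

In a real sandbox the state also includes files and running processes, so rollback would rely on container or filesystem snapshots rather than a `deepcopy` of a Python object.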

---

ROLL — High-Powered Agentic RL Engine

ROLL is built on Ray for large-scale LLM reinforcement learning — scaling from research prototypes to 100B-parameter, multi-thousand-GPU deployments.

Key ROLL Features

- Multi-domain training: math, code, reasoning

- Native Agentic RL support: games, multi-turn dialogue, tool calls, code agents

- Deep integration: Megatron-Core, DeepSpeed, 5D parallelism

- Sample-level generation, asynchronous inference/training
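Asynchronous inference and training boil down to a producer/consumer pattern: rollout workers push finished trajectories into a queue as soon as they complete, and the trainer consumes fixed-size batches without waiting for the slowest worker. A minimal `asyncio` sketch under that assumption; `rollout_worker` and `trainer` are hypothetical names and do not reflect ROLL's actual API.

```python
import asyncio
import random


async def rollout_worker(worker_id: int, n_episodes: int, queue: asyncio.Queue) -> None:
    """Hypothetical worker: produces trajectories at its own pace."""
    for episode in range(n_episodes):
        # Simulate variable environment latency.
        await asyncio.sleep(random.uniform(0, 0.01))
        await queue.put({"worker": worker_id, "episode": episode, "reward": random.random()})


async def trainer(queue: asyncio.Queue, total: int, batch_size: int) -> list[int]:
    """Consume trajectories in batches; returns the size of each batch trained on."""
    batch_sizes: list[int] = []
    batch: list[dict] = []
    for _ in range(total):
        batch.append(await queue.get())
        if len(batch) == batch_size:
            # A real trainer would run a gradient update on this batch.
            batch_sizes.append(len(batch))
            batch = []
    if batch:
        batch_sizes.append(len(batch))
    return batch_sizes


async def main() -> list[int]:
    queue: asyncio.Queue = asyncio.Queue()
    n_workers, n_episodes = 4, 5
    workers = [rollout_worker(i, n_episodes, queue) for i in range(n_workers)]
    # Producers and the consumer run concurrently on one event loop.
    results = await asyncio.gather(*workers, trainer(queue, n_workers * n_episodes, batch_size=8))
    return results[-1]


batches = asyncio.run(main())
print(batches)  # [8, 8, 4]
```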

ROLL interacts with environments asynchronously, uses redundant sampling, and exposes a clean standard interface, GEM:

```python
env.reset()         # start a new episode
env.step(action)    # apply one agent action and receive feedback
```

Environment developers only need to implement `reset` and `step` to plug in anything from simple games to complex tool APIs.
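To make the two-method contract concrete, here is a self-contained toy environment in the same shape. It assumes Gymnasium-style return values (`reset` returns an observation and an info dict; `step` returns observation, reward, terminated, truncated, info); treat that as an assumption and check the GEM documentation for the exact signatures. `GuessNumberEnv` is hypothetical and not part of GEM or ROLL.

```python
import random
from typing import Any, Optional


class GuessNumberEnv:
    """Hypothetical text environment: the agent guesses a hidden integer."""

    def __init__(self, low: int = 1, high: int = 10, max_turns: int = 5, seed: Optional[int] = None):
        self.low, self.high, self.max_turns = low, high, max_turns
        self.rng = random.Random(seed)
        self.target = low
        self.turns = 0

    def reset(self) -> tuple[str, dict[str, Any]]:
        # Start a new episode with a fresh hidden number.
        self.target = self.rng.randint(self.low, self.high)
        self.turns = 0
        return f"Guess a number between {self.low} and {self.high}.", {}

    def step(self, action: str) -> tuple[str, float, bool, bool, dict[str, Any]]:
        self.turns += 1
        truncated = self.turns >= self.max_turns
        try:
            guess = int(action)
        except ValueError:
            # Malformed action: small penalty, episode continues.
            return "Please answer with an integer.", -0.1, False, truncated, {}
        if guess == self.target:
            return "Correct!", 1.0, True, False, {}
        hint = "higher" if guess < self.target else "lower"
        return f"Try {hint}.", 0.0, False, truncated, {}


# A binary-search "agent" that drives the environment through reset/step only.
env = GuessNumberEnv(seed=0)
obs, info = env.reset()
low, high = env.low, env.high
terminated = truncated = False
while not (terminated or truncated):
    guess = (low + high) // 2
    obs, reward, terminated, truncated, info = env.step(str(guess))
    if obs == "Try higher.":
        low = guess + 1
    elif obs == "Try lower.":
        high = guess - 1
print(obs, reward)  # Correct! 1.0
```

Binary search needs at most four guesses over 1 to 10, so the episode always ends before the five-turn limit.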

The Scaling Challenge

ROLL's training can only scale as far as its environments do, and environment scalability is exactly what ROCK is built to provide.

---

ROCK — Reinforcement Open Construction Kit

Mission: Scalable Agent Training

ROCK is designed to remove the usual scale ceiling on training environments:

- Built on Ray — abstracts heterogeneous clusters into a unified resource pool.

- Elastic deployment — spin up 10K+ environments in minutes.

- Mixed-mode clusters — homogeneous and heterogeneous environments run side-by-side.
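Pooling heterogeneous machines can be illustrated with a simple placement routine. The sketch below is not ROCK's scheduler: the `Node`, `EnvRequest`, and `place` names and the first-fit-decreasing policy are assumptions chosen for illustration. It packs environments with different CPU and memory needs onto machines of different sizes from one shared pool, and reports requests that do not fit instead of overcommitting a node.

```python
from dataclasses import dataclass


@dataclass
class Node:
    """A machine in the pool with its remaining capacity (hypothetical)."""
    name: str
    cpus: int
    mem_gb: int


@dataclass
class EnvRequest:
    """Resources one sandboxed environment needs (hypothetical)."""
    env_id: str
    cpus: int
    mem_gb: int


def place(requests: list[EnvRequest], nodes: list[Node]) -> tuple[dict[str, str], list[str]]:
    """First-fit decreasing: biggest requests first, first node with room wins.

    Returns (env_id -> node name, env_ids that could not be placed).
    """
    placement: dict[str, str] = {}
    pending: list[str] = []
    for req in sorted(requests, key=lambda r: (r.cpus, r.mem_gb), reverse=True):
        for node in nodes:
            if node.cpus >= req.cpus and node.mem_gb >= req.mem_gb:
                # Reserve the resources so the node is never overcommitted.
                node.cpus -= req.cpus
                node.mem_gb -= req.mem_gb
                placement[req.env_id] = node.name
                break
        else:
            pending.append(req.env_id)
    return placement, pending


nodes = [Node("big", cpus=16, mem_gb=64), Node("small", cpus=4, mem_gb=8)]
requests = [EnvRequest(f"swe-{i}", cpus=4, mem_gb=16) for i in range(5)]
requests += [EnvRequest(f"game-{i}", cpus=1, mem_gb=2) for i in range(4)]
placement, pending = place(requests, nodes)
print(placement["game-0"])  # small
print(pending)              # ['swe-4']
```

A production scheduler would also track GPUs, spread load for fault tolerance, and grow or shrink the pool elastically; first-fit decreasing is only the simplest reasonable baseline.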

Transparent Debugging with “God Mode”

- Programmable Bash interaction via SDK/HTTP API.

- Inspect logs, files, and processes across thousands of Sandboxes as if they were local.
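Working with thousands of Sandboxes "as if local" amounts to sending one command to many sandboxes and collecting the results. ROCK's SDK calls are not described here, so the sketch below uses a hypothetical `LocalSandbox` stand-in that runs a shell command in a per-sandbox working directory via `subprocess`; a real client would send the same command through the SDK or HTTP API instead.

```python
import shutil
import subprocess
import tempfile
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from pathlib import Path

# Prefer bash; fall back to POSIX sh if bash is not installed.
SHELL = shutil.which("bash") or "sh"


@dataclass
class LocalSandbox:
    """Hypothetical stand-in for a remote sandbox: a private working directory."""
    sandbox_id: str
    workdir: Path

    def run_bash(self, command: str, timeout: float = 10.0) -> tuple[int, str]:
        # Execute the command inside this sandbox's directory and capture output.
        proc = subprocess.run(
            [SHELL, "-c", command],
            cwd=self.workdir,
            capture_output=True,
            text=True,
            timeout=timeout,
        )
        return proc.returncode, proc.stdout + proc.stderr


def run_everywhere(sandboxes: list[LocalSandbox], command: str) -> dict[str, tuple[int, str]]:
    """Send the same command to every sandbox in parallel and collect the results."""
    with ThreadPoolExecutor(max_workers=16) as pool:
        results = pool.map(lambda sb: sb.run_bash(command), sandboxes)
        return {sb.sandbox_id: res for sb, res in zip(sandboxes, results)}


with tempfile.TemporaryDirectory() as root:
    boxes = []
    for i in range(3):
        path = Path(root) / f"sb-{i}"
        path.mkdir()
        (path / "agent.log").write_text(f"reset ok\nepisode done in sandbox {i}\n")
        boxes.append(LocalSandbox(f"sb-{i}", path))
    outputs = run_everywhere(boxes, "tail -n 1 agent.log")

for sandbox_id, (code, out) in sorted(outputs.items()):
    print(sandbox_id, code, out.strip())
```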

Deployment Modes

- Local standalone — unit testing and basic sanity checks.

- Local integrated debugging — run full end-to-end training with ROLL.

- Cloud-scale deployment — move to production with zero config changes.

---

Stability Standards

ROCK is built to meet Alibaba's internal infrastructure standards:

- Fault isolation — one crash never cascades.

- Precision scheduling — no noisy neighbors, consistent performance.

- Fast recovery — restart and reset in seconds.
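Fault isolation and fast recovery can be sketched as a supervisor that steps every environment, catches a crash in any one of them, and rebuilds and resets only that environment while the others keep running. `FlakyEnv` and `Supervisor` are hypothetical illustrations, not ROCK components.

```python
from typing import Callable, Optional


class FlakyEnv:
    """Hypothetical environment that crashes when it reaches a given step."""

    def __init__(self, crash_at: Optional[int] = None):
        self.crash_at = crash_at
        self.t = 0

    def reset(self) -> int:
        self.t = 0
        return self.t

    def step(self) -> int:
        self.t += 1
        if self.t == self.crash_at:
            raise RuntimeError(f"environment crashed at step {self.t}")
        return self.t


class Supervisor:
    """Steps many environments; a crash in one only restarts that one."""

    def __init__(self, factories: dict[str, Callable[[], FlakyEnv]]):
        self.factories = factories
        self.envs = {name: make() for name, make in factories.items()}
        self.restarts = {name: 0 for name in factories}
        for env in self.envs.values():
            env.reset()

    def step_all(self) -> dict[str, Optional[int]]:
        results: dict[str, Optional[int]] = {}
        for name, env in self.envs.items():
            try:
                results[name] = env.step()
            except RuntimeError:
                # Isolate the failure: rebuild and reset only this environment.
                fresh = self.factories[name]()
                fresh.reset()
                self.envs[name] = fresh
                self.restarts[name] += 1
                results[name] = None  # no result this round for the crashed env
        return results


sup = Supervisor({"healthy": lambda: FlakyEnv(), "flaky": lambda: FlakyEnv(crash_at=2)})
history = [sup.step_all() for _ in range(3)]
print(history)
# [{'healthy': 1, 'flaky': 1}, {'healthy': 2, 'flaky': None}, {'healthy': 3, 'flaky': 1}]
print(sup.restarts)  # {'healthy': 0, 'flaky': 1}
```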

---

ModelService — Decoupling Agent Logic from Training

Previously, agent business logic had to be embedded inside the training framework, which caused code conflicts and a heavy maintenance burden.

ROCK’s ModelService is an intelligent model proxy that runs inside each Sandbox and cleanly separates the two:

- Question: the agent sends a prompt.

- Intercept: ModelService intercepts the request and forwards it to ROLL.

- Answer: ROLL handles reward calculation and optimization on its side, then returns the result to the agent.
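The question, intercept, answer flow is essentially a request/response proxy. The sketch below models it with in-process queues: the agent calls what looks like an ordinary model client, the hypothetical `ModelServiceProxy` forwards each request to a trainer-side queue, and a stand-in for ROLL records the prompt for training and answers it. Real ModelService traffic crosses the network, and none of these class or method names come from ROCK.

```python
import queue
import threading
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Request:
    prompt: str
    # Each request carries its own one-slot reply channel.
    reply: "queue.Queue[str]" = field(default_factory=lambda: queue.Queue(maxsize=1))


class ModelServiceProxy:
    """Hypothetical in-sandbox proxy: looks like a model client to agent code."""

    def __init__(self, outbox: "queue.Queue[Optional[Request]]"):
        self.outbox = outbox

    def complete(self, prompt: str, timeout: float = 5.0) -> str:
        # Question + Intercept: forward the agent's prompt to the trainer side.
        req = Request(prompt)
        self.outbox.put(req)
        # Answer: block until the trainer replies.
        return req.reply.get(timeout=timeout)


def trainer_loop(inbox: "queue.Queue[Optional[Request]]", seen: list) -> None:
    """Stand-in for ROLL: records prompts for training and answers them."""
    while (req := inbox.get()) is not None:
        seen.append(req.prompt)
        req.reply.put(f"policy-v1 answer to: {req.prompt}")


def agent_logic(model: ModelServiceProxy) -> list:
    """Unmodified agent code: it only knows it is talking to 'a model'."""
    return [model.complete(f"step {i}: what next?") for i in range(2)]


channel: "queue.Queue[Optional[Request]]" = queue.Queue()
seen_prompts: list = []
server = threading.Thread(target=trainer_loop, args=(channel, seen_prompts))
server.start()
answers = agent_logic(ModelServiceProxy(channel))
channel.put(None)  # sentinel that stops the trainer loop
server.join()
print(answers)
```

The agent code never imports anything from the trainer; swapping the queue for an HTTP hop would leave `agent_logic` untouched, which is the decoupling the text describes.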

Benefits:

- Complete decoupling — independent codebases.

- Training control stays with ROLL.

- Cost efficiency — CPU Sandboxes + centralized GPU inference.

- Broad compatibility — supports custom agent logic.
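The cost argument rests on many CPU-only sandboxes sharing centralized accelerators: instead of each sandbox hosting its own model, requests from all of them are gathered and served in batches. A minimal sketch of that batching step, with a hypothetical `batch_generate` function standing in for one batched GPU inference call:

```python
from collections import deque


def batch_generate(prompts: list[str]) -> list[str]:
    """Hypothetical stand-in for one batched GPU forward pass."""
    return [p.upper() for p in prompts]


def serve(pending: deque, max_batch: int) -> tuple[dict[str, str], int]:
    """Drain (sandbox_id, prompt) requests in batches of up to max_batch.

    Returns the reply for each sandbox and the number of batched calls made.
    """
    replies: dict[str, str] = {}
    calls = 0
    while pending:
        batch = [pending.popleft() for _ in range(min(max_batch, len(pending)))]
        outputs = batch_generate([prompt for _, prompt in batch])
        calls += 1
        # Route each output back to the sandbox that asked for it.
        for (sandbox_id, _), out in zip(batch, outputs):
            replies[sandbox_id] = out
    return replies, calls


requests = deque((f"sandbox-{i}", f"ls step {i}") for i in range(10))
replies, calls = serve(requests, max_batch=4)
print(calls)                 # 3
print(replies["sandbox-7"])  # LS STEP 7
```

Ten sandboxes cost three model calls here instead of ten model replicas, which is the trade the benefit above refers to.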

---

The ROCK + ROLL Advantage

With ROCK open-sourced, the ROCK + ROLL stack offers:

- Elastic scalability: from 1 to 10K environments in minutes

- Production-ready stability: fault isolation and rapid recovery

- Seamless workflow: from development to testing to cloud deployment

- Architectural innovation: ModelService enables a modular design

---


Getting Started

Repositories:

- ROCK: https://github.com/alibaba/ROCK

- ROLL: https://github.com/alibaba/ROLL

Quick Start Guide: Train your first agent in 5 minutes

https://alibaba.github.io/ROCK/docs/Getting%20Started/rockroll/

---

Bottom Line:

ROCK and ROLL aim to turn agent training from an experiment reserved for elite teams into a standard, industrialized process accessible to every developer.

Whether for research, enterprise automation, or creative AI content generation — this infrastructure is designed to scale with you.